Thanks guys, good information

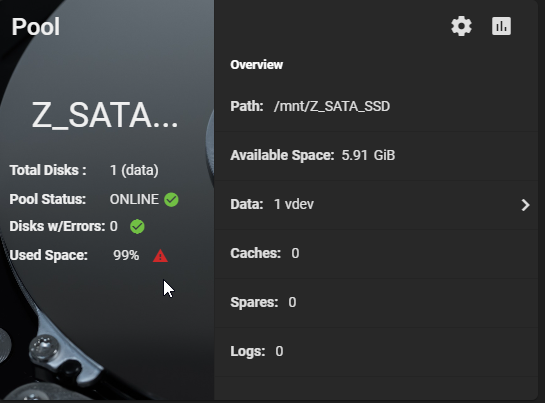

So, if I make a thin zvol and give it 80% of the pool (or maybe sneak it up to 90%…), I think I should be good?
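To put rough numbers on that, here's a quick sketch of what 80% vs 90% leaves as headroom. The 1000 GiB pool size is just a placeholder (not my actual SSD), and `zvol_size_gib` is a made-up helper name, so plug in the real usable size from the pool.

```python
def zvol_size_gib(pool_usable_gib: float, fraction: float) -> float:
    """Return the zvol size in GiB for a given fraction of usable pool space."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return pool_usable_gib * fraction


# Placeholder pool size (assumption): substitute the real usable capacity.
POOL_GIB = 1000.0

for frac in (0.80, 0.90):
    size = zvol_size_gib(POOL_GIB, frac)
    headroom = POOL_GIB - size
    print(f"{frac:.0%} of pool: zvol {size:.0f} GiB, headroom {headroom:.0f} GiB")
```

Since the zvol is thin, that size is only the ceiling the initiator sees. The headroom is what stays free on the pool even if the datastore fills completely.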

This pool will have nothing other than the iSCSI target on it, and I will not be using snapshots either. All my VMs are also already thin provisioned.

Will fragmentation only affect storage speed? And will it fix itself in the background? I'm not very likely to fill this SSD completely, but I like having the extra buffer room so that a VM going wild can't fill the datastore and impact other VMs. Perhaps I should make two zvols, one for “Home Production” VMs that can’t go down, and the other for my less important VMs.

I know a single disk with one zvol isn’t ideal, but this SSD has its own built-in redundancy and everything is backed up, so I’m not too concerned about the SSD failing. I would buy a second, but they are wildly expensive.

So far I’ve tested the performance of three different pools with iSCSI:

A single Intel DC S3700, 100% zvol shared out - Performance was great. Incoming vMotions over iSCSI (with only a 1500 MTU) maxed out at ~1000MiB/s, which is probably hitting the limit of the NIC in the ESXi box (I only gave iSCSI a single 10G port). The SSD isn’t that fast, so I assume it was using RAM a lot too. Very impressed.
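As a back-of-envelope check on that NIC-limit guess, here's a rough sketch of the best-case TCP payload rate on a 10G link. It assumes plain IPv4 + TCP with no options and ignores iSCSI PDU headers, so treat it as an upper bound rather than a measurement. The function name is made up for the example.

```python
ETH_OVERHEAD = 38    # bytes per frame on the wire: preamble/SFD 8 + header 14 + FCS 4 + inter-frame gap 12
IP_TCP_HEADERS = 40  # bytes inside the MTU: IPv4 20 + TCP 20 (assumes no TCP options)


def max_tcp_payload_mib_s(link_bps: float, mtu: int) -> float:
    """Best-case TCP payload throughput in MiB/s for a given link speed and MTU."""
    payload = mtu - IP_TCP_HEADERS  # useful bytes per frame
    wire = mtu + ETH_OVERHEAD       # bytes the frame occupies on the wire
    return link_bps / 8 * payload / wire / 2**20


for mtu in (1500, 9000):
    rate = max_tcp_payload_mib_s(10e9, mtu)
    print(f"MTU {mtu}: ~{rate:.0f} MiB/s best case")
```

By this estimate the ceiling at 1500 MTU lands a bit above 1100 MiB/s, so ~1000MiB/s is in the right neighbourhood for a single 10G port, and jumbo frames would only buy a few percent on paper.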

2 x 8TB 7.2K RPM SAS disks in a mirror - Performance was, as expected, garbage. Incoming vMotions would start out kinda fast, and then drop to just 55MiB/s incoming on the NIC.

Then my main data pool for important data. I built this just over a year ago hoping for good performance after coming away from a very slow Synology. It’s 6 x 4TB disks (a mix of SAS and SATA) in striped mirrors, a 1TB Samsung 970 NVMe SSD for L2ARC, and 2 x Intel DC S3700 SSDs in a mirror for metadata. This pool has exceeded my expectations. So far, every time I copy large or small files to or from my desktop PC over 10G, I pretty much hit 1GB/s in Windows Explorer, and listing directories etc. is lightning fast. Night and day compared to the Synology.

When I put some VMs on this pool, they actually performed pretty well, and the vMotion was quite fast, faster than I expected.

I agree leaving the SSD in the ESXi box might be the easiest way, but my goal here is to start downsizing that machine. It has 2 x E5-2680 V4s, and the ONLY purpose of the second CPU is to get me the extra PCI-E lanes. If I take this SSD out, I can ditch a CPU and 128GB of unused memory and save on power and, more importantly, heat. The ESXi box also has a virtual TrueNAS setup which stores my unimportant data on 12 x 8TB SAS disks in the front, and when I added those I had to start increasing fan speed to cool the CPUs. Any more fan speed and it’s a little too loud.

My end goal with this project is to either turn that chassis into a JBOD or buy a new JBOD, and attach it to the main TrueNAS box we are talking about here. Then I'll slowly move to an ESXi setup with 3 much more power-efficient servers, hence the shared storage being important.