marelooke’s mess! I’m the mess not you lolz

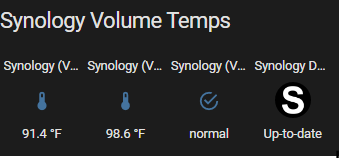

I had gotten a really nice Noctua NH-U12S for it (back when I was intending to make a NAS with this in a Fractal Node 304), but it won’t fit in this current mini-ITX case. Actually, every low-profile cooler I looked at wouldn’t work either. So I took the OEM cooler off my daily driver desktop (i7-4790), put the Noctua on that, and put the OEM cooler on this hypervisor’s i7-4790K.

I don’t see the OEM cooler being a problem. The hypervisor isn’t going to be pegged, and it ran fine on my daily driver here, which I’ve done some light gaming on.

100% this, which is why I’m shocked Proxmox only saw one of the two NICs and is glitchy AF. It will boot, run for a little while, then drop the SSH session and the web UI session on port 8006, but on the screen I have plugged into it, it will still be running and responsive to commands.

So then I did a vanilla Debian install, and its only gripe was the non-free firmware for the wifi card. But after following the guide to add the Proxmox repos and install Proxmox on top of it, I got the same issues.

Between a rock and a hard place here. If I was better at Linux I think Proxmox would be the way; there would be a way to get the NICs working right, get the Nvidia GPU ‘seen’ and able to pass through, and then even pass the wifi card through to a VM. But I’m not above intro level at Linux. I’m floored by how well xcp-ng has taken to the hardware, in that it doesn’t sh*t the bed when being interacted with, but yeah, it doesn’t see the Nvidia or the wifi card.