I’ve been puzzling over this for a while. I’ve got some clues now, but very little skill to solve this one.

I’ll try to keep it brief. I’ve got the following setup:

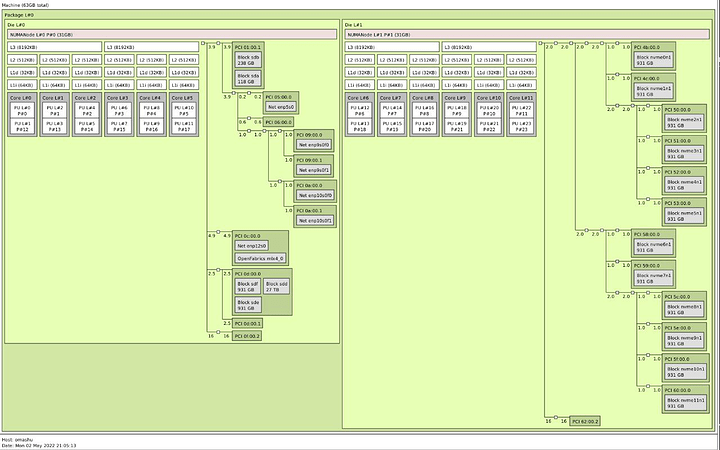

- Threadripper 1920X CPU on an ASUS Prime X399-A with 64 GB of memory populated across all channels.

- NVMe ZFS array of 12x 1 TB SSDs on a PCIe backplane using PLX switches.

- SAN block device for bulk storage.

The topology is as below:

I’m running Debian Bullseye with KVM/QEMU for VMs.

The software is set up for IOMMU and passthrough, but nothing is currently configured to be passed through to the VMs.

The BIOS and all other updates are recent.

If you want the full build details, feel free to direct message me.

This is all homelab stuff, for fun and learning.

The server is set up to host VMs, but I’ve never really been able to use it fully because hardware randomly times out or disconnects. I’ve been trying to figure this out for over two years.

When I first built the server, I was not aware of any of these issues.

Recently I discovered `numactl`, which has reduced the issues to almost zero when reading from and writing to the NVMe array. I did have to switch the memory mode from UMA to NUMA. The problem now is that anything crossing a NUMA node causes issues.
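After switching from UMA to NUMA, it is worth confirming what the kernel actually sees: how many nodes, which CPUs belong to each, and the inter-node distance. The sketch below parses the text printed by `numactl --hardware`. The `SAMPLE` string is made up for illustration (it is not output from this machine, and real CPU numbering may differ); on the host you could feed it `subprocess.run(["numactl", "--hardware"], capture_output=True, text=True).stdout` instead.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class NumaNode:
    node_id: int
    cpus: list[int] = field(default_factory=list)
    size_mb: Optional[int] = None


def parse_numactl_hardware(text: str) -> tuple[dict[int, NumaNode], dict[int, dict[int, int]]]:
    """Parse `numactl --hardware` output into per-node info and a distance matrix."""
    nodes: dict[int, NumaNode] = {}
    distances: dict[int, dict[int, int]] = {}
    columns: list[int] = []
    in_distances = False

    for line in text.splitlines():
        if line.startswith("node distances:"):
            in_distances = True
            continue
        if in_distances:
            if line.strip().startswith("node"):
                # Column header row, e.g. "node   0   1"
                columns = [int(x) for x in line.split()[1:]]
                continue
            m = re.match(r"\s*(\d+):\s*(.*)", line)
            if m:
                row = [int(x) for x in m.group(2).split()]
                distances[int(m.group(1))] = dict(zip(columns, row))
            continue
        m = re.match(r"node (\d+) (cpus|size):\s*(.*)", line)
        if not m:
            continue  # skips "available:", "node N free:", etc.
        node_id = int(m.group(1))
        node = nodes.setdefault(node_id, NumaNode(node_id))
        if m.group(2) == "cpus":
            node.cpus = [int(c) for c in m.group(3).split()]
        else:
            node.size_mb = int(m.group(3).split()[0])  # "32000 MB" -> 32000
    return nodes, distances


# Made-up example output for a two-node system (not captured from real hardware).
SAMPLE = """\
available: 2 nodes (0-1)
node 0 cpus: 0 1 2 3 4 5 12 13 14 15 16 17
node 0 size: 32000 MB
node 0 free: 30000 MB
node 1 cpus: 6 7 8 9 10 11 18 19 20 21 22 23
node 1 size: 32000 MB
node 1 free: 31000 MB
node distances:
node   0   1
  0:  10  16
  1:  16  10
"""

if __name__ == "__main__":
    nodes, dist = parse_numactl_hardware(SAMPLE)
    for n in nodes.values():
        print(f"node {n.node_id}: {len(n.cpus)} CPUs, {n.size_mb} MB")
    print("distance 0 -> 1:", dist[0][1])
```

If the parser reports only one node, the UMA/NUMA switch did not take effect and the pinning below would have nothing to bind to.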

The hardware hang was easily reproducible by running the `fio` disk benchmark on the NVMe array: the random reads/writes would cause a network adapter to drop its link entirely for up to 30 seconds.
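One way to turn this reproduction into an A/B test is to run the same `fio` job twice under `numactl`: once with CPUs and memory on the same node, once with memory deliberately forced onto the other node. The sketch below only builds the command lines. The job parameters (4k blocks, queue depth 32, four jobs, a 4G file) and the target path are hypothetical placeholders, not the settings used in the original test. Running the cross-node variant may trigger the same hang, so try it with care.

```python
import shlex
from typing import List, Optional


def fio_randrw_cmd(
    target: str,
    cpu_node: Optional[int] = None,
    mem_node: Optional[int] = None,
    runtime_s: int = 60,
) -> List[str]:
    """Build a fio random read/write command, optionally wrapped in numactl.

    cpu_node -> numactl --cpunodebind (run only on that node's CPUs)
    mem_node -> numactl --membind (allocate memory only from that node)
    """
    cmd: List[str] = []
    if cpu_node is not None or mem_node is not None:
        cmd.append("numactl")
        if cpu_node is not None:
            cmd.append(f"--cpunodebind={cpu_node}")
        if mem_node is not None:
            cmd.append(f"--membind={mem_node}")
    cmd += [
        "fio",
        "--name=numa-repro",
        f"--filename={target}",
        "--size=4G",
        "--rw=randrw",
        "--bs=4k",
        "--ioengine=libaio",
        "--iodepth=32",
        "--numjobs=4",
        f"--runtime={runtime_s}",
        "--time_based",
        "--group_reporting",
    ]
    return cmd


if __name__ == "__main__":
    target = "/tank/fio-test.bin"  # hypothetical test file on the NVMe pool
    # Same-node run: CPUs and memory both on node 0.
    print(shlex.join(fio_randrw_cmd(target, cpu_node=0, mem_node=0)))
    # Cross-node run: CPUs on node 0, memory forced onto node 1.
    print(shlex.join(fio_randrw_cmd(target, cpu_node=0, mem_node=1)))
```

Passing the returned list to `subprocess.run` executes it; keeping it as a list avoids shell-quoting problems with the file path.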

Copying files from the NVMe array to a USB drive also causes the xHCI controller to disappear from the system entirely until the machine is rebooted.

Simply put, any workload whose memory accesses cross NUMA nodes causes PCIe hardware to hang.

My theory is that interrupts are failing to be serviced.

This results in:

- Network interfaces resetting due to the driver hanging

- Network packet loss of up to 60%

- Fibre Channel I/O cards dropping block storage

- USB controllers disconnecting

- The X399 chipset disconnecting entirely

- Basically anything on the PCIe bus hanging or disconnecting
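The theory that interrupts are not being serviced can be checked by sampling `/proc/interrupts` before and during a reproduction run and comparing per-CPU counts for the affected device. The sketch below parses that file and reports IRQs whose counts stopped growing, plus which CPUs serviced a given IRQ. The snapshots are made up (four CPUs for brevity, and the device names `enp3s0-rx-0` and `nvme0q1` are hypothetical). A flat count only matters while the device should be busy, since an idle NIC legitimately raises no interrupts; the CPUs an IRQ is allowed to use can be read from `/proc/irq/<N>/smp_affinity_list`.

```python
from __future__ import annotations


def parse_interrupts(text: str) -> dict[str, tuple[list[int], str]]:
    """Parse /proc/interrupts text into {irq_label: (per_cpu_counts, description)}."""
    lines = text.strip("\n").splitlines()
    ncpu = len(lines[0].split())  # header row: CPU0 CPU1 ...
    table: dict[str, tuple[list[int], str]] = {}
    for line in lines[1:]:
        if ":" not in line:
            continue
        label, rest = line.split(":", 1)
        tokens = rest.split()
        counts: list[int] = []
        for tok in tokens[:ncpu]:
            if not tok.isdigit():
                break  # some rows (e.g. ERR, MIS) carry a single total
            counts.append(int(tok))
        table[label.strip()] = (counts, " ".join(tokens[len(counts):]))
    return table


def read_interrupts(path: str = "/proc/interrupts") -> dict[str, tuple[list[int], str]]:
    """Take a live snapshot (Linux only)."""
    with open(path) as f:
        return parse_interrupts(f.read())


def stalled_irqs(before, after, name_substring: str) -> list[str]:
    """IRQs whose description contains name_substring and whose total did not grow."""
    return [
        irq
        for irq, (counts, desc) in after.items()
        if name_substring in desc and irq in before and sum(counts) <= sum(before[irq][0])
    ]


def per_cpu_delta(before, after, irq: str) -> list[int]:
    """How many times each CPU serviced `irq` between the two snapshots."""
    return [b - a for a, b in zip(before[irq][0], after[irq][0])]


# Made-up snapshots taken a few seconds apart (not real data).
SNAP_A = """\
           CPU0       CPU1       CPU2       CPU3
  24:          0       1500          0          0  PCI-MSI 1048576-edge      enp3s0-rx-0
  25:        900          0          0          0  PCI-MSI 1048577-edge      nvme0q1
 ERR:          0
"""
SNAP_B = """\
           CPU0       CPU1       CPU2       CPU3
  24:          0       1500          0          0  PCI-MSI 1048576-edge      enp3s0-rx-0
  25:       1400          0          0          0  PCI-MSI 1048577-edge      nvme0q1
 ERR:          0
"""

if __name__ == "__main__":
    a, b = parse_interrupts(SNAP_A), parse_interrupts(SNAP_B)
    print("stalled:", stalled_irqs(a, b, "enp3s0"))
    print("nvme0q1 per-CPU:", per_cpu_delta(a, b, "25"))
```

On the live system, two `read_interrupts()` calls separated by `time.sleep(...)` during a cross-node `fio` run would replace the sample strings.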

Frustratingly, if you pin CPUs and memory to a single NUMA node, everything works fine…
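If the VMs are managed through libvirt (an assumption; the post only says KVM/QEMU), the same single-node pinning can be expressed in the domain XML with `<cputune>`/`<vcpupin>` and `<numatune>`/`<memory mode="strict">`. The sketch below expands a node's sysfs `cpulist` and emits those two fragments, which could then be pasted into the domain with `virsh edit`. The 1:1 mapping of vCPUs to the node's first host CPUs is a deliberately simple choice that ignores SMT siblings; the cpulist string in the demo is illustrative.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET


def parse_cpulist(text: str) -> list[int]:
    """Expand a sysfs cpulist such as "6-11,18-23" into a list of CPU numbers."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus


def read_node_cpus(node: int) -> list[int]:
    """CPUs belonging to a NUMA node, from /sys/devices/system/node/nodeN/cpulist."""
    with open(f"/sys/devices/system/node/node{node}/cpulist") as f:
        return parse_cpulist(f.read())


def pinning_xml(node: int, host_cpus: list[int], vcpus: int) -> str:
    """Build libvirt <cputune>/<numatune> fragments that keep a guest on one node."""
    if vcpus > len(host_cpus):
        raise ValueError("more vCPUs requested than CPUs on this node")
    cputune = ET.Element("cputune")
    for v in range(vcpus):
        # Pin guest vCPU v to one host CPU from the chosen node.
        ET.SubElement(cputune, "vcpupin", vcpu=str(v), cpuset=str(host_cpus[v]))
    numatune = ET.Element("numatune")
    # strict: guest RAM may only be allocated from this node.
    ET.SubElement(numatune, "memory", mode="strict", nodeset=str(node))
    return "\n".join(ET.tostring(e, encoding="unicode") for e in (cputune, numatune))


if __name__ == "__main__":
    # Illustrative cpulist; on the host use read_node_cpus(1) instead.
    cpus = parse_cpulist("6-11,18-23")
    print(pinning_xml(node=1, host_cpus=cpus, vcpus=4))
```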

The problem is that the SSD storage hangs off one NUMA node and the bulk storage sits on the other, so running VMs that access both storage arrays causes the hardware to hang.

With all of the above in mind:

Does anyone here have any ideas for troubleshooting this issue?

Is there something obvious with Threadripper hardware that I’m missing?