SSH in, check some logs, kill the display manager, run DISPLAY=:0 xrandr, etc.

I don’t know how to “check logs”

I do have a system tar.gz from Dec 7th.

Moved my question here. Having some issues with desktop audio capture on Manjaro. Below is what I have tried so far:

If you connect a keyboard and press ctrl+alt+F1 you should go into terminal mode. If not try F2-F8.

If this does not work use SSH and check system logs by typing dmesg and journalctl.
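For anyone who has not checked logs before, here is a rough sketch of what that can look like. The function name recent_errors is made up for this post; the dmesg and journalctl flags are standard, but reading the kernel buffer or the full journal may need root on some setups.

```shell
# recent_errors [N]: print the last N (default 50) error-level lines from
# the kernel ring buffer and from the systemd journal of the current boot.
# "recent_errors" is a hypothetical helper name, not a system command.
recent_errors() {
  lines="${1:-50}"

  echo "== kernel (dmesg) =="
  if command -v dmesg >/dev/null 2>&1; then
    # Only errors and warnings; may need sudo if access is restricted.
    dmesg --level=err,warn | tail -n "$lines"
  fi

  echo "== journal, current boot =="
  if command -v journalctl >/dev/null 2>&1; then
    # -b: current boot only, -p err: priority "err" and worse.
    journalctl -b -p err --no-pager | tail -n "$lines"
  fi
}

# Other variations worth trying by hand:
#   journalctl -b -1 -p err --no-pager   # same, but for the previous boot
#   journalctl -b -u display-manager.service --no-pager   # one unit only
```

Run it as recent_errors 20 over SSH or from a text console and look for graphics driver or display manager messages near the end.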

Give up on Pulse and take the plunge to Pipewire. It is well worth the effort and is very likely to work out of box.

I know this sounds like the old “Install Linux and all your Windows-related problems will just vanish!” snake oil some people used, but in this case there really is no reason to cling to pulse. Just let go already.

Oh? I had no idea about pulse vs pipe. pulse was default install. Will investigate, thanks.

Still learning Linux, coming from a decade+ of Windows. My main tower daily driver is Linux, but I keep a separate, dedicated machine for each of Windows, Mac, and Linux and pick the right tool for the job depending on what I’m working on. For example, Ableton runs like butter on the Mac, and not at all on Linux. I haven’t tried Ableton through Wine yet.

I don’t really care about the platform anymore as long as I can do my work and the OS and software don’t get in my way.

I can’t SSH in.

Do you have a way to boot from an Ubuntu install disk using the same version? You can choose the Try Ubuntu option and at least get to a working environment from which to troubleshoot.

already done

Does it not show a screen there, either?

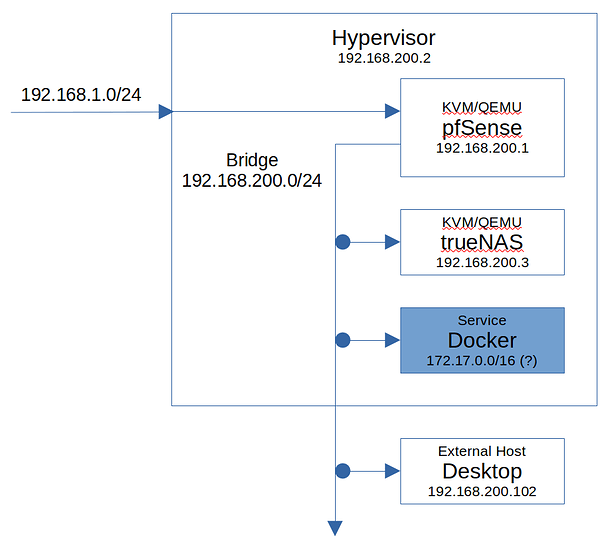

I have a small home setup depicted by the figure below:

Can anyone determine why simply starting docker.service on the hypervisor (e.g. systemctl start docker.service) disables all access to trueNAS (web portal, ssh, ping)?

VNC’ing into the trueNAS instance shows the typical incorrectly configured interfaces prompt:

The web user interface is at:

http://0.0.0.0

https://0.0.0.0

–

EDIT & SOLUTION

https://bbs.archlinux.org/viewtopic.php?id=233727

Docker messes with iptables upon bring up. Adding the following to docker.service has resolved the issue:

[Service]

ExecStartPre=/usr/sbin/iptables -A FORWARD -p all -i br0 -j ACCEPT

ExecStopPost=/usr/sbin/iptables -D FORWARD -p all -i br0 -j ACCEPT
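If you would rather not edit the packaged docker.service directly (a package update can overwrite it), the same two lines can go into a systemd drop-in. This is only a sketch: the function name and the file name forward-br0.conf are made up, while the iptables path and the br0 bridge are taken from the lines above.

```shell
# write_docker_forward_dropin [DROPIN_DIR] [BRIDGE]
# Writes a drop-in with the two iptables lines from the post above.
# The function name and the "forward-<bridge>.conf" file name are
# hypothetical; DROPIN_DIR is a parameter so it can be tried in a scratch dir.
write_docker_forward_dropin() {
  dropin_dir="${1:-/etc/systemd/system/docker.service.d}"
  bridge="${2:-br0}"

  mkdir -p "$dropin_dir" || return 1
  cat > "$dropin_dir/forward-$bridge.conf" <<EOF
[Service]
ExecStartPre=/usr/sbin/iptables -A FORWARD -p all -i $bridge -j ACCEPT
ExecStopPost=/usr/sbin/iptables -D FORWARD -p all -i $bridge -j ACCEPT
EOF
}

# Usage (as root), then make systemd pick it up:
#   write_docker_forward_dropin
#   systemctl daemon-reload && systemctl restart docker.service
```

Running systemctl edit docker.service should give the same result interactively.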

Yep, hit the live CD and have been watching YouTube off that.

I’m thinking I’ll just rsync my monthly backup from my home server after I install nautilus onto the live CD (USB).

But I’m not totally sure it won’t just break it.

I have a backup of some files and when I need to restore some I’ll do a find command and then copy paste the location and use rclone to copy it back to where I need it.

When I want to restore several files I can sometimes get away with using “*” so I’ll get a few of them in one transfer.

Is there a nice CLI way for me to do a find or multiple find commands (or similar) and then multi select which files I then want to pass into my rclone command?

It’s a small linux problem because manually copy pasting each result I need works fine.

If I’m going to solve it myself I would use something like this

find . -type f -iname '*.mp4' | fzf -m

taken from here

and then use that to create a --files-from text file that rclone would use.

Is there a better way or some tool already created for this?

you just need to pipe the output from fzf into a file, and then use that file reference in your rclone command

find . -type f -iname '*.mp4' | fzf -m >/tmp/toclone.txt

rclone copy --files-from /tmp/toclone.txt {...}

# clean up

rm /tmp/toclone.txt

Yeah, that would work. Though part of me wonders what would happen if we just did this.

No idea if it is possible, could be, might not be.

find . -type f -iname '*.mp4' | fzf -m | rclone

Not familiar with rclone, so I have no idea if it reads the file list from stdin, but if it doesn’t, you can use xargs. xargs will take the stdout of the preceding command and shove it on the command line:

find . -type f -iname '*.mp4' | fzf -m |xargs rclone {...}

not sure though, more research required

I got this working using

find /media/sda1/backup/ -iname "*roughly search here*" -print | fzf -m

I copy-pasted the output, but I’ll pipe it into my files-from.txt in future.

rclone copy --files-from-raw /media/sda1/backup/files-from.txt / "destination:restore/" --progress --copy-links

--files-from-raw was important to get it to work for whatever reason.

That would be nice. But I do like the idea of preparing my list in a text file and then running rclone on it, so that if it fails I don’t need to reselect my list, and so I can do a sanity check that I’m not copying everything.
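A rough sketch of that "list file first, rclone second" workflow, in case it helps anyone else. pick_files and the PICKER variable are made-up names for this post; PICKER only exists so the function can be run without an interactive fzf. The rclone call itself is left out because source and destination depend on your setup.

```shell
# pick_files DIR PATTERN LISTFILE
# Finds files under DIR matching PATTERN (case-insensitive), lets you
# multi-select them, and writes the selection to LISTFILE, one path per line.
# Returns non-zero if nothing was selected, so a later rclone step can be
# skipped. "pick_files" and "PICKER" are hypothetical names.
pick_files() {
  dir="$1"; pattern="$2"; listfile="$3"

  # PICKER is left unquoted on purpose so "fzf -m" splits into command + flag.
  find "$dir" -type f -iname "$pattern" | ${PICKER:-fzf -m} > "$listfile"

  if [ ! -s "$listfile" ]; then
    echo "nothing selected, $listfile is empty" >&2
    return 1
  fi

  # Sanity check: report how many paths ended up in the list.
  echo "$(wc -l < "$listfile") file(s) listed in $listfile" >&2
}

# Usage:
#   pick_files /media/sda1/backup '*.mp4' /tmp/toclone.txt
#   less /tmp/toclone.txt    # eyeball the list before handing it to rclone
```

Because the list stays on disk, a failed transfer can be retried with the same file instead of selecting everything again.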

I can’t use my usual ls “-lath --fu”, which I use to figure out how big the files are, their dates, etc., because its output isn’t formatted the way find’s is, with just the pure absolute path.

What I have now is functional but I would like to be fzf’ing through a nicely formatted list of files with sizes and dates rather than on the absolute paths.

I’m not sure what way to tackle this issue but for the moment I’m in a better place anyway.

To explain that better: find is returning a nice list of absolute paths, which I’m then marking in fzf. But I’d like to mark files from a list that shows dates and human-readable sizes, and still have the absolute paths returned. This is something I hope there is an existing solution for.

that is going to be very useful for me but maybe not in this instance. Thank you though

slayed it bro!

find supports an -exec parameter that will run the specified command for every file it finds. you can get what you’re after by trying something like:

find . -name '*.mp4' -exec ls -lsh {} \;

Beware of the rather esoteric syntax: you must escape the semicolon and leave a space between it and the {} special token, which is a placeholder for the current file that find has found.

thanks that will get my nice ls output from find. I will need to eventually return just the absolute path so it can be passed to rclone. The first thing that is coming to my mind would be to add the absolute path to that exec ls command with -d and then use AWK on the result of my fzf -m to get back to just having the absolute path before writing it to my text file.
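One way this could look, as a sketch rather than a finished tool: have find print the date, size, and absolute path as tab-separated columns, pick lines in fzf, then cut the first two columns off again. This assumes GNU find (for -printf) and GNU coreutils (numfmt, realpath); list_with_meta and paths_only are names made up for this post, and paths containing newlines will break it.

```shell
# list_with_meta DIR PATTERN
# Prints one line per match: date<TAB>human-readable size<TAB>absolute path.
# Needs GNU find (-printf) and GNU numfmt. Hypothetical helper name.
list_with_meta() {
  find "$(realpath "$1")" -type f -iname "$2" \
    -printf '%TY-%Tm-%Td\t%s\t%p\n' |
    numfmt --to=iec --field=2 --delimiter="$(printf '\t')"
}

# paths_only: drop the date and size columns, keeping only the path.
# Hypothetical helper name.
paths_only() {
  cut -f3-
}

# Usage:
#   list_with_meta /media/sda1/backup '*.mp4' | fzf -m | paths_only > files-from.txt
```

cut is used here instead of AWK only because the columns are tab-separated; AWK with a tab field separator would do the same job.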

I think that’s what I’ll do next time I’m stuck looking at two same-named files in different directories wondering which is the one I need.

My backup folders are generally broken up by date (but sometimes stuff goes in the wrong folder), so for now the absolute paths are actually 90% OK for me to figure out which file I need. I don’t want to spend too much time handling edge cases when it is already functional.

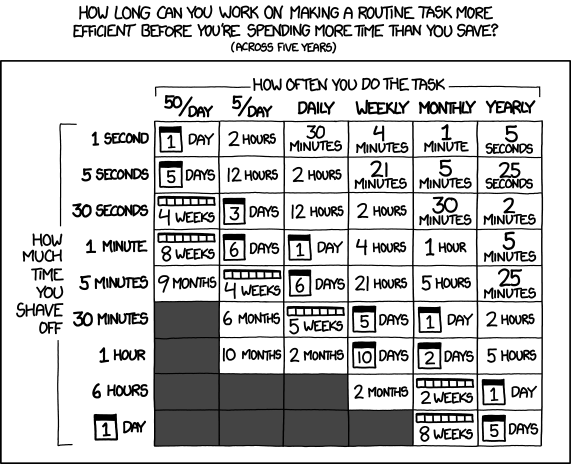

I think I’m at the sweet spot for now until those edge cases start taking up more time. Thanks for your help and everyone who replied