Even worse, I tried to enable pf on Void in rc.conf and then rebooted.

I had such a big brain-fart moment. For 24+ hours, my isc-dhcpd was not working. Because I had moved everything to VLAN trunks and assigned static IPs, I did not understand why I could not access the only device that was still using DHCP. I messed with the VLAN configs until I went mad.

I thought that maybe I had deleted the dhcpcd service from the wrong device during my troubleshooting, so I ripped the SD card out of my Pi 2 and plugged it into my PC… the dhcpcd service was still there, albeit not the dhcpcd-eth0 one. Even so, the global one works too: it defaults to DHCP on all interfaces (the board only has one, eth0 is connected, and it did work before). Regardless, I deleted the dhcpcd service and enabled the dhcpcd-eth0 one, just for consistency. I put the card back in, powered on the board… nothing.

Today I realized there was something wrong with dhcpd. It couldn’t have been the VLANs. I didn’t want to believe it, but that was the case. When checking the status of the dhcpd service, I saw it constantly restarting. I stopped the service and tried starting it manually to troubleshoot… SEGFAULT. FML.

I checked the configs and there was nothing wrong. Using the config from my old router worked. On the new one, I split the config into multiple conf files, one per subnet, to keep my sanity in check. I enabled one subnet conf at a time. First one: works. Second one: works. Both of them: segfault. You gotta be kidding me (I have more than 2 VLANs, currently 4 + the native VLAN, and that will probably grow).

I looked it up online and there was nothing wrong with the conf. Everything was OK… or so I thought. When running the command

`dhcpd -t -cf /etc/dhcpd.conf`

It sometimes segfaults; at other times it just doesn’t run, but still shows the typical output of the daemon (ISC server bla bla bla, all rights reserved). So I got tricked by the output. When it segfaults, it still shows that banner, because it prints it before running anything else, so I thought the config was good. When it silently crashes without a segfault, it still shows the banner. When it runs normally, it outputs the same thing. No software should show the normal output if it does not run.
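Since the banner shows up whether or not the test passes, the return code is what actually tells you the result. Here is a minimal Python sketch of that idea. It assumes ISC dhcpd is on the `PATH` and relies on the POSIX convention that `subprocess` reports a process killed by a signal as a negative return code, so a segfault shows up as `-11` (`SIGSEGV`). The helper names are hypothetical.

```python
import signal
import subprocess


def describe_exit(returncode: int) -> str:
    """Turn a subprocess return code into a human-readable verdict."""
    if returncode == 0:
        return "ok"
    if returncode < 0:
        # POSIX: subprocess reports "killed by signal N" as -N.
        return f"killed by {signal.Signals(-returncode).name}"
    return f"failed with exit status {returncode}"


def check_dhcpd_config(path: str = "/etc/dhcpd.conf") -> str:
    """Run dhcpd's config test and judge it by the return code, not the banner."""
    result = subprocess.run(
        ["dhcpd", "-t", "-cf", path],  # -t: test the config only; -cf: config file
        capture_output=True,           # the banner is printed either way, so ignore it
        text=True,
    )
    return describe_exit(result.returncode)


if __name__ == "__main__":
    print(check_dhcpd_config())
```

For a config that passes, this should print `ok`; for a crash like the one above, something like `killed by SIGSEGV`.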

It ended up being the host declarations. I was trying to give hosts different IPs based on the subnet, but I forgot that host declarations are global, and each of my conf files had the same host entries. Appending the VLAN to each host name, so every name was unique, solved the problem. Big brain-fart moment… It was not the brain that was big, but the fart.
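Because host declarations share one namespace across all the included files, a quick duplicate check over the split config would have caught this. Below is a minimal sketch. It assumes one `.conf` file per subnet in a single directory and host declarations written as `host NAME {` on one line. The directory and helper names are hypothetical, and this is a regex scan, not a real dhcpd.conf parser.

```python
import re
from collections import defaultdict
from pathlib import Path

# Matches declarations like `host nas {` or `host "nas" {` at the start of a line.
HOST_RE = re.compile(r'^\s*host\s+"?([\w.-]+)"?\s*\{', re.MULTILINE)


def find_duplicate_hosts(configs: dict) -> dict:
    """Map each host name declared more than once to the files declaring it."""
    seen = defaultdict(list)
    for filename, text in configs.items():
        for name in HOST_RE.findall(text):
            seen[name].append(filename)
    return {name: files for name, files in seen.items() if len(files) > 1}


def load_configs(directory: str, pattern: str = "*.conf") -> dict:
    """Read every per-subnet conf file in `directory` (hypothetical layout)."""
    return {str(p): p.read_text() for p in sorted(Path(directory).glob(pattern))}


if __name__ == "__main__":
    for name, files in find_duplicate_hosts(load_configs("/etc/dhcpd.d")).items():
        print(f"host {name} declared in: {', '.join(files)}")
```

An empty result means every host name is unique across the files. Appending the VLAN to each name, as above, is one way to get there.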

Fixed it today, so I should be safe to turn off my old router. Fingers crossed.

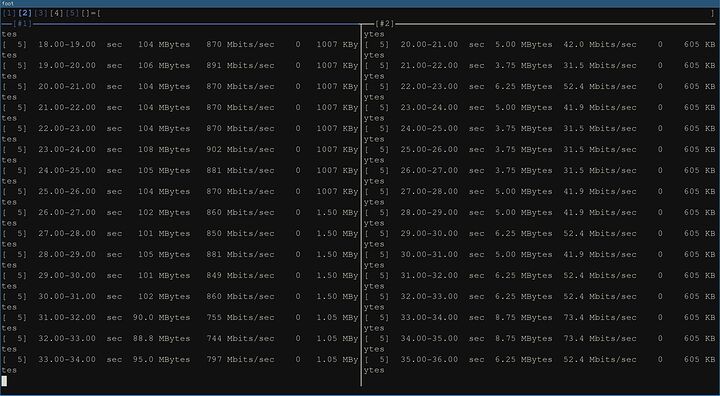

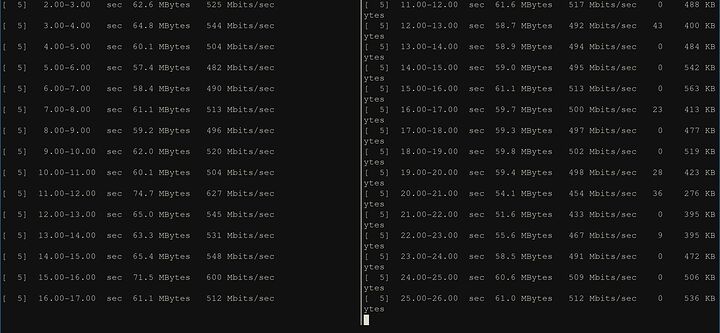

Since my network is in place now, I decided to run some iperf3 tests. This is not its final form: it is currently a router-on-a-stick, with all VLANs coming through a single gigabit port, while I look for a dual-port 2.5 Gbps Intel NIC (which are expensive…). The WAN is on Wi-Fi, so it’s technically not just a router-on-a-stick, but close enough; physically it looks like one.

It doesn’t look good for inter-VLAN traffic with 2 streams. On the left is PC to router; on the right is PC to a server in another VLAN.

Well, I should have used a different device for the other test, but I didn’t have any gigabit-capable ones powered on. My PC’s NIC was likely not happy about it. Still, the throughput on the router is close to gigabit.

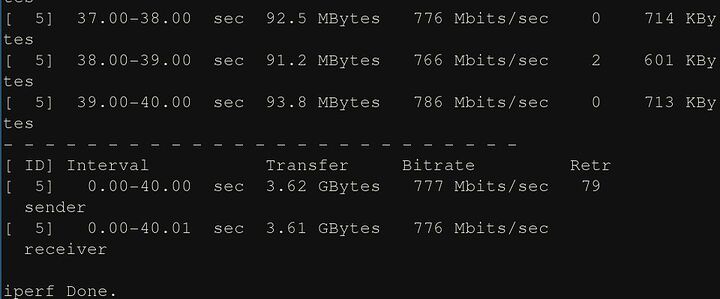

This one is just another run from PC to server, with a single stream. I get better throughput with 2 streams. I didn’t check the CPU usage on my PC, but I’m pretty sure one CPU thread was maxed out: iperf3 is pretty much single-threaded, to avoid losing performance by bouncing the thread from one CPU (and its cache) to the next.

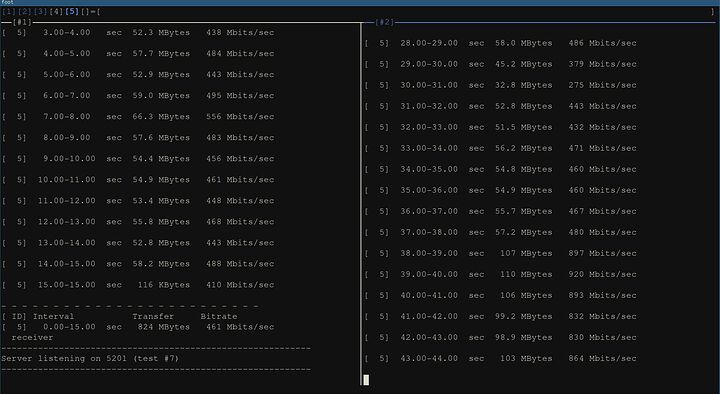

While writing this, I decided to power on something else; I kinda need to work on my HC4 anyway.

On the left is my HC4 to the Threadripper; on the right is my Pi 4 to my router. Obviously, the latter two are on the same VLAN, while I put the first two on different VLANs, so their traffic goes through the router. Looks very decent actually, about 1 Gbps of throughput combined.

I ran iperf3 in reverse (the -R option), so from the rkpr64 to the Pi 4, and kept the same direction from the HC4 to the TR. Not sure what I was hoping to achieve here.

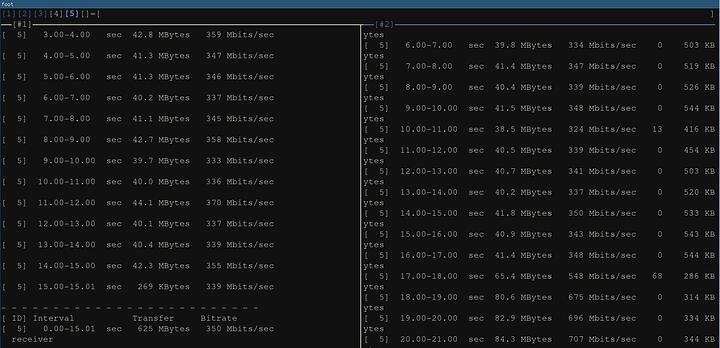

As a final test, I ran both the TR and the Pi against the RockPro64, first with both in the same direction and then with one in the opposite direction. It didn’t make a difference; they were both at around the same throughput. I was hoping this one would be different: since the link is full duplex, it should have been running 900 Mbps from the rkpr64 to the Pi and 900 Mbps from the TR to the rkpr64.
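A back-of-the-envelope model of the router-on-a-stick helps explain these numbers. This is a minimal sketch that assumes a single full-duplex gigabit trunk (1000 Mbps nominal in each direction, a bit less in practice) and ignores CPU limits. Inter-VLAN traffic crosses the trunk twice, arriving on one VLAN and leaving on another, so it costs both RX and TX. Traffic to or from the router itself uses only one direction. The flow labels are made up for this example.

```python
LINK_MBPS = 1000.0  # nominal gigabit, per direction (full duplex)


def trunk_load(flows):
    """Sum (rx, tx) Mbps on the router's single trunk port.

    Each flow is (kind, mbps), where kind is one of:
      "routed" - inter-VLAN traffic forwarded by the router
      "to"     - the router itself receives (e.g. iperf3 server on the router)
      "from"   - the router itself sends
    """
    rx = tx = 0.0
    for kind, mbps in flows:
        if kind == "routed":
            # Arrives tagged with one VLAN, leaves on the same port tagged with another.
            rx += mbps
            tx += mbps
        elif kind == "to":
            rx += mbps
        elif kind == "from":
            tx += mbps
        else:
            raise ValueError(f"unknown flow kind: {kind!r}")
    return rx, tx


def fits(flows, link_mbps=LINK_MBPS):
    """True if neither direction of the trunk is oversubscribed."""
    rx, tx = trunk_load(flows)
    return rx <= link_mbps and tx <= link_mbps


if __name__ == "__main__":
    # HC4 -> TR (routed) plus Pi 4 -> router: both land on the trunk's RX side.
    print(trunk_load([("routed", 500), ("to", 500)]))
```

In the earlier run, HC4 to TR (routed) plus Pi 4 to router both land on RX, which matches the roughly 1 Gbps combined. The last test, TR to rkpr64 plus rkpr64 to Pi, splits across RX and TX, so on paper it fits. If it still tops out lower, the bottleneck is probably elsewhere, for example the CPU.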

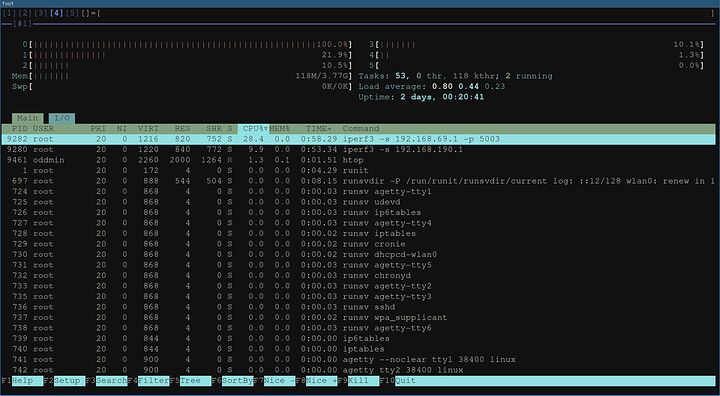

CPU utilization was never above 70% and the CPU load was always below 0.5 during single-stream testing. When running 2 streams to the router, I managed to peg a CPU core at 100%. That’s htop on the RockPro64.

I just hope it’s iperf3’s scheduling that is bad, and not something in the kernel. I’m not sure how it would behave with actual data running on the LAN instead of iperf3.

Look at that RAM usage though. So much wasted space, I could have used that for the file system.

Another thing to note is that I don’t have any fancy rules on the VLAN interfaces. Once I add firewall rules, it might get a bit slower, although the ruleset should not be too big.

Unrelated

Do any of you who read this blog know how to design stuff for 3D printers? I would like to modify a design I found online for a RockPro64 case, turning it from a normal-sized one into a case that can accommodate a half-height PCI-E device (a dual-port NIC), but I have no idea how to open such a file. I am completely clueless about 3D printing, so I don’t even know what file extensions those designs use. If there was a case on the market for a RockPro64 router, I would have already bought one, but the only cases available are the humongous metal NAS case, a small plastic one that fits nice and tight around the board, and a similar metal one that works as a heatsink. But no options for PCI-E expansion.

A good question for @SgtAwesomesauce about 3D printing… he’s been doing a lot of 3D printing projects as well as printer setups and optimizations. He gave me a few tools to use in the past, but I forget their names.

The motherboard designers who thought it was a good idea to put the CMOS battery under the first x16 slot should not be fired… they should be tried for crimes against humanity.

In other news, I set my ECC RAM to 3200 MT/s in UEFI and my 1st-gen Threadripper POSTed just fine. I almost feel like pulling the trigger on another kit of 4 sticks: with the way I configured Windows and my Proxmox VM lab, I really feel the need for more than 64GB of RAM. Although, in total, I have not provisioned my VMs beyond the 48GB mark.

Yeah, 64GB doesn’t go very far when you start virtualizing things. I couldn’t get the six-slot board I was eyeballing for my home server, so I had to go with 512GB ECC.

IMO, depending on what you run, 64GB is more than plenty. If I were not virtualizing Windows and did not have a virtual lab inside a VM, I would be totally fine… Probably 32GB would have been enough for what I am planning, and maybe even that would be a bit much. But not in this scenario.

That said, I have never had my lab and my Windows VM running at the same time, so maybe I am just imagining things. I always shut things down when I am done with them, at least on this puppy, since the TR should only be used as a test platform. Just like any PC, it should be off when not in use (although I have been keeping it up quite a bit lately, which I don’t like).

64GB is what I have in my laptop, and it does fine running a Windows VM and a Linux VM, both for development.

The home server is going to run those plus several others.

Again, I may be imagining that I need more RAM; in reality, I am most likely not running them at the same time. Especially Windows: I always turn it off if I am not using it.

I usually do also, because the fans go brrrrrrr when running it, and that gets annoying.

A few years ago, I used to hate reboots. And to this day, I don’t reboot my PC often, mostly because it is slow to load up Firefox and because of all the panels I have to open in dvtm. Booting and rebooting themselves are super fast, though. Oh, and I think I still hate rebooting server hardware: waiting 5 to 10 minutes for all the POSTing and initialization is egregious!

But recently, I have really come to appreciate reboots. Long uptimes are nice, but you know what is not nice? Worrying that, with all the system updates and configuration changes, your system will not boot up properly anymore. I think most people here who have worked with or within big companies have had that happen to them, be it with a server, a router, or a switch with more than 3 years of uptime.

Rebooting just gives you the assurance that everything is nice and fresh. Sure, there are ways to make sure some services are updated and start up properly, like restarting the systemd service. Even on Void, I often use xcheckrestart (part of xtools) to see which services or programs need restarting. In all honesty, I don’t know of a more powerful tool for this. And unlike Ubuntu, which uses systemd hooks in apt to automatically restart services that get updated (which is horrible!), you can check what needs to be restarted and restart things at your own pace. That means you can update your system at any time… well, mostly.
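One way to find processes that need restarting relies on the fact that a process still running old code keeps the replaced files memory-mapped, and Linux marks those mappings with ` (deleted)` in `/proc/<pid>/maps`. That is roughly the idea behind tools like xcheckrestart, but the sketch below is not xtools’ code. The function names and the ignore list are assumptions, and the sketch only sees processes you have permission to inspect.

```python
from pathlib import Path

# Deleted mappings that are normal (anonymous shared memory), not "needs restart".
IGNORED_PREFIXES = ("/memfd:", "/SYSV", "/dev/shm/")
SUFFIX = " (deleted)"


def deleted_mappings(maps_text: str) -> set:
    """Return paths that are memory-mapped but no longer exist on disk."""
    stale = set()
    for line in maps_text.splitlines():
        # Fields: address perms offset dev inode [pathname]
        parts = line.split(maxsplit=5)
        if len(parts) < 6 or not parts[5].endswith(SUFFIX):
            continue
        path = parts[5][: -len(SUFFIX)]
        if not path.startswith(IGNORED_PREFIXES):
            stale.add(path)
    return stale


def processes_needing_restart(proc_root: str = "/proc") -> dict:
    """Map PID -> stale files for every process whose maps we can read."""
    result = {}
    for entry in Path(proc_root).iterdir():
        if not entry.name.isdigit():
            continue  # skip /proc/self, /proc/sys, ...
        try:
            text = (entry / "maps").read_text()
        except OSError:
            continue  # process exited, or we lack permission
        stale = deleted_mappings(text)
        if stale:
            result[int(entry.name)] = stale
    return result


if __name__ == "__main__":
    for pid, files in sorted(processes_needing_restart().items()):
        print(pid, ", ".join(sorted(files)))
```

Run as root after an update, this should list processes still holding old libraries or binaries, which are the candidates for a restart.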

I had a big shock 2 or 3 days ago when my HC4 was not starting properly. I thought its network didn’t connect or something. Then I realized it was the nfs-server service not starting up on the TR (where the HC4’s rootfs currently resides). The reason? I had updated the system, and that included a kernel update. Kernel updates on Void and pretty much all other systems wipe the /usr/lib/modules/ folder of the previous version. They can keep parallel kernel series, like linux5.15 and linux5.18, but if you update linux5.15.57 to linux5.15.61, then the .57 modules will be wiped.

That’s what happened in my case: nfs-server crashed and did not start until I rebooted into the new kernel, which had the NFS modules present. I believe the only system that avoids this is NixOS, which never deletes old configurations, kernels, modules or anything else unless you run the cleanup scripts once you have verified that your system boots your configuration properly.
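A cheap way to spot this situation before a service needs a module is to check whether the running kernel’s modules directory still exists. This is a minimal sketch that assumes modules live under `/usr/lib/modules/<release>`, as on Void, where `/lib` points to `/usr/lib`. The function name is hypothetical.

```python
import os
from pathlib import Path
from typing import Optional


def running_kernel_modules_missing(
    modules_root: str = "/usr/lib/modules", release: Optional[str] = None
) -> bool:
    """Return True if the modules directory for the running kernel is gone.

    After an in-place kernel update removes the old version's directory,
    modules that are not loaded yet (e.g. the NFS server ones) can no
    longer be loaded until you reboot into the new kernel.
    """
    if release is None:
        release = os.uname().release  # same string `uname -r` prints
    return not (Path(modules_root) / release).is_dir()


if __name__ == "__main__":
    if running_kernel_modules_missing():
        print("Running kernel's modules are gone: reboot before starting new services.")
```

Modules that were already loaded keep working, so the failure only shows up when something like nfs-server tries to load a new one.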

Back to reboots. I have been messing with my networking service on Void. I’ve been adding bridges and VLANs to it over the past couple of days and I’ll keep ’em comin’. The thing is, when I run the ip link commands on an already booted system, bridging interfaces may work fine. But when I put them in /etc/rc.local, that’s when the fun begins. I rebooted my TR box about 20 times today and I can’t get enough of it; it works every single time. And it boots smoking fast; Proxmox is dog slow compared to Void.

I am happy about the reboots because earlier I had a wrong configuration that worked while the system was running, but failed when I rebooted and locked me out. Not having a display output on it (because I blacklisted amdgpu for both GPUs for PCI-E passthrough, lmao), I had to use my rescue USB running hrmpf to mount the rootfs (ZFS) and edit rc.local to get myself back into the system. While I was at it, I configured DHCP on the 2nd interface, which I am not using, so that if I ever lock myself out again, I can just plug it into an access-mode port on my switch and it should get an IP that I can use to get into the box.

Rebooting just works. I am glad for reboots. I love reboots! I think this may be a controversial view, but once you try it, you can’t stop.

I can’t believe how well the forum works with just a keyboard. Ain’t the finest experience, but definitely usable.

My wireless KB+touchpad combo suffered brain water damage and kept typing the letter “n,” so I switched it off, took out its batteries and I’m letting it dry out overnight. I’ve been using one of my trusty SK-8115s on the forum, so no cursor. I have 2 mice, 2 touchpads and a trackball waiting to be used; I was just too lazy to unpack one of the touchpads. My trackball is being used on my TR Windows VM, although its dongle is on a USB extension, so I could easily move it, but the TB is giBALLtic.

I’ve been using my keyboard+touchpad again, works like brand new.

The forum could be a lot more keyboard-friendly than it is if the custom top bar/banner that says “Return to level1tech” was not there. Even on threads where it doesn’t show, it is still there, just hidden. If you hit F6 to highlight the URL bar and then hit Tab a bunch of times, the first thing you land on in the page is that custom banner.

The bugs I encountered: in a long thread, like Post What You Are Working On or whatever it’s called, when I was scrolling through the comments with j and k and then hitting Tab to give someone an upcummie, sometimes Tab would just jump to a random older comment (not necessarily one I had upcummied before), and then I had to scroll down to the one I was on again. I made it easier by using Page Down.

Another weird bug was caused by the custom banner. When I was in a long thread and went from F6 to my icon at the top to check notifications by hitting Tab a bunch of times, it would reveal the banner to return to L1T, but the forum would also jump from the comment I was at to the top of the page. Completely asinine. Without the banner, I doubt I would be pushed to the OP; it would just jump to the L1T icon near the thread title.

Yesterday I finished Hitman 5. I didn’t really enjoy the game, at least not like the previous installments, mostly because of the challenges. I just didn’t feel satisfied until I had played through it a few times, completing the challenges, and that was such a chore. The game got boring a quarter of the way through. I took a break of a month or more from it, because I really didn’t feel like playing it anymore, and left it at the last mission.

Yesterday I wanted to finish it. I didn’t care that I wasn’t getting all the challenges or that I couldn’t be a Silent Assassin. I just killed anyone who stood in the way.

Then I played DOOM. I got the latest versions of Freedoom and GZDoom, grabbed Brutal Doom v21 and played Going Down on Ultra-Violence with pistol starts. Boy, did I have a lot of fun. Even with all the deaths (it is pretty brutal), I had fun conquering levels 1 through 3.

Why are games these days so overly complicated? Game devs just squeeze the last drop of enjoyment out of their games. I remember playing Thief 3, Dishonored, DOOM 3, Command & Conquer 2 and 3 and, obviously, the original DOOM. All of them were pretty simple games; if you wanted a challenge, you played on a higher difficulty. I would usually start a game on medium AI, then, after finishing it and getting the hang of it, move on to hard. And I would replay games quite often.

But modern games? I finish them and never look back. I played Hitman 2 more than 10 times. Hitman 4 I only played once, but I managed to complete it. Hitman 5? I could barely get through it. I don’t feel like buying the new Hitman 1, 2 and 3. I have Hitman: Sniper Challenge and I haven’t even started it.

Am I just being nostalgic for old games, or is the game industry possessed by demonic managers who only think about making money and don’t leave the devs enough leeway to actually make enjoyable games?

No, you are not just being nostalgic for old games; I have noticed that since 2011, while game visuals have steadily improved, gameplay and the desire to finish a game have steadily declined. I believe the fault for the decline in games lies with the publishers and poorly trained devs.

I just had a weird bug with OpenVPN or something. My rkpr64 (router) was pinging my VPN’s outside IP just fine (I had an mtr open), but another mtr towards my VPN’s internal address showed a lot of lost packets, and my OpenVPN tunnel was reconnecting every 2 minutes or so. Not sure what the problem was.

In all fairness, weirdness with OpenVPN is nothing new to me. On my previous router (an RPi 3), my VPN would disconnect sometimes, but it always worked well after I reconnected it. It happened on the rkpr64 too, so I just opened a new terminal (with abduco, so the session stayed on the rkpr64) and started the VPN. It worked for a few days. But now it just refuses to work for more than 2 minutes.

Killing and reconnecting did not fix it.

Interestingly enough, the USB device (my Wi-Fi card) was on usb7, and after the reboot it’s on usb1. I did not notice any disconnects. I would understand the problem if the mtr to the VPN’s public IP also showed lost packets, but there were 0 lost out of a few hundred, so the Wi-Fi card had nothing to do with it. A reboot fixed the issue with the VPN (I have a cron job that connects to my VPN at reboot; I should probably convert it to a runit service, so I don’t have to reconnect manually if it dies).

Never mind, I just hit the same problem a few minutes after the reboot. Leaving mtr running a bit longer, it looks like I lose about 3% of packets to my VPN’s public IP address, with 9 of the 13 hops at 0% loss, while loss to the VPN’s internal IP address is about 60 to 90%, never dropping below 50%. It’s pretty bad. It is definitely not the internet or the router on this side. The problem is likely on the other side.

Hope the internet will be fine tomorrow morning.

I remembered that OpenVPN sometimes needs to be updated. I’m bad at keeping my router updated, but I did update pfSense 51 days ago (that was the uptime, and I only reboot on updates; Proxmox had 115 days of uptime, since I update it more frequently but don’t reboot it)… Anyway, given the horrible disconnects, I knew I could not just update pfSense from a regular SSH session.

I SSH’ed into Proxmox on the other side, started a screen session and SSH’ed into the pfSense box from there. It was a good choice: I had to reconnect to screen 4 times during the update. I didn’t see what was updated, though. After rebooting pfSense, packet loss to OpenVPN’s internal IP started dropping below 50%. Currently at 1750 packets sent with 38% loss, and still going down.

Seems like the problem was with pfSense, which is not something you see often. I’m glad I took the time to fix it, instead of hoping it would go away by tomorrow and being met with the same problem.

No, the issue persists. It’s definitely the network. Weird how it worked for a bit.

Now I can’t even connect to OpenVPN; it complains about certificates. Kinda BS. I moved to WireGuard for my other site. Works for now. But I still have issues with some websites. I tested DuckDuckGo just to see if the page loads: no dice through WireGuard, but it works through OpenVPN. I don’t really use it; it was just an example.

Now I feel kinda stupid. The problem is worse now, tho’. I get TLS handshake errors telling me to check my network connection. From what I can tell, the network is fine up to the OpenVPN server’s router. I fear the issue may be something local, like a port going bad or the switch misbehaving. I just hope it’s nothing like that and it’s just a network problem with my ISP over at my other site, but from the looks of it, I highly doubt it.

Too tired to try to troubleshoot further, I’ll try tomorrow.

Today I unplugged my router’s RJ45 cable to move a cable around; I only did it for like 5 seconds. I was prepared to restart all my SSH sessions and relaunch my zfs send. When I unplugged it, the router did not even detect that its port was unplugged for a while: the Ethernet port’s LEDs on the router stayed lit, while on the switch they were off. When I plugged the cable back in, the LEDs on the router turned off, then the switch LEDs turned on, followed by the router’s. Yes, the LEDs on the router turned off only after I plugged the cable back in.

My SSH connections did not flinch, my zfs send did not bat an eye and my VPN connection logged no events. The outage was so short that, to everything on my network, it looked like just a few lost packets, which happens more often than I’d like (about 1.3% out of 15k packets according to MTR to the gateway’s IP, and about 3.4% to the VPN’s internal IP address, tho I don’t notice that at all during my daily activity).
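One reason a 5-second unplug goes unnoticed is TCP retransmission with exponential backoff: the kernel keeps resending unacknowledged data and doubles the timeout each time, long before it gives up. This is a rough model that assumes Linux defaults (200 ms minimum RTO, 120 s maximum RTO, `net.ipv4.tcp_retries2 = 15`) and a LAN connection whose RTO sits at the 200 ms floor. Real RTOs depend on the measured RTT, so treat the numbers as illustrative.

```python
RTO_MIN = 0.2     # seconds; Linux minimum retransmission timeout (assumed starting RTO)
RTO_MAX = 120.0   # seconds; Linux maximum retransmission timeout
RETRIES2 = 15     # default net.ipv4.tcp_retries2


def retransmit_times(initial_rto=RTO_MIN, retries=RETRIES2, rto_max=RTO_MAX):
    """Seconds after the original send at which each timeout expires.

    The first `retries` entries are retransmissions; the last entry is
    when the kernel gives up and drops the connection.
    """
    times, elapsed, rto = [], 0.0, initial_rto
    for _ in range(retries + 1):
        elapsed += rto
        times.append(round(elapsed, 1))
        rto = min(rto * 2, rto_max)  # exponential backoff, capped
    return times


def recovery_time(outage_s, **kwargs):
    """First retransmission that fires after the link is back, or None."""
    for t in retransmit_times(**kwargs)[:-1]:
        if t >= outage_s:
            return t
    return None  # the outage outlasted every retry: connection dropped


if __name__ == "__main__":
    print(retransmit_times())
    print(recovery_time(5))
```

With these assumptions, the give-up time comes out to 924.6 seconds, the same figure the Linux `ip-sysctl` documentation gives for the default `tcp_retries2`. For a 5-second outage, the retransmission at about 6.2 s is the first that can get through.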

It is quite fascinating how resilient the software we use is to the unreliability of the internet. And the internet is unreliable. The bad part is that most people nowadays believe the internet is not only reliable, but that it will always be up. We see sayings like “whatever goes on the internet, stays on the internet,” but that is only true because there are still people who treat the internet as the unreliable mess that it is and make their own decentralized backups of online data.

If more people treated the internet the way it should be treated, as the unreliable medium that it is, we would not have such difficult times.

> strong men create good internet

> good internet creates weak men

> weak men create bad internet

> bad internet creates strong men

The software protocols we have today were created by absolute chads. Today’s soyboys are making horrible software that becomes unresponsive the moment the connection from the browser to the server gets severed. I can’t wait for the day people start decentralizing again and get rid of the abominations that are internet 2.0 and 3.0.

For real, if people planned for the day the internet goes down, they wouldn’t care if a hurricane hit and took out the infrastructure. Back in the day, people just turned on their radios and picked up waves broadcast from all around. Nowadays, everyone should have a solar-powered router that forms a mesh network with all the other routers around, through protocols like B.A.T.M.A.N. or BMX6.

But we need discovery protocols for the services around us. Sure, you can nmap around for ports 80 and 443, but that ain’t really gonna cut it. We should have a protocol in which you broadcast a packet with a small TTL, then get unicast replies back listing the services available nearby. Of course, each response would come from a server set up by an admin who wants their service to be public. For the most part, it would get rid of search engines for discovering nearby stuff.
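The query/reply part of that idea fits in a toy sketch. It assumes UDP, a made-up query payload and a JSON list of services as the reply. All of these are hypothetical, and no existing protocol is being described. The client sets a small TTL so the query cannot travel far, and each responder answers with a unicast packet. On IPv4 a plain broadcast never crosses a router anyway, so a version that reaches a few hops away would need a multicast group, which is where `IP_MULTICAST_TTL` matters. mDNS/DNS-SD does something similar on a single link.

```python
import json
import socket
import threading

QUERY = b"WHO-SERVES?"  # hypothetical query payload


def run_responder(sock: socket.socket, services: list, stop: threading.Event) -> None:
    """Answer each query with a unicast JSON list of this admin's public services."""
    sock.settimeout(0.2)  # wake up regularly to check `stop`
    while not stop.is_set():
        try:
            data, addr = sock.recvfrom(1024)
        except socket.timeout:
            continue
        if data == QUERY:
            sock.sendto(json.dumps(services).encode(), addr)  # unicast reply


def discover(target: tuple, ttl: int = 2, wait: float = 1.0) -> list:
    """Send one query, then collect replies until none arrives for `wait` seconds."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_TTL, ttl)                  # unicast hop limit
        sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, bytes([ttl]))  # multicast hop limit
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)              # allow broadcast targets
        sock.settimeout(wait)
        sock.sendto(QUERY, target)
        found = []
        try:
            while True:
                data, addr = sock.recvfrom(65535)
                for svc in json.loads(data):
                    svc["host"] = addr[0]  # remember who offered the service
                    found.append(svc)
        except socket.timeout:
            pass
        return found
```

For a quick test on one machine, run `run_responder` in a thread on a socket bound to `127.0.0.1` and point `discover` at that address. On a real LAN, the target would be a broadcast or multicast address instead.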

Well, I can dream. I am too much of a noob to write such a piece of software; I could probably only write insecure exploitware™. But at least I know that, even if I tried, it would not be soyftware™.

SSH in particular will hang onto a connection for much longer than a 5 second interruption. Definitely the product of strong men (and women?).