My friend begs to differ. Labelling and organization are key.

Within limits… that logic changes when you get clotheslined by cat6e and rip a line out of the wall or worse…

At least turn those switches around so it’s business in front and party in the back for our sake lol…

@wendell

Has the L2ARC been enabled? I’ve heard advice that the L2ARC can cause memory problems on smaller-memory servers. Specifically, the table storing the metadata for the L2ARC ends up using a big chunk of memory, and that causes problems. Any comments on the L2ARC size and the filesystem block size? I’m guessing that if you’re using this for video, you’ve increased the block size (recordsize).

Soo pretty!!

They are not frontside exhaust though.

I’ve heard advice that the L2ARC can cause memory problems on smaller-memory servers.

This is true, to an extent. I don’t remember the math exactly, but every block stored in L2ARC needs a fixed amount of RAM for its header. You’d need a fairly unbalanced server to hit this problem, though; you’ll typically only see it with under 32GB of RAM and TBs of L2ARC.
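To put rough numbers on that: because each cached block carries its own header in RAM, the overhead scales with L2ARC size divided by the average block size. The sketch below assumes about 70 bytes per header, a commonly quoted figure for recent OpenZFS (older releases used noticeably more), so treat the output as an order-of-magnitude estimate rather than an exact value for any particular ZFS version.

```python
# Rough estimate of the RAM consumed by L2ARC headers.
# ASSUMPTION: ~70 bytes of ARC memory per block held in L2ARC. This is a
# commonly quoted figure for recent OpenZFS; the real value depends on the
# ZFS version, so treat results as order-of-magnitude estimates.
HEADER_BYTES = 70

KIB = 1024
GIB = 1024 ** 3
TIB = 1024 ** 4


def l2arc_header_ram(l2arc_bytes: int, block_bytes: int,
                     header_bytes: int = HEADER_BYTES) -> int:
    """Approximate bytes of RAM needed to index a completely full L2ARC.

    block_bytes is the average size of the cached blocks, which is capped
    by the dataset's recordsize (or a zvol's volblocksize).
    """
    blocks = l2arc_bytes // block_bytes
    return blocks * header_bytes


if __name__ == "__main__":
    # Same 2 TiB cache device, three different average block sizes.
    for block in (8 * KIB, 128 * KIB, 1024 * KIB):
        ram = l2arc_header_ram(2 * TIB, block)
        print(f"2 TiB L2ARC, {block // KIB:>4} KiB blocks: "
              f"{ram / GIB:6.2f} GiB of RAM for headers")
```

Under that assumption, 2 TiB of L2ARC costs about 17.5 GiB of RAM at 8 KiB blocks, about 1.1 GiB at 128 KiB, and about 0.14 GiB at 1 MiB. That is why a large recordsize, as you’d typically use for video, makes a big L2ARC much cheaper on a small-memory box.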

Since we’re showing off our setups, here’s my new and improved, very slimmed-down rack. I got rid of the 24-bay Norco filled with noisy/hot Seagate 2TB SAS drives in favor of 12 8TB WD Reds in the FreeNAS build in the 2U Supermicro. Everything is wired up with new MikroTik switches (1Gb/s and 10Gb/s). The 1U Supermicro is a pfSense build with an E3-1220L v2, and then I have an R210 II and an R720, both as VMware hosts.

My friend begs to differ. Labelling and organization are key.

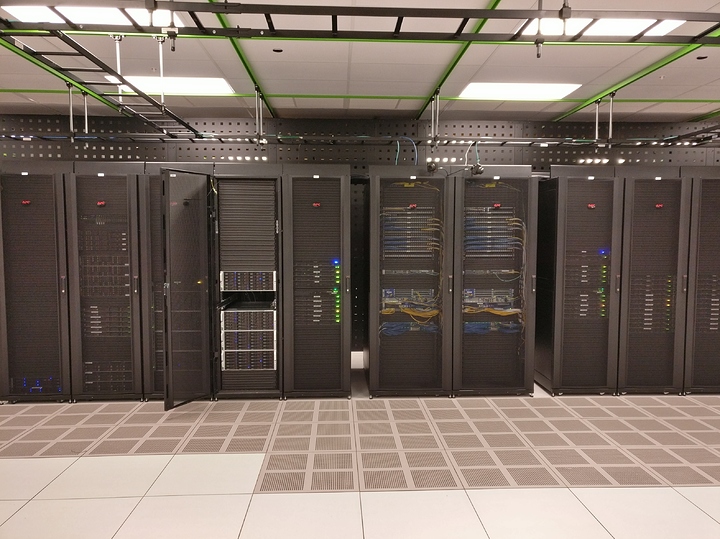

This is what my setup at work looks like.

So bulgy!

I don’t have a picture of it fully populated, but just imagine the rest of those blanks filled in. I only went on site for the initial deployment because no one knew how to configure the 10G switches.

Just a bit of an explanation of the equipment there:

This is the first-stage deployment of our OpenStack cluster. The large chassis in the open cabinet and the one to its left are our Ceph cluster. Each server has 36 bays (some on the back of the server). 1PB of storage, 3 copies, spinning rust. Our second stage added a second storage tier, SSD, because we found we didn’t have enough IOPS across the 360 3TB disks we installed. We built out 4 SSD servers: 960GB x 144 = ~138TB. All numbers are raw storage; realistically, divide by 3 for actual usable space.
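The raw-versus-usable arithmetic above can be checked quickly. This is a minimal sketch that takes the drive counts and sizes from the post, uses decimal units as drive vendors do, and assumes replicated pools with three full copies; it ignores filesystem overhead and the free space Ceph wants you to keep below its near-full warning.

```python
# Raw vs. usable capacity for the two Ceph tiers described above.
# Drive counts and sizes come from the post; decimal units (1 TB = 1000 GB).
# ASSUMPTION: replicated pools with three full copies of every object;
# overhead and near-full headroom are ignored.
REPLICAS = 3


def raw_tb(drive_count: int, drive_tb: float) -> float:
    """Total raw capacity in TB."""
    return drive_count * drive_tb


def usable_tb(raw: float, replicas: int = REPLICAS) -> float:
    """Capacity left once every object is stored `replicas` times."""
    return raw / replicas


hdd_raw = raw_tb(360, 3.0)   # 360 x 3 TB spinning disks
ssd_raw = raw_tb(144, 0.96)  # 144 x 960 GB SSDs across 4 servers

if __name__ == "__main__":
    for name, raw in (("HDD tier", hdd_raw), ("SSD tier", ssd_raw)):
        print(f"{name}: {raw:.2f} TB raw -> {usable_tb(raw):.2f} TB usable")
```

That works out to 1080 TB raw (the "1PB") and about 360 TB usable for the spinning disks, and about 138 TB raw and 46 TB usable for the SSD tier; in practice you’d plan on somewhat less so the cluster never runs close to full.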

Our legacy lab is in the rack to the left of the Ceph cluster (the one with a mishmash of servers). That’s our testing environment plus any legacy stuff we needed to keep powered on.

Far left is our HPC gear: GPU-accelerated compute systems for doing big data™ analytics. We’re using Nvidia Tesla something-or-others. I forget exactly what’s in them, but they’re fun to work with. We’ve only got a couple because each server cost more than my car. They’ve also got 5TB of FusionIO storage in there, because apparently 1TB of RAM wasn’t enough.

The rack to the right of the Ceph cluster holds our OpenStack admin and security servers. We’re running everything on bare metal there; when we built this out, containers were still new and we weren’t ready to take the plunge.

The 3 racks to the right of the networking gear are all compute servers: 256GB RAM, 32 cores, and 4x 10GbE (2x for VM traffic, 2x for storage) into the top-of-rack (ToR) switch.

Now on to networking. We decided to go with 10GbE to the top of rack, then trunked it back to the network cabinets with 4x bonded 10GbE from each switch. The network is all Juniper gear, except for an ASA that provides VPN. We’ve got 3 uplinks out of the datacenter: 2x 10GbE and 1x 5GbE.
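With 4x 10GbE per compute server into the ToR switch and 4x 10GbE bonded back to the network cabinets, the interesting number is the oversubscription ratio at each ToR. The post doesn’t give a per-rack server count, so the sketch below uses a hypothetical `SERVERS_PER_RACK` purely for illustration; everything else comes from the figures above.

```python
# Edge-to-uplink oversubscription for one top-of-rack switch.
# From the post: 4x10GbE per compute server into the ToR, 4x10GbE bonded
# from each ToR back to the network cabinets.
# SERVERS_PER_RACK is HYPOTHETICAL; the post doesn't state it.
SERVERS_PER_RACK = 16   # hypothetical, for illustration only
NIC_PORTS_PER_SERVER = 4
UPLINK_PORTS = 4
PORT_GBPS = 10


def oversubscription(servers: int, ports_per_server: int,
                     uplink_ports: int, port_gbps: float) -> float:
    """Ratio of server-facing bandwidth to uplink bandwidth (N:1)."""
    edge = servers * ports_per_server * port_gbps
    uplink = uplink_ports * port_gbps
    return edge / uplink


# Datacenter egress from the post: 2x 10GbE + 1x 5GbE.
WAN_GBPS = 2 * 10 + 5

if __name__ == "__main__":
    ratio = oversubscription(SERVERS_PER_RACK, NIC_PORTS_PER_SERVER,
                             UPLINK_PORTS, PORT_GBPS)
    print(f"{SERVERS_PER_RACK} servers per rack -> {ratio:.0f}:1 oversubscription")
    print(f"Aggregate datacenter uplink: {WAN_GBPS} Gb/s")
```

Because every port here runs at the same speed and the server NIC count matches the uplink count, the ratio simply equals the number of servers per rack. Traffic between servers on the same ToR never touches the uplink, but storage traffic to the Ceph racks presumably does, which is where the 2x/2x NIC split matters.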

Nice. We have space in two colos that duplicate each other, all Cisco UCS. Most of the 30 blades are dual-socket 20- or 22-core Xeons with 768GB of RAM; the older ones are dual 12-cores with 384GB of RAM. Storage is ~20TB of XtremIO and Pure M50. The UCS chassis connect over 40Gbps port channels (quad 10G), and all the servers have 8x 10G NICs for expansion as needed. Kinda industry-standard stuff: VMware ESXi, NSX, all that good jazz.
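One detail worth knowing about those quad-10G port channels (and the 4x bonded ToR uplinks above): they add up to 40Gbps across many flows, but each individual flow is pinned to a single member link so its packets stay in order. The sketch below illustrates that behavior; the CRC32-over-5-tuple hash is an illustrative stand-in, not Cisco’s or Juniper’s actual load-balancing algorithm, and the IP addresses are hypothetical.

```python
# Why a 4x10G port channel is "40G" in aggregate but ~10G per flow.
# Port channels / LACP bonds pick a member link per flow by hashing header
# fields, so every packet of a given flow uses the same link.
# ASSUMPTION: CRC32 over the 5-tuple is an ILLUSTRATIVE hash only, not any
# vendor's real algorithm. IP addresses below are hypothetical.
import zlib
from collections import Counter
from typing import NamedTuple


class Flow(NamedTuple):
    src_ip: str
    dst_ip: str
    proto: int
    src_port: int
    dst_port: int


MEMBERS = 4  # quad 10G, as in the post


def member_for(flow: Flow, members: int = MEMBERS) -> int:
    """Deterministically map a flow to one member link index."""
    key = "|".join(map(str, flow)).encode()
    return zlib.crc32(key) % members


if __name__ == "__main__":
    # 1000 flows that differ only in source port spread across all members...
    flows = [Flow("10.0.0.5", "10.0.1.9", 6, 40000 + i, 443) for i in range(1000)]
    print(Counter(member_for(f) for f in flows))
    # ...but any single flow always maps to the same member link.
    print(member_for(flows[0]) == member_for(flows[0]))
```

The practical consequence is that one large transfer between two hosts tops out at a single member’s 10G, while many VMs or many Ceph/storage connections can use the full aggregate.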

I’m hoping we’ll get some GPU boxes at some point, but we’re doing pretty well with what we have for our current needs.

In the video you say you need to set up something to email you if you lose a disk, etc.

Would be great if you shared your monitoring solutions/scripts.
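For anyone wanting a starting point while waiting for the real scripts, here is a minimal sketch of a disk-loss alert for ZFS: it runs `zpool status -x`, which prints "all pools are healthy" when nothing is wrong, and emails the output otherwise. It assumes the `zpool` CLI is on PATH, that it runs from cron on the storage box, and that an unauthenticated SMTP relay is reachable; `SMTP_HOST` and the addresses are hypothetical placeholders. FreeNAS’s built-in alert emails may already cover this on that platform.

```python
# Minimal ZFS health check that emails an alert when any pool isn't healthy.
# ASSUMPTIONS: `zpool` is on PATH, the script runs periodically (e.g. cron),
# and SMTP_HOST accepts mail without auth. Host and addresses are
# HYPOTHETICAL placeholders.
import smtplib
import socket
import subprocess
from email.message import EmailMessage

SMTP_HOST = "smtp.example.com"     # hypothetical relay
MAIL_FROM = "zfs@example.com"      # hypothetical sender
MAIL_TO = "admin@example.com"      # hypothetical recipient
HEALTHY = "all pools are healthy"  # what `zpool status -x` prints when OK


def pool_is_healthy(status_output: str) -> bool:
    """True if `zpool status -x` reported no problems."""
    return status_output.strip() == HEALTHY


def build_alert(status_output: str, host: str) -> EmailMessage:
    """Wrap the zpool output in an email addressed to the admin."""
    msg = EmailMessage()
    msg["Subject"] = f"ZFS problem on {host}"
    msg["From"] = MAIL_FROM
    msg["To"] = MAIL_TO
    msg.set_content(status_output)
    return msg


def main() -> None:
    result = subprocess.run(["zpool", "status", "-x"],
                            capture_output=True, text=True, check=False)
    output = result.stdout + result.stderr
    if not pool_is_healthy(output):
        with smtplib.SMTP(SMTP_HOST) as smtp:
            smtp.send_message(build_alert(output, socket.gethostname()))


if __name__ == "__main__":
    main()
```

Any output other than the healthy message (a DEGRADED pool, a faulted disk, or even "no pools available") triggers an email, which errs on the side of alerting.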

Do you know if Kyle is going to leave that GTX 1050 in the storage server? (Kinda pointless after he finishes the install/initial config.)

Why not use something more like a GT 710?

Just what he had on hand. I readied a PCIe x1 nv200, which would have let him run tandem SAS controllers or 4x 10 gig. We decided nah, no need.

I also have a Supermicro IPMI card, but those are rare, and it’s hard to talk me out of that. It’s a Matrox G200 on that one, IIRC.

I can’t find anything searching for nv200… hints?

(other than the nissan van)

Super low-end PCIe x1 Quadro with only a single DVI output.

Looks like there’s a GT 710 variant too… PCIe x1

…has VGA and HDMI too.

ZT-71304-20L