OK, thank you for your support. Tomorrow I will try installing a VM and keep it running. Do you see any problems with using this “patch” on a production server? Any problems with keeping the whole system updated?

You need to be careful not to upgrade ZFS, or to redo the patch after any upgrade.

I’m only using SPR on a workstation machine (Xeon w-3400), not a server, but I do lots of VM spin-ups on zvols. Prior to the patch, it was an unfun guessing game whether running a VM would crash my entire system. I have not triggered the crash since applying the patch.

hi sirn

I tested it and it is not working on my machine, so it is possible that my issue is a different one.

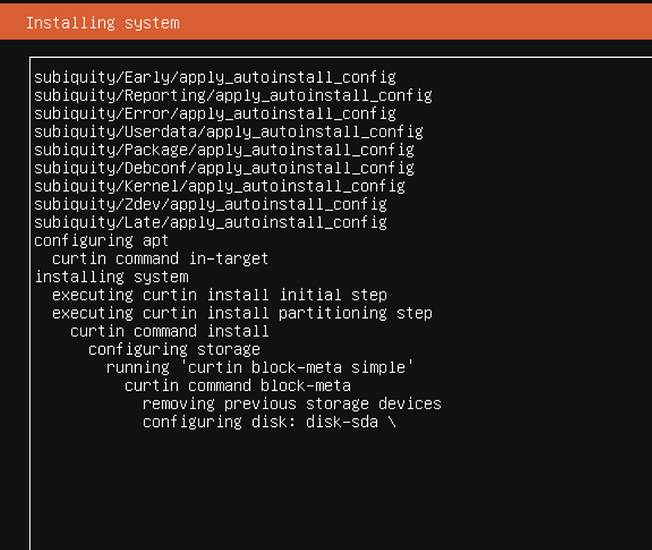

When creating/installing a blank new VM, it hangs here.

The Proxmox syslog looks like the one below. I thought it was the same issue as yours, because if I change from ZFS to another storage and place the VM disk there, it works fine.

Aug 21 08:02:01 prox2 pvedaemon[2511]: <root@pam> starting task UPID:prox2:00059A5B:0039B315:64E2FDD9:qmcreate:101:root@pam:

Aug 21 08:02:03 prox2 pvedaemon[2511]: <root@pam> end task UPID:prox2:00059A5B:0039B315:64E2FDD9:qmcreate:101:root@pam: OK

Aug 21 08:02:25 prox2 pvedaemon[2511]: <root@pam> starting task UPID:prox2:00059BF6:0039BC53:64E2FDF1:qmstart:101:root@pam:

Aug 21 08:02:25 prox2 pvedaemon[367606]: start VM 101: UPID:prox2:00059BF6:0039BC53:64E2FDF1:qmstart:101:root@pam:

Aug 21 08:02:25 prox2 systemd[1]: Created slice qemu.slice - Slice /qemu.

Aug 21 08:02:25 prox2 systemd[1]: Started 101.scope.

Aug 21 08:02:26 prox2 kernel: device tap101i0 entered promiscuous mode

Aug 21 08:02:26 prox2 kernel: vmbr0: port 2(fwpr101p0) entered blocking state

Aug 21 08:02:26 prox2 kernel: vmbr0: port 2(fwpr101p0) entered disabled state

Aug 21 08:02:26 prox2 kernel: device fwpr101p0 entered promiscuous mode

Aug 21 08:02:26 prox2 kernel: vmbr0: port 2(fwpr101p0) entered blocking state

Aug 21 08:02:26 prox2 kernel: vmbr0: port 2(fwpr101p0) entered forwarding state

Aug 21 08:02:26 prox2 kernel: fwbr101i0: port 1(fwln101i0) entered blocking state

Aug 21 08:02:26 prox2 kernel: fwbr101i0: port 1(fwln101i0) entered disabled state

Aug 21 08:02:26 prox2 kernel: device fwln101i0 entered promiscuous mode

Aug 21 08:02:26 prox2 kernel: fwbr101i0: port 1(fwln101i0) entered blocking state

Aug 21 08:02:26 prox2 kernel: fwbr101i0: port 1(fwln101i0) entered forwarding state

Aug 21 08:02:26 prox2 kernel: fwbr101i0: port 2(tap101i0) entered blocking state

Aug 21 08:02:26 prox2 kernel: fwbr101i0: port 2(tap101i0) entered disabled state

Aug 21 08:02:26 prox2 kernel: fwbr101i0: port 2(tap101i0) entered blocking state

Aug 21 08:02:26 prox2 kernel: fwbr101i0: port 2(tap101i0) entered forwarding state

Aug 21 08:02:26 prox2 pvedaemon[2511]: <root@pam> end task UPID:prox2:00059BF6:0039BC53:64E2FDF1:qmstart:101:root@pam: OK

Aug 21 08:02:26 prox2 pvedaemon[367767]: starting vnc proxy UPID:prox2:00059C97:0039BCAA:64E2FDF2:vncproxy:101:root@pam:

Aug 21 08:02:26 prox2 pvedaemon[2510]: <root@pam> starting task UPID:prox2:00059C97:0039BCAA:64E2FDF2:vncproxy:101:root@pam:

Aug 21 08:03:19 prox2 kernel: BUG: unable to handle page fault for address: ff34b2816230ccff

Aug 21 08:03:19 prox2 kernel: #PF: supervisor write access in kernel mode

Aug 21 08:03:19 prox2 kernel: #PF: error_code(0x0003) - permissions violation

Aug 21 08:03:19 prox2 kernel: PGD 1abac01067 P4D 1abac02067 PUD 10654a063 PMD 1066de063 PTE 800000012230c161

Aug 21 08:03:19 prox2 kernel: Oops: 0003 [#1] PREEMPT SMP NOPTI

Aug 21 08:03:19 prox2 kernel: CPU: 1 PID: 368517 Comm: z_wr_iss Tainted: P O 6.2.16-8-pve #1

Aug 21 08:03:19 prox2 kernel: Hardware name: Supermicro SYS-621P-TR/X13DEI, BIOS 1.3a 06/02/2023

Aug 21 08:03:19 prox2 kernel: RIP: 0010:kfpu_begin+0x31/0xa0 [zcommon]

Aug 21 08:03:19 prox2 kernel: Code: 3f 48 89 e5 fa 0f 1f 44 00 00 48 8b 15 88 89 00 00 65 8b 05 6d 95 52 3f 48 98 48 8b 0c c2 0f 1f 44 00 00 b8 ff ff ff ff 89 c2 <0f> c7 29 5d 31 c0 31 d2 31 c9 31 f6 31 ff c3 cc cc cc cc 0f 1f 44

Aug 21 08:03:19 prox2 kernel: RSP: 0018:ff4fc18fc9f37930 EFLAGS: 00010082

Aug 21 08:03:19 prox2 kernel: RAX: 00000000ffffffff RBX: ff34b290de864000 RCX: ff34b2816230a000

Aug 21 08:03:19 prox2 kernel: RDX: 00000000ffffffff RSI: ff34b290de864000 RDI: ff4fc18fc9f37a80

Aug 21 08:03:19 prox2 kernel: RBP: ff4fc18fc9f37930 R08: 0000000000000000 R09: 0000000000000000

Aug 21 08:03:19 prox2 kernel: R10: 0000000000000000 R11: 0000000000000000 R12: ff34b290de865000

Aug 21 08:03:19 prox2 kernel: R13: ff4fc18fc9f37a80 R14: 0000000000001000 R15: 0000000000000000

Aug 21 08:03:19 prox2 kernel: FS: 0000000000000000(0000) GS:ff34b2907fa40000(0000) knlGS:0000000000000000

Aug 21 08:03:19 prox2 kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033

Aug 21 08:03:19 prox2 kernel: CR2: ff34b2816230ccff CR3: 0000001ab9210006 CR4: 0000000000773ee0

Aug 21 08:03:19 prox2 kernel: DR0: 0000000000000000 DR1: 0000000000000000 DR2: 0000000000000000

Aug 21 08:03:19 prox2 kernel: DR3: 0000000000000000 DR6: 00000000fffe07f0 DR7: 0000000000000400

Aug 21 08:03:19 prox2 kernel: PKRU: 55555554

Aug 21 08:03:19 prox2 kernel: Call Trace:

Aug 21 08:03:19 prox2 kernel: <TASK>

Aug 21 08:03:19 prox2 kernel: fletcher_4_avx512f_native+0x1d/0xb0 [zcommon]

Aug 21 08:03:19 prox2 kernel: abd_fletcher_4_iter+0x71/0xe0 [zcommon]

Aug 21 08:03:19 prox2 kernel: abd_iterate_func+0x104/0x1e0 [zfs]

Aug 21 08:03:19 prox2 kernel: ? __pfx_abd_fletcher_4_iter+0x10/0x10 [zcommon]

Aug 21 08:03:19 prox2 kernel: ? __pfx_abd_fletcher_4_native+0x10/0x10 [zfs]

Aug 21 08:03:19 prox2 kernel: abd_fletcher_4_native+0x89/0xd0 [zfs]

Aug 21 08:03:19 prox2 kernel: ? kmem_cache_alloc+0x18e/0x360

Aug 21 08:03:19 prox2 kernel: zio_checksum_compute+0x154/0x550 [zfs]

Aug 21 08:03:19 prox2 kernel: ? __kmem_cache_alloc_node+0x19d/0x340

Aug 21 08:03:19 prox2 kernel: ? spl_kmem_alloc+0xc3/0x120 [spl]

Aug 21 08:03:19 prox2 kernel: ? spl_kmem_alloc+0xc3/0x120 [spl]

Aug 21 08:03:19 prox2 kernel: ? __kmalloc_node+0x52/0xe0

Aug 21 08:03:19 prox2 kernel: ? spl_kmem_alloc+0xc3/0x120 [spl]

Aug 21 08:03:19 prox2 kernel: zio_checksum_generate+0x4d/0x80 [zfs]

Aug 21 08:03:19 prox2 kernel: zio_execute+0x94/0x170 [zfs]

Aug 21 08:03:19 prox2 kernel: taskq_thread+0x2ac/0x4d0 [spl]

Aug 21 08:03:19 prox2 kernel: ? __pfx_default_wake_function+0x10/0x10

Aug 21 08:03:19 prox2 kernel: ? __pfx_zio_execute+0x10/0x10 [zfs]

Aug 21 08:03:19 prox2 kernel: ? __pfx_taskq_thread+0x10/0x10 [spl]

Aug 21 08:03:19 prox2 kernel: kthread+0xe6/0x110

Aug 21 08:03:19 prox2 kernel: ? __pfx_kthread+0x10/0x10

Aug 21 08:03:19 prox2 kernel: ret_from_fork+0x29/0x50

Aug 21 08:03:19 prox2 kernel: </TASK>

Aug 21 08:03:19 prox2 kernel: Modules linked in: tcp_diag inet_diag veth ebtable_filter ebtables ip_set ip6table_raw iptable_raw ip6table_filter ip6_tables iptable_filter bpfilter nf_tables bonding tls softdog sunrpc nfnetlink_log binfmt_misc nfnetlink intel_rapl_msr intel_rapl_common intel_uncore_frequency intel_uncore_frequency_common intel_ifs i10nm_edac nfit x86_pkg_temp_thermal intel_powerclamp coretemp kvm_intel kvm ipmi_ssif irqbypass crct10dif_pclmul polyval_clmulni polyval_generic ghash_clmulni_intel sha512_ssse3 aesni_intel crypto_simd cryptd ast drm_shmem_helper pmt_telemetry pmt_crashlog cmdlinepart drm_kms_helper rapl pmt_class intel_sdsi i2c_algo_bit spi_nor intel_cstate syscopyarea mei_me idxd isst_if_mbox_pci isst_if_mmio sysfillrect pcspkr mtd isst_if_common intel_vsec idxd_bus mei sysimgblt acpi_ipmi ipmi_si ipmi_devintf ipmi_msghandler acpi_power_meter acpi_pad joydev input_leds pfr_telemetry pfr_update mac_hid vhost_net vhost vhost_iotlb tap efi_pstore drm dmi_sysfs ip_tables x_tables

Aug 21 08:03:19 prox2 kernel: autofs4 zfs(PO) zunicode(PO) zzstd(O) zlua(O) zavl(PO) icp(PO) zcommon(PO) znvpair(PO) spl(O) btrfs blake2b_generic xor raid6_pq libcrc32c simplefb rndis_host cdc_ether usbnet mii usbkbd hid_generic nvme usbmouse nvme_core nvme_common usbhid hid xhci_pci xhci_pci_renesas ahci i2c_i801 spi_intel_pci crc32_pclmul qlcnic xhci_hcd tg3 vmd i2c_ismt spi_intel libahci i2c_smbus wmi pinctrl_emmitsburg

Aug 21 08:03:19 prox2 kernel: CR2: ff34b2816230ccff

Aug 21 08:03:19 prox2 kernel: ---[ end trace 0000000000000000 ]---

Aug 21 08:03:19 prox2 kernel: RIP: 0010:kfpu_begin+0x31/0xa0 [zcommon]

Aug 21 08:03:19 prox2 kernel: Code: 3f 48 89 e5 fa 0f 1f 44 00 00 48 8b 15 88 89 00 00 65 8b 05 6d 95 52 3f 48 98 48 8b 0c c2 0f 1f 44 00 00 b8 ff ff ff ff 89 c2 <0f> c7 29 5d 31 c0 31 d2 31 c9 31 f6 31 ff c3 cc cc cc cc 0f 1f 44

Aug 21 08:03:19 prox2 kernel: RSP: 0018:ff4fc18fc9f37930 EFLAGS: 00010082

Aug 21 08:03:19 prox2 kernel: RAX: 00000000ffffffff RBX: ff34b290de864000 RCX: ff34b2816230a000

Aug 21 08:03:19 prox2 kernel: RDX: 00000000ffffffff RSI: ff34b290de864000 RDI: ff4fc18fc9f37a80

Aug 21 08:03:19 prox2 kernel: RBP: ff4fc18fc9f37930 R08: 0000000000000000 R09: 0000000000000000

Aug 21 08:03:19 prox2 kernel: R10: 0000000000000000 R11: 0000000000000000 R12: ff34b290de865000

Aug 21 08:03:19 prox2 kernel: R13: ff4fc18fc9f37a80 R14: 0000000000001000 R15: 0000000000000000

Aug 21 08:03:19 prox2 kernel: FS: 0000000000000000(0000) GS:ff34b2907fa40000(0000) knlGS:0000000000000000

Aug 21 08:03:19 prox2 kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033

Aug 21 08:03:19 prox2 kernel: CR2: ff34b2816230ccff CR3: 0000001ab9210006 CR4: 0000000000773ee0

Aug 21 08:03:19 prox2 kernel: DR0: 0000000000000000 DR1: 0000000000000000 DR2: 0000000000000000

Aug 21 08:03:19 prox2 kernel: DR3: 0000000000000000 DR6: 00000000fffe07f0 DR7: 0000000000000400

Aug 21 08:03:19 prox2 kernel: PKRU: 55555554

Aug 21 08:03:19 prox2 kernel: note: z_wr_iss[368517] exited with irqs disabled

Aug 21 08:03:19 prox2 kernel: note: z_wr_iss[368517] exited with preempt_count 1

It does look like the same issue. Maybe the patching instructions are incomplete. Let me try it again and I’ll get back to you.

Oops, I just realized that Proxmox doesn’t use DKMS to build ZFS modules, unlike vanilla Debian. Sadly, this makes patching a lot harder. Perhaps you could try adding clearcpuid=600 to /etc/kernel/cmdline, then running /usr/sbin/proxmox-boot-tool refresh and rebooting.
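A minimal sketch of that edit, assuming the host boots through proxmox-boot-tool so that /etc/kernel/cmdline is a single-line file (hosts that boot through GRUB keep their parameters elsewhere). The helper name add_kernel_param is made up for illustration and is not part of Proxmox. It is meant to be tried on a copy first.

```shell
#!/bin/sh
# Hypothetical helper: append a kernel parameter to a single-line cmdline
# file unless it is already there. Not part of Proxmox.
add_kernel_param() {
    file=$1
    param=$2
    # The whole kernel command line lives on the first line of the file.
    current=$(head -n 1 "$file")
    case " $current " in
        *" $param "*)
            # Already present as a whole word: leave the file untouched.
            return 0
            ;;
    esac
    if [ -z "$current" ]; then
        printf '%s\n' "$param" > "$file"
    else
        printf '%s %s\n' "$current" "$param" > "$file"
    fi
}

# Try it on a copy first and inspect the result by hand:
#   cp /etc/kernel/cmdline /tmp/cmdline.test
#   add_kernel_param /tmp/cmdline.test clearcpuid=600
#   cat /tmp/cmdline.test
# Then on the real file (as root), followed by the refresh and a reboot:
#   add_kernel_param /etc/kernel/cmdline clearcpuid=600
#   /usr/sbin/proxmox-boot-tool refresh
```

Running the helper twice leaves a single clearcpuid=600 in the file, so it is safe to repeat after an interrupted attempt.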

After reboot, you can confirm whether clearcpuid is active or not by running:

cat /proc/cmdline

It should contain clearcpuid=600 here. If this really doesn’t work, I think you may be better off sticking with Proxmox 7 for the time being (until the aforementioned patch is merged, released, and included in Proxmox).
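The check above could be scripted roughly as follows. This is only a sketch: has_kernel_param is a made-up helper name, and the second check assumes that feature number 600 is the one the kernel lists as amx_tile in /proc/cpuinfo on this kernel version.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given parameter appears as a whole
# word in a kernel command line file (default: /proc/cmdline).
has_kernel_param() {
    param=$1
    file=${2:-/proc/cmdline}
    # Put each parameter on its own line, then match the full line exactly.
    tr ' ' '\n' < "$file" | grep -qxF -- "$param"
}

if has_kernel_param clearcpuid=600; then
    echo "clearcpuid=600 is active"
else
    echo "clearcpuid=600 is NOT active"
fi

# Assumption: with the feature cleared, amx_tile should no longer be listed.
if grep -qw amx_tile /proc/cpuinfo; then
    echo "amx_tile is still advertised"
else
    echo "amx_tile is not advertised"
fi
```

Matching whole words avoids a false positive from a different value such as clearcpuid=6000.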

Meanwhile, I’ll look into how to properly patch Proxmox kernel to include the patch.

thank you for your answer, my cmdline is below

root@prox2:~# cat /proc/cmdline

initrd=\EFI\proxmox\6.2.16-8-pve\initrd.img-6.2.16-8-pve root=ZFS=rpool/ROOT/pve-1 boot=zfs clearcpuid=600

After the refresh and reboot I tried again to install a new VM on my ZFS storage, and right now it is working… so I will test it again tomorrow. Thank you for your help! Strange that this did not work on my first try!

Can you tell me why this issue happens? Is this a problem with a hardware component, or something else?

It seems like either a bug in the Linux kernel or a bug in the CPU. It’s not uncommon for CPUs to have hardware bugs; it’s just that this platform is very new, with lots of new stuff, and not many people have access to it.

For a more technical explanation…

On these CPUs, there are instructions to save and restore FPU state (the XSAVE/XRSTOR family). This state includes anything from the legacy x87 floating-point registers to newer ones such as AVX-512. On Sapphire Rapids, Intel introduced a new set of instructions called AMX (Advanced Matrix Extensions), and added support for saving/restoring its state (called TILEDATA) with these instructions.

There appears to be a bug that corrupts this state save/restore, triggered by some unlucky combination involving saving TILEDATA and running a VM. Unfortunately, ZFS seems to hit that unlucky combination.

Fortunately, ZFS doesn’t use this TILEDATA (it’s saved as part of the default “save everything” when saving/restoring state). So a fix is either to exclude TILEDATA from being saved/restored (the patch), or to disable AMX altogether (clearcpuid).
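The two workarounds can be illustrated with a little shell arithmetic. This is a sketch of the idea, not the actual patch. It assumes the kernel's feature numbering of word * 32 + bit (which can change between kernel versions) and the XSAVE component bitmap layout in which bit 17 is TILECFG and bit 18 is TILEDATA. The variable names are made up for illustration.

```shell
#!/bin/sh
# clearcpuid takes a feature number laid out as word * 32 + bit.
feature=600
echo "word $((feature / 32)), bit $((feature % 32))"

# XSAVE component bitmap (assumed layout): bit 18 selects TILEDATA.
XFEATURE_TILEDATA=$((1 << 18))

# The register dump shows EAX = EDX = 0xffffffff at the faulting instruction,
# i.e. "save everything". Clearing bit 18 in the low half leaves TILEDATA out.
save_all=$((0xffffffff))
save_without_tiledata=$((save_all & ~XFEATURE_TILEDATA))
printf 'mask without TILEDATA: 0x%08x\n' "$save_without_tiledata"
```

The first line printed is "word 18, bit 24", and the mask comes out as 0xfffbffff.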

Hi @sirn, I have now set everything up… From time to time I get the issue below on my Proxmox. Is it possible that this comes from this “fix”?

The number of I/O errors associated with a ZFS device exceeded

acceptable levels. ZFS has marked the device as faulted.

impact: Fault tolerance of the pool may be compromised.

eid: 1152

class: statechange

state: FAULTED

host: prox2

time: 2023-09-02 10:19:26+0200

vpath: /dev/disk/by-id/ata-WDC_WD60EFAX-68JH4N1_WD-WX32D90PC0C7-part1

vphys: pci-0000:00:18.0-ata-2.0

vguid: 0xC83F809F5D8DA8DC

devid: ata-WDC_WD60EFAX-68JH4N1_WD-WX32D90PC0C7-part1

pool: datastore-012 (0x25896F1E1982A3C2)

I have never seen this error in all the time I have been using the patch. This looks like a problem with one of your drives. Perhaps try zpool scrub POOLNAME, and if it doesn’t go away, consider replacing the drive.
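After a scrub, the rows worth looking at in zpool status are the ones that are not ONLINE. A rough sketch of a filter for that follows. The helper name list_unhealthy is made up, and it assumes the usual NAME/STATE table layout of zpool status output (hot spares, which report AVAIL, would also be listed).

```shell
#!/bin/sh
# Hypothetical helper: read `zpool status` output on stdin and print the
# name and state of every row in the config table that is not ONLINE.
list_unhealthy() {
    awk '
        # The table starts after its header row.
        $1 == "NAME" && $2 == "STATE" { in_table = 1; next }
        # A blank line ends the table.
        in_table && NF == 0 { in_table = 0 }
        # Rows have at least a name and a state.
        in_table && NF >= 2 && $2 != "ONLINE" { print $1, $2 }
    '
}

# Usage on the host (pool name taken from the alert above):
#   zpool scrub datastore-012
#   zpool status datastore-012 | list_unhealthy
```

An empty result means every row in the table reported ONLINE at the time of the check.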

I have now run the scrub multiple times and mostly everything runs fine… One time a FAULT message came up. The strange thing is that it is always a different drive… We use 3 disks, so I don’t think it is a hardware issue…

That’s weird, but I don’t think this is related to SPR. I think you may have better luck creating a new thread about it. It might even be an HBA failure or something.

On a brighter note, the SPR patch by the maintainer was recently merged. So presumably manual patching and clearcpuid won’t be needed any more from ZFS 2.1.13 or ZFS 2.2 (I don’t know which one is next).

OpenZFS 2.2 has been released and includes a fix for SPR.

It’ll take a while until this is ready for Proxmox and Debian, but I’ll mark this thread as solved. Thank you everyone!