What a ride!

As a Linux noob, I am sure this process would have gone more smoothly with a bit more knowledge of how the OS works. If I can manage it, I am sure anyone can with a little perseverance. I have edited this post to include the fixes I found for the problems I encountered; the original post is retained below the edit.

Intent

I wanted to set up a VM that could pass as a “normal” machine in as many respects as possible, for testing malicious code in a safe environment. Many malware samples can detect that they are running in a VM and suppress themselves. I realize that passing through certain devices exposes the host to some security risks; more on that in a later post.

As a bonus, it should be trivial to spin up a non-work-related VM for the odd game or two.

Sources and Guides

There are many well-written guides on this forum and across the internet covering the initial setup, so I will not reproduce them in this post. I am glad to see interest in this topic, and the process only gets easier with each update. Here are the resources I found most useful for my install:

Play games in Windows on Linux! PCI passthrough quick guide

https://www.youtube.com/watch?v=hF153sXm4Ws

https://heiko-sieger.info/running-windows-10-on-linux-using-kvm-with-vga-passthrough/

http://mathiashueber.com/amd-ryzen-based-passthrough-setup-between-xubuntu-16-04-and-windows-10/

Hardware

- Ryzen 7 2700X

- Asus ROG Crosshair VI Hero X370

- 64GB RAM DDR4-3200 running at 3200MHz

- Nvidia GeForce 710b (Host GPU, PCIe slot 1)*

- Nvidia GeForce GTX 1080 (Guest GPU, PCIe slot 2)

- 250GB M.2 SSD for host system

- 500GB SSD for guest images split into 50GB partitions

On to troubles and fixes:

Grub IOMMU set, still no IOMMU groups listed!

Make sure your motherboard BIOS is up to date. The C6H in this build shipped in April 2018 with a BIOS that did not include an IOMMU setting. Updating to the most recent BIOS fixed this issue.

C6H IOMMU: Advanced > AMD CBS > NBIO Common Options > NB Configuration > IOMMU = Enabled

Working BIOS: 6401

More info: https://www.overclock.net/forum/11-amd-motherboards/1625131-asus-rog-crosshair-vi-question.html
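A quick way to confirm the BIOS change took effect is to list the IOMMU groups the kernel created. This is a minimal shell sketch (list_iommu_groups is a hypothetical helper name); it only reads /sys/kernel/iommu_groups, which is empty or missing when IOMMU is off. Pass any address it prints to lspci -nns to see the device name.

```sh
#!/bin/sh
# Print "IOMMU Group <n>: <PCI address>" for every device the kernel has
# placed in an IOMMU group. Prints nothing if IOMMU is disabled.
list_iommu_groups() {
  root=${1:-/sys/kernel/iommu_groups}   # optional override, useful for testing
  for dev in "$root"/*/devices/*; do
    [ -e "$dev" ] || continue           # the glob matched nothing
    # .../iommu_groups/<n>/devices/<addr> -> <n>
    group=$(basename "$(dirname "$(dirname "$dev")")")
    echo "IOMMU Group $group: $(basename "$dev")"
  done
}

list_iommu_groups
```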

Guest hangs at 20% CPU utilization, NO VIDEO

Something is causing the VM to hang. Check the guest's QEMU log:

# cat /var/log/libvirt/qemu/<VM_NAME>.log
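The full log can be long. To narrow it down, a small filter like this may help. It is a sketch: the function name vm_log_problems and the keyword list are my own assumptions, not anything libvirt defines.

```sh
#!/bin/sh
# Show the last N (default 20) log lines that mention errors, warnings,
# failures, PCI BARs or mmap. The keyword list is a guess; widen as needed.
# Usage: vm_log_problems /var/log/libvirt/qemu/<VM_NAME>.log [N]
vm_log_problems() {
  log=$1
  count=${2:-20}
  # -i: case-insensitive, -n: prefix each hit with its line number
  grep -inE 'error|warn|fail|bar ?[0-9]|mmap' "$log" | tail -n "$count"
}
```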

The last few lines after the settings block show where things are most likely failing, which brings us to…

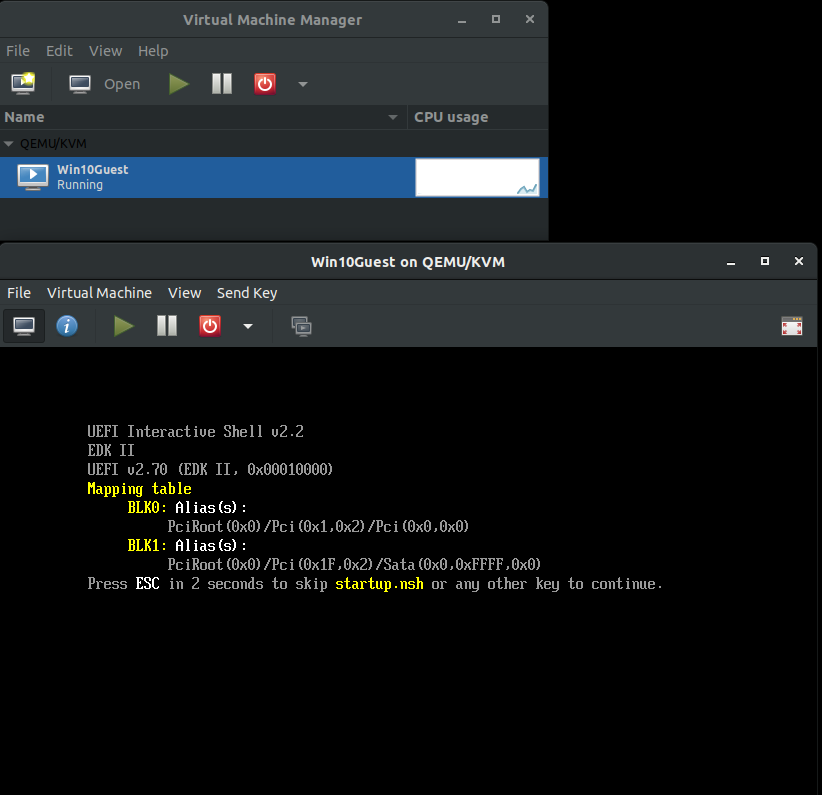

Guest hangs at EFI Shell

Your boot order is not set up correctly, or a boot device is not enabled in virt-manager.

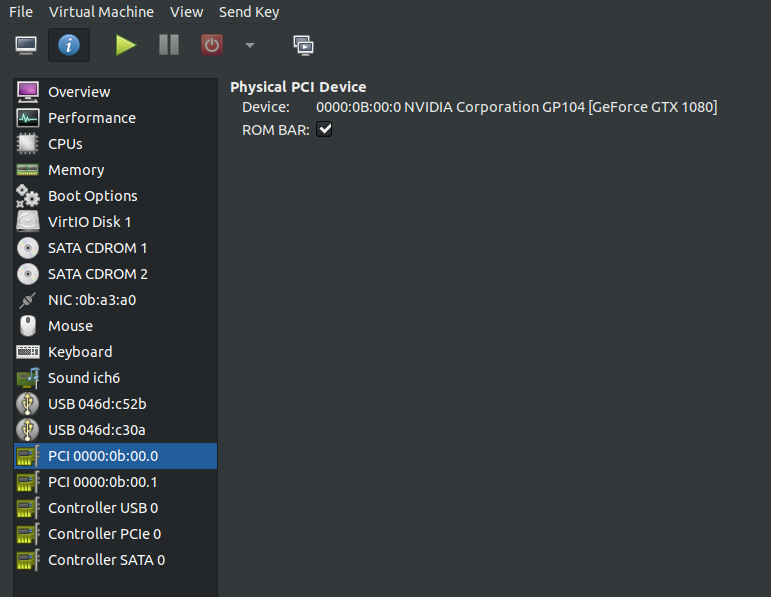

Inside virt-manager:

Boot Options

- SATA CDROM 1 (loaded with your OS .iso)

- VirtIO Disk 1 (your HDD or SSD volume)

- SATA CDROM 2 (loaded with virtio drivers)

Start the guest. If it hangs at the EFI shell, type “exit” to reach the firmware (BIOS) menu. Configure it to wait 5 seconds for a boot device, and make sure the boot order matches the order in virt-manager. Choose “Reset”. Press a key when prompted, or just keep hitting Enter until you see the OS loading screen.

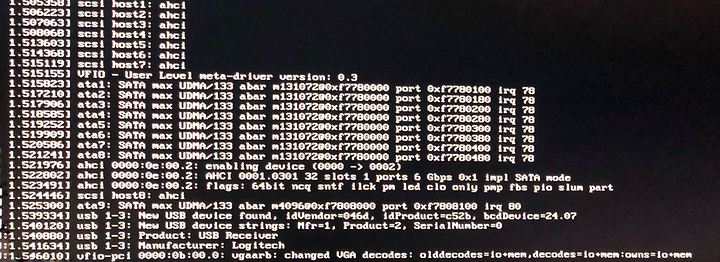

BAR3, MMAP error

<VM_NAME>.log shows BAR3 and/or mmap errors when QEMU tries to initialize the guest GPU. In my case, this was because the guest GPU was in PCIE_SLOT_1: regardless of your IOMMU group and vfio driver settings, the motherboard initializes the card in slot 1 as the primary display. Swapping the cards fixed the issue.
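Before physically swapping cards, you can check which GPU the firmware initialized as primary: the kernel exposes this per device as the boot_vga attribute in sysfs (1 for the boot display). A minimal sketch, assuming the standard /sys/bus/pci/devices layout; show_boot_vga is a hypothetical helper name. After the swap, the guest card should ideally report boot_vga=0.

```sh
#!/bin/sh
# For every display-class PCI device, print its address and boot_vga flag.
# boot_vga = 1 marks the card the firmware used as the boot display.
show_boot_vga() {
  root=${1:-/sys/bus/pci/devices}
  for dev in "$root"/*; do
    [ -r "$dev/class" ] || continue
    case "$(cat "$dev/class")" in
      0x03*) ;;                 # class 0x03xxxx = display controller
      *) continue ;;
    esac
    flag=$(cat "$dev/boot_vga" 2>/dev/null || echo "?")
    echo "$(basename "$dev") boot_vga=$flag"
  done
}

show_boot_vga
```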

If you are worried about the guest card running at x8 in slot 2, fear not! Even a GTX 1080 cannot fully saturate a PCIe 3.0 x8 slot.

Source:

https://www.gamersnexus.net/guides/2488-pci-e-3-x8-vs-x16-performance-impact-on-gpus
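For a rough sense of the headroom, here is the raw link-rate arithmetic, assuming PCIe 3.0's 8 GT/s per lane and 128b/130b encoding. Protocol overhead lowers the usable figure somewhat, so treat these as upper bounds:

```latex
\begin{aligned}
\text{per lane} &= 8\ \text{GT/s} \times \tfrac{128}{130} \div 8\ \text{bits/byte} \approx 0.985\ \text{GB/s} \\
\text{x8}       &\approx 8 \times 0.985\ \text{GB/s} \approx 7.9\ \text{GB/s} \\
\text{x16}      &\approx 16 \times 0.985\ \text{GB/s} \approx 15.8\ \text{GB/s}
\end{aligned}
```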

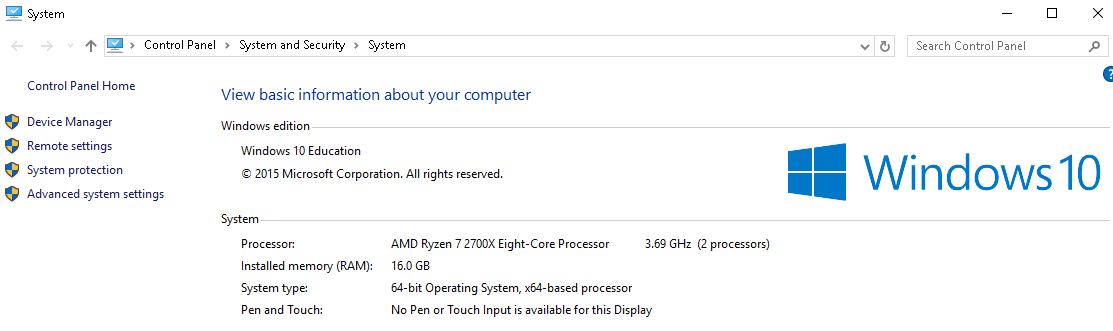

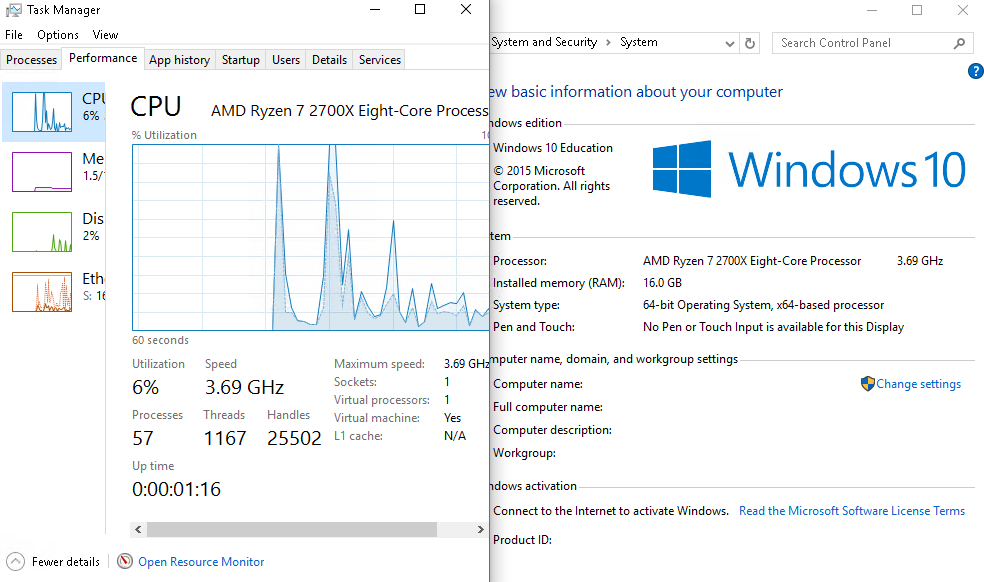

Tainted CPU / Windows only shows 1 virtualized CPU

<VM_NAME>.log shows a tainted CPU and/or Windows Task Manager shows a 1- or 2-core virtual CPU, regardless of VM settings.

Fix: Manually edit the CPU topology to reflect the host CPU configuration. In the case of the Ryzen 7 2700X:

Model: EPYC-IBPB

Topology:

- Sockets: 1

- Cores: 1-8 (I used 6)

- Threads: 2
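The topology values multiply into the vCPU count the guest sees (1 × 6 × 2 = 12 for my settings). A small sketch of that arithmetic; vcpu_count and check_topology are hypothetical helpers, and nproc supplies the host's logical CPU count when no fourth argument is given. As far as I know, libvirt expects this product to match the domain's vcpu count.

```sh
#!/bin/sh
# Guest logical CPUs = sockets x cores x threads.
vcpu_count() {
  echo $(( $1 * $2 * $3 ))
}

# Print the vCPU total, or fail if it exceeds the host's logical CPUs.
check_topology() {
  sockets=$1 cores=$2 threads=$3
  host=${4:-$(nproc)}            # default: logical CPUs on this machine
  total=$(vcpu_count "$sockets" "$cores" "$threads")
  if [ "$total" -gt "$host" ]; then
    echo "$sockets/$cores/$threads = $total vCPUs exceeds $host host CPUs" >&2
    return 1
  fi
  echo "$total"
}

check_topology 1 6 2 16   # 6 cores x 2 threads on a 16-thread 2700X -> 12
```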

Guest shows correct core count but still shows virtualized CPU

Run # virsh edit <VM_NAME>

Edit to include the following:

<domain type='kvm' xmlns:qemu='http://libvirt.org/schemas/domain/qemu/1.0'>

...

<features>

<hyperv>

...

</hyperv>

<kvm>

<hidden state='on'/>

</kvm>

...

</features>

...

</devices>

<qemu:commandline>

<qemu:arg value='-cpu'/>

<qemu:arg value='host,hv_time,kvm=off,hv_vendor_id=null,-hypervisor'/>

</qemu:commandline>

</domain>

Be sure to include -hypervisor: it clears the hypervisor CPUID flag, so the guest no longer reports a virtualized CPU.
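To confirm the edit stuck, you can dump the live definition and look for the three key pieces. A minimal sketch; check_vm_hiding is a hypothetical helper, and the exact-string match assumes libvirt's usual single-quoted attribute formatting, so adjust the strings if your XML differs.

```sh
#!/bin/sh
# Read domain XML on stdin and report whether each hiding option is present.
# Usage: virsh dumpxml <VM_NAME> | check_vm_hiding
check_vm_hiding() {
  xml=$(cat)
  status=0
  for needle in "<hidden state='on'/>" "kvm=off" "-hypervisor"; do
    # -F: fixed string; "--" stops "-hypervisor" being parsed as an option
    if printf '%s\n' "$xml" | grep -qF -- "$needle"; then
      echo "ok:      $needle"
    else
      echo "missing: $needle"
      status=1
    fi
  done
  return "$status"
}
```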

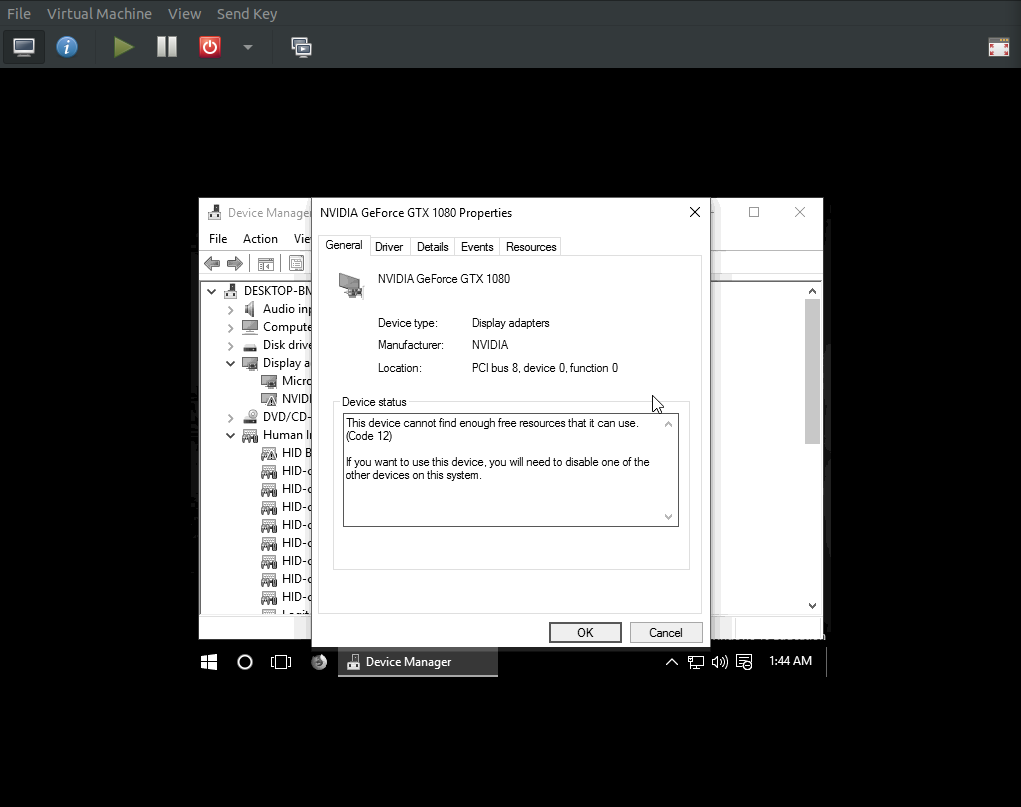

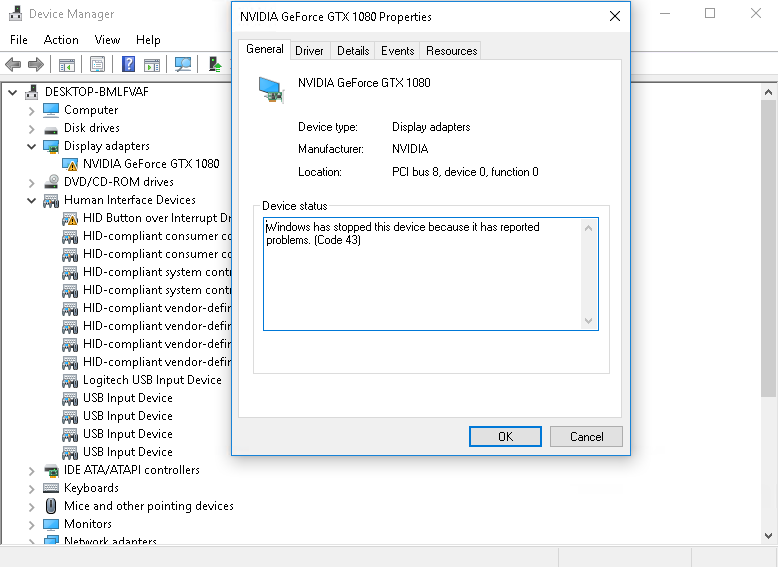

Error 43

This one vexed me for some time. Even with all of the supposedly correct settings, the GPU was still being flagged. After configuring <VM_NAME>.xml correctly, make sure the guest GPU is in PCIe slot 2. Doing so fixed the issue immediately.

Device Manager Warnings

Human Interface Devices:

!HID-compliant consumer control device!

- Fix: right-click the device > properties > Driver > Update Driver > Browse My Computer… > Let me pick from a list > Generic

System Devices:

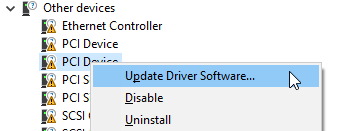

!PCI Device!

- Fix: Right-click the device you want to identify, select Properties, and open the Details tab. Select the Hardware Ids property in the list.

Match the hardware ID with one from the list in the link above (scroll down a bit).

- Right-click the device whose driver you wish to update. This example installs the balloon driver, so right-click PCI Device.

- Open the driver update wizard: from the pop-up menu, select Update Driver Software.

- Specify how to find the driver: the first page of the wizard asks how you want to search for driver software. Click Browse my computer for driver software.

- Navigate to your virtio CD and find the matching drivers.

On my system, the only flagged device was the one needing the Balloon driver for Windows 10, found in the Balloon/w10/amd directory of the virtio CD.
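If several PCI devices are flagged, matching hardware IDs by hand gets tedious. This sketch maps a Hardware Ids string to a virtio-win driver folder. The ID table (virtio vendor 1AF4, legacy 10xx and modern 104x device IDs) and the folder names are my assumptions based on the usual virtio-win layout; double-check them against the list you are matching. virtio_driver_dir is a hypothetical helper name.

```sh
#!/bin/sh
# Map a Windows "Hardware Ids" value to the virtio-win folder for its driver.
virtio_driver_dir() {
  id=$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')   # normalize case
  case "$id" in
    *VEN_1AF4*) ;;                                      # virtio vendor ID
    *) echo "not-virtio"; return 1 ;;
  esac
  dev=$(printf '%s' "${id#*DEV_}" | cut -c1-4)          # 4-digit device ID
  case "$dev" in
    1000|1041) echo "NetKVM" ;;      # network
    1001|1042) echo "viostor" ;;     # block
    1002|1045) echo "Balloon" ;;     # memory balloon
    1003|1043) echo "vioserial" ;;   # serial console
    1004|1048) echo "vioscsi" ;;     # SCSI
    1005|1044) echo "viorng" ;;      # entropy source
    *) echo "unknown"; return 1 ;;
  esac
}

virtio_driver_dir 'PCI\VEN_1AF4&DEV_1002'   # prints Balloon
```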

No sound device detected

Sound was not required for my purposes, but I found that some software checks for an installed sound device. I used the following guide to enable sound:

http://mathiashueber.com/virtual-machine-audio-setup-get-pulse-audio-working/

And… that's it! There are still some minor kinks, but I will update this post as I find solutions. For the time being, the guest VM is running Windows 10 and has survived benchmarking. All devices function as intended, and it can even run a game or two.

TO-DO:

- USB Network Dongle Passthrough

- USB 3.0 Block Passthrough

- CPU Pinning for Guest

- SSD Performance is … sporadic

- Hide/rename mobo, chipset, various vfio and OVMF titles

- CPU-Z shows no RAM installed…

- Looking-Glass?? Windows error: Run in foreground (-f)

OP

Hello Level 1 Forum,

Long-time lurker, first-time poster. I love the work Level 1 Techs has done to bring a community of like-minded geekery together. I am hoping to find some help setting up a Windows 10 guest on an Ubuntu host. When it comes to Linux I am still a bit of a child, so please forgive my ignorance as I learn.

The intent is to run the Ubuntu host from an NVMe drive on the Nvidia 710b, and pass 4 CPU cores, the Nvidia 1080, a SATA SSD, and various USB devices through to the Windows guest. For now I am just focusing on getting the GPU and the VM guest working before worrying about USB devices.

PROBLEM:

I keep getting stuck at the IOMMU setup stage. The guest GPU is not using the vfio-pci kernel driver; it shows the nvidia driver instead:

0b:00.0 VGA compatible controller [0300]: NVIDIA Corporation GP104 [GeForce GTX 1080] [10de:1b80] (rev a1) (prog-if 00 [VGA controller])

Subsystem: eVga.com. Corp. GP104 [GeForce GTX 1080] [3842:6288]

Flags: bus master, fast devsel, latency 0, IRQ 88

Memory at f6000000 (32-bit, non-prefetchable) [size=16M]

Memory at d0000000 (64-bit, prefetchable) [size=256M]

Memory at e0000000 (64-bit, prefetchable) [size=32M]

I/O ports at d000 [size=128]

[virtual] Expansion ROM at 000c0000 [disabled] [size=128K]

Capabilities:

Kernel driver in use: nvidia

Kernel modules: nvidiafb, nouveau, nvidia_drm, nvidia

0b:00.1 Audio device [0403]: NVIDIA Corporation GP104 High Definition Audio Controller [10de:10f0] (rev a1)

Subsystem: eVga.com. Corp. GP104 High Definition Audio Controller [3842:6288]

Flags: bus master, fast devsel, latency 0, IRQ 10

Memory at f7080000 (32-bit, non-prefetchable) [size=16K]

Capabilities:

Kernel driver in use: vfio-pci

Kernel modules: snd_hda_intel

GUIDES:

I could not link the guides I used: the forum returned an error because new users cannot post links.

Writing this post helped me double-check my settings, to no avail. My gut says I am missing something fundamental here, or have some syntax mixed up. My process is below.

HARDWARE AND OS:

System:

Ryzen2700

ASUS ROG VI Hero

nVidia 1080 [pci slot 1, 3x monitors]

nVidia 710b [pci slot 2, 3x monitors]

OS:

Ubuntu 18.04; Kernel 4.18.19

Windows10 Education Edition

IOMMU Device Groups:

IOMMU Group 16 0b:00.0 VGA compatible controller [0300]: NVIDIA Corporation GP104 [GeForce GTX 1080] [10de:1b80] (rev a1)

IOMMU Group 16 0b:00.1 Audio device [0403]: NVIDIA Corporation GP104 High Definition Audio Controller [10de:10f0] (rev a1)
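The ids= value used in the setup below is just the vendor:device pairs from these lines. A sketch that extracts them (vfio_ids is a hypothetical helper name). Feed it lspci -nn style lines rather than lspci -nnv output, because the verbose output also contains Subsystem IDs.

```sh
#!/bin/sh
# Collect the last [xxxx:xxxx] on each input line (the vendor:device pair)
# and join them with commas, the format vfio-pci's ids= option expects.
# Usage: lspci -nn -s 0b:00 | vfio_ids
vfio_ids() {
  sed -n 's/.*\[\([0-9a-fA-F]\{4\}:[0-9a-fA-F]\{4\}\)\].*/\1/p' | paste -s -d, -
}

printf '%s\n' \
  'IOMMU Group 16 0b:00.0 VGA compatible controller [0300]: NVIDIA Corporation GP104 [GeForce GTX 1080] [10de:1b80] (rev a1)' \
  'IOMMU Group 16 0b:00.1 Audio device [0403]: NVIDIA Corporation GP104 High Definition Audio Controller [10de:10f0] (rev a1)' |
  vfio_ids   # prints 10de:1b80,10de:10f0
```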

SETUP PROCESS:

#nano /etc/default/grub

GRUB_DEFAULT=0

GRUB_TIMEOUT_STYLE=MENU

GRUB_TIMEOUT=10

GRUB_DISTRIBUTOR=`lsb_release -i -s 2> /dev/null || echo Debian`

GRUB_CMDLINE_LINUX_DEFAULT="iommu=1 amd_iommu=on"

GRUB_CMDLINE_LINUX=""

#nano /etc/initramfs-tools/modules

vfio

vfio_iommu_type1

vfio_virqfd

options vfio_pci ids=10de:1b80,10de:10f0

vfio_pci ids=10de:1b80,10de:10f0

vfio_pci

GP104

#nano /etc/modules

vfio

vfio_iommu_type1

vfio_pci ids=10de:1b80,10de:10f0

#nano /etc/modprobe.d/vfio.conf

Options vfio_pci ids=10de:1b80,10de:10f0

#update-grub

#update-initramfs -u

REBOOT

/* At this point I expected to see the Linux modules load, then the output on the current card freeze (showing that the 1080 card did not load), so that I could switch my monitors' input to the 710b card.

This does not happen, and instead the system boots normally and shows video out from the 1080 card. */

Upon logging in:

#dmesg | grep -E "DMAR|IOMMU"

[ 0.766035] AMD-Vi: IOMMU performance counters supported

[ 0.768992] AMD-Vi: Found IOMMU at 0000:00:00.2 cap 0x40

[ 0.769982] perf/amd_iommu: Detected AMD IOMMU #0 (2 banks, 4 counters/bank).

[ 42.468641] vboxpci: IOMMU found
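This dmesg output shows the IOMMU hardware was found. It is also worth confirming that the GRUB parameters actually reached the running kernel, i.e. that update-grub ran and the machine rebooted. A minimal sketch (has_kernel_param is a hypothetical helper) that checks /proc/cmdline:

```sh
#!/bin/sh
# Succeed if PARAM appears as a whole word on the kernel command line.
has_kernel_param() {
  param=$1
  cmdline=${2:-/proc/cmdline}       # optional override, useful for testing
  # Pad with spaces so "iommu=1" does not match inside "amd_iommu=1".
  case " $(cat "$cmdline") " in
    *" $param "*) return 0 ;;
    *) return 1 ;;
  esac
}

for p in amd_iommu=on iommu=1; do   # the values set in /etc/default/grub
  if has_kernel_param "$p"; then echo "present: $p"; else echo "MISSING: $p"; fi
done
```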

#lspci -nnv | less

0b:00.0 VGA compatible controller [0300]: NVIDIA Corporation GP104 [GeForce GTX 1080] [10de:1b80] (rev a1) (prog-if 00 [VGA controller])

Subsystem: eVga.com. Corp. GP104 [GeForce GTX 1080] [3842:6288]

Flags: bus master, fast devsel, latency 0, IRQ 88

Memory at f6000000 (32-bit, non-prefetchable) [size=16M]

Memory at d0000000 (64-bit, prefetchable) [size=256M]

Memory at e0000000 (64-bit, prefetchable) [size=32M]

I/O ports at d000 [size=128]

[virtual] Expansion ROM at 000c0000 [disabled] [size=128K]

Capabilities:

Kernel driver in use: nvidia

Kernel modules: nvidiafb, nouveau, nvidia_drm, nvidia

0b:00.1 Audio device [0403]: NVIDIA Corporation GP104 High Definition Audio Controller [10de:10f0] (rev a1)

Subsystem: eVga.com. Corp. GP104 High Definition Audio Controller [3842:6288]

Flags: bus master, fast devsel, latency 0, IRQ 10

Memory at f7080000 (32-bit, non-prefetchable) [size=16K]

Capabilities:

Kernel driver in use: vfio-pci

Kernel modules: snd_hda_intel
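The same "Kernel driver in use" information can be read straight from sysfs, which is handy for re-checking after each change. A sketch, assuming the 0000:0b:00.x addresses shown above; pci_driver is a hypothetical helper name.

```sh
#!/bin/sh
# Print the driver bound to a PCI device (the target of its "driver"
# symlink in sysfs), or "none" if no driver is bound.
pci_driver() {
  addr=$1
  root=${2:-/sys/bus/pci/devices}   # optional override, useful for testing
  link="$root/$addr/driver"
  if [ -L "$link" ]; then
    basename "$(readlink "$link")"
  else
    echo "none"
  fi
}

for addr in 0000:0b:00.0 0000:0b:00.1; do   # the 1080 and its audio function
  echo "$addr -> $(pci_driver "$addr")"
done
```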

It seems I cannot proceed with the VM installation until the 1080 card is forced to utilize the vfio-pci kernel driver. I am stumped.
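One commonly suggested remedy for this symptom (not confirmed in this thread) is a modprobe.d file that uses the lowercase options keyword and adds softdep lines, so that vfio-pci loads before the GPU drivers and can claim the card first. modprobe.d keywords are lowercase, so the capitalized "Options" line in the vfio.conf above is likely ignored. The sketch below prints such a file; the function name is hypothetical and the driver list is an assumption for this card. After writing the file, run update-initramfs -u and reboot.

```sh
#!/bin/sh
# Print a modprobe.d fragment: bind the given IDs to vfio-pci, and load
# vfio-pci before the drivers that would otherwise grab the GPU.
# Usage: vfio_modprobe_conf 10de:1b80,10de:10f0 | sudo tee /etc/modprobe.d/vfio.conf
vfio_modprobe_conf() {
  ids=$1
  echo "options vfio-pci ids=$ids"
  for drv in nvidia nouveau snd_hda_intel; do
    echo "softdep $drv pre: vfio-pci"
  done
}

vfio_modprobe_conf 10de:1b80,10de:10f0
```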