A WordPress blog was recently cleaned of malware.

The link in the Google search results still shows the cached, compromised version.

Manually typing in the URL works fine.

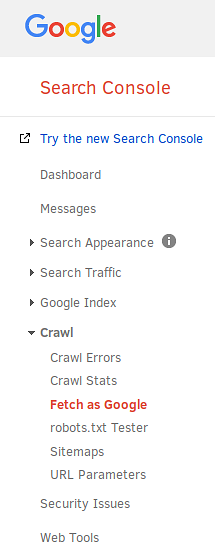

Forcing a re-crawl of the page fails with no error message in the Google webmaster console.

Where should I begin to correct this issue?

What are you using to crawl? Custom code?

@Hammerhead_Corvette

No, Google’s webmaster tools: Search Console.


Too hard, long time spent.

RewriteEngine On

RewriteCond %{REQUEST_FILENAME} !-f

RewriteRule . /index.php [L]

Bed early, sleep well.


I’m thinking it’s flagged somehow on Google’s end… But I’ll know more later, after a deep dive.


It’s hosted by GoDaddy, so I don’t think I’m able to modify the Apache config. But if I’m wrong, then please let me know.

I thought so too, but there are no messages in the console. Google’s page says that if there are malicious threats, they appear there. Interestingly, what tipped the site owner off that it was compromised was not the web tools, but AdSense saying they wouldn’t show ads on a malicious site.

Maybe .htaccess will do the trick? I don’t know their setup.

Too hard (shhhh) to know what is happening without the full rundown (don’t think too much on it).

There could be many reasons why the cached version, or thereabouts, is still showing up as the front.

@SudoSaibot @KoalaAteMySnack

There was a file, covert-jerome.php, which was modified by the hack. Since WordPress is built with PHP, if one thing is missing, the whole thing fails.

So when going to the webpage via Google, it fails with a hard 404. The only way it doesn’t fail is if you type in the URL manually, which is a death sentence in today’s world.
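For anyone hitting the same symptom, one way to confirm it is to request the page twice, once plainly and once pretending to arrive from a Google results page, and compare the status codes. What follows is only a rough sketch of that check, not something taken from the affected site. It assumes the only difference between the two visits is the `Referer` header, which is a guess. The helper names `final_status()` and `status_for()` are made up for illustration, and it needs `allow_url_fopen` enabled (plus the OpenSSL extension for https URLs).

```php
<?php
// Hypothetical helper: pull the final HTTP status code out of the header
// lines returned by get_headers(). When redirects are followed there are
// several status lines, so the last one wins.
function final_status(array $headerLines): ?int
{
    $status = null;
    foreach ($headerLines as $line) {
        if (is_string($line) && preg_match('#^HTTP/\S+\s+(\d{3})#', $line, $m)) {
            $status = (int) $m[1];
        }
    }
    return $status;
}

// Hypothetical helper: request $url, optionally sending a Referer header,
// and return the final status code (null if the request failed outright).
function status_for(string $url, ?string $referer = null): ?int
{
    $http = ['method' => 'GET', 'ignore_errors' => true, 'timeout' => 10];
    if ($referer !== null) {
        $http['header'] = 'Referer: ' . $referer;
    }
    $lines = @get_headers($url, false, stream_context_create(['http' => $http]));
    return $lines === false ? null : final_status($lines);
}

// Usage from the command line: php check.php https://example.com/
if (PHP_SAPI === 'cli' && isset($argv[1])) {
    echo 'direct:      ', var_export(status_for($argv[1]), true), PHP_EOL;
    echo 'from Google: ', var_export(status_for($argv[1], 'https://www.google.com/'), true), PHP_EOL;
}
```

If the two codes differ, that would suggest the server is treating visitors from Google differently, which is worth chasing in leftover rewrite rules or PHP includes. If they match, the referrer is probably not the cause and the indexed URL itself deserves a closer look.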

I solved my issue.

Google wouldn’t get off their ass, so I set up a temporary 302 redirect to a maintenance page.
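A temporary redirect like that can be done from PHP when the server config is off limits. This is a minimal sketch and not the exact setup used here: the `/maintenance.html` path and the `maintenance_target()` helper are hypothetical, and it assumes the snippet runs before any output is sent (for example near the top of `index.php`).

```php
<?php
// Hypothetical helper: returns the maintenance URL to redirect to, or null
// when the request is already for the maintenance page (redirecting that
// request again would cause a loop).
function maintenance_target(string $requestUri, string $maintenancePath = '/maintenance.html'): ?string
{
    // Compare only the path part, ignoring any query string.
    $path = parse_url($requestUri, PHP_URL_PATH);
    if ($path === $maintenancePath) {
        return null;
    }
    return $maintenancePath;
}

// Only act on real web requests, not when the file is run from the command line.
if (PHP_SAPI !== 'cli') {
    $target = maintenance_target($_SERVER['REQUEST_URI'] ?? '/');
    if ($target !== null) {
        // 302 marks the move as temporary rather than permanent.
        header('Location: ' . $target, true, 302);
        exit;
    }
}
```

The loop check matters if the maintenance page is served through the same entry point. If it is a static file served directly by the web server, the check never triggers and does no harm.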

Next, I had to track down the location of the removed infected covert-jerome.php, recreate this file, and set up a page refresh.

<?php

// this file is needed to mitigate the after effects of a malware infestation.

// A full page refresh is needed to circumvent the caching issues google has.

header("Refresh:0");

?>

Now, when a user visits the site via Google, it no longer gives a 404 and the page loads. The catch is that every such visit triggers a second full page load, which is expensive, but I could not see another way out of this.

Now, when I try the Google crawl again, it succeeds and I can request a re-index.

My next step, once the re-index request has been approved and the site has been re-indexed, is to remove this file.
