Well, CIFS doesn't seem to suffer from the slowness issue. Regardless of whether it's new or old, it works twice as fast or more.

I checked the raw packets going over the network using Wireshark and these are the differences that I found:


In the request packets

"Flags"

File browser: "Canonicalized Pathnames" and "Case Sensitivity"

mount command: nothing (0x00)

"Flags2"

File Browser:

- "Unicode String"

- "Error Code Type"

- "Extended Security Negotiation"

- "Long Names Used"

- "Extended Attributes"

- "Long Names Allowed"

mount command:

the same except that "Long Names Used" and "Extended Attributes" are not set.

"Multiplex ID"

is 0 in the file browser and 1 in the mount command.

"Requested Dialects"

The bigger differences are in the "Requested Dialects" field, where the file browser's packet lists:

- Dialect: PC NETWORK PROGRAM 1.0

- Dialect: MICROSOFT NETWORKS 1.03

- Dialect: MICROSOFT NETWORKS 3.0

- Dialect: LANMAN1.0

- Dialect: LM1.2X002

- Dialect: DOS LANMAN2.1

- Dialect: LANMAN2.1

- Dialect: Samba

- Dialect: NT LANMAN 1.0

- Dialect: NT LM 0.12

but the mount command's packet only lists:

- Dialect: LM1.2X002

- Dialect: LANMAN2.1

- Dialect: NT LM 0.12

- Dialect: POSIX 2

In the response packets:

"Flags"

File Browser:

- "Request/Response"

- "Case Sensitivity"

mount command:

only "Request/Response"

"Flags2"

Same as in the respective request packets.

"Multiplex ID"

Same as in the respective request packets.

"Negotiate Protocol Response"

Now this is where I guess the actual negotiated protocol is listed.

This field contains an entry called "Selected Index" and has the values:

- File Browser: "8: NT LANMAN 1.0"

- mount command: "2: NT LM 0.12"

Otherwise the packets are identical (apart from things like session keys etc.).
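To make the "Selected Index" values easier to read: the index in each response appears to be a zero-based position in the dialect list that the client sent, which matches both captures above. Here is a minimal Python sketch of that lookup, using only the dialect lists from the Wireshark output (the variable and function names are just for illustration):

```python
# Dialect lists exactly as they appear in the two negotiate requests above.
FILE_BROWSER_DIALECTS = [
    "PC NETWORK PROGRAM 1.0",
    "MICROSOFT NETWORKS 1.03",
    "MICROSOFT NETWORKS 3.0",
    "LANMAN1.0",
    "LM1.2X002",
    "DOS LANMAN2.1",
    "LANMAN2.1",
    "Samba",
    "NT LANMAN 1.0",
    "NT LM 0.12",
]
MOUNT_DIALECTS = ["LM1.2X002", "LANMAN2.1", "NT LM 0.12", "POSIX 2"]


def selected_dialect(requested, selected_index):
    """Return the dialect name at the zero-based index from the response."""
    return requested[selected_index]


def only_in(a, b):
    """Dialects offered in list a but not in list b, keeping a's order."""
    return [d for d in a if d not in b]


if __name__ == "__main__":
    print(selected_dialect(FILE_BROWSER_DIALECTS, 8))
    print(selected_dialect(MOUNT_DIALECTS, 2))
    print(only_in(FILE_BROWSER_DIALECTS, MOUNT_DIALECTS))
    print(only_in(MOUNT_DIALECTS, FILE_BROWSER_DIALECTS))
```

Note that "NT LM 0.12" is offered in both lists, yet the file browser's session ends up on a different dialect string.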

Now I am not exactly sure what these values mean or why one is faster than the other, but I guess this is the reason for the performance differences. Maybe there is a way to tell the file browser's implementation of the SMB protocol which dialects it should offer to the server, so that it uses the same ones as the mount -t cifs command does.

So essentially, one request is sending more data than the other. That must be causing latency, which is affecting the throughput?

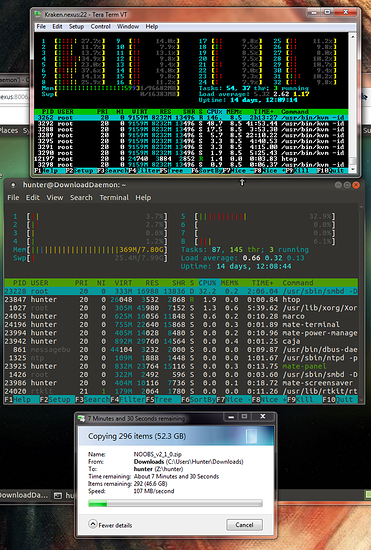

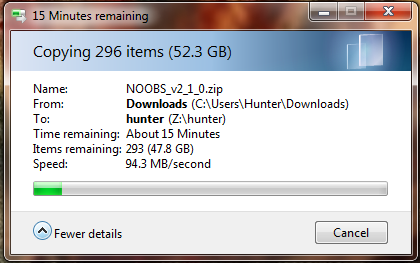

I'm achieving ~90-120MB/s read and writes so idk what your issue is. My server is running Ubuntu Mate 16.04 in a Proxmox VM on a ZFS Z1 array with SSD cache.

Just apt-get'ed samba and configured accounts. My client is a desktop running Win7 Ultimate.

My server is a dual socket Opteron 6276 with 96GB RAM. Everything is wired into enterprise switches. I'll test it at 10Gb tomorrow if you guys want.

We are using a Linux server with Linux clients, on stock installations of Ubuntu and other distros.

We are using midrange hardware. You have a much more powerful setup.

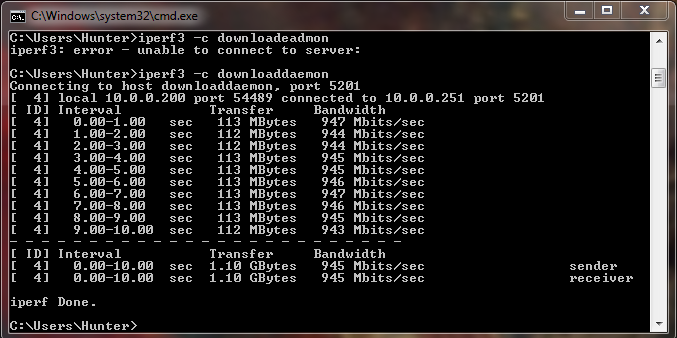

Our iperf results were the same as yours. We need to keep things on 1000BASE-T to have a controlled environment.

So what I'm hearing is virtualize your file server in order to distribute single threaded core load. lol

There is nothing that isn't stock about my half assed Ubuntu Mate install with an apt-get default samba server on it. While the bare metal my server is running on is indeed far more powerful than yours overall, my cores are all running at 2.3GHz and my RAM is a mix of bargain bin 1333MHz and 1066MHz. My VM is allocated 8GB of RAM and 8 cores.

If it will be of any help I'll test my connection with a Linux distro as my client.

The only reason I mentioned stock was because this is what performance was like out of the box with no tweaking, for both of us, Comfreak and me.

yes this will help quite a bit.

What distro(s) would you like me to test?

ubuntu 16.10, and then after that something which has the latest version of samba. Like manjaro or something.

Ubuntu (desktop and server, and from what I could find FreeNAS 9.x) ships with samba 4.4.5, whereas Arch and some others ship with 4.5 or 4.6.

I'll check the version I have installed on my server tomorrow night and will post my test results.

thank you, we have reason to believe that the issue exists with our version of samba (current stable).

Samba version is "4.3.11-Ubuntu" I'll let you know my test results tomorrow.

These requests are only sent when connecting to the server (at the beginning of the session). And even if this wasn't the case, the raw size differences of these packets are minimal.

I think that the reason is within the protocols. Maybe one protocol limits the packet size (for compatibility), which results in a poor performance, similar to when you run dd with a small block size. The smaller the packet the bigger the overhead is in relation to the transferred data. But this is just a theory, which I need to investigate further.
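To put rough numbers on that theory, here is a small Python sketch. It assumes every request pays a fixed time cost (headers plus a round trip) on top of the time needed to push the payload over the wire. The 0.5 ms figure and the payload sizes are made-up illustration values, not measurements from my captures.

```python
def effective_throughput(payload_bytes, link_bytes_per_s, per_request_s):
    """Bytes per second when every request pays a fixed time cost.

    payload_bytes:    data carried by one request
    link_bytes_per_s: raw link speed in bytes per second
    per_request_s:    assumed fixed cost per request (hypothetical)
    """
    time_per_request = per_request_s + payload_bytes / link_bytes_per_s
    return payload_bytes / time_per_request


if __name__ == "__main__":
    gigabit = 125_000_000   # 1 Gbit/s expressed in bytes per second
    per_request = 0.0005    # hypothetical 0.5 ms fixed cost per request
    for size in (4 * 1024, 64 * 1024, 1024 * 1024):
        mb_s = effective_throughput(size, gigabit, per_request) / 1_000_000
        print(f"{size:>8} bytes per request: {mb_s:6.1f} MB/s")
```

Under this simple model the fixed cost dominates for small payloads, which is the same effect as running dd with a small block size.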

Not quite, I am using FreeNAS on the server side which isn't Linux but FreeBSD. I don't think that the BSD is the issue though, since I can get good performance using the mount command.

The network and CPU is not a bottleneck either, since I can get Gigabit speeds with both iperf and via the mount command. In addition, running top I never see any load higher than ~12% on my quad-core (no Hyper Threading) Xeon, as I mentioned before. I can imagine that the CPU might become an issue with a single-threaded load, if I enter the 10-Gigabit-Ethernet territory, but I don't have the infrastructure at home for that ;-)

I've seen this rather weird behavior both on (X)ubuntu 16.10 as well as on Fedora 25. I feel like this might be connected to the file browser or its use of the SMB library, rather than the distro or the distro's version of samba itself.

I was reading more from the bug tracker and it looks like the different protocols have different frame buffer sizes.

But, isn't that what @comfreak tried to take out of the equation with the line underneath here? Forcing a buffer size. I might very well have misunderstood something though.

SO_RCVBUF=65536 SO_SNDBUF=65536

Btw, I also tried different "performance tuning" like the one above, to no avail.
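For reference, SO_RCVBUF and SO_SNDBUF are ordinary socket-level buffer options. Below is a small Python sketch of what requesting 65536 bytes looks like on a plain TCP socket. It only illustrates the options themselves, not how Samba applies them, and the function name is made up. The kernel is free to adjust the value it reports back (on Linux it is usually larger than what was requested).

```python
import socket

REQUESTED = 65536  # the value from the tuning line above


def request_buffers(size):
    """Ask for send/receive buffers of `size` bytes; return what the OS reports."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, size)
        s.setsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF, size)
        rcv = s.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
        snd = s.getsockopt(socket.SOL_SOCKET, socket.SO_SNDBUF)
    return rcv, snd


if __name__ == "__main__":
    rcv, snd = request_buffers(REQUESTED)
    print(f"SO_RCVBUF reported: {rcv}, SO_SNDBUF reported: {snd}")
```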

Huh, this is interesting. I just switched my dual boot from Win 7 Pro to Win 8.1 Pro, and now I see upload speeds of 500 Mbps and true gigabit download speeds, whereas Linux is still the same as before.

Now I'm just scratching my head.

Need to do more testing.

Must. Not. Succumb. To. Windows.

This thread is relevant to my interests.

Also, if I may be another data point. For me I've had numerous FreeNAS installs across the 9+ series with different drive configurations and controllers and NICs. I don't recall the last one that couldn't flood gigabit on read or write with the default configuration when connected to a Windows client. With a Linux client on the other hand, all sorts of tweaking has always been required. And it has never been good enough for me to actually write down the changes to FreeNAS that I've made. I feel like the best performance tuning to be had will be done to the Linux client.

I'll start playing tonight and report back on anything that I find.

I thought it was just a case of mounting via the browser being slow, but when you mount it properly it works at full speed? Or are you still having performance issues?

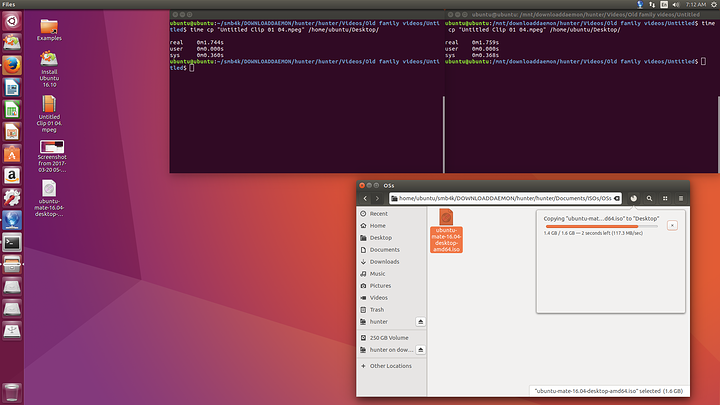

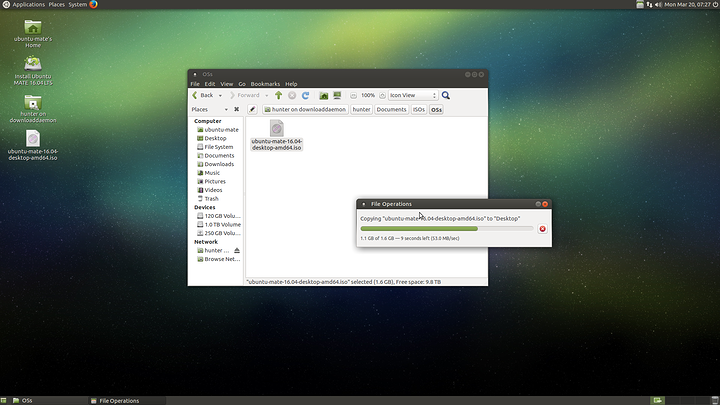

Downloading a file through a mount -t cifs

Downloading a file through "Other Locations" (gvfs) network location.

smb4k transfer.

From what I can tell this is simply gvfs being worthless shit. I tested transfer speeds using mount -t cifs, gvfs (network server browser used by nautilus), and smb4k. Both mount and smb4k achieved 115MB/s solid with no issue. Nautilus through gvfs was a fucking awful 50MB/s. I found this while doing some research but I don't know how helpful it could be. It isn't working for me but I can't reboot or I will lose the change anyway as I'm using a live CD for testing.

Let me know if you want some more tests.

Update: Same issue with Ubuntu Mate 16.04
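If anyone wants to repeat the comparison without eyeballing the file manager's progress dialog, a rough Python sketch for timing a sequential read is below. The function name, the script name in the usage comment, and the chunk size are placeholders. Point it at the same file through each mount method (gvfs shares typically show up under /run/user/<uid>/gvfs/). Caching can inflate repeated runs, so treat the numbers as approximate.

```python
import sys
import time


def read_throughput_mb_s(path, chunk_size=1024 * 1024):
    """Read the file sequentially and return throughput in MB/s (10^6 bytes)."""
    total = 0
    start = time.perf_counter()
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    # Guard against a zero elapsed time on very small files.
    return total / 1_000_000 / max(elapsed, 1e-9)


if __name__ == "__main__":
    # Usage (hypothetical script name): python3 readspeed.py /path/to/file
    for p in sys.argv[1:]:
        print(f"{p}: {read_throughput_mb_s(p):.1f} MB/s")
```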