Hello there!

At the moment I am seeing only around half the read speed I would expect over SMB (170-230MB/s) given my setup. I cannot for the life of me figure out where the problem lies and need some help working it out. I did not post this thread in the “Networking” section of the forums because of my test results so far and some additional suspicions I have. Bear in mind that this is ONLY about the slow read speeds from my FreeNAS box. Write speeds over SMB basically max out what the WD Red RAID-Z2 pool can do, at 430-450MB/s. I know not to expect 1.1GB/s unless both ends have insanely fast NVMe drives.
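To put the numbers above side by side, here is a minimal sketch of the arithmetic. It only uses the speeds quoted in this post plus the raw 10 Gbit/s line rate; the variable names are just mine for illustration.

```python
# Speeds quoted above, in MB/s (low end, high end).
read_mb_s = (170, 230)
write_mb_s = (430, 450)

# Raw 10 Gbit/s line rate, before any protocol overhead.
line_rate_mb_s = 10e9 / 8 / 1e6

# Worst read vs. best write, and best read vs. worst write.
read_vs_write = (read_mb_s[0] / write_mb_s[1], read_mb_s[1] / write_mb_s[0])
read_vs_line = tuple(r / line_rate_mb_s for r in read_mb_s)

print(f"read is {read_vs_write[0]:.0%}-{read_vs_write[1]:.0%} of write speed")
print(f"read is {read_vs_line[0]:.0%}-{read_vs_line[1]:.0%} of 10GbE line rate")
```

So reads land somewhere between a bit over a third and a bit over half of what the same pool manages for writes, which is what prompted this thread.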

Alright, here goes…let me try to give you as much background as possible.

FreeNAS PC specs:

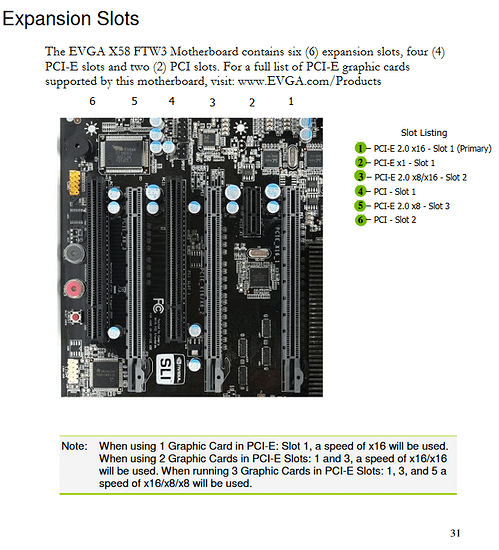

Mobo: EVGA X58 FTW3

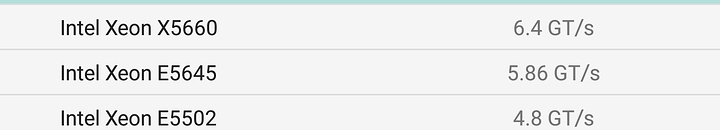

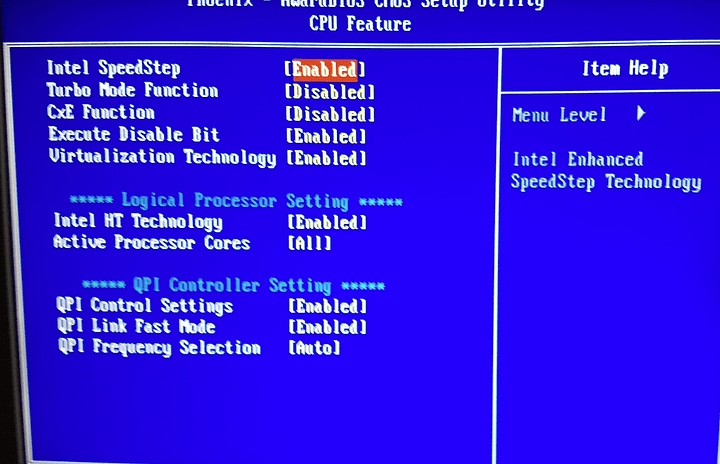

CPU: E5645 (6 cores, 12 threads)

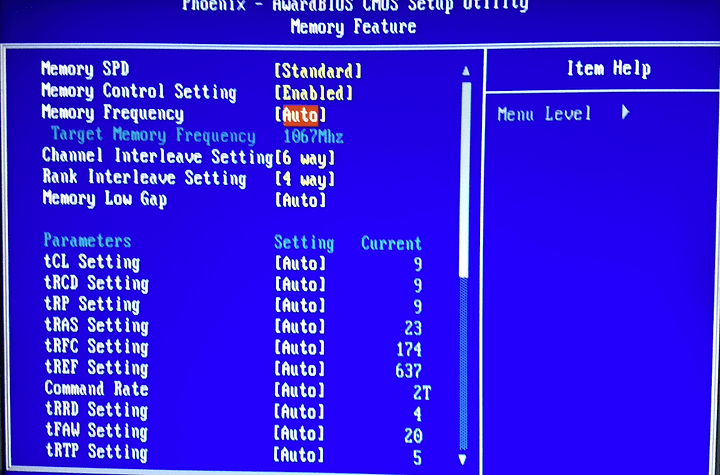

Memory: 24GB DDR3 ECC 1600MHz (6x4GB)

HBA: LSI SAS 9207-8i IT Mode LSI00301

HBA Cables: 2x Mini SAS Cable SFF 8087 to 4 SATA 7pin

10G NIC: Mellanox ConnectX-3 Single Port

10G Cable: 10Gtek 10Gb/s SFP+ Cable 1m Direct Attach Twinax Passive DAC

Boot Drive: Intel 40GB SATA II

Data Drives: 6x3TB WD Red in RAID-Z2

OS: FreeNAS 11.2-U5

Main PC specs:

Mobo: MSI B350M Mortar

CPU: Ryzen 5 1600x

Memory: 16GB DDR4 3200MHz (2x8GB)

10G NIC: Mellanox ConnectX-3 Single Port

10G Cable: 10Gtek 10Gb/s SFP+ Cable 5m Direct Attach Twinax Passive DAC

Boot & Data Drive: Samsung 850 pro 256GB

OS: Windows 10 1903 64bit

10G Network Switch:

MikroTik CRS305-1G-4S+IN

Detailed Physical Disk Info:

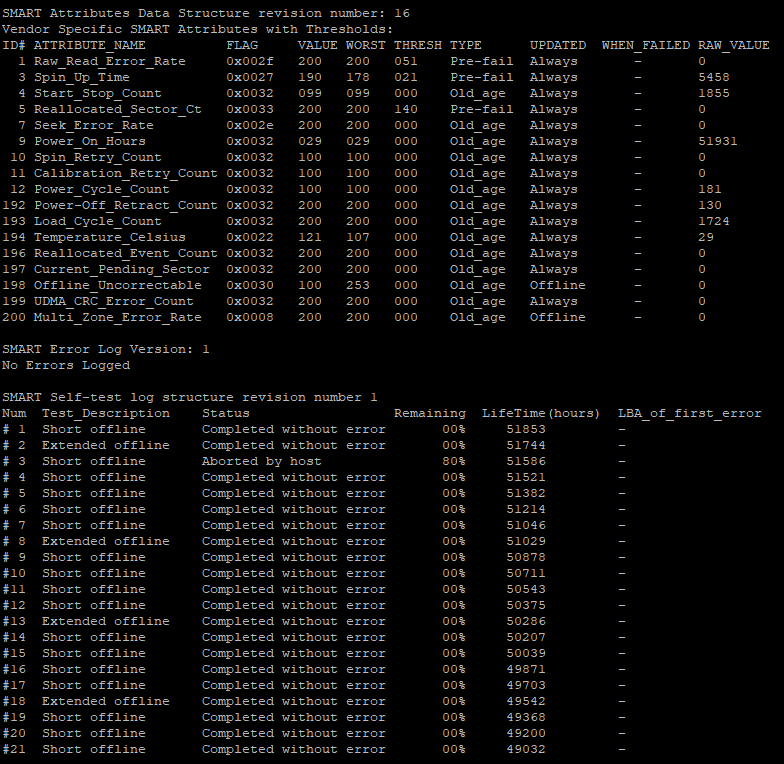

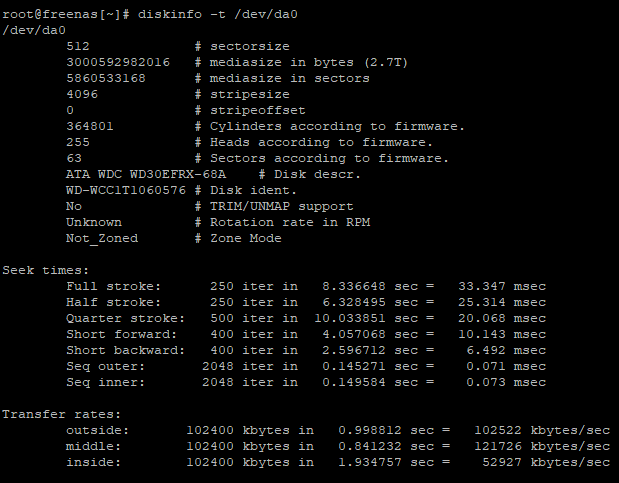

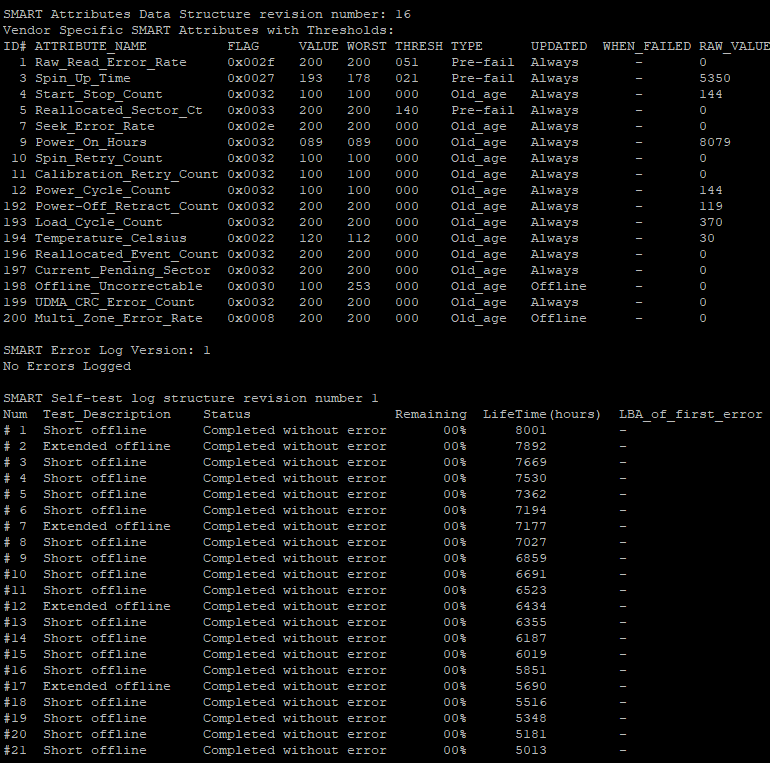

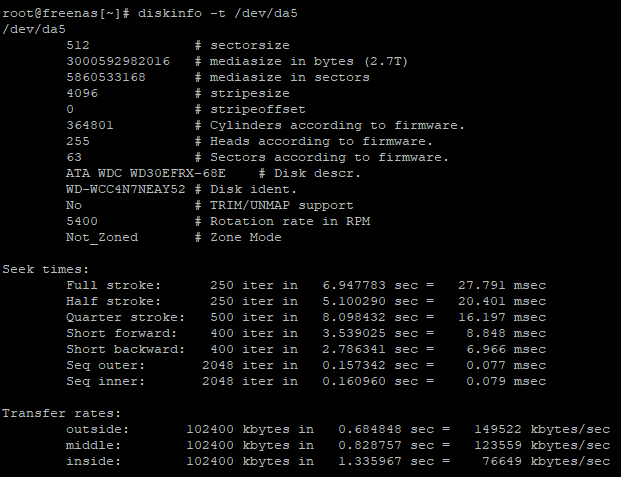

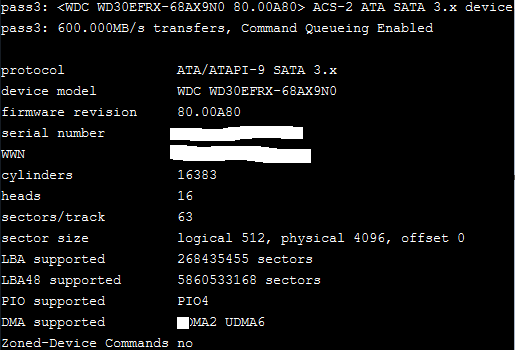

6x3TB WD Red Drives:

Example drive:

Note that all the drives have the exact same specs as in the screenshot above, but the device model numbers and firmware revisions differ slightly:

2x Model WDC WD30EFRX-68AX9N0, Firmware 80.00A80

2x Model WDC WD30EFRX-68N32N0, Firmware 82.00A82

2x Model WDC WD30EFRX-68EUZN0, Firmware 82.00A82

Motherboard:

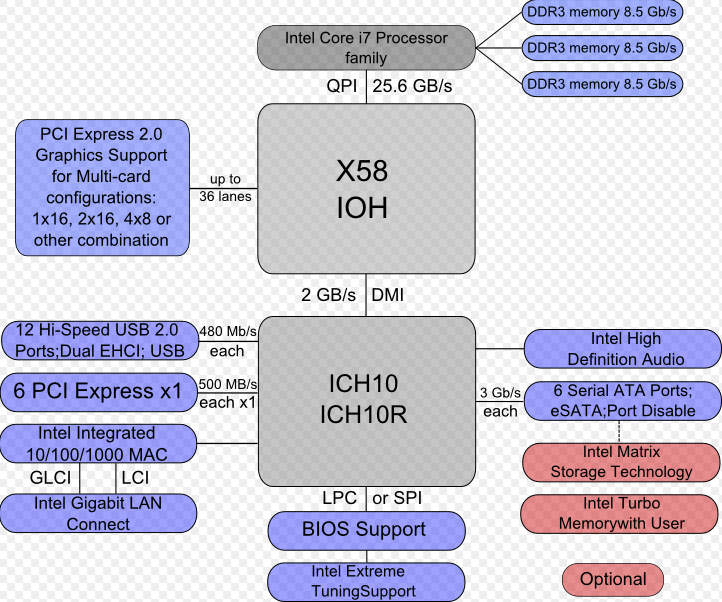

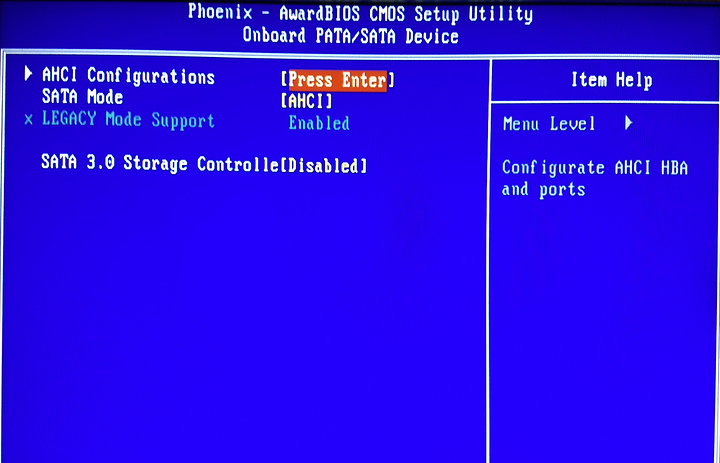

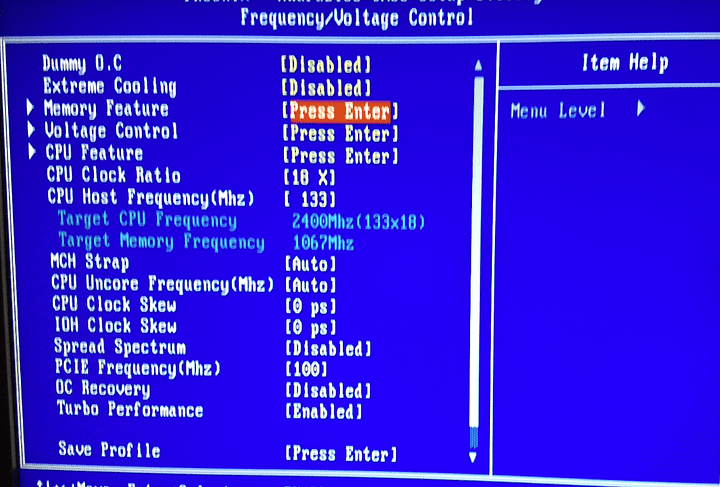

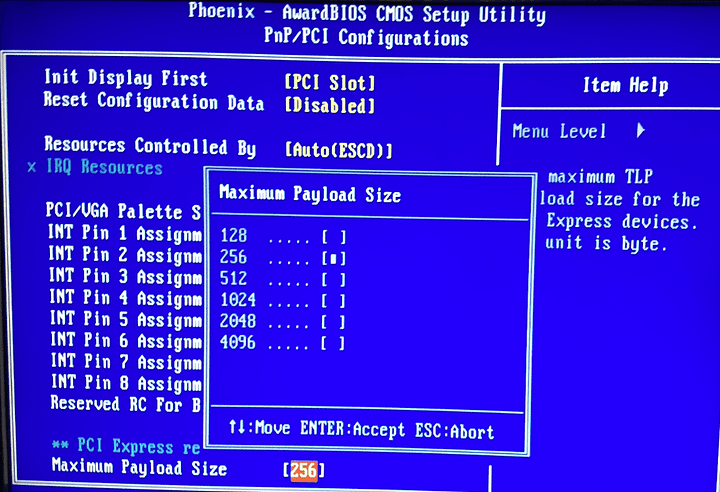

PCI-E Slot 1: HBA

PCI-E Slot 2: 10G NIC

PCI-E Slot 3: GPU

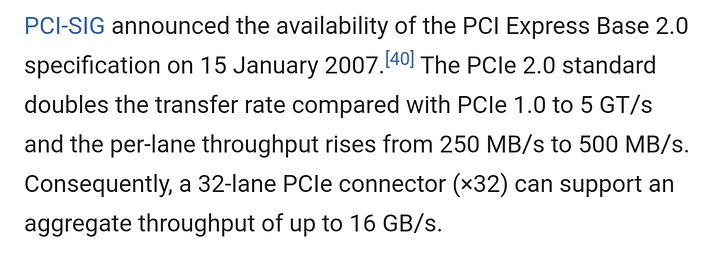

According to this section from the motherboard manual I should theoretically not run into any PCI-E bandwidth limitations:

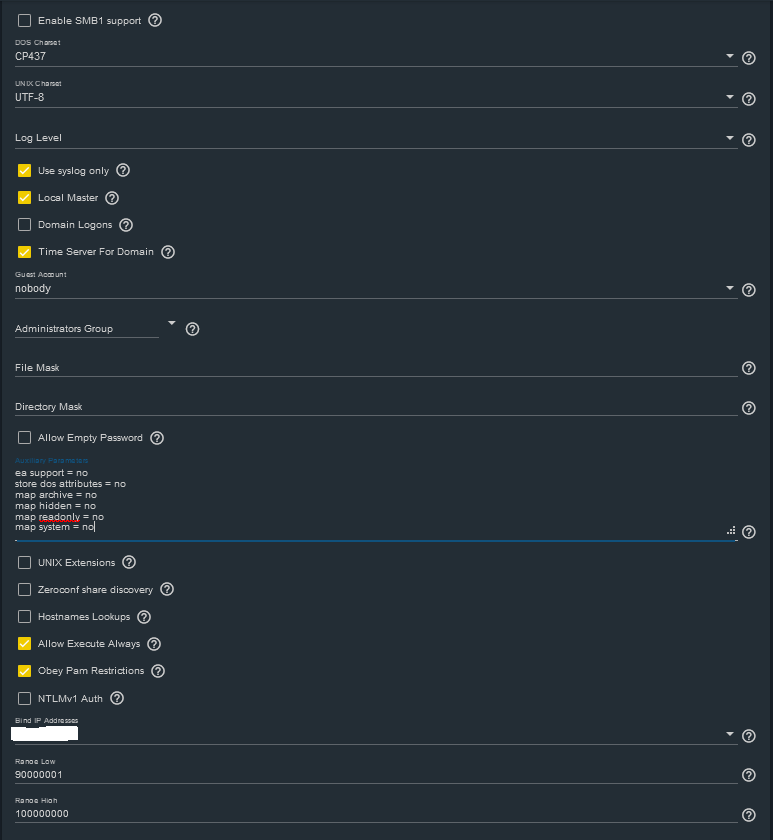
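As a sanity check on that claim, here is a rough sketch of the theoretical numbers. It assumes the slots run at PCIe 2.0 (5 GT/s per lane with 8b/10b encoding, so about 500 MB/s per lane and direction); the lane widths in the loop are illustrative, not read from the manual, and real-world throughput will be somewhat lower.

```python
# PCIe 2.0: 5 GT/s per lane, 8b/10b encoding (8 data bits per 10 line bits).
GT_PER_S = 5e9
ENCODING_EFFICIENCY = 8 / 10


def pcie2_bandwidth_mb_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe 2.0 link in MB/s."""
    bits_per_s = GT_PER_S * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e6


TEN_GBE_MB_S = 10e9 / 8 / 1e6  # raw 10GbE line rate in MB/s

# Illustrative link widths; the actual width per slot is an assumption here.
for lanes in (4, 8, 16):
    bw = pcie2_bandwidth_mb_s(lanes)
    print(f"x{lanes}: {bw:.0f} MB/s, {bw / TEN_GBE_MB_S:.1f}x the 10GbE line rate")
```

Even a x4 link at PCIe 2.0 should leave headroom over a single 10G port on paper, which is why I do not think the slots are the limit.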

FreeNAS SMB Settings:

The Auxiliary Parameters I have set helped fix a weird bug where opening a network share containing a boatload of files/folders and/or big files (such as my movies folder) in Windows Explorer on a Windows client would result in the folder taking ages to “load”.

The SMB service is bound solely to the IP address of the 10G NIC. The second FreeNAS NIC is used for a couple of jails and web GUI access.

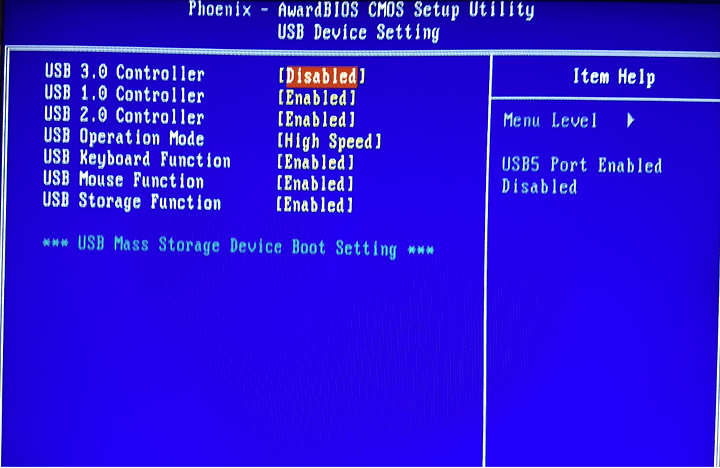

FreeNAS 10G NIC:

Options: mtu 9000

Main PC 10G NIC:

Jumbo Packet Value: 9014

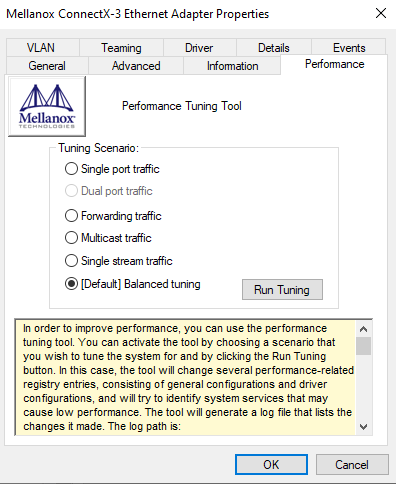
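The two jumbo values look different but should describe the same thing. This is a small sketch of why, under the assumption (mine, not confirmed from the driver documentation) that the Windows “Jumbo Packet” value counts the 14-byte Ethernet header while the FreeNAS mtu option does not.

```python
ETHERNET_HEADER_BYTES = 14  # destination MAC (6) + source MAC (6) + EtherType (2)

freenas_mtu = 9000           # "mtu 9000" in the FreeNAS interface options
windows_jumbo_packet = 9014  # "Jumbo Packet" value on the Windows NIC

# Assumption: the Windows value includes the Ethernet header, the FreeNAS one does not.
windows_payload = windows_jumbo_packet - ETHERNET_HEADER_BYTES

print("match" if windows_payload == freenas_mtu else "mismatch")
```

If that assumption holds, both ends agree on a 9000-byte payload.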

I have absolutely no idea what the different modes in the Mellanox ConnectX-3 Ethernet Adapter Properties are for. The description of each mode just reuses the words it is supposed to be explaining (derp).

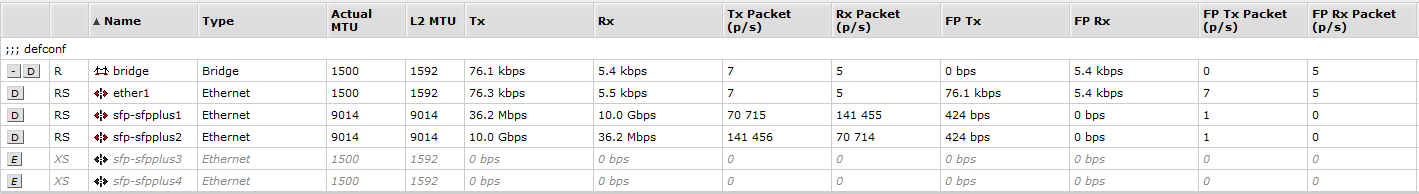

MikroTik Switch:

I am not 100% sure about the difference between “Actual MTU” and “L2 MTU”, or whether my settings are correct, but the RX and TX speeds displayed during an iperf test lead me to believe this is not where my issue lies.

The only other settings I have changed for both SFP+ ports (one connected to the main PC, one to the FreeNAS PC) in the MikroTik web GUI are disabling auto-negotiation, forcing 10G speed and enabling “full duplex”.

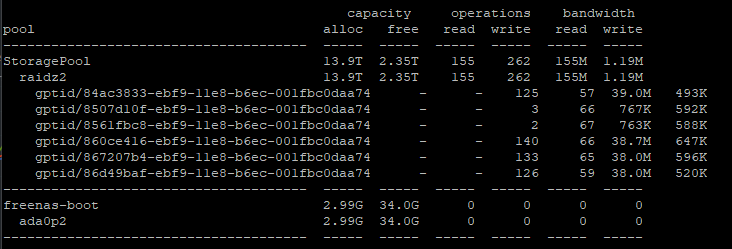

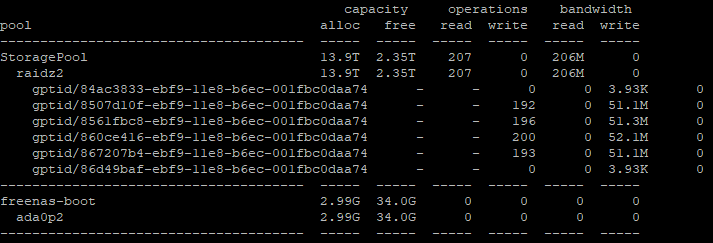

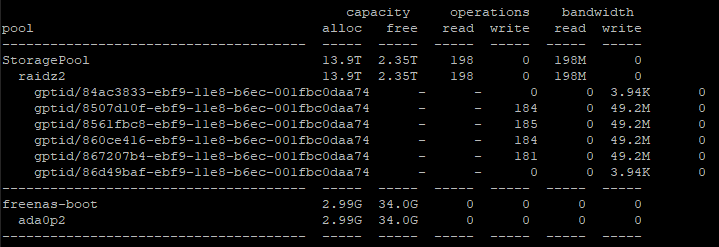

Testing (all disk speed tests were run on a dataset with compression turned OFF and where possible the file size was set to larger than 24GB due to RAM caching!):

iperf:

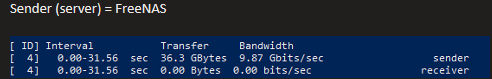

Sender (server) = FreeNAS

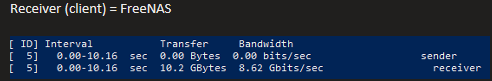

Receiver (client) = FreeNAS

Results seem solid enough to me.

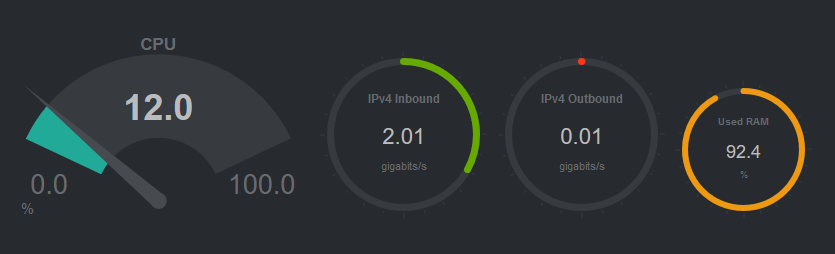

Example for CPU usage during a CrystalDiskMark test:

No CPU bottlenecking, especially since drive encryption is not enabled.

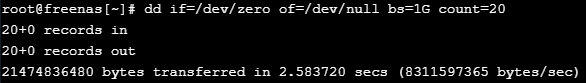

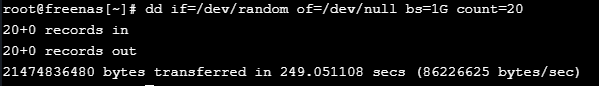

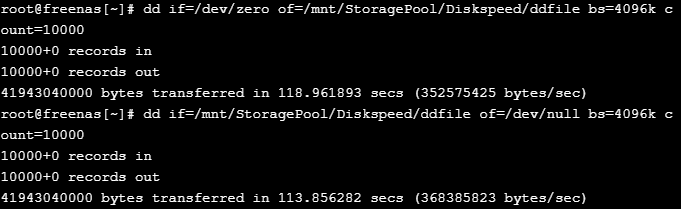

DD tests:

I used block size = 4096k and count = 10000. This should result in a 40GB file being used?
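Working the size out explicitly, as a minimal sketch: this assumes dd's `k` suffix means 1024 bytes and treats the 24GB of RAM as 24 GiB.

```python
KIB = 1024

block_size_bytes = 4096 * KIB  # bs=4096k
count = 10000                  # count=10000
ram_bytes = 24 * 1024**3       # 24GB of RAM, treated as GiB (assumption)

total_bytes = block_size_bytes * count

print(f"{total_bytes / 1e9:.2f} GB / {total_bytes / 1024**3:.2f} GiB")
print("larger than RAM:", total_bytes > ram_bytes)
```

That comes to roughly 42 GB (about 39 GiB), so “40GB” is close enough and the file is comfortably larger than the RAM available for caching.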

Running “zdb -U /data/zfs/zpool.cache MyPoolHere | grep ashift” gave me an ashift value of “12”, which should correspond to the 4K physical sector size of my drives?
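As far as I understand it, ashift is the base-2 exponent of the smallest block ZFS will write to a vdev, so the check is a one-liner. A quick sketch (the 4096-byte physical sector size is taken from the disk info above):

```python
ashift = 12                   # value reported by zdb
physical_sector_bytes = 4096  # 4K physical sectors on the WD Reds

# ZFS aligns writes to 2**ashift bytes.
zfs_min_block_bytes = 1 << ashift

print(zfs_min_block_bytes, zfs_min_block_bytes == physical_sector_bytes)
```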

Questions:

1.) Is a single drive starting to fail/slow down, which in turn causes the entire pool to slow down? All S.M.A.R.T. and scrub tests, which I have set up to run periodically, always come back without any errors. I do not know of a way to test the performance of a single drive that is part of a pool without destroying said pool.

2.) Do the different drive firmware revisions have any impact on performance?

3.) Is SMB tuning needed either on the FreeNAS or main PC side?

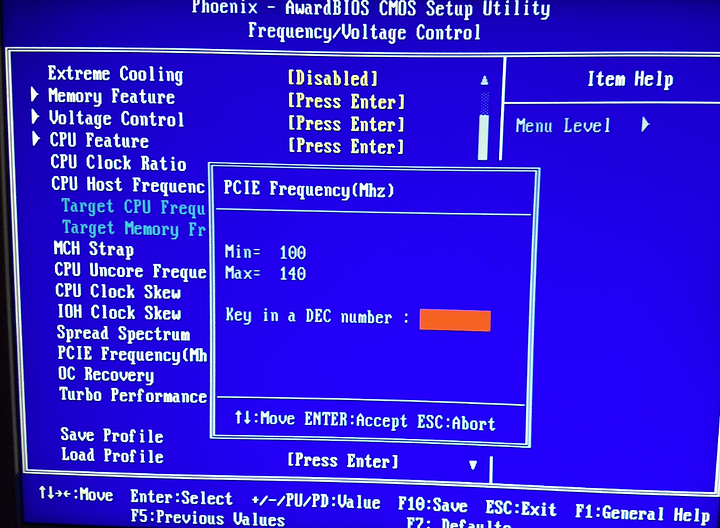

4.) Could removing the GPU completely from the system and having the HBA and 10G cards in slots 1 and 3 (→PCI-E 2.0 x16, x16) be my first troubleshooting step?

Any help would be greatly appreciated. Thanks in advance!