I am a systems administrator in the aerospace manufacturing industry, and I have found a gap in my knowledge that Google isn’t filling.

I’m in the process of replacing a 15-year-old Hyper-V cluster. I’m interested in using EPYC CPUs, but I’m not sure I can get away with a single socket because of memory demand. Without load-reduced DIMMs (LRDIMMs) I can only fit 512GB per socket, which would put us right at our current need, and I would like to have an upgrade path for the future since our load keeps increasing.
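The capacity side is simple arithmetic: channels × DIMMs per channel × DIMM size. A minimal sketch, assuming an 8-channel, 2-DIMMs-per-channel socket and hypothetical 32GB RDIMM / 128GB LRDIMM sizes (check the server vendor's supported-memory list for the real options); `socket_capacity_gb` is just an illustrative name:

```python
def socket_capacity_gb(channels: int, dimms_per_channel: int, dimm_gb: int) -> int:
    """Memory per socket when every slot holds an identical DIMM."""
    return channels * dimms_per_channel * dimm_gb

# Assumed layout: 8 memory channels x 2 DIMMs per channel (16 slots per socket).
CURRENT_NEED_GB = 512  # from the post: 512GB is "right at our current need"

# Hypothetical DIMM sizes; substitute what the platform actually supports.
for label, dimm_gb in [("32GB RDIMM", 32), ("128GB LRDIMM", 128)]:
    total = socket_capacity_gb(8, 2, dimm_gb)
    print(f"{label:>13}: {total:5d} GB per socket, {total / CURRENT_NEED_GB:.1f}x current need")
```

With those assumed sizes, the LRDIMM layout gives four times the current need on one socket, which is the upgrade path the question is weighing against any bandwidth cost.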

My question is: how do I determine whether the memory IOPS are low enough that I can safely switch to LRDIMMs without hitting a performance bottleneck? I’m hoping for a metric similar to average disk queue depth that shows how close the current cluster is to being bottlenecked by RAM performance.
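A quick test can’t show how busy the memory on the current cluster is; reading live utilization needs the memory controller’s hardware counters (Intel’s Performance Counter Monitor is one tool that exposes them). What a simple test can give is a rough baseline of achievable copy bandwidth on a host. A minimal, stdlib-only sketch under stated assumptions: the function name `copy_bandwidth_gbps` is hypothetical, one thread will not saturate a whole socket, and the 256MB default buffer is assumed to be larger than the CPU’s last-level cache:

```python
import time

def copy_bandwidth_gbps(size_mb: int = 256, repeats: int = 5) -> float:
    """Rough single-threaded copy bandwidth in GB/s (best of `repeats` runs).

    Assumes size_mb is well above the CPU's last-level cache, so the copy
    is served from DRAM rather than cache.
    """
    n = size_mb * 1024 * 1024
    src = bytearray(b"\xa5") * n  # non-zero pattern; every byte is written up front
    dst = bytearray(n)
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        dst[:] = src  # same-length slice assignment: one bulk memory copy in C
        best = min(best, time.perf_counter() - start)
    best = max(best, 1e-9)  # guard against a zero timer delta on tiny buffers
    # Each copy reads n bytes and writes n bytes.
    return 2 * n / best / 1e9
```

Running `print(copy_bandwidth_gbps())` on an idle old host and on a loaner or vendor-tested new host gives a like-for-like comparison; treat the number as a lower bound on what the platform can do, not as a utilization figure.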

Any thoughts are welcome.

Do you have a service contract with any of the big players that would be able to sample you some hardware?

I suspect that is the only way to test it, short of sending a test workload (nothing commercially sensitive) to a vendor and having them give you performance numbers.

I remember doing that 20 years ago with a rig for CFD that was IOPS-bound; in the end we built it and sent back the hardware we couldn’t use.

We have a service contract with HP, but I don’t think they will be willing to sample us hardware. The relationship has been tenuous at best for as long as they have been servicing it.

How did you determine that the rig was IOPS-bound? Or was it just through testing new hardware?

We wrote some test data loads to see how quickly (time stamps) and how accurately (reference frames) it would capture a simulated camera output. In the end we got the throughput and write speed we needed with a RAID controller and a handful of SCSI-3 drives. This was well before SSDs were a thing, and we couldn’t do in-memory processing with what was then only a few gigs of RAM.

Looking back I bet we could achieve the same outcomes today with a smartphone. Still, it got me a Master’s degree.

Not my area of expertise, but:

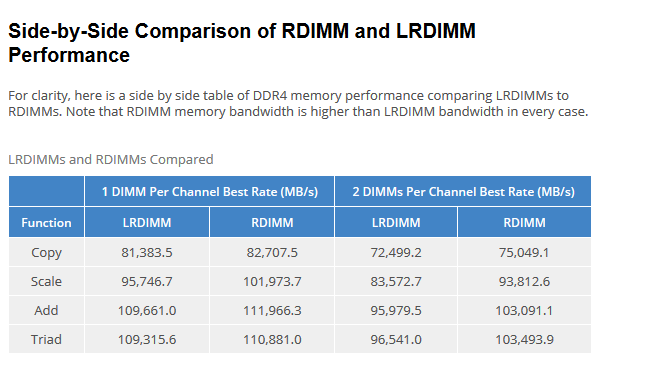

There doesn’t seem to be a huge loss in performance, and this RAM should be way faster than your 15-year-old DDR3, or maybe even DDR2?
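The “should be way faster” point can be checked on paper: theoretical peak bandwidth per socket is channels × transfer rate (MT/s) × 8 bytes per transfer. A sketch with hypothetical configurations, a 3-channel DDR3-1333 socket standing in for the old cluster and an 8-channel DDR4-3200 socket for EPYC; substitute the real module speeds, and keep in mind that two DIMMs per channel or LRDIMMs can lower the supported speed:

```python
def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int, bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal) for one socket.

    Each DDR channel carries 64 data bits (8 bytes) per transfer; ECC bits are ignored.
    """
    return channels * mega_transfers_per_s * bus_bytes / 1000

# Hypothetical configurations; replace with the actual hardware on both sides.
old_socket = peak_bandwidth_gbs(channels=3, mega_transfers_per_s=1333)  # e.g. DDR3-1333
new_socket = peak_bandwidth_gbs(channels=8, mega_transfers_per_s=3200)  # e.g. DDR4-3200

print(f"old {old_socket:.1f} GB/s, new {new_socket:.1f} GB/s, ratio {new_socket / old_socket:.1f}x")
```

These are per-socket ceilings; sustained bandwidth is always lower. If the old hosts are dual-socket, compare host totals rather than per-socket figures.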

My concern isn’t so much that there will be a memory bottleneck right out of the box, but that a secondary bottleneck will develop over the next 5-10 years as we add more VMs to the server. There is some possibility that our old cluster currently has a secondary or tertiary bottleneck, and if that is the case, I can assume I will need all the bandwidth I can get on the new server to avoid it.
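One way to put the 5-10 year concern into numbers is to project today’s measured peak demand forward under compound growth and see when it crosses a chosen fraction of the new platform’s capacity. A sketch in which the function name, the 70% threshold, and all example inputs are hypothetical; the same arithmetic works for GB of RAM or GB/s of bandwidth:

```python
import math

def years_until_threshold(current: float, capacity: float,
                          annual_growth: float, threshold: float = 0.7) -> float:
    """Years until `current` grows to `threshold * capacity` at a compound annual rate.

    Works for any resource (GB of RAM, GB/s of bandwidth). Returns 0.0 if the
    threshold is already reached.
    """
    if annual_growth <= 0:
        raise ValueError("annual_growth must be positive")
    limit = threshold * capacity
    if current >= limit:
        return 0.0
    return math.log(limit / current) / math.log(1 + annual_growth)

# Hypothetical inputs: demand growing 15% per year on a single EPYC socket.
print(f"RAM:       {years_until_threshold(512, 2048, 0.15):.1f} years")   # 512GB need, 2TB LRDIMM socket
print(f"Bandwidth: {years_until_threshold(20, 204.8, 0.15):.1f} years")   # 20 GB/s measured, 8 x DDR4-3200 peak
```

Whichever resource hits its threshold first is the one that sets the upgrade horizon; the growth rate and current peak are the inputs worth measuring on the old cluster.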

Where did you pull this chart from? I didn’t know the difference was so small. That said, I think it’s funny that we consider ~10Gb/sec a very small change. lol

Bottom line: DDR4, even LRDIMMs, will be faster than what you currently have, and if the issue is just capacity you will be fine on EPYC even using LRDIMMs.
