In the future we will all live in something like The Matrix, but it’s just Minecraft

Any guide to “Paranoid schizophrenic” OpSec without using/considering Qubes instead of any other OS is fundamentally not paranoid enough.

Also, as above, all the security measures in the world won’t work very well against handcuffs and a lead pipe or psychedelic drugs.

Unless you can rapidly distance yourself from the “secure machine” in a way that feasibly isolates you from any connection to it, the TLA can just beat the shit out of you to get into it.

So really if you want to be properly secure, you probably need to (also, as well as running a secure endpoint) run/store your stuff on somebody else’s machine that they can’t physically confiscate. Let’s say a cloud provider in Russia or somewhere else that will tell the relevant TLAs you’re worried about to go take a hike when asked to hand over your stuff or are presented with a warrant.

Store the encryption key(s) on something easily destroyed in an emergency. Timebomb that remote VM or whatever to self-destruct if it isn’t tended to every 24-48 hours (or 6, or 12 or whatever), so that even if somebody gets your keys, and even if they work out what you were connecting to remotely, and even if they convince hostile third party country X to cooperate, by the time all that is lined up the machine has nuked itself.
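The timebomb part is basically a dead man's switch. A minimal Python sketch of the check, assuming it runs periodically on the remote VM (cron, a systemd timer, whatever): `check_deadman`, `last_checkin` and `destroy` are made-up names for illustration, and what `destroy` actually does (dropping keys, deleting the VM) is left to you.

```python
import time
from typing import Callable, Optional


def check_deadman(
    last_checkin: float,
    max_silence_s: float,
    destroy: Callable[[], None],
    now: Optional[float] = None,
) -> bool:
    """Trigger destroy() if the owner has been silent for too long.

    last_checkin  -- Unix timestamp of the last time the machine was "tended to"
    max_silence_s -- allowed silence in seconds (e.g. 24 * 3600)
    destroy       -- hypothetical callback that wipes keys / tears down the VM
    now           -- injectable clock, mainly so the logic can be tested

    Returns True if destroy() was called.
    """
    now = time.time() if now is None else now
    if now - last_checkin > max_silence_s:
        destroy()
        return True
    return False
```

The check-in itself is just something that updates `last_checkin` after you authenticate. The shorter the window, the less time anyone has to line up keys, location and a cooperative host, but the more likely you nuke your own data by missing a check-in.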

I would argue that having physically separate hardware and using different networks (so as to avoid inter-device communication on a LAN, for example, and to avoid compromised devices doing shady stuff like trying to break into your switch or router and scanning traffic) is inherently more paranoid, because you’re so paranoid that you don’t even trust an OS to securely sandbox different programs.

Qubes isn’t just about sandboxing programs from one another.

It’s about sandboxing your drivers and input devices from your data too.

So even if you use separate hardware for separate purposes, Qubes still has value. Your network, firewall code and IO drivers run in different VMs from your data.

If you’re properly paranoid you don’t trust hardware. Qubes is at least open so more trustworthy than firmware blobs.

Cue Beavis and Butt-Head reference

This sounds cool, but it’s a potential liability, i.e. getting hit with a (somewhat bogus) tampering / destruction of evidence charge, even if there’s no evidence that the PC contains evidence of any wrongdoing. It’s one thing to refuse to unlock your device (5th Amendment and stuff, while it still lasts; the part that says “nor be deprived of life, liberty, or property, without due process of law; nor shall private property be taken for public use, without just compensation” is completely ignored nowadays) and a completely different thing to destroy stuff.

I’m not against it, especially when the 5A goes completely out the window with the “nor shall be compelled in any criminal case to be a witness against himself” part as well. It could be done somewhat smartly: for example, have 2 profiles, 1 hidden profile for things you don’t want to be found out and 1 for normal use. When you enter a panic code at the normal profile login, the hidden profile gets wiped. But your normal profile needs to be genuinely used, so that it isn’t obvious it’s just a setup. Any investigator with more than 2 brain cells can figure out when a profile is just a cover-up, like with those phones that greet you with lots of fake programs when you unlock them, such as a fake Candy Crush that is a hidden Tor Browser or something.

This would be a nice feature for QubesOS.

I see no other possible real-life scenario where you have the option to enter a panic code. Like, what, a ransom or kidnapping? Statistically not likely to happen to people. And while not out of the question for such usage, the LEO case is the obvious one. To be honest, even in that case, I personally see it as completely justified to not allow people into your private files (what happened to “The right of the people to be secure in their persons, houses, papers, and effects, against unreasonable searches and seizures, shall not be violated”? I’m not a constitution apologist or something, I’m just pointing out the obvious: pieces of paper won’t restrict governments from being abusive).

I would however choose a different route maybe. Alpine Linux can be installed in diskless mode, which is basically a glorified live session which runs from a ramdisk, but with the ability to save some current configurations using lbu. Save whatever you need, like a browser and whatever else you need minimally. If you are forced to unlock your device, refuse to do it. If they try restarting your device to install a rootkit or boot into another distro and see your data if it’s not encrypted (you technically don’t need encryption in this case, but I recommend doing it nonetheless, encrypted RAM FTW, continue reading), then they just destroyed the data, because obviously nothing was stored on disk, everything was in RAM. You didn’t tamper with evidence, but you did however refuse to unlock. Again, it’s currently legal, but who knows for how long - also, probably not legal to refuse to comply with LEO orders in other backward countries. “Human rights” my ass, UN, you f***ing hypocrites.

I believe some VPN services claim to use these kinds of live sessions for their limited logs (no VPN truly collects zero logs) and for their VPN servers themselves, so if someone tries seizing them and shuts them down, they are rendered useless, and no decryption key is of any use if there’s no data left to decrypt in the first place. Technically you can get around this by freezing the contents of the RAM using liquid nitrogen or something, but it’s really expensive to pull off, especially if there are many servers to seize.

Why not set up a legit user, with a script that silently nukes it (or even just decryption keys) upon login?

What I would do if I were The Man

[Heavily edited. My paranoid ramblings gave the impression of an actual rumour, rather than hypothetical ramblings.]

If I were a TLA, I would mandate that every VPN in my territory allow the TLA to run its own session loggers. Then the VPN host literally is not keeping any logs, and the TLA can fetch what it wants, when it wants. And session data is metadata, ruled by law not to be covered under privacy rights.

First time hearing this. Could be an exploit with “military technology” like encryption, which hasn’t been purely military for almost a century at this point, but some of that law still remains and may be used to exploit stuff like that (talking specifically about “exporting encryption,” which you need to have a license for).

Is this even constitutional? 4th amendment, hello? Not that it matters, the government is above the law (law that was supposedly put in place to restrict it). And it has to be, otherwise it can’t function, different rules for rulers. You can’t reasonably expect the government to limit itself or especially to apply the same rules to itself that it does to its subjects.

Just use i2p.

Hmm, I should have put it as more hypothetical. Not Real.

But constitutional: only the contents of calls / letters are protected, not the metadata. Same with normal ISPs.

But I need to stop s posting on this thread and move on

sorry

The first thing the digital forensics folks do with seized devices is create an image of the storage device. Having done that, they then perform the bulk of their forensics work on read-only mounted copies of the image.

If you give them a self-destruct code, that will only be used on a read-write mounted copy of the image. Apart from forcing them to discard the self-destructed image, it doesn’t really slow them down.

It does, however, let them then charge you with destroying evidence, obstruction, or whatever else garbage they want to make up. On that basis, I would advise against giving up a code that destroys data.

Having said that, if you have your self-destruct code written down on a piece of paper in your wallet, or scrawled on the inside of the battery bay cover on your laptop, then their discovery and use of said code is without your knowledge or consent, so any/all negative repercussions can be avoided.

So there’s this nice extension called uMatrix (chrome, firefox) (from the creator of uBlock).

Per website you can decide/make permanent or temporary rules as to what stuff to block/allow depending on third party domain and type of content.
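To give a feel for the "matrix" idea, here is a toy Python model. It is a simplification I made up for illustration and not uMatrix's actual rule engine or precedence logic: each rule is `(scope, destination, type, action)`, `*` is a wildcard, the most specific matching rule wins, and ties go to the later rule.

```python
from typing import List, Tuple

# (scope, destination, type, action), e.g. ("*", "*", "*", "block")
Rule = Tuple[str, str, str, str]


def _host_matches(pattern: str, host: str) -> bool:
    # "*" matches anything; otherwise match the host itself or a subdomain.
    return pattern == "*" or host == pattern or host.endswith("." + pattern)


def decide(rules: List[Rule], page_host: str,
           request_host: str, req_type: str) -> str:
    """Return "allow" or "block" for one request made by a page."""
    best_action, best_score = "block", -1  # default-deny if nothing matches
    for scope, dest, rtype, action in rules:
        if not _host_matches(scope, page_host):
            continue
        if not _host_matches(dest, request_host):
            continue
        if rtype not in ("*", req_type):
            continue
        # Specificity = number of non-wildcard fields in the rule.
        score = sum(field != "*" for field in (scope, dest, rtype))
        if score >= best_score:
            best_action, best_score = action, score
    return best_action
```

So with a blanket `("*", "*", "*", "block")` plus a couple of per-site allow rules, a page only gets the third-party scripts you explicitly whitelisted for it.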

It can go a long way towards eliminating XSS

The basic idea is to prevent some XSS exploits à la Samy Kamkar in 2005 (that still seem prevalent today). In short, in cases where random people on the internet share content, poor sanitization/poor sandboxing might allow malicious random people to execute code in the context of the primary server and e.g. steal credentials or exfiltrate data somehow.

It’s kind of like setting a CSP for websites that don’t have a CSP of their own.

It’s also nice in another way, because most tracking happens when a website you’re visiting decides to include a script from a tracker/tracking service provider website - you can simply block it.

Really bad opsec on my part, but here are a few of the extensions I use:

(uBlock, NoScript, uMatrix, Facebook container)

Not really on topic, but there is also a “multi containers” extension, which does something similar to FB containers, but more…

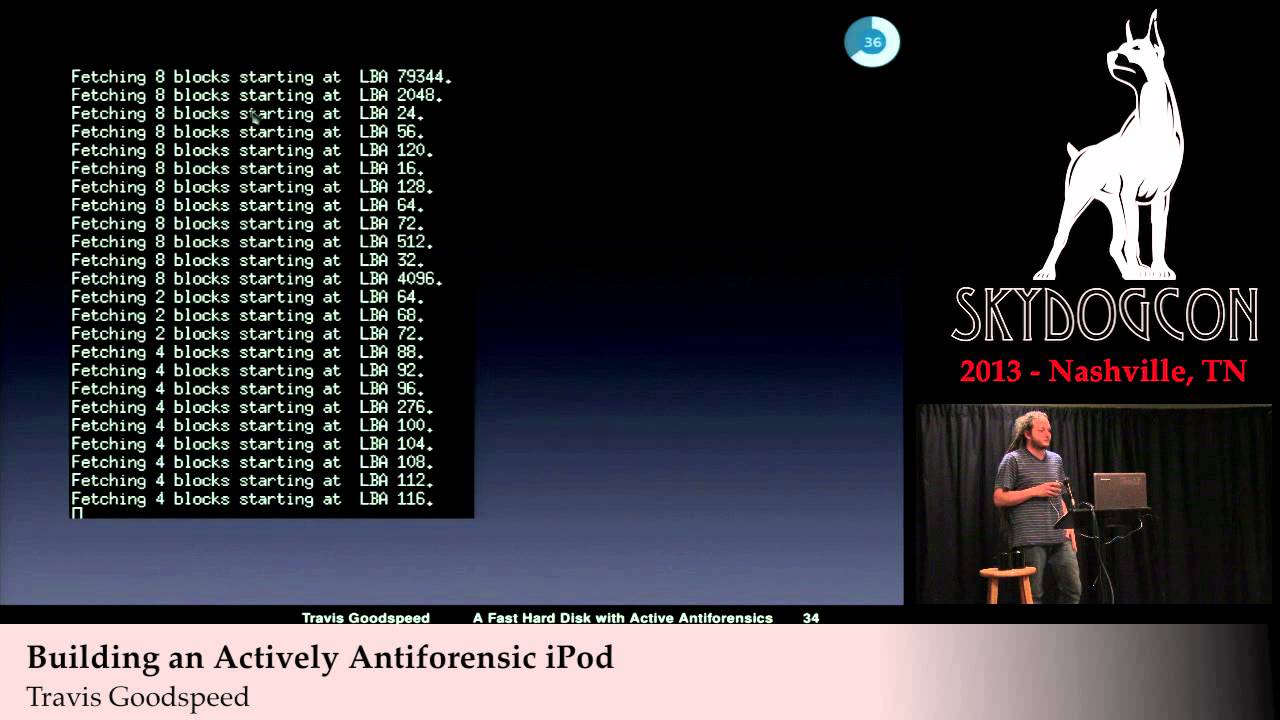

I cannot find a recording of it, but (see edit #2) I vaguely remember there was a talk someone gave about hard drive hacking, where he/she contemplated modifying hard drive firmware to recognise the difference in access patterns of Linux vs Windows vs a write-blocker that a data forensics team would use to prevent accidental tampering during the drive cloning process you describe.

One could connect this sort of detection to trigger the OPAL/ATA Security Erase functionality and/or TRIM across the entire drive. You could try to characterise the behaviour of your EFI and/or GRUB, or it might even work to simply have the drive watch out for “no writes received for _ seconds after first _ MiB read”.
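The "no writes after N MiB read" heuristic could be modelled like this. It is plain Python simulating the logic, not firmware, and both thresholds are deliberately left as parameters since I have no idea what good values would be. `ImagingHeuristic` and its method names are invented for the sketch, and it reflects my reading of the rule: any write since power-on clears suspicion.

```python
from typing import Optional


class ImagingHeuristic:
    """Flags access patterns that look like read-only imaging of the drive."""

    def __init__(self, read_threshold_bytes: int, quiet_seconds: float):
        self.read_threshold_bytes = read_threshold_bytes
        self.quiet_seconds = quiet_seconds
        self.bytes_read = 0
        self.saw_write = False
        # Time at which the cumulative read threshold was first crossed.
        self.threshold_time: Optional[float] = None

    def on_read(self, nbytes: int, now: float) -> None:
        self.bytes_read += nbytes
        if (self.threshold_time is None
                and self.bytes_read >= self.read_threshold_bytes):
            self.threshold_time = now

    def on_write(self, now: float) -> None:
        # A normal OS boot writes fairly soon; a write blocker never does.
        self.saw_write = True

    def looks_like_imaging(self, now: float) -> bool:
        if self.saw_write or self.threshold_time is None:
            return False
        return now - self.threshold_time >= self.quiet_seconds
```

A real implementation would have to survive things like booting a live USB with the drive mounted read-only, which would look identical, so false positives are a real risk with anything this simple.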

If nothing else, having paranoid developers working on something like this would result in some very interesting HDD or SSD firmware reverse-engineering work that we might all benefit from.

Edit: Someone else on StackExchange law had the same idea.

Edit 2: I finally found it, it was a talk by Travis Goodspeed; here is a recording from SkyDogCON 2013: