That sounds like a very good idea. Maybe one day, when I'm not broke anymore, I'll do it.

There are some refurb HGST enterprise drives out there with 3-year warranties.

4TB for ~$70

From experience, Seagate drives are garbage: they work fine … till they don't. Most of the time there's no warning; SMART was showing the drive as healthy and yet it died. At least with WD you usually get a heads-up before failure.

When you buy drives for a NAS, just remember: the more drives you have together, the more they affect each other.

1 to 4 drives: WD Blue are fine.

I'd start using Red at 4, but they're not a must till 8+, to be honest.

20+: use Red Pro or enterprise.

As a permanent solution, external drives are too vulnerable, and USB is way too flaky for reliable long-term use. Those are best for offline backup, not running storage.

Ruffalo, the price is not the same everywhere. For example, the WD My Book 8TB USB 3.0 External Desktop Hard Drive (WDBBGB0080HBK) is $320 in Australia. You shouldn't assume the OP is in the US.

Anyway, I'm gonna go in the opposite direction from everyone else about drive sizes. When everyone says buy big and Ruffalo says one should never buy below 6TB in 2019, I completely disagree.

I have probably 120 HDDs running here and none is above 4TB. The reason is that SATA drive throughput isn't fast enough.

In RAID, drives always rebuild slowly, especially if the system is in use: it's not rare for a 2TB drive to take 24 hours to rebuild. While this is the main reason why bigger is not always better, it's not the only one.
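To put rough numbers on the rebuild point, here is a minimal sketch. It assumes rebuild time scales linearly with drive capacity at the same effective rate, which is a simplification (ZFS, for example, only resilvers allocated data). The function names are made up for illustration; the 2TB / 24 hour figure is the one quoted above.

```python
def effective_rate_mb_s(capacity_tb: float, hours: float) -> float:
    """Average rebuild rate in MB/s implied by a capacity and a rebuild duration."""
    # 1 TB = 1,000,000 MB (decimal units, as printed on drive labels)
    return capacity_tb * 1_000_000 / (hours * 3600)


def rebuild_hours(capacity_tb: float, rate_mb_s: float) -> float:
    """Hours needed to rebuild a full drive at a constant rate (assumed, not measured)."""
    return capacity_tb * 1_000_000 / rate_mb_s / 3600


# Rate implied by "2TB takes 24 hours" on a busy system
rate = effective_rate_mb_s(2, 24)
print(f"implied rate: {rate:.1f} MB/s")

# Same rate applied to bigger drives
for size_tb in (2, 4, 8):
    print(f"{size_tb} TB: {rebuild_hours(size_tb, rate):.0f} h")
```

At that implied rate an 8TB drive would need roughly four days, which is the window in which a second failure hurts.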

With big drives you end up with a small number of drives in your RAID. If you only have 1 parity drive, you are more than likely to lose everything when a second drive fails during the massive amount of time an 8TB drive takes to rebuild. And if you don't change the drive right away and all your drives are the same age, a second one might even fail before the rebuild starts.

Next, let's imagine you have 2 8TB drives in RAID 1: the read and write speed will be just abysmal. If your main system has an SSD, try going to an HDD-only system for a few days. You might be able to have 1, maybe 2 users at most, or you will have sporadic performance.

RAID 5 and RAID-Z1 have the same single-parity issue despite needing 3 drives minimum, but slightly better throughput… sometimes. The overhead is troublesome at times.

If you look at RAID 6 or Z2, it requires at least 4 drives (6 or 10 is better).

The benefit is simple: better read and write speed, and 2 parity drives help a lot when rebuilding.

Then you have what I use, RAID-Z3.

My chassis have 24 drive bays, with 2 pools of 11 live drives and 1 hot spare each.

The main pools are all made of 2TB drives, and the pools where they are duplicated use 4TB drives.

ZFS memory usage really isn't bad as long as you don't use deduplication.

Running a 24 x 4TB system (11+1 x2) on 8GB of ECC RAM works well.

Meanwhile, with dedupe, well, that's a mixed bag… The 1GB per TB rule is just a guideline, and sometimes it's not enough. From experience, Z3 with 11 x 2TB + 1 hot spare, x2, gives about 31TB of usable space, but the system would never work properly with 32GB of RAM. It just wouldn't.
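For anyone checking the math, a rough sketch of where that capacity figure comes from. It only counts data drives (total minus parity) and ignores ZFS metadata and padding overhead, so it lands slightly above the ~31TB quoted. The helper name is hypothetical, and the 1GB-per-TB figure is the guideline mentioned above, not a guarantee.

```python
def raidz_data_tb(drives: int, parity: int, drive_tb: float) -> float:
    """Nominal data capacity of one RAID-Z vdev: non-parity drives times drive size."""
    return (drives - parity) * drive_tb


pools = 2
# RAID-Z3 means 3 parity drives per pool; hot spares hold no data
usable_tb = pools * raidz_data_tb(drives=11, parity=3, drive_tb=2)

# Dedupe guideline from the post: about 1 GB of RAM per TB of storage
dedupe_ram_gb = usable_tb * 1

print(f"nominal usable space: {usable_tb:.0f} TB")
print(f"dedupe guideline RAM: {dedupe_ram_gb:.0f} GB")
```

So the guideline lands right at 32GB for this layout, which leaves no headroom and fits with the experience that 32GB wasn't enough.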

You don’t use them over USB, you crack them open, pull out the WD blues inside, and use them in your NAS.

Backblaze used to do that, and they found that the process actually makes the drives die a lot more. It's fully documented and not recommended.

You shouldn't assume we don't know where gek lives.

For the record the easystores are the ones you wanna go for if you’re trying to shuck on the cheap.

Good comeback, but it's just better to generalize rather than localize based on an assumption, that's all I meant. Plus, others might stumble on the conversation for their own needs too; the wider scope is a good thing overall.

Source link, please. Shucking is extremely common and I’ve done it for years myself.

Sure, I'll need a little time. It was one of their blog posts a few years back.

Thing is, the savings from shucking are 30-50% these days, so their increased failure rates would need to be over that same 30-50% to make that argument.
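A back-of-the-envelope way to check that break-even claim. This is a sketch under a deliberately simple model: every failed drive is rebought at full price, and warranty coverage, downtime, and the cost of lost data are ignored. The 5% failure rate for internal drives is a made-up placeholder, not a measured figure.

```python
def expected_cost(price: float, failure_rate: float) -> float:
    """Expected spend to end up with one working drive if failures are rebought at full price."""
    return price / (1 - failure_rate)


def break_even_failure_rate(discount: float, internal_failure_rate: float) -> float:
    """Shucked-drive failure rate at which expected cost equals the internal drive's."""
    # Solve (1 - discount) / (1 - p_shucked) == 1 / (1 - p_internal) for p_shucked
    return 1 - (1 - discount) * (1 - internal_failure_rate)


internal_rate = 0.05  # hypothetical failure rate for retail internal drives

for discount in (0.30, 0.50):
    p = break_even_failure_rate(discount, internal_rate)
    print(f"{discount:.0%} cheaper: break-even at {p:.1%} shucked failure rate")
```

Under those assumptions the shucked drives would have to fail several times as often as the internal ones before the savings disappear, which is roughly the argument being made.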

Again, that's relative. Right now, if I want to go out and buy a drive, I can get:

4TB Blue for 159

4TB Red for 169

4TB external for 174 (WDBBGB0040HBK)

Look at the 8tb easystores where you are. I’m curious.

OOF, AUD is bad

https://www.ebay.com.au/sch/i.html?_from=R40&_trksid=m570.l1313&_nkw=8tb+wd+easystore&_sacat=0

still not the 320 you were just quoting though.

Sure. Here in the US, 6TB external WD blues are commonly $99 and 8TB $130. Yesterday there was a deal on the 10TB model for $146.
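For comparison, those US prices work out per TB as follows. Quick sketch only: the numbers are the deal prices quoted above in USD, so they can't be compared directly with the AUD prices earlier in the thread.

```python
# US external drive prices quoted above: capacity in TB -> price in USD
us_prices = {6: 99, 8: 130, 10: 146}

# Price per TB for each capacity
per_tb = {size: price / size for size, price in us_prices.items()}

for size, cost in per_tb.items():
    print(f"{size} TB: ${cost:.2f}/TB")
```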

I couldn't find the article I remember, but I found something that helps your side … and it also talks about Seagate's insane failure rate.

Theory – Shucking External Drives

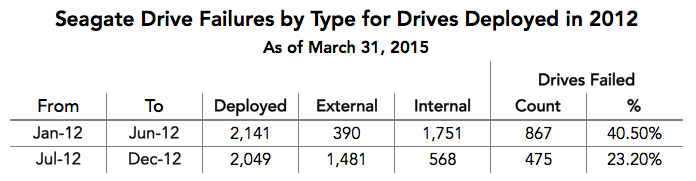

A third thing to consider was the use of “External” drives. Did the “shucking” of external drives inflate the number of drive failures? Consider the following chart.

From January to June most of the drives deployed were internal, but the percentage of drives that failed is higher during that period versus the July through December period where a majority of the drives deployed were external. In practice, the percentage of drives that failed is too high during either period regardless of whether or not the drive was shucked.

Adding to this is the fact that 300 of the Hitachi 3TB drives deployed in 2012 were external drives. These drives showed no evidence of failing at a higher rate than their internal counterparts.

Somehow I don't trust refurb, but I'm probably wrong.

Actually the latest data shows their higher capacity drives are pretty good.

I wouldn't either, but it's enterprise, it came with a 3-year warranty, and it's HGST.

BTW, about external drives, they are more expensive here

do you like having data?