Situation

My setup is rather simple: a NAS running FreeNAS, my workstation (WS), and a server for testing stuff (TS).

They are each directly attached to the NAS via a dedicated 40GbE link that is separate from everything else.

So WS to port 0 and TS to port 1.

Adding a switch is sadly not an option. If it were, this would be easy and I wouldn’t need to ask here.

What I want

I want to have the NAS reachable on the same IP from both WS and TS.

Remembering that it’s …2.1 on my WS and …3.1 on TS is too much for me.

Since they are on different ports with different MACs, and the clients have different IPs that will hopefully never collide, I think that should be possible! Is it? Why shouldn’t it be?

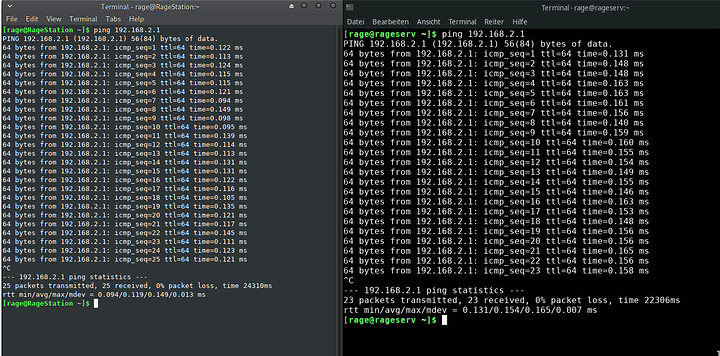

On the WS: ping …2.1 to the NAS should work,

on the TS: ping …2.1 to the NAS should also work,

and from the NAS, …2.10 to the WS and …2.20 to the TS should also work.

Complicating things, FreeNAS isn’t that accommodating in these edge cases, and performance over the 40GbE links is another concern.

What I have tried so far

Bridging

This was the first thing that came up while searching around, and it seemed promising. FreeNAS needed some tunable “bodging” to configure the interface permanently, since there is absolutely no UI capability for that.

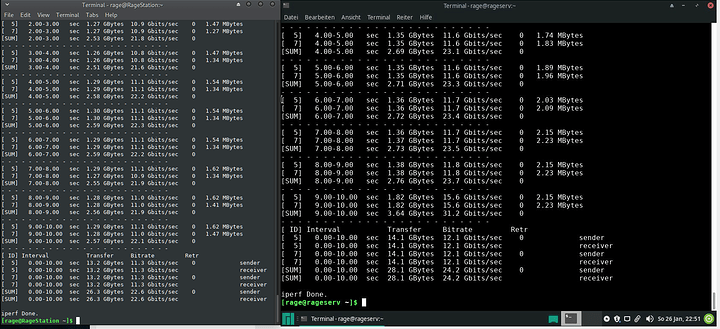

That solution did its job until I noticed the horrible performance I was getting over it.

For no apparent reason, it was capping me at about 11.4 Gbit/s, possibly with far more latency than I would expect, which may explain the horrible experience I had network-booting Windows about a year ago.

Another thing is that I don’t need the clients to communicate with each other, which they can in this case.

Possible reasons?

- Since the NAS is powered by a Xeon E5 2628L V4, with 1.7 GHz boost and 1.5 GHz base, the CPU could be an issue. But I’m talking about iperf3 benchmarks here, where the client uses multiple connections / threads to mitigate the single-thread performance limit. The number of threads didn’t matter: about 11.4 Gbit/s, where it easily did 32 Gbit/s on 4 threads before I set up the bridge.

- I noticed that static IP configs would get stuck at a similar limit once they were reconfigured at runtime, hinting at some software reset or configuration issue. If that is the cause, I don’t know how to mitigate it, since I only managed to fix it with a restart. After the restart, the new configuration is the default for the NIC, which then comes up with it from the start. That seems impossible on the bodged bridge because of the way it is bodged.
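For comparing runs like the multi-stream iperf3 tests above, the numbers can be pulled from iperf3's JSON report (`iperf3 --json`) instead of being read off the terminal. A minimal sketch, assuming a TCP test whose report contains the usual `end.streams[].receiver.bits_per_second` and `end.sum_received.bits_per_second` fields. The function name `summarize` and the sample values are made up for illustration and are not measurements from this setup.

```python
import json


def summarize(report: dict) -> dict:
    """Return per-stream and total receive throughput in Gbit/s."""
    end = report["end"]
    per_stream = [
        stream["receiver"]["bits_per_second"] / 1e9 for stream in end["streams"]
    ]
    total = end["sum_received"]["bits_per_second"] / 1e9
    return {"per_stream_gbit": per_stream, "total_gbit": total}


# Hypothetical stand-in for a saved report; a real one would be loaded
# with json.load() from the file iperf3 wrote.
sample = json.loads("""
{
  "end": {
    "streams": [
      {"receiver": {"bits_per_second": 1.0e9}},
      {"receiver": {"bits_per_second": 2.0e9}}
    ],
    "sum_received": {"bits_per_second": 3.0e9}
  }
}
""")

result = summarize(sample)
print(result)
```

If every stream sits at roughly the same low value regardless of how many streams run, the cap is more likely in the forwarding path than in a single busy thread.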

LAGGs

It seems reasonable to mess around with those, since FreeNAS at least has some UI support for them.

Besides the NICs to add to the group, one can choose a mode.

- LACP is out of the question. It is way too complicated, and in theory it should detect a “split LAG” and prevent it from accidentally working. I tried it and no, it’s not really working.

- Failover works great as long as only one client is active. There is a setting to listen on all ports, but that is still beside the point. Once both are active, one is simply ignored.

- Broadcast simply sends everything out on all the NICs and listens on all of them. This works fine, with the only exception that TS can receive what the NAS is sending to WS. Not really an issue, but there should be a better solution!

- Round robin is stupid and won’t work at all.

- Load balancing is the most promising. It uses some poorly explained “FlowID” and hash functions / tables to decide which packet to send on which port.

LAGG Loadbalancing

It is working for me once in a while, PROVING that it is possible somehow.

Why once in a while?

Trial and error landed me on the hash setting L2, which should mean that only the Layer 2 info in the packet is used to determine the port it gets sent out on.

L3, L4, or any combination of those always changes it so that none or only one of the clients is pingable.

The default the interface comes up with is l2,l3,l4, which I can change easily.

The only issue is that after a reboot it no longer works, even with the L2 setting.

It seems that in those moments the decision where to send the packet is the wrong one: each packet is placed on the link where it shouldn’t be, and so never reaches its target.

Once in a while, while initiating a link, it switches over and puts it the right way, and it works.

With Wireshark I could not capture any packets meant for TS on the link to WS.
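The "works once in a while" behaviour described above is what one would expect if the egress port is chosen purely by a hash, with no knowledge of which client sits behind which port. The following is a toy model only, not FreeBSD's lagg code: it assumes the hash covers just the source and destination MAC and is mixed with a seed that may differ between boots or re-attaches. The seed, the SHA-256 hash, and all MAC addresses are hypothetical.

```python
import hashlib


def egress_port(src_mac: str, dst_mac: str, seed: int, num_ports: int = 2) -> int:
    """Pick an egress port from a seeded hash of the L2 addresses only."""
    data = (
        seed.to_bytes(4, "big")
        + bytes.fromhex(src_mac.replace(":", ""))
        + bytes.fromhex(dst_mac.replace(":", ""))
    )
    digest = hashlib.sha256(data).digest()
    return int.from_bytes(digest[:4], "big") % num_ports


# Hypothetical, locally administered MAC addresses.
NAS = "02:00:00:00:00:01"
WS = "02:00:00:00:00:10"  # cabled to port 0
TS = "02:00:00:00:00:20"  # cabled to port 1


def works(seed: int) -> bool:
    """True only if the hash happens to match the physical cabling."""
    return egress_port(NAS, WS, seed) == 0 and egress_port(NAS, TS, seed) == 1


working = sum(works(seed) for seed in range(1000))
print(f"{working} of 1000 seeds send both clients' traffic out the right port")
```

With two ports and two clients, a uniform hash would match the cabling for only about a quarter of the seeds, so under these assumptions a working configuration after a reboot is a matter of luck rather than of the L2 setting itself.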

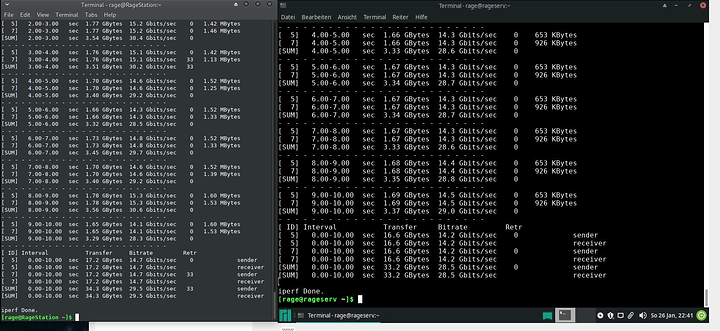

Performance is at least close to the expected 32 Gbit/s that I can get with my crappy QDR cables.

This is a sequential run, so WS left, TS right.

In parallel it’s completely fine too.

final

Since this is not working consistently out of the box and I’m a bit lost on where to look next for settings, tweaks, hacks, and whatever else, I would really appreciate anything from you guys.

Even a little flaming that I should just get a switch and that none of this is how it was intended.

Other solutions would make my day. I have searched the web for a while and have no clue what to search for next. The amount of unrelated “HOW to X” is infuriating, and I have the feeling I’m the only one who wants this. Or I’m too fucking stupid again. It wouldn’t be the first time that I wasted my time for multiple years.

Thanks anyway

Hope the read was enjoyable.