I decided to do some work on this again after forgetting about it for about 3 months. Not really sure why, I think I was busy with work for a while and then stopped thinking about it.

But I made some progress this weekend and that is the important part.

Focus detection in Bayer

The depth estimation is based on a Laplace edge kernel using the fact that blurry areas also means softer edges, so areas which are in focus will give a larger response. So making sure the edge detection is as good as possible should improve the results.

So the question is, what is the best way of doing this? Should it be done on each color channel separately or done by calculating the luminance and doing it on that? ETC. The open-source program for focus stacking does the luminance approach and then does some extra gradient checks in attempt to fight bokeh issues fake edges appearing in out-of-focus shots next to the real edge.

I was thinking that this might be caused by the color channels going out of focus differently. Lenses causes issues with color channels, such as chromatic aberration causing colors to be shifted depending on the angle to the center of the sensor. Similarly, how out-of-focus areas are can be different for red, green, and blue. So the right approach to fight this issue might be to work with red/green/blue separately.

The problem here is that demosaicing correlates the color channels and combines them in a non-reversible way and then color correction later mixes them up in a way unknown to you. So when you get the image out of your RAW processor, there is no way to get the original red/green/blue channels. So that means skipping the RAW processor and working directly on the RAW bayer data.

Oh my, look at that noise…

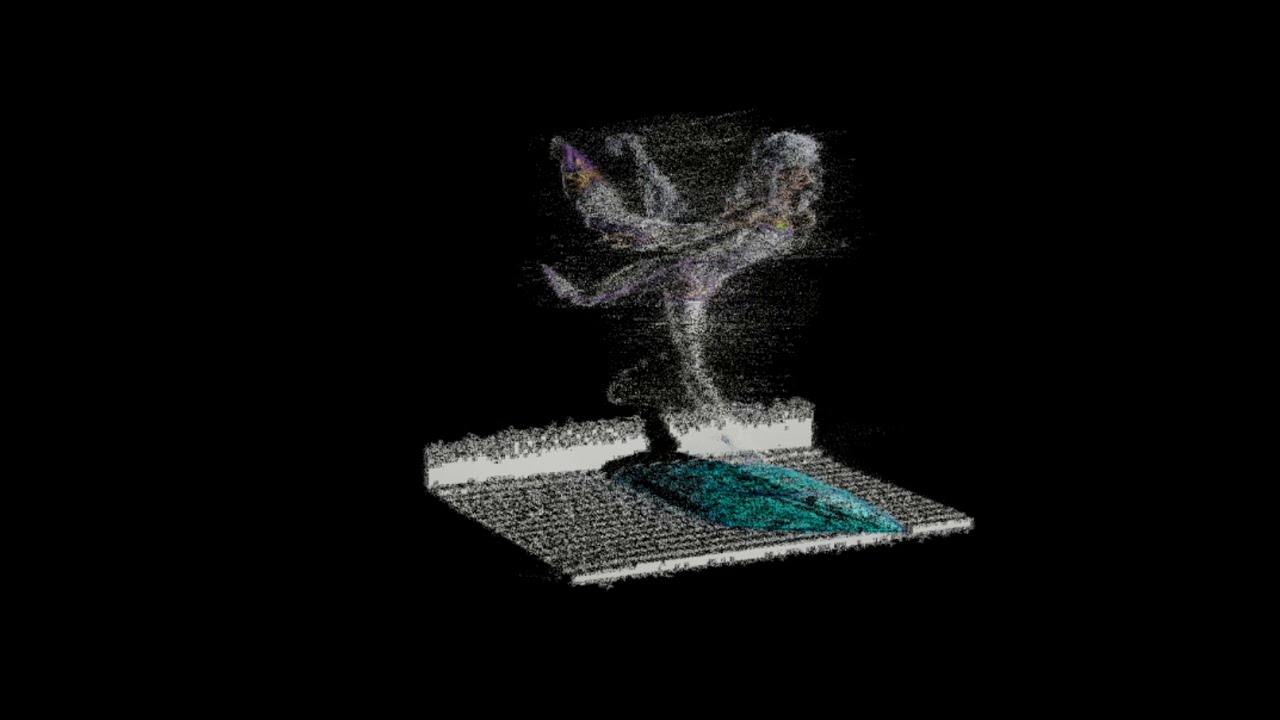

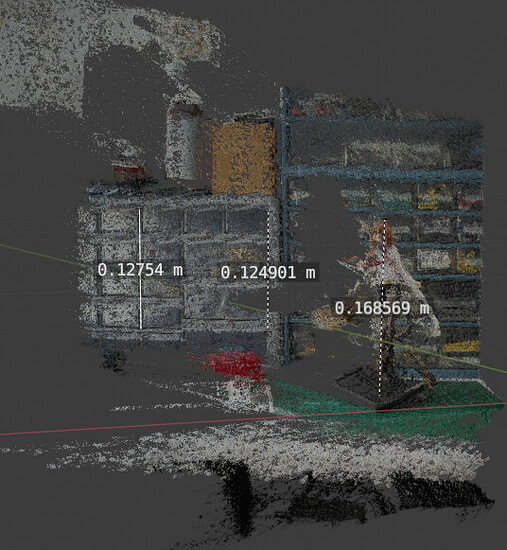

I used libraw to get the RAW data from the images and converted the bayer pattern into a four channel RGGB image. Then I performed the edge detection on just one of the green channels, which gave this result (the maximum response of all 31 images in the focus stack):

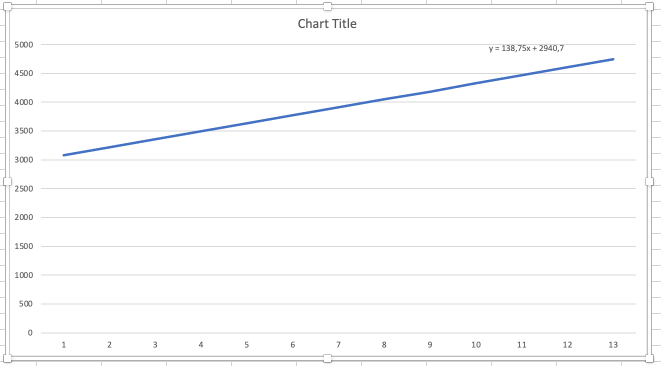

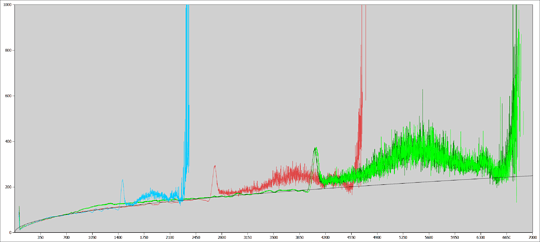

An edge detector should give black for flat areas but there large areas which are obviously brighter. These happen to be bright areas in the image and I remember the DNG RAW file format specifying a noise profile where:

noise = sqrt( pixel_value * scale + offset )

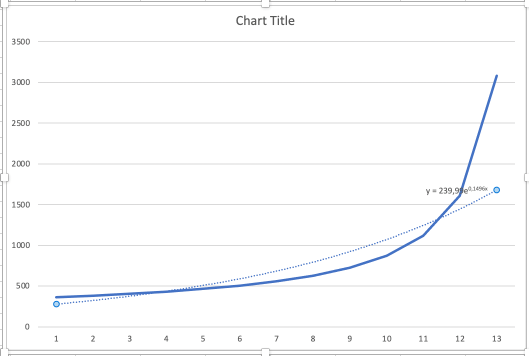

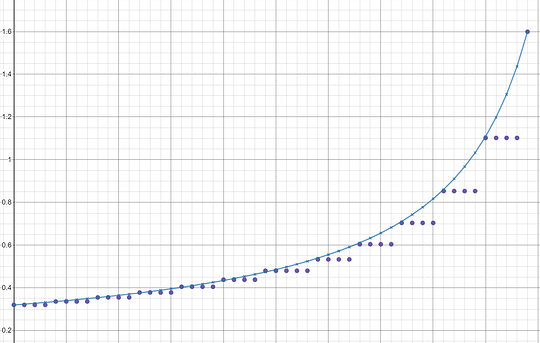

meaning that brighter areas in the image contains more noise. Going by this idea, I made a histogram of the response of the edge detection compared to the pixel value:

If we look at the first half, it follows such a square root functions quite nicely, which I plotted in black. (The offset could probably make it closer at the very beginning, but I didn’t bother.) The second half of the curve goes more crazy, but that must be the image content affecting the results, as you can see it the same response earlier in the red and blue channels. The red and blue channels are usually less sensitive and needs to be scaled to match the green channel, but you can see they follow the same noise curve before scaling.

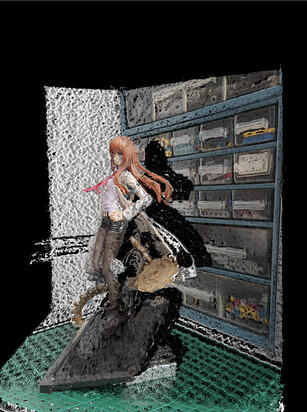

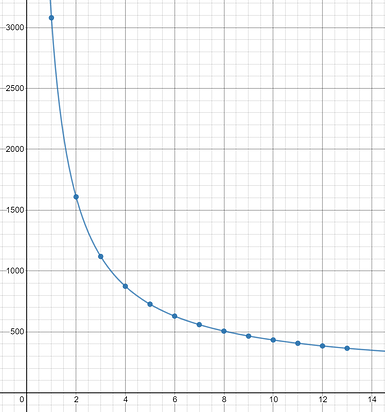

Subtracting this noise level from the image fixes the issue:

This is still not quite perfect, as this just substracts the mean value, so bright areas are still more noisy. There are also some hot-pixels as well, which was normally removed by the RAW processor.

Did it get better

Not really, at the very least not by a significant amount. The edges does appear to be thinner and less blurry, but I will need to investigate closer to see if the “color channels getting mixed” actually caused issues here. I think this approach might be better, but it is clear that this isn’t what is needed to improve the result at the current time.

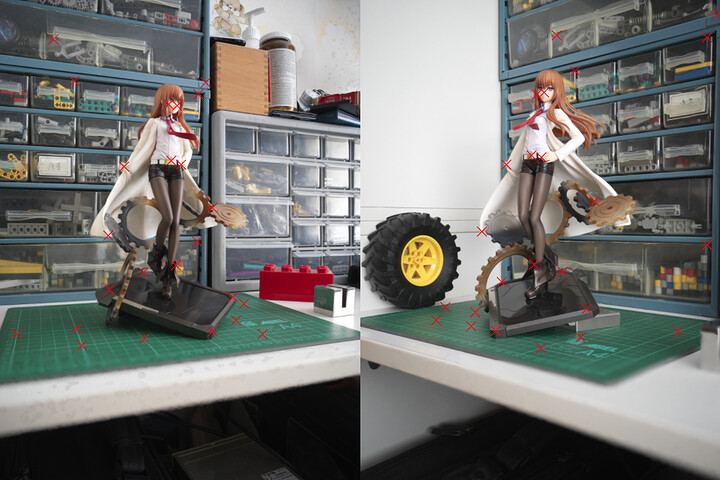

I also noticed that the image appear to be distorting slightly in the out-of-focus sections. If you compare a shot where the focus is before the subject and one where the focus is behind, the image appear to move. The current focus position might be affecting the FOV or something, which could also be the reason for these “fake edges”, and it certainly will matter for the alignment of multiple images.

Better filtering

One thing that caught my eye was that these large flat areas with seemingly no information seem to somewhat work. It is very noisy, but the results tend to bias towards the right result anyway.

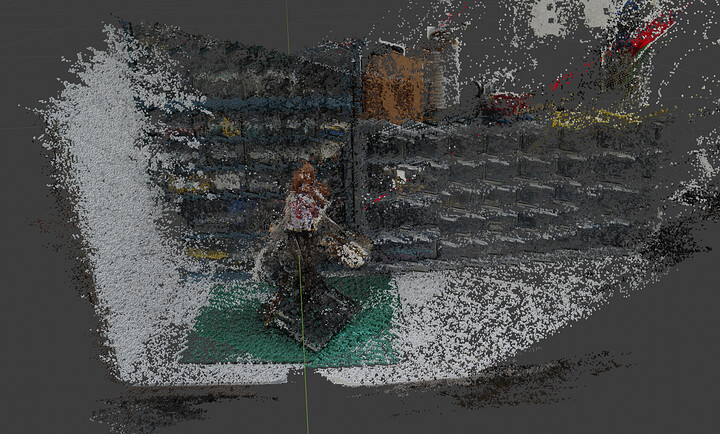

Here you can see the 3 edges being in focus, but the flat area is just one big noisy mess. But I tried downscaling the image, and this suddenly looks more interesting:

The area you see in the top image is on the left part of the image, where you can see the three lines being in focus. And it is a bit difficult to tell, but you might be able to see in this downscaled image that there is a lightly brighter vertical line around that spot.

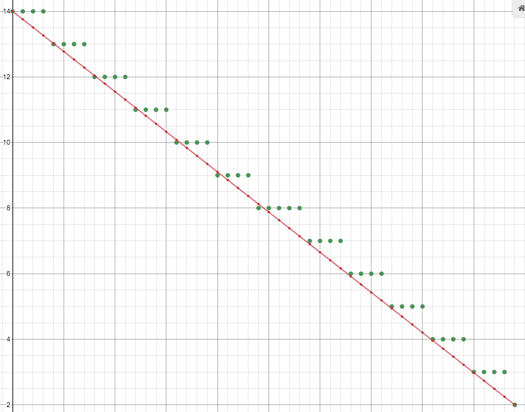

Seeing this, I tried to make a pyramid based approach where I keep downscaling by 2 and then walks up from the lower resolution, adding the more detailed information from the higher resolution, but using the lower resolution if it seems unreliable.

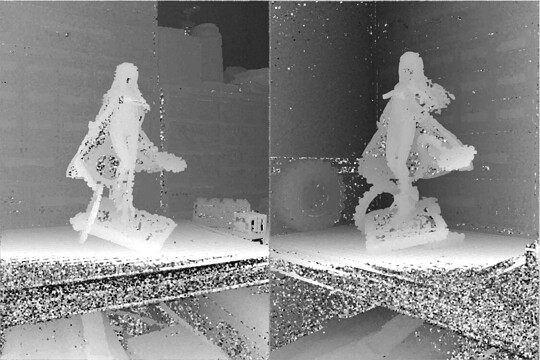

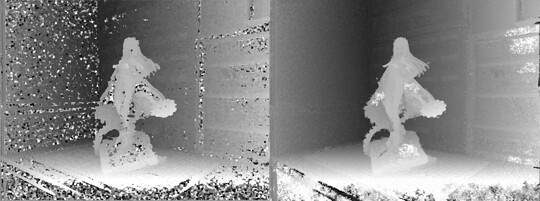

And this gave some promising depth maps:

Left is the old result and to the right is the new result. It is still noisy, but it is much more fine grained and detailed so I can filter it and still have more detail after the fact. There is some other issues though, small areas where you can peak through (such as the gears in the figure) can end up disappearing if I downscale too much, but if I don’t downscale enough noisy areas appear (the bright white spots in this image). And then there are some clear lines in the image where there are edges in the original image, which isn’t correct. So there is still work to be done here, but this looks promising.

Going forward

I will probably look some more on the filtering, to see how much I can improve it. Seeing I just took a 3 months break though, I’m not too sure how much focus I will have on this project, but I hope to get more done on it at least.