As I said in my post on the Level1Techs | Build a Router 2016 Q4 – pfSense Build thread, I am going to outline the changes I want to make to my network setup.

I am writing this to give my plans some substance, as they have only been in my head until now. It should also help motivate me to start the project, which I've been putting off for a while.

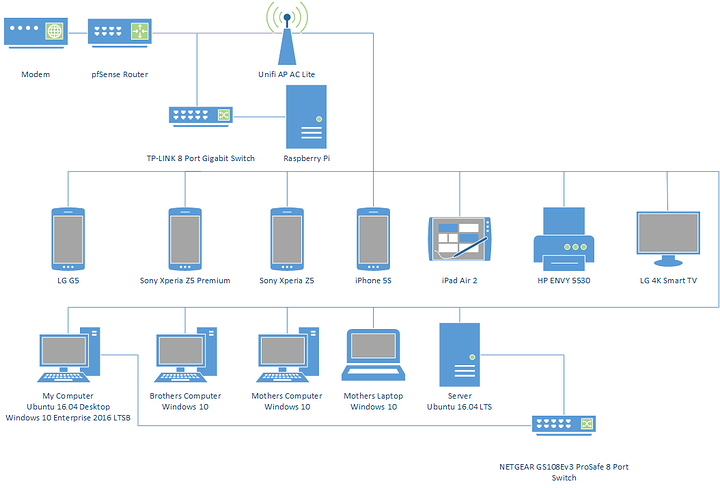

This project will consist of two parts. The first is an overhaul of how my server is set up and managed, and the second covers the changes I am going to make to the network to accommodate the new setup. Before I get into any of that, I feel it is best to show the network map of the current setup and outline some of the things I want to change.

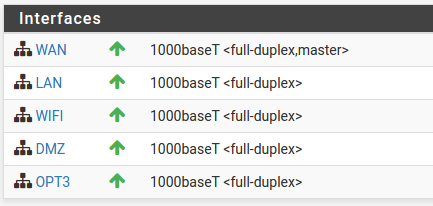

As you can see, almost everything is on wireless with the exception of my Raspberry Pi. This is because almost all of the computers in the house are upstairs and running cable is problematic (I have a plan for running the cable but will likely leave this until sometime next year). In the meantime, what I can do is move my server downstairs. At the moment it uses 2.4GHz 802.11n to connect to the pfSense router, which isn't ideal.

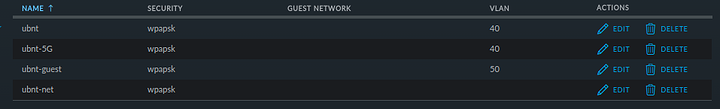

You may ask why my server has been using wireless this whole time. The answer is that I wanted a direct gigabit link between my main computer and the server for file transfers. As other people in my house have an increasing need to use some of the services on the machine, I feel that it's better to move it. It will also give me more options in how the server interacts with the rest of the network with regard to security, as it'll let me create some VLANs for the servers so that I can set up firewall rules to block traffic from untrusted devices on the network (the ones that I have no control over).
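To make the VLAN idea a bit more concrete, here is a rough sketch of the kind of rule set I have in mind, modelled in Python rather than in the pfSense GUI. The subnets, the allowed port and the `evaluate` helper are all assumptions for illustration, since I haven't picked any address ranges yet. The one thing it does mirror is that pfSense checks the rules on an interface from top to bottom, uses the first match, and blocks anything that matches nothing.

```python
import ipaddress

# Hypothetical subnets: the real VLAN ranges have not been decided yet.
SERVER_VLAN = ipaddress.ip_network("192.168.10.0/24")
UNTRUSTED_VLAN = ipaddress.ip_network("192.168.20.0/24")
ANYWHERE = ipaddress.ip_network("0.0.0.0/0")

# (action, source network, destination network, destination port or None for any)
# Order matters: rules are checked top to bottom and the first match wins.
RULES = [
    ("pass", UNTRUSTED_VLAN, SERVER_VLAN, 443),    # allow HTTPS to the servers (assumed)
    ("block", UNTRUSTED_VLAN, SERVER_VLAN, None),  # block everything else to the servers
    ("pass", UNTRUSTED_VLAN, ANYWHERE, None),      # allow the rest, e.g. internet access
]


def evaluate(src, dst, port):
    """Return 'pass' or 'block' for one connection attempt."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    for action, src_net, dst_net, rule_port in RULES:
        if src_ip in src_net and dst_ip in dst_net and rule_port in (None, port):
            return action
    return "block"  # nothing matched: default deny


if __name__ == "__main__":
    print(evaluate("192.168.20.5", "192.168.10.2", 443))  # HTTPS to a server
    print(evaluate("192.168.20.5", "192.168.10.2", 22))   # SSH to a server
    print(evaluate("192.168.20.5", "8.8.8.8", 53))        # traffic leaving the network
```

The block rule has to sit above the general pass rule, otherwise untrusted devices would match the pass rule first and reach the servers anyway.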

The server

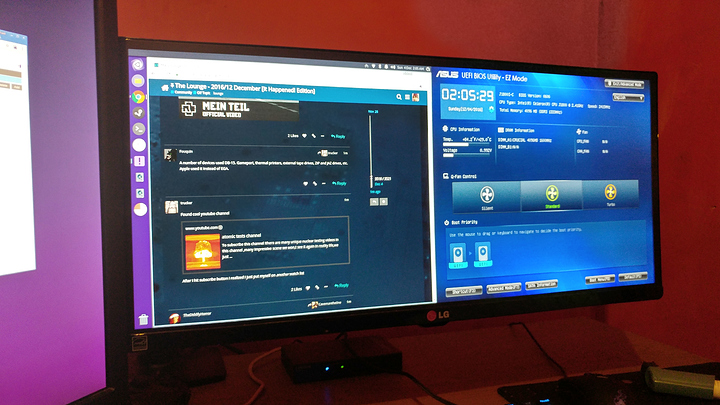

The server in question is the little black box in the picture. It has a low-power dual-core Intel Celeron J1800 clocked at up to 2.58GHz and a single 4GB stick of DDR3L @ 1333MHz. That is fairly unimpressive, but it is almost completely silent and has performed decently well for the workload I put on it.

At the moment it is running Ubuntu Server 16.04 as the host and has several LXC containers running various services:

| NAME | STATE | AUTOSTART | GROUPS | IPV4 | IPV6 |
| --- | --- | --- | --- | --- | --- |
| database-server | RUNNING | 1 | - | 10.0.3.211 | - |
| repo-server | RUNNING | 1 | - | 10.0.3.51 | - |
| web-server | RUNNING | 1 | - | 10.0.3.185, 192.168.4.6 | - |

The changes to the server configuration revolve mostly around how these services are containerised, as I want to move them all to VMs if possible and change the host OS from Ubuntu 16.04 to Proxmox to aid in the management of the system.

I am acutely aware that the hardware may not be up to the task. However, this system has been live for a long time and I have a good idea of what resources each container uses in terms of CPU and memory, so it should be doable if I install another stick of DDR3L. The only container I am worried about is the repo-server: it is running GitLab, and the Sidekiq process uses a significant amount of memory compared to other services on the machine.
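The memory question boils down to simple arithmetic, so here is a small sketch of the check I would do before committing to VMs. Every number in it is a placeholder rather than a measurement from my server, the 8192 MiB total assumes the extra stick is another 4GB, and `fits_in_ram` is just a helper made up for this example.

```python
def fits_in_ram(total_mib, host_reserve_mib, vm_allocations_mib):
    """Return (fits, spare_mib) for a set of planned VM memory allocations."""
    needed = host_reserve_mib + sum(vm_allocations_mib.values())
    spare = total_mib - needed
    return spare >= 0, spare


# Placeholder allocations in MiB, NOT real usage figures from the containers.
planned = {
    "database-server": 1024,
    "repo-server": 4096,  # GitLab with Sidekiq is the heavy one
    "web-server": 512,
}

if __name__ == "__main__":
    # Assumed: 4GB today, 8GB after adding a second 4GB stick, 1GB kept for the host.
    for total in (4096, 8192):
        fits, spare = fits_in_ram(total, host_reserve_mib=1024, vm_allocations_mib=planned)
        print(f"{total} MiB total: fits={fits}, spare={spare} MiB")
```

Unlike containers, VMs hold on to the memory they are given, so the allocations need some headroom over what each container uses today.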

In the meantime, until I get another stick of DDR3L, I decided to set up a test environment on the machine, using an old hard drive and a realistic workload, to gauge whether it was up to the task before putting it into production. All was going well until the installation failed and I ran out of time, as the server needed to be put back into service.

After this setback I am somewhat undecided on whether I should go ahead with the server changes, mostly because of the amount of work required to migrate everything over, as well as all the testing. I might bench this part of the project until next year and continue with the network upgrades, as most of them can be done irrespective of my server configuration and would be much more fun and interesting to me, but I digress.

This is just a quick(ish) introduction to some of the things I want to do on my network, and I will update it regularly through 2016-2017 as I make significant changes to the setup. So far these are some of the things I want to do:

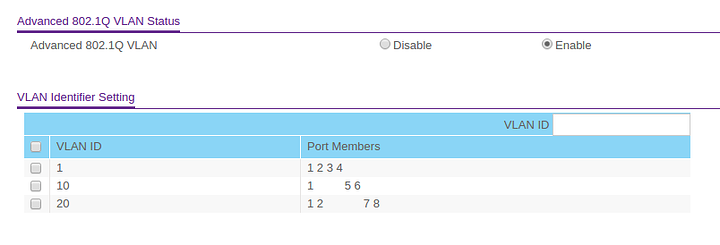

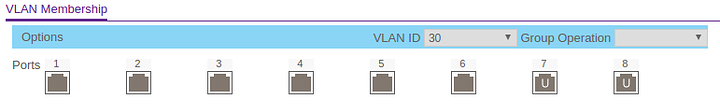

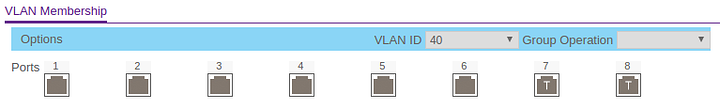

- Setting up VLANs on my GS108Ev3 switch

- Traffic shaping

- Running cable

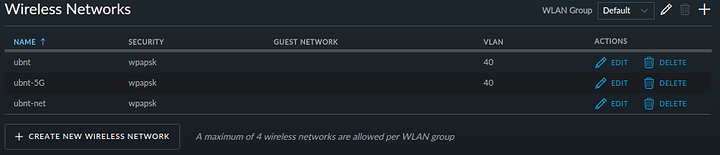

- Possibly some updates to my wireless network

Finally, I have only been using pfSense for around 6 months, so I am fairly new to this and want to learn as much as I can. I will undoubtedly make mistakes, but I am fine with that and would welcome any feedback on changes I could make to improve the security, performance and reliability of my network.