Alright, here's where things stand. Last night I couldn't get the Docker daemon to start automatically, so I had to run it by hand:

    sudo dockerd

and then leave a terminal window open to monitor it, because it couldn't be left unattended in that state.

Ran Portainer; it was fine. Ran Nextcloud, and it was still throwing the strange request error. I nuked the Nextcloud setup and started over with a new config:

    version: '3.2'

    services:
      nextcloud:
        image: nextcloud:latest
        container_name: nextcloud
        restart: always
        volumes:
          - /srv/nextcloud:/var/www/html
          - /srv/nc_apps:/var/www/html/custom_apps
          - /srv/nc_config:/var/www/html/config
          - /srv/nc_data:/var/www/html/data
        ports:
          - "8080:80"
        environment:
          NEXTCLOUD_TRUSTED_DOMAINS: 10.12.8.3 artixserv.txp-network.ml
          MYSQL_HOST: '10.12.8.2'
          MYSQL_DATABASE: nextcloud
          MYSQL_USER: root

I yeeted the Redis part, so it's just vanilla Nextcloud now. It installed without issue. I stopped and restarted the Nextcloud instance, and it was fine. I restarted the Artix VM and started Nextcloud again, with no issue. Then I had to go to bed, because it was way past midnight.

Came back tonight and powered on the Artix VM. The Docker daemon started successfully on its own, and now I'm afraid to run:

    sudo pacman -Syu

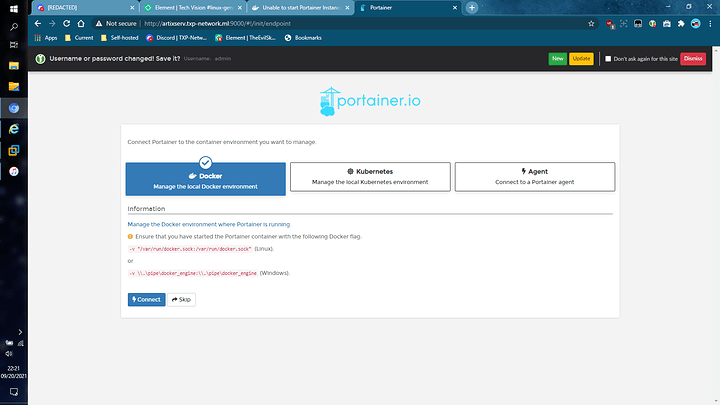

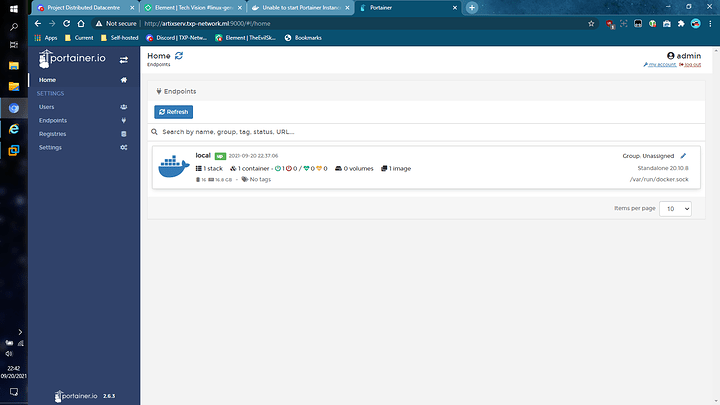

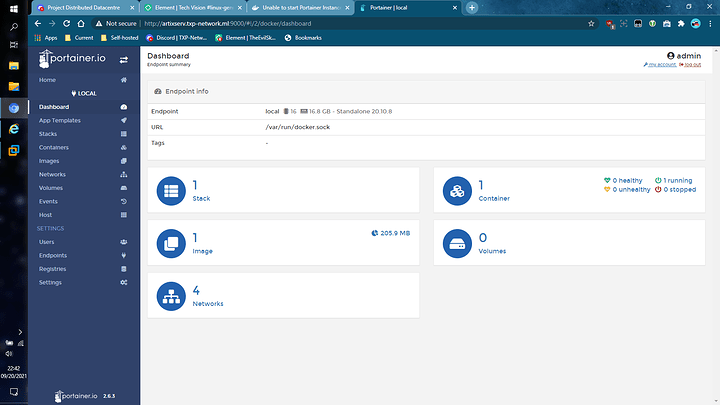

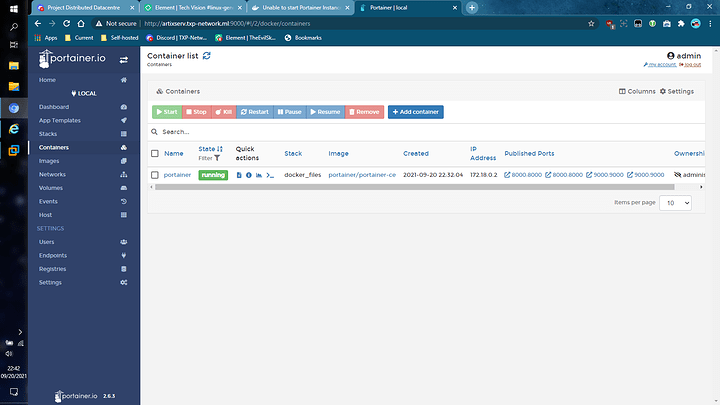

Portainer will most likely work just fine, but that container also autostarts and currently manages Nextcloud (as a custom stack). Time to see if Nextcloud will play ball.

EDIT: Nextcloud threw the same error as seen here:

It now appears to be an issue solely with the Nextcloud instance. Restarting the Artix VM had no effect on the functionality of the current setup either, so Artix doesn't appear to be the culprit at this point. I've created yet another compose file, because why not:

    version: '3.2'

    services:
      nextcloud:
        image: nextcloud:latest
        container_name: nextcloud
        restart: always
        volumes:
          - /srv/nextcloud/html:/var/www/html
          - /srv/nextcloud/apps:/var/www/html/custom_apps
          - /srv/nextcloud/config:/var/www/html/config
          - /srv/nextcloud/data:/var/www/html/data
        ports:
          - "8080:80"
        environment:
          NEXTCLOUD_TRUSTED_DOMAINS: 10.12.8.3 artixserv.txp-network.ml
          MYSQL_HOST: '10.12.8.2'
          MYSQL_DATABASE: nextcloud
          MYSQL_USER: root

The compose file isn't really where this attempt differs, though. I'm gonna target the MariaDB backend from here on. When I shut down and bring back up the VM host (ESXi), I'm also rebooting the MariaDB host, which would wipe any non-persistent settings every time it comes back. I also know for a fact that the MariaDB backend is the one consistent factor across all of my recent test runs. I use the same commands to set up the DB every time:

    DROP DATABASE nextcloud;
    CREATE DATABASE nextcloud;
    GRANT ALL ON nextcloud.* to 'admin'@'remotehost' IDENTIFIED BY 'password' WITH GRANT OPTION;
    ALTER DATABASE nextcloud CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci;
    SET GLOBAL innodb_read_only_compressed = OFF;
    FLUSH PRIVILEGES;
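Since the same statements get retyped before every fresh install, here is a minimal sketch that keeps them in one place and pipes them into the `mysql` client. The function name `nc_db_reset_sql` is hypothetical. `'admin'`, `'remotehost'` and `'password'` are the placeholders from the post and would need to be replaced. The only change to the statements is `DROP DATABASE IF EXISTS`, so the reset doesn't error out when the database is already gone.

```shell
#!/bin/sh
# Sketch only: prints the Nextcloud DB reset statements from the post so they
# can be piped into the mysql client instead of being retyped.
# nc_db_reset_sql is a hypothetical name; 'admin', 'remotehost' and 'password'
# are placeholders and must be replaced with real values.

nc_db_reset_sql() {
    cat <<'EOF'
-- IF EXISTS avoids an error when the database was already dropped
DROP DATABASE IF EXISTS nextcloud;
CREATE DATABASE nextcloud;
GRANT ALL ON nextcloud.* TO 'admin'@'remotehost' IDENTIFIED BY 'password' WITH GRANT OPTION;
ALTER DATABASE nextcloud CHARACTER SET utf8mb4 COLLATE utf8mb4_general_ci;
SET GLOBAL innodb_read_only_compressed = OFF;
FLUSH PRIVILEGES;
EOF
}
```

On the MariaDB host, something like `nc_db_reset_sql | sudo mysql` would run it, assuming root can log in over the local socket. Keeping the statements in a function instead of a loose `.sql` file is just a convenience, so the same script can be sourced from other helpers.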

These commands work for the initial run, which means there shouldn't be any major issues with how the DB was set up. I know the nextcloud DB and its tables aren't disappearing every time I reboot the VM server, because I have to drop the DB every time I nuke and remake Nextcloud (a pain in the @$$). BTW, it has ~107 lines/rows initially; I memorised that from the thousands of install attempts. I also know that the character set and collation should be persistent, so I won't be focusing on those either. I'm targeting this one:

    SET GLOBAL innodb_read_only_compressed = OFF;

I'm not sure whether this setting persists across reboots. Only one way to find out. I'll be back tomorrow with an update. If it doesn't pan out, FileRun may get another shot at the remote file storage role…
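For reference, MariaDB has no equivalent of MySQL 8's `SET PERSIST`. A value set with `SET GLOBAL` lasts only until the server restarts, after which the value from the option files (or the built-in default) applies again. Later MariaDB 10.6 point releases reportedly switched this option's default to OFF, so the installed version is worth checking too. Below is a minimal sketch that writes the option into a drop-in file and gives a quick check for after the next ESXi reboot. Assumptions: the directory you pass is one your server actually includes (commonly `/etc/my.cnf.d` on Arch-based hosts or `/etc/mysql/mariadb.conf.d` on Debian/Ubuntu; the MariaDB host's OS isn't stated). The file name `99-nextcloud.cnf` and both function names are hypothetical.

```shell
#!/bin/sh
# Sketch: persist innodb_read_only_compressed=OFF in a MariaDB option file.
# ASSUMPTION: $1 is a directory the server reads via !includedir
# (check your main my.cnf); 99-nextcloud.cnf is a hypothetical file name.

write_ro_compressed_override() {
    conf_dir="$1"
    conf_file="$conf_dir/99-nextcloud.cnf"
    mkdir -p "$conf_dir" || return 1
    # Options in [mysqld] are read by the server at startup, so unlike
    # SET GLOBAL they survive a restart. Overwrites any existing file.
    cat > "$conf_file" <<'EOF' || return 1
[mysqld]
innodb_read_only_compressed = OFF
EOF
    # Print the path that was written
    echo "$conf_file"
}

# Hypothetical helper: prints 0 if the running server has the option OFF, 1 if ON.
# Assumes the mysql client can log in (e.g. root over the local socket).
check_ro_compressed() {
    mysql -N -B -e 'SELECT @@GLOBAL.innodb_read_only_compressed'
}
```

As root on the MariaDB host, call `write_ro_compressed_override` with the right include directory and restart MariaDB. After the next full reboot, `check_ro_compressed` should print `0` if the setting stuck. Running only the check after a reboot, without writing the file, answers the original question on its own.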

Still have to finish configuring AD/LDAP integration tomorrow…