@wendell is always warning us about cheap DisplayPort cables…

Turns out the NVMe issues were caused by a DP-to-DP cable that carries 3.3 V power on pin 20. After a soft power down, the monitor was backdriving power to the GPU, leaving the system in a dirty power state. That caused all the weirdness with the drives, and the powered USB hub just made it worse.

I would never have thought of the DP cable, but there are a lot of Reddit posts where a DP cable was reported to cause issues with NVMe drives (at least on Gigabyte mobos).

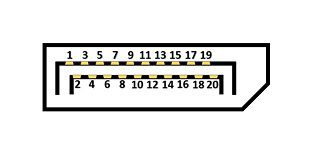

On a DP-to-DP cable, pin 20 should be disconnected. Don’t use a cable if there’s continuity between pin 20 on one end and pin 20 on the other. For testing, it helps to have a piece of 30 AWG (0.25 mm Ø) wire, e.g. one strand from a stranded copper wire, to place in one end. One in four of my DP cables has pin 20 continuity.

http://monitorinsider.com/displayport/dp_pin20_controversy.html

This was a very difficult problem to work on. There wasn’t an issue on my bench with a DVI cable, or from a hard power-on (everything physically unplugged -> plugged in), or from a restart. The issues only appeared after a second power-on, once the bad DP cable had left the system in a dirty power state.

A powered USB hub made the instability worse, but it seems fine with a different DP cable. I also realized the power supply’s physical switch is useless when powered peripherals are connected. You have to unplug everything.