Backstory

Recently I picked up an old Intel SR2600URLXR server from my local computer recycler. It has 5 hot-swap bays in the front connected to an LSI LSISAS1078 controller. I configured it with 5 virtual drives, each containing one physical drive, so FreeNAS could run the drives in Z2. My initial experience was pretty rough because during the install I kept seeing these mfi0: xxxx .... Unexpected sense: Encl PD 00 Path .... errors. Eventually I managed to get it installed, but I still see these errors scroll on my monitor 5 at a time every few minutes.

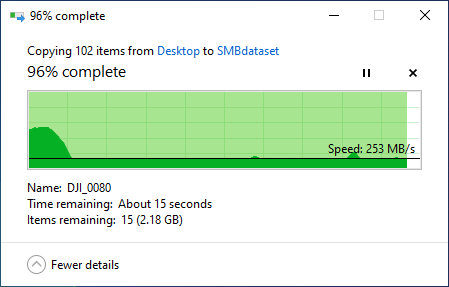

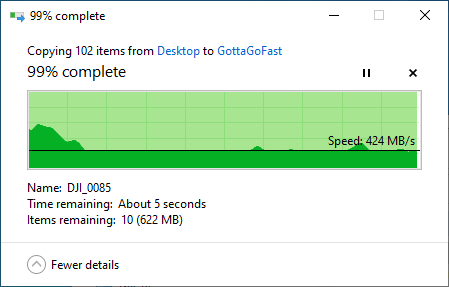

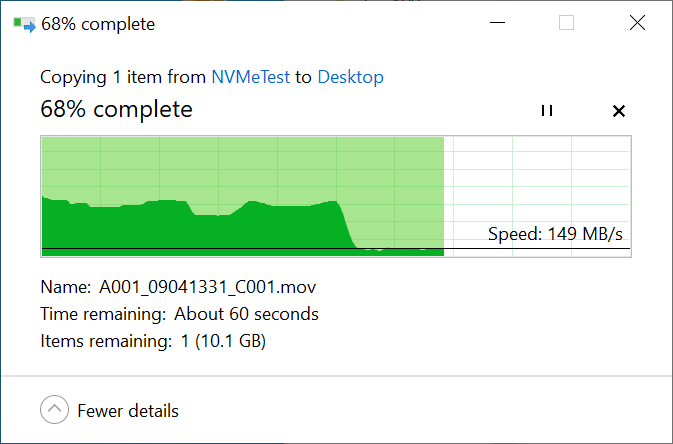

The initial server config was 2x2GB of RAM, a single 4-core Xeon without hyperthreading, and three gigabit NICs (2 onboard and 1 add-in). I was getting good performance at first, with read/write speeds across the network at 112MB/s, which is exactly what I expected over gigabit. I installed an SFP+ card with an Intel chipset and a copper 10G transceiver, and speeds improved to an initial peak of 600MB/s, which quickly fell to 230MB/s. That seemed reasonable for mechanical drives and virtually no RAM.

I picked up 96GB of DDR3 ECC and got that installed along with a pair of X5677 Xeons with 4 cores/8 threads each at ~3.5 GHz. That bumped performance up to 800MB/s-1GB/s sustained for a bit, then it would fall to 380MB/s, which seems totally reasonable for 5 drives in Z2. That said, it seemed like it should have been able to sustain the higher transfer speed, since the largest file I’m transferring during my testing is a single 32GB file, so my RAM should be able to swallow the whole thing.

In my research I saw that SSD caching drives aren’t recommended, but I was kind of desperate to get this thing working at its full potential, so I installed a 500GB NVMe drive with a PCIe adapter, added it as a cache drive to my existing pool, and saw exactly zero difference (shocking). I did some testing using iperf3 between the server and another machine on the same 10G network, attached to the same switch, and saw an average of 9.35Gbps using -t 60 -b 0, so it seems like network performance is not the issue.

After that I removed the SSD from the pool, set it up as its own pool, set up a new SMB share on it, and did the same file transfer tests. Writing to the drive I saw a sustained 1GB/s for the entire transfer (first time I’ve seen that), but reading back from the drive I saw the same dip in performance, though it took about twice as long before the drop.
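For anyone checking my numbers, here is the link-rate arithmetic as a quick Python sketch. It assumes decimal units (1 Gbps = 1000 Mbps, 8 bits per byte) and ignores protocol overhead, which is presumably why real transfers land a bit under these ceilings (e.g. 112MB/s rather than 125MB/s on gigabit). The function name is just something I made up for illustration.

```python
def gbps_to_mb_per_s(gbps: float) -> float:
    """Convert a link rate in gigabits/s to megabytes/s (decimal units)."""
    return gbps * 1000 / 8


# Rates taken from the post: gigabit NICs, the 10G link, and the iperf3 result.
rates = [
    ("1GbE line rate", 1.0),
    ("10GbE line rate", 10.0),
    ("iperf3 measured", 9.35),
]

for label, gbps in rates:
    print(f"{label}: {gbps_to_mb_per_s(gbps):.0f} MB/s")
```

So the 9.35Gbps iperf3 result works out to roughly 1170MB/s of raw headroom, which is above the ~1GB/s peaks I see over SMB.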

The TL;DR

New FreeNAS-11.3-U4.1 install

Intel SR2600URLXR Server w/ LSI controller

96GB DDR3 ECC

5x2 TB Hitachi mechanical drives (2 SAS, 3 SATA) in RAID Z2

1x 500GB NVMe PCIe

Good peak read/write, sub-optimal speed after several seconds. SSD has perfect write performance, but similar fall-off in read performance. Client system I’m testing with is connected to the same 10Gbps switch with NVMe storage. I understand I shouldn’t expect to saturate 10gig with a handful of mechanical drives, but seeing similar performance dips on the SSD is making me think there’s a config somewhere that’s borked.

Edit: Now that I’ve thought about it a bit longer, it seems like the performance crash while reading from the NAS is probably due to the cache on the SSD getting overwhelmed transferring a 32 gig file? That might explain why it’s fine sending the same file to the NAS.

Instead of a single mammoth file, I copied a folder of multiple large files totaling 24 gigs from the NAS onto the client and saw a steady 350MB/s (cool). I deleted the folder on the client, transferred it again, and saw a steady 1GB/s right until about 80%, where it dipped down to around 130MB/s, then eventually started climbing until the transfer finished. I repeated the test again and saw 1GB/s the entire time. Am I just being impatient?
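To put the "impatient" question in perspective, this is a rough sketch of how long the 24 gig folder should take at the two steady rates I saw. It assumes decimal units (1 GB = 1000 MB) and a constant rate for the whole transfer, which the 80% dip obviously violates, so treat it as a ballpark only. The helper name is hypothetical.

```python
def transfer_seconds(size_gb: float, rate_mb_per_s: float) -> float:
    """Time to move size_gb gigabytes at a constant rate in MB/s (decimal units)."""
    return size_gb * 1000 / rate_mb_per_s


folder_gb = 24  # size of the test folder from the post

# First copy ran at ~350MB/s, repeat copies at ~1GB/s (1000MB/s).
for label, rate in [("first copy", 350), ("repeat copy", 1000)]:
    print(f"{label} at {rate} MB/s: {transfer_seconds(folder_gb, rate):.1f} s")
```

That is the difference between roughly 69 seconds and 24 seconds for the same folder.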