The phrase “SOP8 SOIC8 Test Clip And CH341A USB Programmer Flash For Most Of 24 25 Series BIOS Chip” should pull up this link as one pick, but it’s the older “full set” version without the voltage level switch. I picked that one up first so I’d have everything.

Then I got the newer version, “ch341a programmer v1.7 1.8v level shift w25q64fw w25q128fw gd25lq64”, which has pretty much the same hardware except that it has no alligator clip.

Edit: I couldn’t find the newer version that came with the alligator clip.

sometimes you have to unlock the flash first with

atiflash -unlockrom < adapter number >

you could also try a different version.

some resources

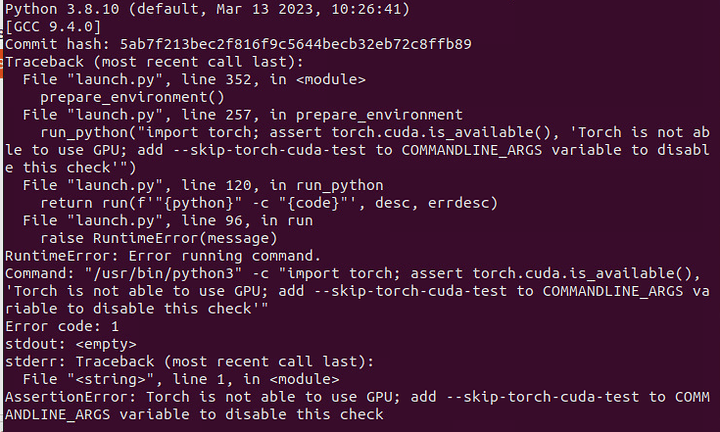

run_python("import torch; assert torch.cuda.is_available(), 'Torch is not able to use GPU; add --skip-torch-cuda-test to COMMANDLINE_ARGS variable to disable this check'")

Followed the directions; all details are in MI25 flash to WX9100.

Any ideas?

I was just running into the exact same thing. It looks like I’m up and running now - instead of running launch.py, I was able to run the webui.sh.

It asked me to install python3.8-venv, I rebooted and ran webui.sh again, then it installed some more dependencies from pytorch and started right up.

I also launched it once with “--skip-torch-cuda-test” in launch.py, then switched it back. That may or may not have been the final fix, but it’s worth a try if webui.sh doesn’t work for you.

Also also - try running the rocminfo command to make sure it returns output when run as your user. I forgot to add my user account to the render group, and that was also causing me problems.
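If you want to check the group membership part without guessing, here's a rough Python sketch. It assumes the GPU device nodes are owned by groups named `render` and `video`, which is common for ROCm setups but is an assumption here; `missing_groups` is just a hypothetical helper name.

```python
import grp
import os


def missing_groups(required=("render", "video")):
    """Return the required groups that the current process does not belong to."""
    current = set()
    # Primary group plus supplementary groups of the running process.
    for gid in set(os.getgroups()) | {os.getegid()}:
        try:
            current.add(grp.getgrgid(gid).gr_name)
        except KeyError:
            # A gid without an entry in the group database; ignore it.
            pass
    return [name for name in required if name not in current]


if __name__ == "__main__":
    missing = missing_groups()
    if missing:
        print("Not in group(s):", ", ".join(missing))
        print("Add your user to them, then log out and back in.")
    else:
        print("Group membership looks fine.")
```

Group changes only show up in new login sessions, so run it again after logging back in.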

I was able to install python3.8-venv after running webui.sh, but now i’m here.

################################################################

Running on user user

################################################################

################################################################

Repo already cloned, using it as install directory

################################################################

################################################################

Create and activate python venv

################################################################

################################################################

ERROR: Cannot activate python venv, aborting…

################################################################

up and running – seems kinda slow though…

Is there a better firmware option?

My current cooling is keeping up with 170W (barely)

yes, the Nvidia RTX 3060 12GB model is an ideal entry-level GPU to use with Stable Diffusion, highly recommend for a cheap option if it fits your budget and use cases. I used it for a while and it worked great. Not particularly fast but “fast enough” and has enough VRAM for medium sized image batches.

@GigaBusterEXE thanks so much for documenting all this, this is a really interesting thread

not sure if I missed it but I did not see much mention of obtaining and handling the model files themselves?

it’s been 6+ months since I looked, but when I last tried to do Stable Diffusion with an AMD GPU there was a requirement to convert the model files into a different file format or something like that. the process was not straightforward and kinda confusing, and at the time the support for this was not clear in the AUTOMATIC web GUI software.

Maybe you can comment on these aspects in your current setup? Did you have to do anything special to get the models to work in AUTOMATIC with AMD?

also regarding dependencies, I am a heavy user of conda in other contexts, and I found that it worked really nicely with the Stable Diffusion installations as well. I cannot remember if it’s part of the official docs for AUTOMATIC, but I would strongly recommend users install all the software deps inside of a dedicated conda env for Stable Diffusion, including all the pip install steps (you can actually bundle the pip installation requirements into a single conda env yaml file for safe-keeping, for when you revisit the project on your local system after some time away and can’t remember what all you installed 6 months ago). This saved my butt when I wanted to later try other AI projects that, for whatever reason, used different versions of some of the libraries that overlapped with SD’s install. So, just a thought, maybe consider including a conda env with your setup.

oh yea, one other question, in the OP I think you mentioned adjusting the AMD GPU voltages, but I am not sure that I saw the details of how you accomplished that? Is that still a thing you are doing? In the past I had tried to modify the GPU voltages on my AMD card in Linux and found it to be a freaking nightmare, huge confusing headache, so if you had some clear guidelines on how you did that, it would be fantastic to check out. Thanks.

not op, but if you’re using linux, so long as you are on linux kernel 6.1 or newer and your distro has rocm packages, it’s pretty simple and straightforward. the source of most problems is python/pytorch, rather than the AMD cards or the models themselves.

as for adjusting gpu voltages, it is pretty straightforward. the first thing that has to be done is enabling overdrive by adding amdgpu.ppfeaturemask=0xffffffff to your kernel options in the grub file (regenerate the grub config and reboot for it to take effect).

then you can use either rocm-smi or the pp_od_clk_voltage sysfs interface

personally I prefer the sysfs interface, so I will give a quick explanation of how to use it.

it is found here

/sys/class/drm/card<gpu number>/device/pp_od_clk_voltage

and if you cat the file, it will spit out something like this

OD_SCLK:

0: 852Mhz 800mV

1: 991Mhz 800mV

2: 1138Mhz 825mV

3: 1269Mhz 875mV

4: 1348Mhz 975mV

5: 1399Mhz 1025mV

6: 1440Mhz 1075mV

7: 1500Mhz 1125mV

OD_MCLK:

0: 167Mhz 800mV

1: 500Mhz 800mV

2: 900Mhz 825mV

3: 1025Mhz 875mV

OD_RANGE:

SCLK: 852MHz 1800MHz

MCLK: 167MHz 1500MHz

VDDC: 800mV 1200mV

if you wanted to change, say, core clock state 7 to 1525 MHz at 1150 mV, you would run this as root:

echo "s 7 1525 1150" > /sys/class/drm/card0/device/pp_od_clk_voltage

if you wanted to raise mclk state 3 to 1050 MHz at 900 mV, you would run:

echo "m 3 1050 900" > /sys/class/drm/card0/device/pp_od_clk_voltage

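note that the voltage on the memory clock is actually the core voltage, not the memory voltage; higher core voltages may be required for memory stability. also, per the amdgpu kernel documentation, edited states only take effect once you commit them by writing "c" to the same file.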
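For anyone who wants to sanity-check values before writing them, here's a rough Python sketch that parses the table format shown above and builds the string to echo, refusing anything outside OD_RANGE. It only builds the string and never touches sysfs; `parse_od_table` and `build_command` are hypothetical helper names, and the sample text is the example output from this post, not read from a real card.

```python
import re

SAMPLE = """\
OD_SCLK:
0: 852Mhz 800mV
1: 991Mhz 800mV
2: 1138Mhz 825mV
3: 1269Mhz 875mV
4: 1348Mhz 975mV
5: 1399Mhz 1025mV
6: 1440Mhz 1075mV
7: 1500Mhz 1125mV
OD_MCLK:
0: 167Mhz 800mV
1: 500Mhz 800mV
2: 900Mhz 825mV
3: 1025Mhz 875mV
OD_RANGE:
SCLK: 852MHz 1800MHz
MCLK: 167MHz 1500MHz
VDDC: 800mV 1200mV
"""


def parse_od_table(text):
    """Parse pp_od_clk_voltage text into nested dicts keyed by section."""
    tables = {}
    section = None
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith("OD_") and line.endswith(":"):
            section = line[:-1]
            tables[section] = {}
            continue
        key, _, rest = line.partition(":")
        numbers = tuple(int(n) for n in re.findall(r"\d+", rest))
        if section is None or len(numbers) != 2:
            continue
        # OD_RANGE rows are keyed by name, state rows by index.
        tables[section][key if section == "OD_RANGE" else int(key)] = numbers
    return tables


def build_command(tables, kind, state, mhz, mv):
    """Return e.g. 's 7 1525 1150' after checking it against OD_RANGE."""
    section, clk = {"s": ("OD_SCLK", "SCLK"), "m": ("OD_MCLK", "MCLK")}[kind]
    if state not in tables[section]:
        raise ValueError(f"state {state} not present in {section}")
    lo, hi = tables["OD_RANGE"][clk]
    if not lo <= mhz <= hi:
        raise ValueError(f"{mhz} MHz outside {clk} range {lo}-{hi}")
    vlo, vhi = tables["OD_RANGE"]["VDDC"]
    if not vlo <= mv <= vhi:
        raise ValueError(f"{mv} mV outside VDDC range {vlo}-{vhi}")
    return f"{kind} {state} {mhz} {mv}"


if __name__ == "__main__":
    tables = parse_od_table(SAMPLE)
    print(build_command(tables, "s", 7, 1525, 1150))
    print(build_command(tables, "m", 3, 1050, 900))
```

On a real system you would feed it the contents of the pp_od_clk_voltage file instead of SAMPLE.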

some caveats: on certain models the card may not strictly respect the clocks if the power limit or the socclk is too low. the socclk is not available through this interface, but it can be edited via the pptable.

by far the most useful interface is the power limit, which is found here:

/sys/class/drm/card<gpu number>/device/hwmon/hwmon<a number>/power1_cap

the value cannot exceed power1_cap_max.

if you cat this file, it will spit out something like

200000000

this corresponds to a 200 W power limit (the value is in microwatts).

To change it, simply write a new value as root. This sets it to 140 W:

echo "140000000" > /sys/class/drm/card0/device/hwmon/hwmon4/power1_cap
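Since the hwmon number differs between systems and the unit is easy to get wrong, here's a small Python sketch of the conversion and the power1_cap_max check. It only prints the echo command rather than writing anything; the helper names are made up for illustration, and `card0` is an assumption you may need to change.

```python
from pathlib import Path


def watts_to_microwatts(watts):
    """power1_cap is expressed in microwatts: 140 W -> 140000000."""
    return int(round(watts * 1_000_000))


def clamped_cap(requested_watts, cap_max_uw):
    """Return the value to write, never above power1_cap_max."""
    return min(watts_to_microwatts(requested_watts), cap_max_uw)


def find_hwmon_dirs(card="card0"):
    """List hwmon directories for a card; the hwmon number varies."""
    base = Path("/sys/class/drm") / card / "device" / "hwmon"
    return sorted(base.glob("hwmon*")) if base.is_dir() else []


if __name__ == "__main__":
    for hwmon in find_hwmon_dirs():
        cap_max_file = hwmon / "power1_cap_max"
        if not cap_max_file.exists():
            continue
        value = clamped_cap(140, int(cap_max_file.read_text()))
        # Only print the command; actually writing the file needs root.
        print(f'echo "{value}" > {hwmon / "power1_cap"}')
```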

alternatively, you can use rocm-smi instead, as used by @GigaBusterEXE

rocm-smi also works for clocks, however I found it generally more confusing than just using the sysfs interface.

There is also the custom power play table that I mentioned, if you want more control than what you get by default. It can be edited with a tool like upp.

note that with the pptable there are no safeties, so if you set voltages or current limits too high, it can kill the card!

I don’t really have a use case (yet), but you never know… I’ve tried it with RTX3050 - it was faster than expected and worked with 512x512 and just a bit bigger images.

No model conversion required but on that note:

Be wary of CKPT model files, folks. Those are Python pickle files, so loading one can execute arbitrary code embedded alongside the model data. There is no telling if what you are downloading contains some totallynotavirus.py payload.

Always go for the .safetensors versions unless you really trust the model publisher. It’s a safer format. Most popular models on huggingface and civitai will offer a safetensors version. You can dump those into the models/Stable-diffusion folder just like ckpt files, without any extra steps.
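To make the risk concrete, here's a harmless Python sketch of how unpickling can call a function chosen by whoever made the file. It assumes the checkpoint is loaded through Python's pickle machinery, as classic .ckpt files are; the `Payload` class is purely illustrative and calls `print` where a malicious file could call anything.

```python
import pickle


class Payload:
    """Illustrative only: tells pickle which function to call on load."""

    def __reduce__(self):
        # On unpickling, pickle calls print("payload ran during load").
        # A malicious file could name a far less friendly callable here.
        return (print, ("payload ran during load",))


blob = pickle.dumps(Payload())

# Loading the bytes runs the callable; what comes back is print's
# return value (None), not a Payload object.
result = pickle.loads(blob)
```

A safetensors file only holds tensor data and metadata, so there is no equivalent hook for code to run on load.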

This goes for everyone, not only AMD users.

As for Conda, I’m not a fan, but that’s a personal preference. Venv is good enough, and if you really want to isolate things just go for a rootless podman container. I’ve been using fedora toolbox and it is very convenient, but your mileage might vary.

You’re likely referring to converting the model to ONNX to then use a different runtime other than Pytorch.

Nowadays pytorch has support for ROCm, so you can just grab the regular model from huggingface and use pytorch’s runtime to generate whatever you want, the major problem is getting rocm and pytorch to go on your system.

thanks for the updates, I was indeed referring to the ONNX thing, glad to know that has changed.

and yea I was using the sysfs interface previously to try to adjust the voltages and clocks, but the GPU kept crashing and taking the whole PC down with it every time I adjusted anything even slightly, weird. I am on Nvidia now but it’s great to keep up to date on what’s happening

Unfortunately must confirm. I haven’t seen VRAM usage go above ~10GB on RVII.

There is no fix for it, is there?

so what I found is that the stock mi25 vbios had this issue, but the wx9100 vbios did not. I think it has something to do with the MIOpen kernels, since it would complain about the 56 CUs but did not do that with the 64 CUs enabled by the wx9100 vbios, but I don’t know for sure that this is the cause

you can find the applicable MIOpen kernels here: Index of /rocm/apt/5.4.3/pool/main/m/

your card is a Radeon VII, so that would be the gfx906 60 CU package. if you don’t have a debian/ubuntu distro, just extract the contents to the applicable directory.

Thanks for this info!

So far I’ve tried the 5.4.3 / 22.04 version kernels. There is good news and bad news.

The good news is that VRAM usage is now between 70% and 85%, which is definitely an improvement! Previously it was around 65%.

With this I was able to do 20 sampling steps in 2k latent space and then decode it to a 2k image.

The bad news is that I was able to do it only once; now when I try to run it I get the same OOM crashes as before. I will try after rebooting the machine, but my hopes are low.

The crash leaves this:

torch.cuda.OutOfMemoryError: HIP out of memory.

Tried to allocate 4.00 GiB. GPU 0 has a total capacty of 15.98 GiB of which 1.74 GiB is free.

Of the allocated memory 10.18 GiB is allocated by PyTorch, and 4.00 GiB is reserved by PyTorch but unallocated.

If reserved but unallocated memory is large try setting max_split_size_mb to avoid fragmentation.

See documentation for Memory Management and PYTORCH_HIP_ALLOC_CONF

I also tried to set max_split_size_mb lower with set PYTORCH_HIP_ALLOC_CONF=max_split_size_mb:256

I don’t know if I’m setting it correctly. I tried setting it first from inside the venv, and then after running the python main.py script that launches and runs the entire SD+Pytorch backend. In both cases there is no message, good or bad, so I assume it is working correctly (question mark).
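One thing worth checking, if this was typed in a Linux shell: in bash, `set NAME=value` does not create an environment variable (that is Windows cmd syntax), and the variable has to be in the environment before the Python process starts. The shell way is to export it before launching. As a rough Python sketch of the same idea, the hypothetical helpers below build the environment and pass it to the child process; `main.py` is the script name from this post.

```python
import os
import subprocess
import sys


def allocator_env(max_split_size_mb, base_env=None):
    """Return a copy of the environment with the allocator setting added."""
    env = dict(os.environ if base_env is None else base_env)
    env["PYTORCH_HIP_ALLOC_CONF"] = f"max_split_size_mb:{max_split_size_mb}"
    return env


def launch(script="main.py", max_split_size_mb=256):
    """Start the script as a child process that inherits the setting."""
    return subprocess.run(
        [sys.executable, script], env=allocator_env(max_split_size_mb)
    )


if __name__ == "__main__":
    # Show what the child process would see, without starting anything.
    print(allocator_env(256)["PYTORCH_HIP_ALLOC_CONF"])
```

Setting it after the backend is already running will not change anything for that process.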

The only successful 2k run was made with 512 split size.

The one 2k image I got was total garbage. Probably because training was done on lower res.

After this and trying various upscalers I think that for now they are THE way to go for high res pictures.

The normal way to generate very big images is to gen them at a low res like 512x512, 768x768 or whatever ratio, apply hires fix to make the result a bit larger (1.5x to 2.0x the size), and finally send that result to extras, where you use another upscaler to get to some ridiculous resolution. I use 4x foolhardy Remacri. It changes the output a bit, but it’s mostly acceptable.
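Just to show the arithmetic of that workflow, here's a tiny Python sketch of the resolution after each stage. `upscale_chain` is a hypothetical helper; the default 2.0 hires scale and 4x upscaler factor are the values mentioned above, not anything the UI enforces.

```python
def upscale_chain(width, height, hires_scale=2.0, upscaler_factor=4):
    """Return the (width, height) after each stage of the workflow."""
    hires = (round(width * hires_scale), round(height * hires_scale))
    final = (hires[0] * upscaler_factor, hires[1] * upscaler_factor)
    return {"base": (width, height), "hires_fix": hires, "upscaled": final}


if __name__ == "__main__":
    for name, size in upscale_chain(512, 512, hires_scale=1.5).items():
        print(f"{name}: {size[0]}x{size[1]}")
```

So a 512x512 base with a 2.0 hires fix and a 4x upscaler ends up at 4096x4096, while the sampling itself only ever runs at the smaller sizes.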

Examples

https://files.catbox.moe/nrls8m.png

https://files.catbox.moe/cj7i9w.png

https://files.catbox.moe/0v24cq.png

The one 2k image I got was total garbage

Make sure you are using a VAE if your model doesn’t have it.

I know about HiRes Fix and about using VAE, but thanks nonetheless.

This is latent upscaled from 512 → 1k then finally to 4k with 4xUltraSharp. I downscaled it to 2k so it can be posted here.

OOM is basically normal, since memory use will often spike at higher resolutions. Try using the --opt-sub-quad-attention flag; it will significantly reduce the memory footprint without a performance penalty.

It’s really too bad that fp16 doesn’t work properly, since it significantly reduces memory usage.

ComfyUI automatically sets it and I was using it the whole time:

Set vram state to: NORMAL_VRAM

Using device: CUDA 0: AMD Radeon VII

Using sub quadratic optimization for cross attention, if you have memory or speed issues try using: --use-split-cross-attention

Starting server

...

I also tried --use-split-cross-attention as suggested, but it didn’t help, it only made sampling much slower.