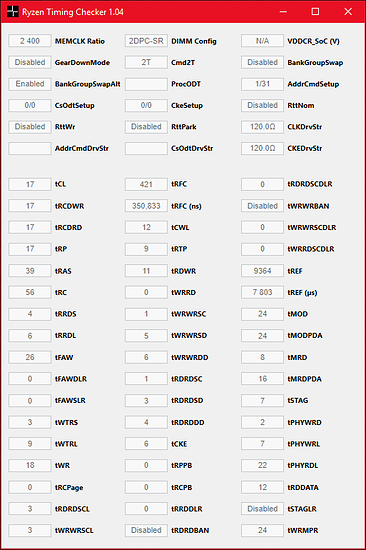

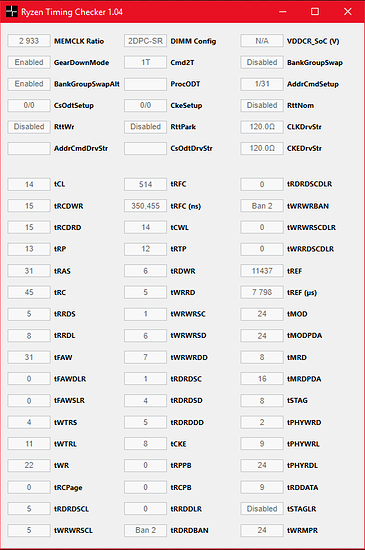

I messed up the tRFC4 value: I only have access to tRFC, and I hadn’t yet worked out how to convert it.

So 514 / 1.34 / 1.625 ≈ 236.05, which is pretty close to Wendell’s tRFC4 value of 235. As it’s essentially the same, I’ll remove it from the table, set tRFC back to 514 and rerun the test.
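For anyone wanting to repeat that conversion with their own tRFC, here is a rough Python sketch. The two divisors are the ones used above; labelling the intermediate result as tRFC2 is my assumption, and the function and constant names are made up for illustration.

```python
# Divisors taken from the calculation in this post.
# Assumption: the intermediate value (tRFC / 1.34) corresponds to tRFC2.
TRFC_TO_TRFC2 = 1.34
TRFC2_TO_TRFC4 = 1.625


def derive_trfc(trfc):
    """Return (tRFC2, tRFC4) estimated from tRFC using the divisors above."""
    trfc2 = trfc / TRFC_TO_TRFC2
    trfc4 = trfc2 / TRFC2_TO_TRFC4
    return trfc2, trfc4


trfc2, trfc4 = derive_trfc(514)
print(f"tRFC2 ~ {trfc2:.2f}, tRFC4 ~ {trfc4:.2f}")
```

Round the results to whole numbers before entering them anywhere, since the timings are set in whole clock cycles.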

Typing ‘phoronix-test-suite’ with no parameters gives a fairly easy-to-understand help display, but here are some useful commands.

You can run the same set of tests as a previously uploaded result (for instance, Wendell’s latest update linked above) and compare against it by using the ID from its URL as follows (without the quotes):

“phoronix-test-suite benchmark 1810154-FO-MEMORY29909”

Run a particular test (e.g. x264):

“phoronix-test-suite benchmark x264”

List all tests:

“phoronix-test-suite list-all-tests”

Upload a result if you said ‘No’ when initially asked:

“phoronix-test-suite upload-result {test ID}”

Rename test identifiers (this also lets you add characters like ‘*’, which are ignored when you first name a run):

“phoronix-test-suite rename-identifier-in-result-file {test ID}”

Change the order in which results are displayed:

“phoronix-test-suite reorder-result-file {test ID}”

As I’m going to rerun against Wendell’s latest update, I’ll time it and post a more accurate figure when it finishes. For now, Wendell’s batch of tests takes somewhere in the vicinity of an hour. Other tests vary in duration, though most are fairly short.
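If you want to time a run yourself, something like the following Python sketch would do it. The helper names are hypothetical, and it simply measures wall-clock time around the command, so any time spent answering the suite’s prompts is counted too.

```python
import subprocess
import time


def timed_run(cmd):
    """Run cmd (a list of arguments) and return (exit_code, elapsed_seconds)."""
    start = time.perf_counter()
    completed = subprocess.run(cmd)
    elapsed = time.perf_counter() - start
    return completed.returncode, elapsed


def format_duration(seconds):
    """Format a number of seconds as e.g. '1h 02m 05s'."""
    minutes, secs = divmod(int(round(seconds)), 60)
    hours, minutes = divmod(minutes, 60)
    return f"{hours}h {minutes:02d}m {secs:02d}s"


# Example call (commented out so nothing runs by accident):
# code, elapsed = timed_run(
#     ["phoronix-test-suite", "benchmark", "1810154-FO-MEMORY29909"]
# )
# print(format_duration(elapsed))
```

Uncomment the last lines to time the benchmark run against Wendell’s result ID.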