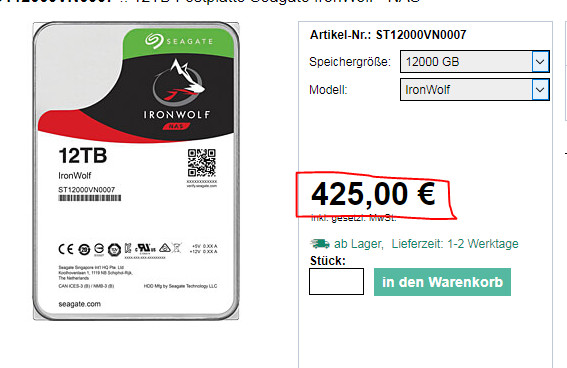

12TB HDD - 425€

Your “LTT style”-solution is >4 times the price.

Edit: Doing your suggested “LTT-setup” with cheap HDDs comes out at 350€ (348€ to be precise). That puts your SSD-suggestion at 500% the cost.
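For anyone who wants to check the percentages, here is a rough sketch of the arithmetic. The 1750€ SSD total is an assumption, back-calculated from the "500%" figure above; the real SSD total isn't quoted in the text. `relative_cost` is just a helper name made up for this example.

```python
def relative_cost(candidate_eur: float, baseline_eur: float) -> float:
    """Return the candidate price as a percentage of the baseline price."""
    return candidate_eur / baseline_eur * 100.0


# Prices quoted in the post above.
HDD_12TB_EUR = 425
CHEAP_HDD_SETUP_EUR = 348

# ASSUMPTION: not stated in the thread, inferred from "500% the cost" of ~350 EUR.
SSD_SETUP_EUR = 1750

if __name__ == "__main__":
    for label, baseline in [("12TB HDD", HDD_12TB_EUR),
                            ("cheap HDD setup", CHEAP_HDD_SETUP_EUR)]:
        pct = relative_cost(SSD_SETUP_EUR, baseline)
        print(f"SSD setup vs {label}: {pct:.0f}% of the price")
```

Swap in whatever the SSD basket actually costs; the point is only that the ratio lands above 4x against the single 12TB drive and around 5x against the cheap HDD build.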

To repeat myself:

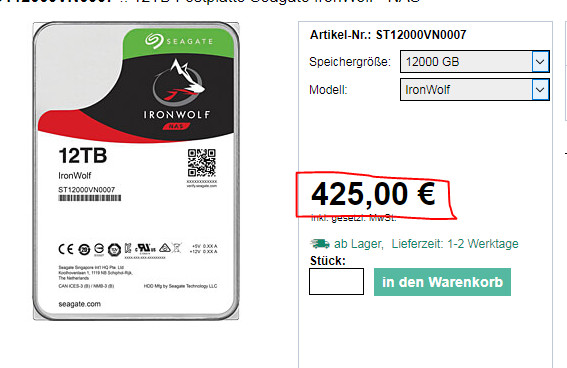

12TB HDD - 425€

Your “LTT style”-solution is >4 times the price.

Edit: Doing your suggested “LTT-setup” with cheap HDDs comes out at 350€ (348€ to be precise). That puts your SSD-suggestion at 500% the cost.

To repeat myself:

No, they aren’t. Not in the Datacenter.

You can fit waaaay more SSD capacity in a 2U server than HDD. This is why all new servers are using 2.5in disks.

That said, cost density is another thing.

Say you’ve got low 7 figures to build out a datacenter. That’s going to severely limit your choices, and you’ll probably want to go with ~150TB of SSD to optimize 2-3PB of rust in a Ceph cluster.

Everyone in this thread is jerking off over X is better than Y. The fact of the matter is that they’re both good at different things at this point.

Soon, we may see 20TB HDDs. We also might see $50 1TB SSDs. Who knows what the future holds, but the minute SSDs hit price and capacity parity with HDDs, we’re going to be having a different discussion.

There are 4 and 5TB disks in 2.5" form. They aren’t suitable for servers… Have SSDs gotten up there yet? I thought 2TB was the highest so far.

Edit: I do see some Samsung 4TB SSDs, at a premium $900 though lol.

You can buy four 1TB drives for half that. Jeez

Have said and will say again, one thing does density, one does cost per GB.

Samsung has their NGSFF SSD

Samsung’s 2.5" drives go up to 15.36TB (on SAS 12Gbit/s): MZILS12THMLS

Oh baller, Intel P4610 7.6TB

It really depends on what you’re looking for though. If you’re focusing on 2.5in, don’t look at things that aren’t suitable for enterprise. The 860 PRO 4TB is $1100 from Amazon. That’s fair considering the performance you get. It’s also not really suitable for Enterprise.

The real problem we’re encountering with PCIe (NVMe) SSDs right now is the lack of ability to hotplug them. Linus covered this in his hot-swap PCIe video a few months back. PCIe was never meant to be hotpluggable. This is why my company doesn’t use NVMe in most of our servers. Not to mention that our workload doesn’t really lend itself to the additional IO, since we just load shit into RAM directly from the 40GbE network.

There is officially 0 reason to use spinning rust now. I’ll buy a few of these for my home Plex server.

LMAO gud1 bb

Like I said, SSD backed rust is the way to go right now. Be it a single hybrid drive or multiple disks, with SSD ARC.

For me personally the RAID array is necessary because neither hard drives nor SSDs alone provide enough storage or redundancy for me. I do own an SSD; it is my Linux boot drive, in fact.

I need to store around 4 TB of content (mostly backups) and about 1 TB of games. It was not, and unfortunately still isn’t, economically viable for me to switch to SSDs.

I don’t think it was worth getting that in SSDs, especially when I want the 4 TB of content in at least a RAID 5 configuration. For the games, it does absolutely make sense for me to change over. But I don’t feel like dumping $200 AUD now and probably getting a second one in the future when my Steam library grows.

Just checked and if you buy now you can save $517 on each

To repeat myself

I have hundreds of tebibytes of anime to store.

Yup, and in the datacentre you’re often IOPS bound. You simply cannot get the IOPS out of spinning disks, no matter how big they are. Even before SSDs, server guys would go for MANY 10-15k RPM 76 GB drives instead of larger drives, purely because large SATA drives are way too slow. You’re often limited by the physical space needed to attach enough spindles for the required IOPS, not by capacity. Not always, but the times you aren’t are generally archive storage related.
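To put rough numbers on the spindle argument, here is a minimal sketch. The per-drive IOPS figures (about 180 for a 15k RPM drive, about 80 for a large SATA drive), the 4 TB SATA size, and the workload itself are illustrative assumptions, not measurements from this thread; only the 76 GB drive size comes from the post above. `drives_needed` is a made-up helper name.

```python
import math


def drives_needed(target_iops, target_gb, iops_per_drive, gb_per_drive):
    """Return (total, for_iops, for_capacity) drive counts for a workload.

    The array must satisfy both constraints, so the total is whichever
    count is larger.
    """
    for_iops = math.ceil(target_iops / iops_per_drive)
    for_capacity = math.ceil(target_gb / gb_per_drive)
    return max(for_iops, for_capacity), for_iops, for_capacity


if __name__ == "__main__":
    # ASSUMED workload: 20,000 random IOPS over a 2 TB working set.
    workload = dict(target_iops=20_000, target_gb=2_000)

    # ASSUMED rule-of-thumb figures per drive type.
    drive_types = {
        "15k RPM 76 GB": dict(iops_per_drive=180, gb_per_drive=76),
        "7.2k SATA 4 TB": dict(iops_per_drive=80, gb_per_drive=4_000),
    }

    for name, spec in drive_types.items():
        total, by_iops, by_cap = drives_needed(**workload, **spec)
        limit = "IOPS" if by_iops >= by_cap else "capacity"
        print(f"{name}: {total} drives ({by_iops} for IOPS, "
              f"{by_cap} for capacity), bound by {limit}")
```

With these assumed numbers both arrays end up sized by IOPS rather than capacity, which is the point being made: the big SATA drive covers the capacity with a single disk but still needs far more spindles to hit the IOPS target.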

As you say, neither is “better”

Hard disks have their place: archive storage, backups, niche users who want to mirror the internet.

I would posit that for the average end user, doing typical end user things, hard drives are, or should be, dead.

They’re shit for running VMs on, they’re shit for doing video work on, they’re shit for normal system bootup, application performance, etc.

If you need many TB of storage, fine. But most people’s end user devices simply don’t. And the capacity provided by a $150 SSD is now plenty for most, especially given the speed trade-off you’d make for rust.

For THAT use case, hard drives are dead.

You brand people who are not enterprise customers who get a business advantage out of using Solid State storage but have >1TB to store as “niche”. Have I understood that right?

All the game console users who upgraded the internal storage of their consoles are “niche”?

Except game storage.

People at home use SSDs to boot and HDDs for archive. Archive means it can be slower. And at $150, you get more than 4TB storage with HDDs (or RAID1 2TB).

Same reasoning as with a Ferrari versus a box truck: one is fast, but for moving house from San Francisco to New York City, the box truck is still faster.

I know this will sound like me being an ass, but surely there are more niche users on this forum than you might regularly encounter?

When you say there is NO point in HDD’s, I can see why everyone commenting here does see the point (and need) for them.

Flash is the next standard, and more devices ship with flash storage than not nowadays. Mobiles, laptops, even personal voice assistants make up the bulk of devices.

But flash isn’t for every occasion (yet).

So perhaps it’s too early to say rust is dead; rather, it’s dying a protracted, drawn-out death?

Mechanical drives aren’t dead, and likely won’t truly die for at least the next few decades. A better way of putting it is that SSDs are finally becoming the norm for client devices, because they are no longer that much more expensive than an HDD of equivalent capacity and because cloud-based storage and backup solutions have gone mainstream for home users. A 256GB SSD is plenty for mom and pop to run QuickBooks, email, and back up their important stuff on Backblaze or something, or for an office worker to run Office and save their work in their home folder/share drive.

Mechanical drives are still useful for cheap capacity, and have a much lower total cost of ownership for datacenters that need massive bulk storage for their warm backup/archiving. That is why they’ll continue to survive for several more decades. Flash has higher density, lower power consumption, and takes less datacenter space, but still has a much higher initial cost. Until those higher capacities reach a more affordable level and the cost of ownership gets closer to parity with mechanical drives, the major switchover won’t happen.

Even then, mechanical drives will still survive in the form of archive drives, as flash will lose its data if it goes without power for long enough. Mechanical archive drives degrade as well, but at a significantly slower rate (months for flash, years to decades for mechanical).

For the cost of 1-2 AAA games I can buy 1TB of SSD. Game storage is not a big deal on SSD any more.

Why would I want to run games from a hard drive? They’re slow…

None of my computers outside of my (now infrequently used) NAS contain spinning disks any more.

If spinning rust isn’t dead for consumers already, it will be inside of 12 months.

As a developer: these days I’m assuming my ‘local storage’ has SSD latencies (<1ms) and is there for caches and so on, and I’m assuming my remote storage is either highly available flash (<1ms) or has archival properties at a 4x per-byte discount (<1ms writes, 10ms reads, not POSIX). I’m never assuming any caching for remote storage; there’s always memcache. I’m always mlock-ing all my executable pages into RAM upon startup so my code never stalls on paging. I don’t care where the code is loaded from or the size of the binary unless it’s too big.
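For readers who haven't seen the mlock trick: a minimal sketch of locking a process's pages at startup, here via the POSIX `mlockall` call reached through `ctypes`. The post doesn't say how the pages actually get locked, so treat this as one possible approach, not the poster's code. The flag values are an assumption (they match Linux on x86-64 and arm64; some other architectures use different values), and `lock_process_memory` / `unlock_process_memory` are hypothetical helper names.

```python
import ctypes
import ctypes.util
import os

# ASSUMPTION: Linux values on x86-64/arm64; check <sys/mman.h> elsewhere.
MCL_CURRENT = 1  # lock pages that are mapped right now
MCL_FUTURE = 2   # also lock pages mapped later


def _load_libc():
    """Return a libc handle exposing mlockall, or None if unavailable."""
    name = ctypes.util.find_library("c")
    if name is None:
        return None
    libc = ctypes.CDLL(name, use_errno=True)
    return libc if hasattr(libc, "mlockall") else None


def lock_process_memory(flags=MCL_CURRENT | MCL_FUTURE):
    """Try to pin the process's pages in RAM. Returns True on success.

    Commonly fails without CAP_IPC_LOCK or with a low RLIMIT_MEMLOCK,
    so callers should treat False as "running unlocked", not as fatal.
    """
    libc = _load_libc()
    if libc is None:
        return False
    if libc.mlockall(flags) != 0:
        err = ctypes.get_errno()
        print(f"mlockall failed: {os.strerror(err)}")
        return False
    return True


def unlock_process_memory():
    """Undo a previous lock. Returns True on success."""
    libc = _load_libc()
    if libc is None or not hasattr(libc, "munlockall"):
        return False
    return libc.munlockall() == 0


if __name__ == "__main__":
    locked = lock_process_memory()
    print("pages locked" if locked else "running without locked pages")
```

Locking everything only makes sense if the binary and its working set comfortably fit in RAM, which is the "unless it's too big" caveat above.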

I know a lot of “not computer people” who still run WinXP.

HDDs will stay at least another 10 years.

This is the reason botnets have power.