The file is approx. 39GB (Lord of the Rings :))

I had Fedora 30 and 31 but could not get ZFS working after updates. Something seems to be wrong there. I selected this because it was a fast install of everything, including the Nvidia drivers.

What do you run?

Looks like it is the disk yes. Shame you had to invest in new cables to find out, but at least the switch is good.

If the Samsung disk is “disposable” and you don’t mind using it for testing, it is time to try swapping SATA controllers, as it may be something silly like the port being on the PCH and sharing bandwidth with something else. No harm in moving it around, unless it has important data, in which case leave it alone.

Or, if the drive itself is throttling, then maybe invest in more NVMe, or RAID the SATA drives.

On my prod machine (Threadripper) I use Manjaro and it is fine for my needs. I have a Vega56 though, so I can’t comment on the latest Nvidia blob. On there I get 350MBps each way to my NAS (6 old hard disks in RAIDZ2) and I am happy with that. Might try dropping a spare SSD into the FreeNAS box just to test what it could do if I were to go full Linus.

Glad you have a solve for your network query!

The disks on the TS140 are all disposable in that I can try stuff. I can’t really do anything to the Windows box just yet because that is the one I use everyday. I am trying to switch over to Linux but need this network, zfs and Adobe issues resolved first. Got the Adobe stuff somewhat under control as I can run that in a VM on a separate SSD and even do PCI-E passthrough.

I can try this LSI 9260-4i (https://www.newegg.com/lsi00197-sata-sas/p/N82E16816118106?Item=N82E16816118106) with 2 Samsung 860 drives. I think I have 2 more somewhere as well.

I have 2 more 970 NVMe drives, but the TS140 would need an expansion card. Would the ASUS one work, or is it not even worth it (https://www.amazon.com/ASUS-M-2-X16-V2-Threadripper/dp/B07NQBQB6Z/ref=sr_1_3?keywords=nvme+expansion+card&qid=1580937894&sr=8-3)?

I am regretting not going for Threadripper.

Don’t know about the expansion card; check the reviews to see if it works on other kit. Before spending too much, just try an mdadm RAID 0 of a couple of SSDs and see if it makes a difference. If it is the drives, then new drives are worth the investment.

Sounds like your workflow would benefit from an all-SSD array, so the LSI controller with 4 SATA disks should be strong enough to saturate that network. Remember, though, that it doesn’t matter how fast the drives are: if they are nearly full they will crawl. That is just how SSDs work, I am afraid.

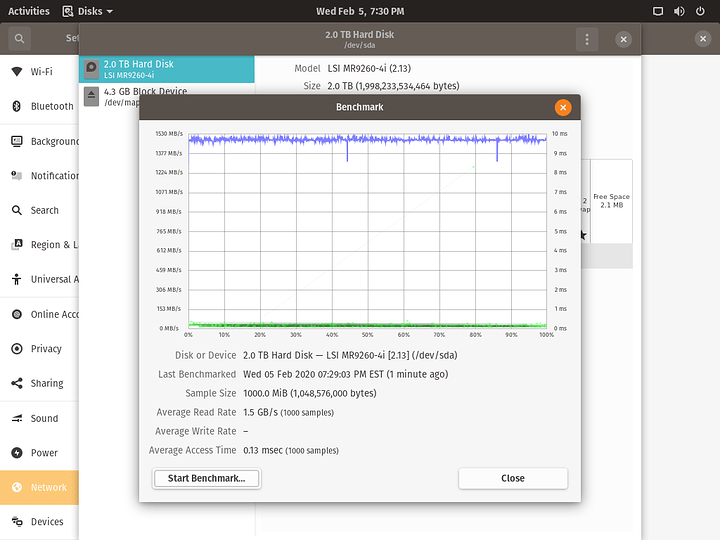

Trying this right now with 4 Samsung 860s in RAID 0 on the LSI 9260-4i. The disk benchmark gives me approx. 1.5GB/s read (not sure about write).

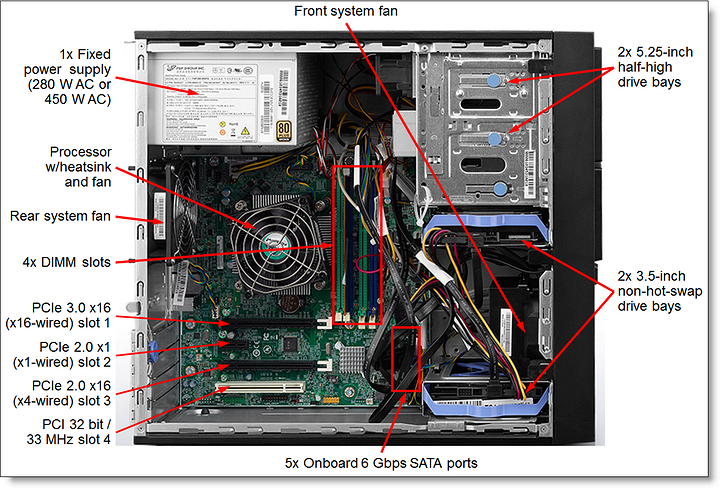

The X550-10G-2T is in the PCI-E v3 x16 slot and the LSI card is in the PCI-E v2 x16 slot. I also tried swapping slots.

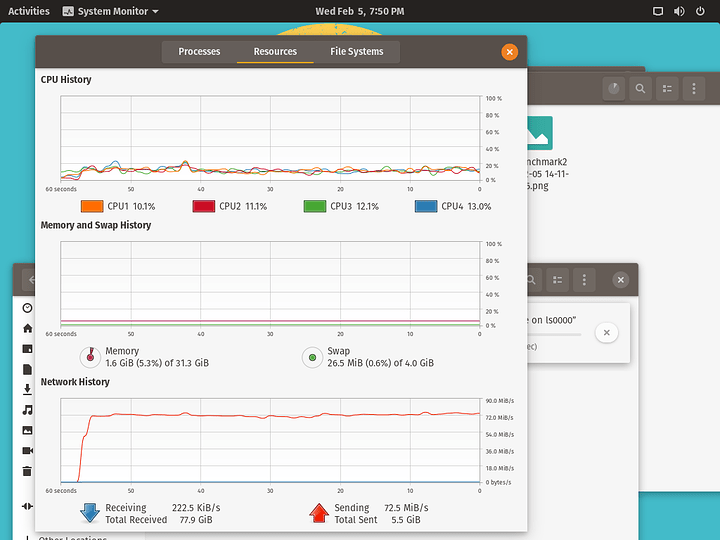

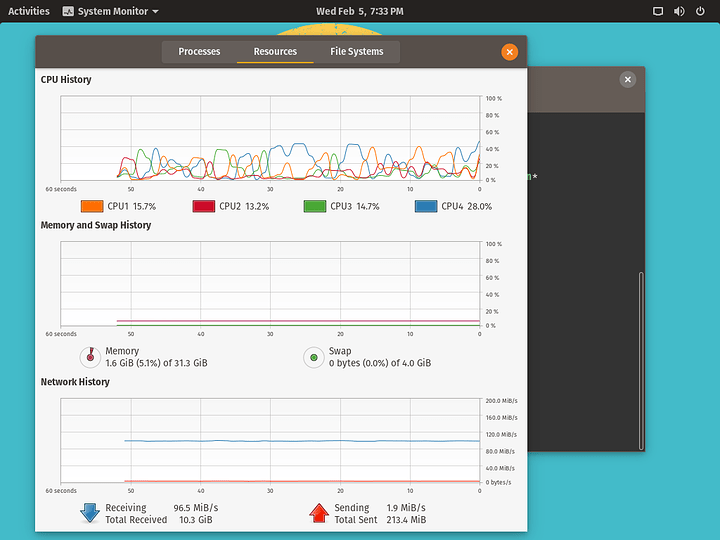

However, I just don’t get the throughput that I get on the Windows machine, where it goes from NVMe to NVMe. Is it just that simple, or maybe this motherboard and hardware just can’t do it? I would think that with average reads of 1.5GB/s I would at least be able to send data faster, but I get a steady 90MiB/s.

Writing to the TS140 is also about the same, at 90MiB/s.

The TS140 should be able to cope fine.

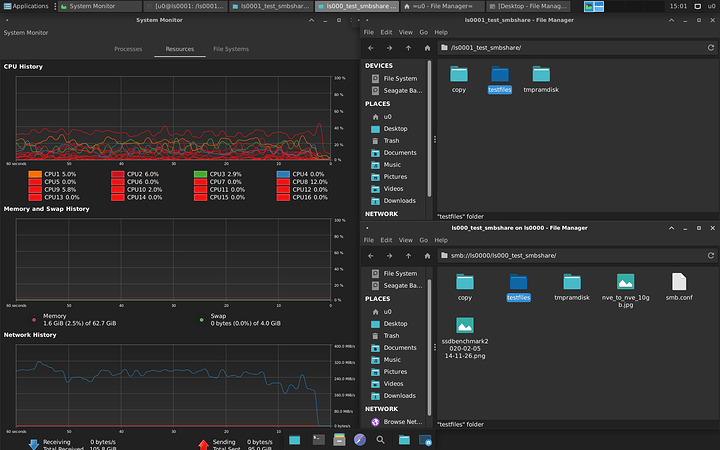

I’m confused by your setup now as those charts look weird.

What is getting this? What is the source drive and what is the destination drive? That looks like a hard disk write cap, not a network cap, which would be about 110MiB/s. What happens when you copy a file to the same disk on the same machine?
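For reference, the network cap figure can be worked out with a little arithmetic. This is only a rough sketch: the `efficiency` value is an assumed allowance for Ethernet/IP/TCP framing overhead at a standard MTU, not something measured on these machines, and the function name is made up for illustration.

```python
def link_cap_mib_per_s(link_gbit: float, efficiency: float = 0.94) -> float:
    """Approximate usable payload rate of an Ethernet link in MiB/s.

    `efficiency` is an assumed allowance for framing overhead,
    not a measured value.
    """
    bytes_per_s = link_gbit * 1e9 / 8          # line rate in bytes per second
    return bytes_per_s * efficiency / 2**20    # convert bytes/s to MiB/s


if __name__ == "__main__":
    for gbit in (1, 10):
        print(f"{gbit:>2} Gb/s link: ~{link_cap_mib_per_s(gbit):.0f} MiB/s")
```

With that assumption a gigabit link lands a little above 110MiB/s, so a steady 90MiB/s sits below even a 1Gb cap.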

Correct me if this is wrong, but I’m hypothesising:

- Windows PC running NVMe, 10Gb network adapter
- Linux machine running NVMe, 10Gb network adapter on Aquantia

If you connect these together via the two 10Gb ports on the GS110, you get 10Gb throughput, as tested in the previous post. So you know the network is OK and the high-end disks work fine.

So what are you copying from where to where that is only 90MiB/s?

I would have to try a copy again but the install was pretty fast.

Correct. It is a machine that has an NVMe drive and runs on an ASRock Fatal1ty x99 chipset. I swapped it out for the TS140 Lenovo ThinkServer to see if I could get 10Gb when going from NVMe to NVMe.

Correct, this is the machine with the Aquantia NIC, and it has an NVMe drive.

Correct

I swapped out the Windows 10 machine for the original TS140. However, I set up the TS140 with 4 Samsung 860s in RAID 0 on the LSI 9260-4i. That is where the benchmarks are from. So instead of copying the file from 1 SSD on the TS140 to the NVMe on the Linux box with the Aquantia, I am copying the same file from the TS140 RAID 0 array to the box with the Aquantia. This is where I am getting the low speeds like before. The only difference in the setup is the LSI controller, so I guess I can try mdadm. I just don’t get it. How can it be going this slow, especially when the Linux install was really fast? I would imagine that copying the file from the TS140 to the Aquantia box would at least be pretty fast given the read benchmark.

I’m wondering what the bandwidth is for the chipset, because that pcie 2.0 is operating in x4. Could the chipset just be bogged down, or overheating?
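Some rough numbers for that slot, as a sketch. These are theoretical one-direction link rates derived from the per-lane transfer rate and line encoding of each PCIe generation; real throughput is lower, and anything shared behind the chipset uplink is not modelled here. The function and table names are made up for illustration.

```python
# Per-lane raw rate (GT/s) and line-encoding efficiency per PCIe generation.
PCIE_GENERATIONS = {
    "2.0": (5.0, 8 / 10),      # 8b/10b encoding
    "3.0": (8.0, 128 / 130),   # 128b/130b encoding
}


def pcie_gb_per_s(generation: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    gt_per_s, encoding = PCIE_GENERATIONS[generation]
    return gt_per_s * encoding / 8 * lanes     # bits -> bytes, times lane count


if __name__ == "__main__":
    ten_gbe = 10 / 8                           # 10Gb Ethernet line rate in GB/s
    for gen, lanes in (("2.0", 4), ("3.0", 16)):
        print(f"PCIe {gen} x{lanes}: {pcie_gb_per_s(gen, lanes):.2f} GB/s "
              f"(10GbE needs {ten_gbe:.2f} GB/s)")
```

On paper a PCIe 2.0 x4 link has headroom over both 10GbE and the 1.5GB/s array benchmark, so if the slot is the problem it is more likely contention or throttling than the raw link rate.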

Simple test then… What happens if you copy a file from one disk to another on the ts140?

If it is the controller, PCH or disks that will tell you.

Real data, not an iperf test.
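If a file manager progress bar is too vague, a timed copy can be scripted. This is a minimal sketch, not a proper benchmark: `timed_copy` is a made-up helper name, and it only forces the destination to disk, so a source file that was read recently may still come from the page cache.

```python
import os
import shutil
import sys
import time


def timed_copy(src: str, dst: str) -> float:
    """Copy src to dst, flush dst to disk, and return throughput in MB/s."""
    size = os.path.getsize(src)
    start = time.perf_counter()
    shutil.copyfile(src, dst)
    # fsync so the page cache does not make the write look faster than it is
    with open(dst, "r+b") as f:
        os.fsync(f.fileno())
    elapsed = time.perf_counter() - start
    return size / elapsed / 1e6


if __name__ == "__main__":
    if len(sys.argv) != 3:
        sys.exit("usage: timed_copy.py SOURCE DEST")
    print(f"{timed_copy(sys.argv[1], sys.argv[2]):.0f} MB/s")
```

Point SOURCE at a file on one disk and DEST at a path on the other, using a file large enough that caching cannot hide the disks.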

I suppose that is possible.

Right now I have a Raid 0. Are you saying that the disks should be separated or are you saying that I should try copying a file from one location to another but on the same raid?

No, separate the disks. Ideally on different controllers. Try various combinations until you find out what is not working.

I strongly suspect it is one of the drives. If you have another spare, even spinning rust, worth testing.

If all else fails it is the ts140 that has something wrong.

Hi,

So I tried separating the disks and switching systems. I tried the Lenovo TS140 and the Dell T130. Same 860 500GB SSDs. Approximately the same specs. Both systems give me the same results in disk-to-disk copy and in dir-to-dir copy on the same disk.

disk-to-disk copy is approximately 400MB/s

dir-to-dir on the same disk is approximately 200MB/s (so there is a big drop)

But no matter what I do on either the TS140 or the T130 system (different OS, RAID 0, separate disks, etc.), nothing gets me even close to 10Gb speed.

Strangely, both systems running RAID 0 with 3 disks give me approximately 98MB/s, which is hardly 1Gb. The write benchmark for this 3-disk RAID is approximately 600MB/s.

I am just not sure here. How can 4 disks in a row be bad? I don’t have any more spares and these are brand new.

I can understand this is frustrating. I agree it is unlikely to be the disks, given they are new.

I can only suggest stripping everything back to basics and adding one component at a time to see where it bottlenecks. It would help if you could reply with screen grabs of the network/disk utilisation in each step.

Step 0, get a known good test folder. Mixed data large and small files, at least 50GB.

Step 1, single disk to single disk copy on the t130. Prove the drive can hit multi-hundred MB/s sustained, not just in a benchmark or short simulation. Go both ways.

Step 2, build a raid 0 from a pair of disks, with the third disk on its own. Repeat step 1 to check the raid controller isn’t doing something weird.

Step 3 (humour me), disk to disk copy on your workstation, to check the issue isn’t at that end. I think that was fine previously, but good to check.

Step 4, copy test folder from the single disk on t130 to nvme on workstation

Step 5, return test folder to t130 single disk from nvme on workstation.

Step 6, 7: repeat 4&5 to raid array.
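For Step 0, if no suitable folder is lying around, one can be generated. The sketch below is only an illustration: the size mix and weights are arbitrary choices, not a standard workload, and `make_test_payload` is a made-up name.

```python
import os
import random


def make_test_payload(root: str, total_bytes: int, seed: int = 0) -> int:
    """Fill `root` with a mix of small and large files; return bytes written.

    The size mix below is an arbitrary illustration, not a standard workload.
    """
    rng = random.Random(seed)
    sizes = [4 * 1024, 256 * 1024, 8 * 1024**2, 512 * 1024**2]  # 4 KiB .. 512 MiB
    weights = (70, 20, 8, 2)                  # mostly small files by count
    os.makedirs(root, exist_ok=True)
    written = 0
    index = 0
    while written < total_bytes:
        # never overshoot the requested total
        size = min(rng.choices(sizes, weights=weights)[0], total_bytes - written)
        path = os.path.join(root, f"file_{index:06d}.bin")
        with open(path, "wb") as f:
            remaining = size
            while remaining > 0:
                chunk = min(remaining, 1024**2)
                # random bytes so compression or dedup cannot flatter the result
                f.write(os.urandom(chunk))
                remaining -= chunk
        written += size
        index += 1
    return written
```

Calling `make_test_payload("/path/to/testfolder", 50 * 1024**3)` would produce the 50GB folder; the path is a placeholder.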

That should tell us what and where you have issues. The screenshots will help, as it is usually possible to see what is capping from the shape of the network utilisation. On my system it is clearly the disks, as I get a steady copy at first and then a drop-off once the write buffer fills. But those are 5400rpm rusty drives in RAIDZ2.
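To capture that shape as numbers rather than a screenshot, the interface byte counters can be sampled once a second during a copy. A rough, Linux-only sketch that reads `/proc/net/dev`; the helper names are made up, and the interface name has to be supplied on the command line.

```python
import sys
import time


def read_rx_tx_bytes(iface: str) -> tuple[int, int]:
    """Return (rx_bytes, tx_bytes) for `iface` from /proc/net/dev (Linux only)."""
    with open("/proc/net/dev") as f:
        for line in f:
            name, sep, rest = line.partition(":")
            if sep and name.strip() == iface:
                fields = rest.split()
                return int(fields[0]), int(fields[8])
    raise ValueError(f"interface {iface!r} not found")


def rates_mb_per_s(samples: list[tuple[float, int]]) -> list[float]:
    """Turn (timestamp, byte_counter) samples into MB/s between neighbours."""
    return [
        (b1 - b0) / (t1 - t0) / 1e6
        for (t0, b0), (t1, b1) in zip(samples, samples[1:])
    ]


if __name__ == "__main__":
    if len(sys.argv) != 2:
        sys.exit("usage: netrate.py INTERFACE")
    previous = (time.monotonic(), read_rx_tx_bytes(sys.argv[1])[0])
    while True:                               # stop with Ctrl+C
        time.sleep(1)
        current = (time.monotonic(), read_rx_tx_bytes(sys.argv[1])[0])
        print(f"{rates_mb_per_s([previous, current])[0]:8.1f} MB/s received")
        previous = current
```

A flat line near the link cap suggests the network is the limit, while a fast start followed by a drop suggests a write buffer filling on the receiving disk.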

Hi @Airstripone,

This is what I was considering yesterday, but I ran out of patience after trying this on Windows.

I did notice something curious when I connected my workstation, which has an NVMe drive and Windows 10: the network copy from the workstation to the x470 Linux box with NVMe gave me around 1.1GB/s and was pretty damn fast. But the copy from the Linux box to the workstation was around 118MB/s. I am thinking that the Windows box’s NVMe drive created a bottleneck, as it only had 50GB of free space. So I am thinking of stripping my Windows box down, reinstalling a fresh copy of Windows and trying the NVMe-to-NVMe case again, as that one should definitely work with a less cluttered NVMe drive on the Windows box. I will get started on the T130 as well and go step by step. Installing an OS on the Dell T130 sucks, as the Lifecycle Controller garbage can’t recognize a valid OS from USB. So that one I do from DVD :-(

Hi @Airstripone

I stripped everything down and am trying to use my 2 fastest systems. I only have the following 2 that are NVMe capable so sorry for switching things up here a bit.

=============================================================

SYSTEMS

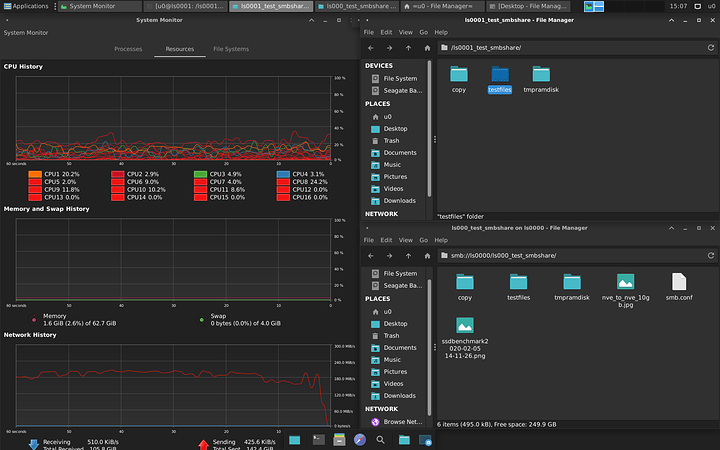

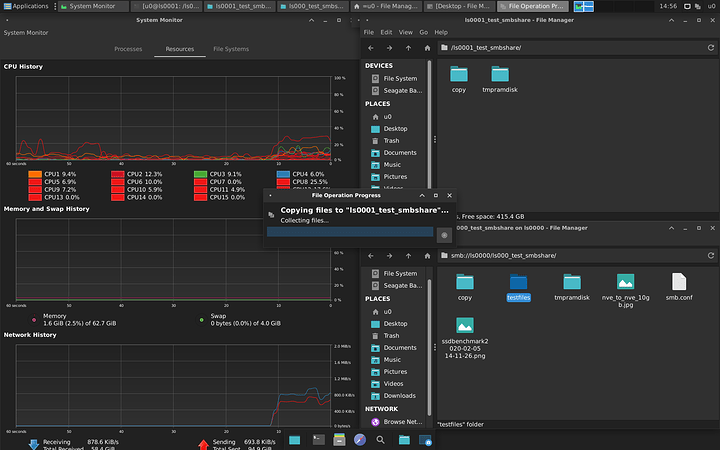

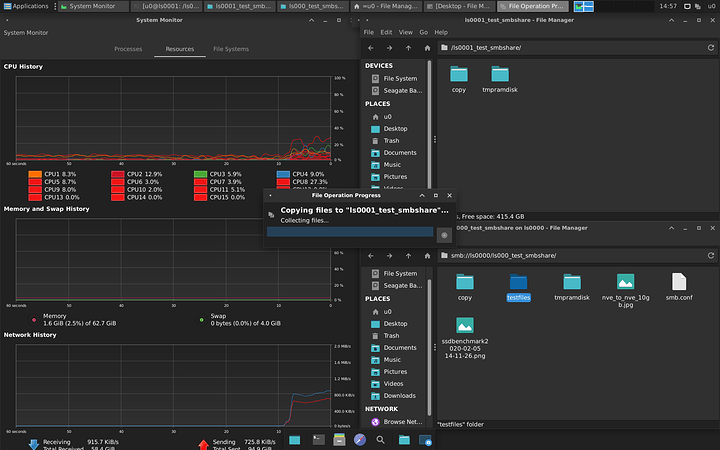

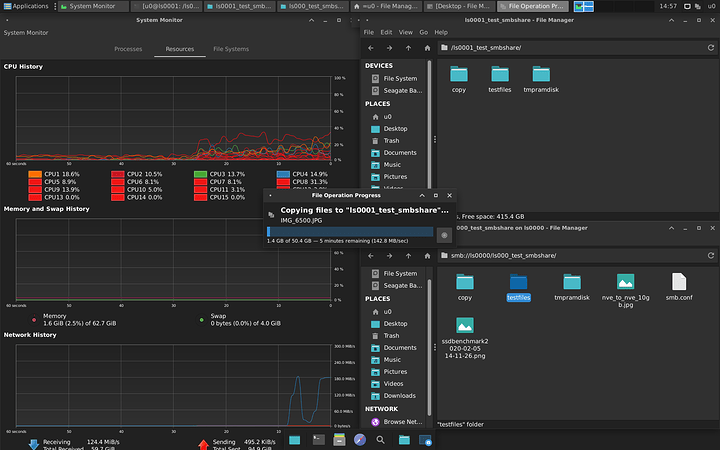

LS0000 - x470 ASRock Taichi Ultimate, Ryzen 2700, 10 Gbe Aquantia, Samsung 970 NVMe Pro 512GB, 64 GB ECC Ram, nVidia 760 Ti, PopOS 18.04 LTS, xfce4 (This remains the same), EXT4

LS0001 - x99M ASRock Fatal1ty Killer, Intel i7 6900K, 10 Gbe X540-T2, Samsung 970 NVMe Pro 512GB, 64 GB Non-ECC Ram, nVidia 970 Ti, PopOS 18.04 LTS, xfce4 (This used to have Windows 10), EXT4

==============================================================

DIRECTLY CONNECTED

10 Gbe Aquantia directly connected to X540-T2 (no switch)

==============================================================

TEST FILES

Test payload is 47GB in size and consists of various files: .txt, jpg, mp3, DVD ISOs of approx. 8GB, and mp4.

==============================================================

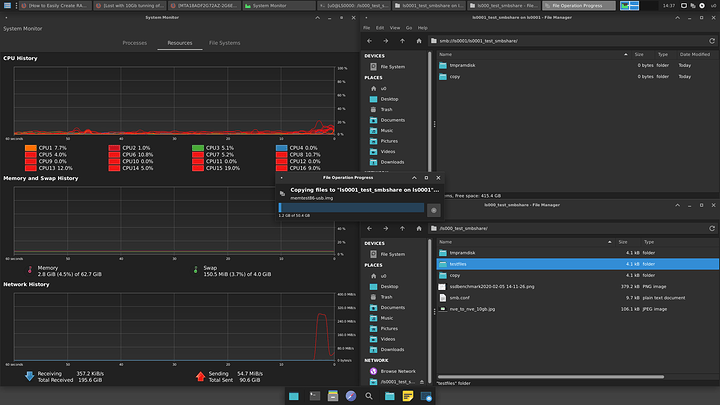

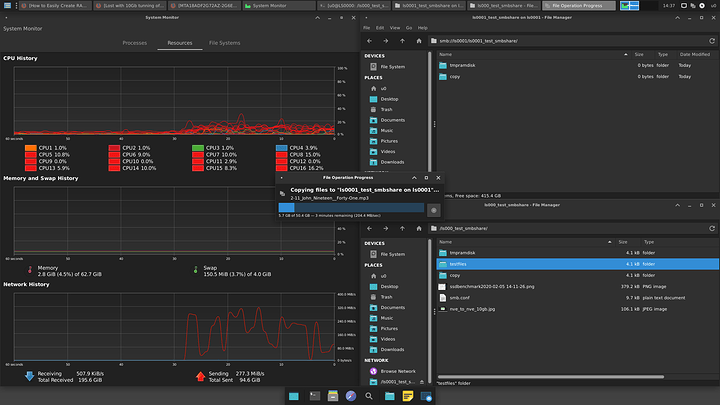

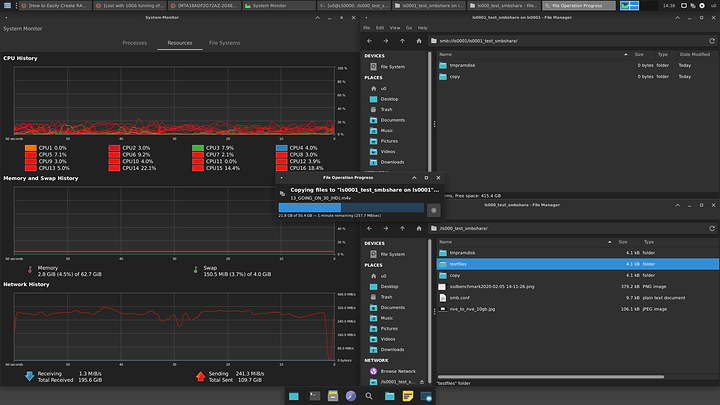

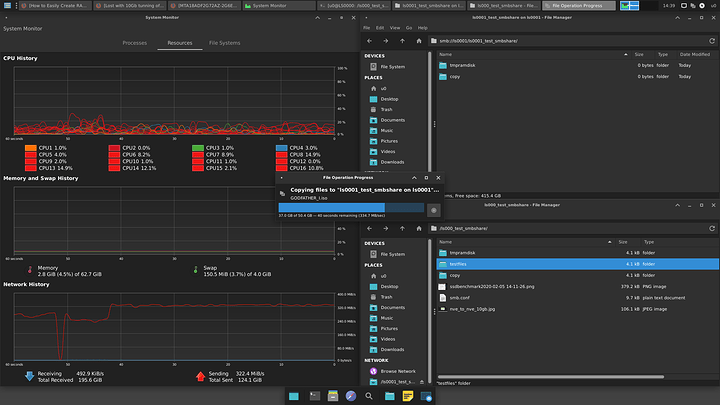

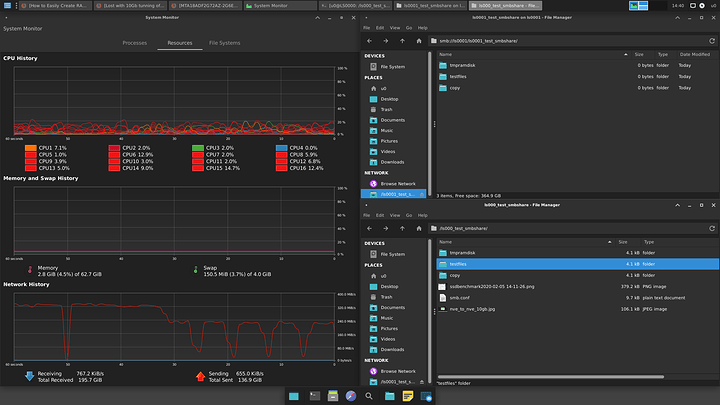

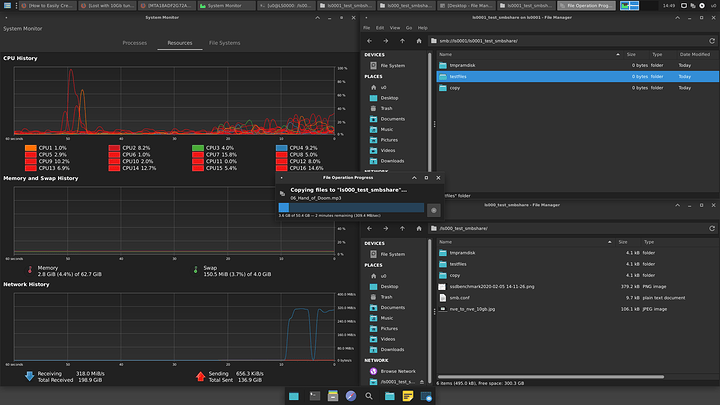

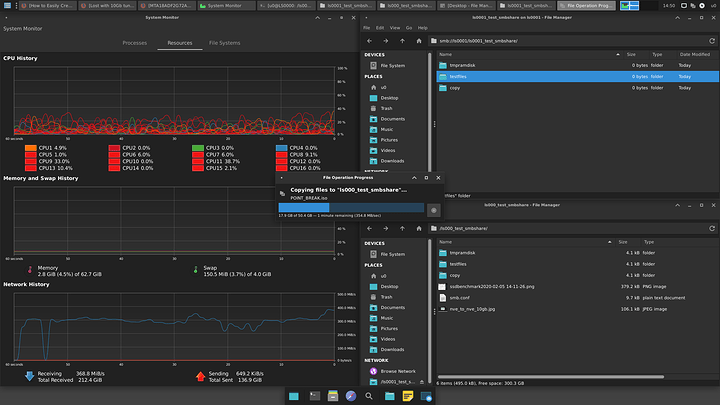

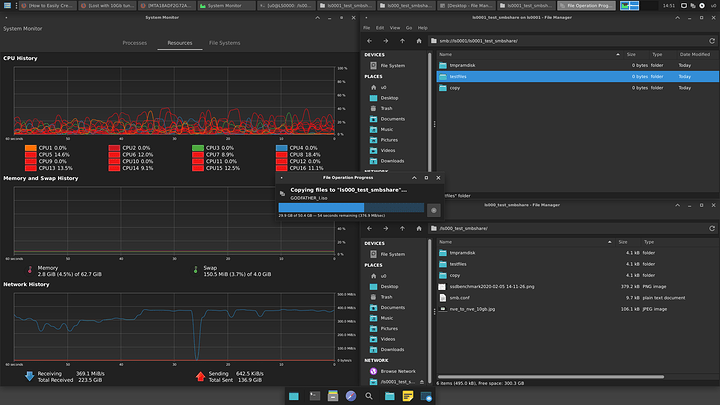

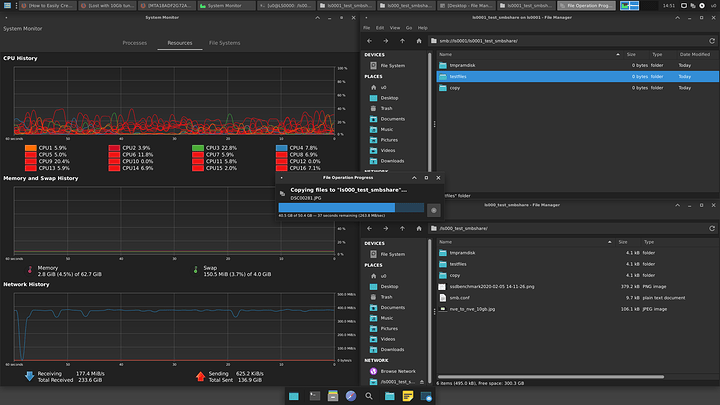

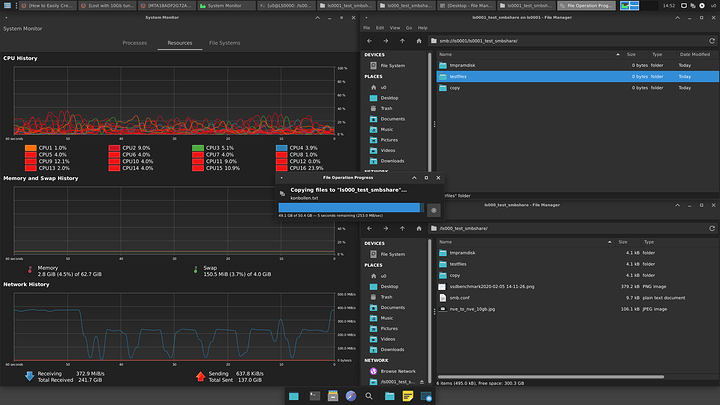

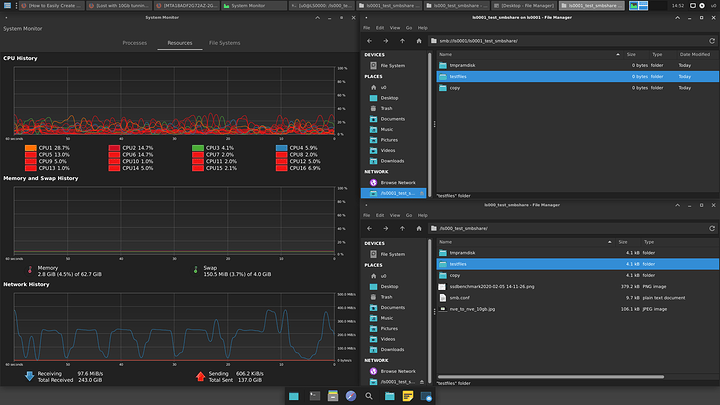

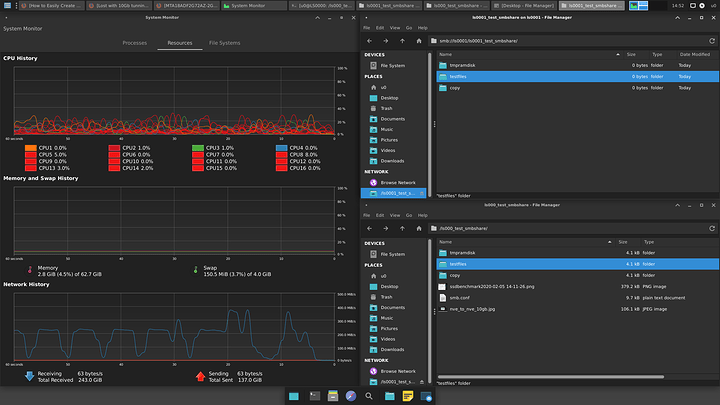

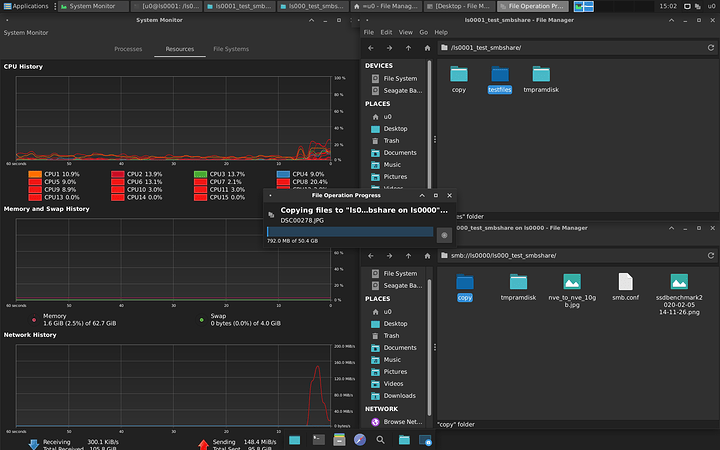

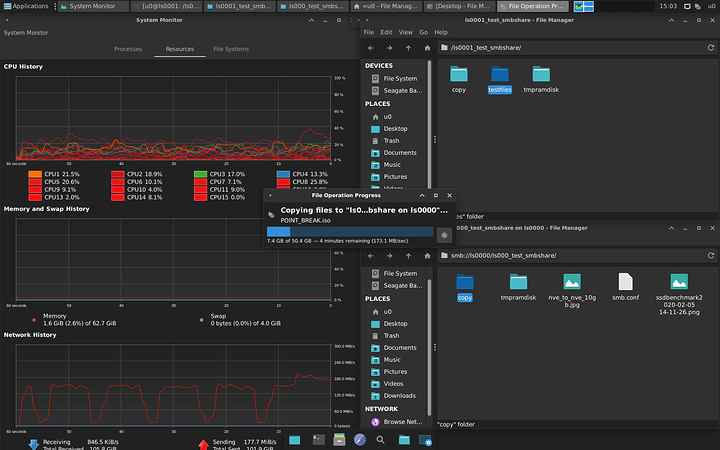

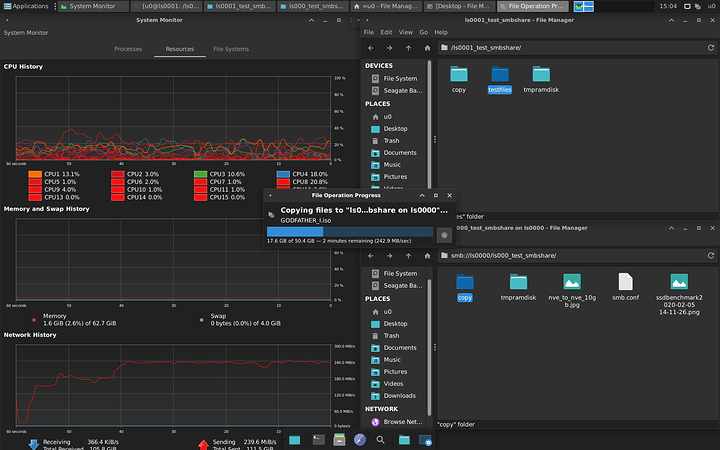

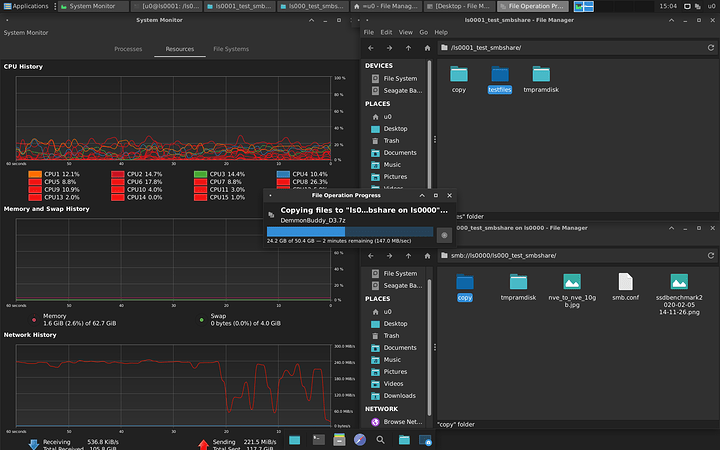

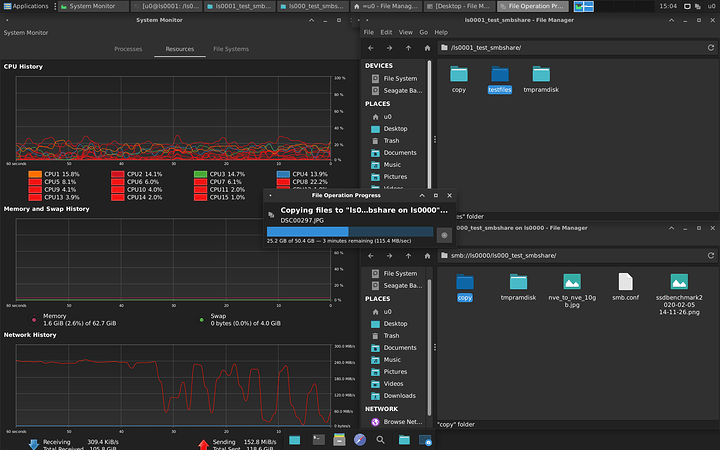

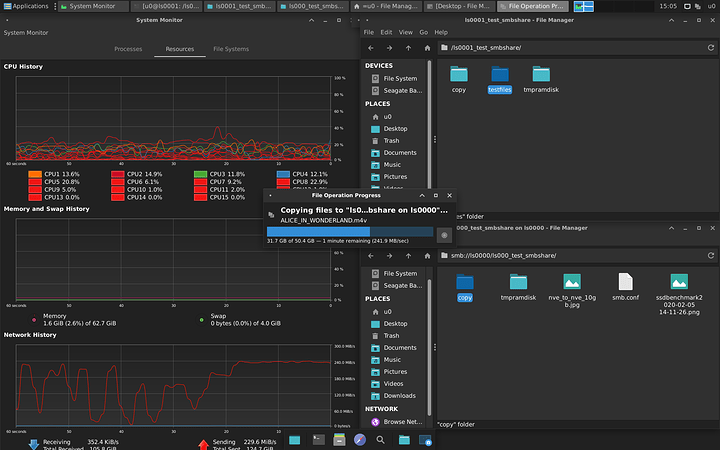

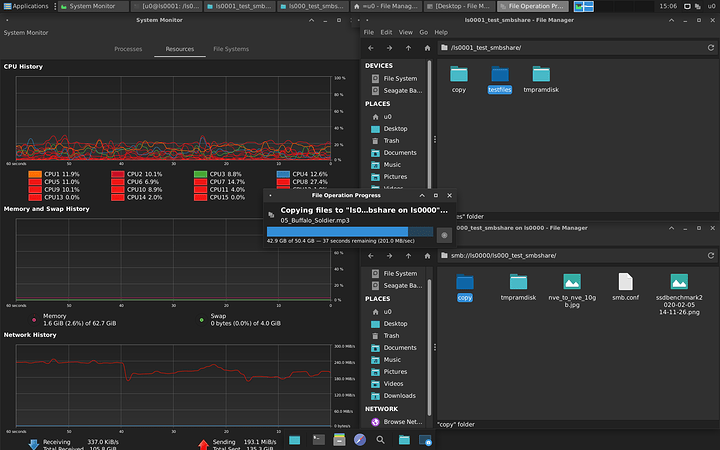

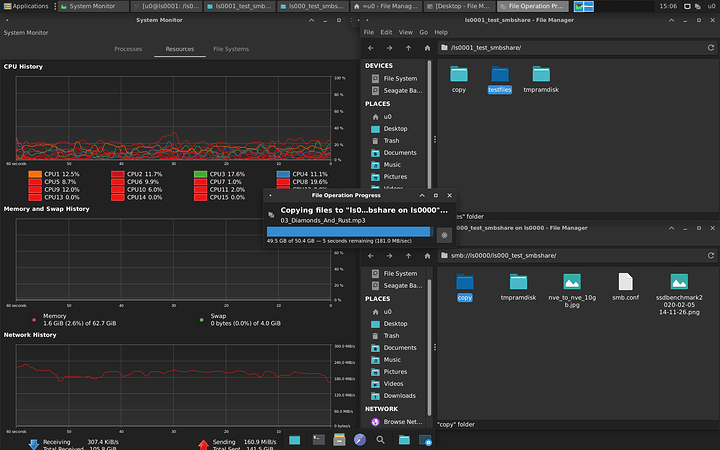

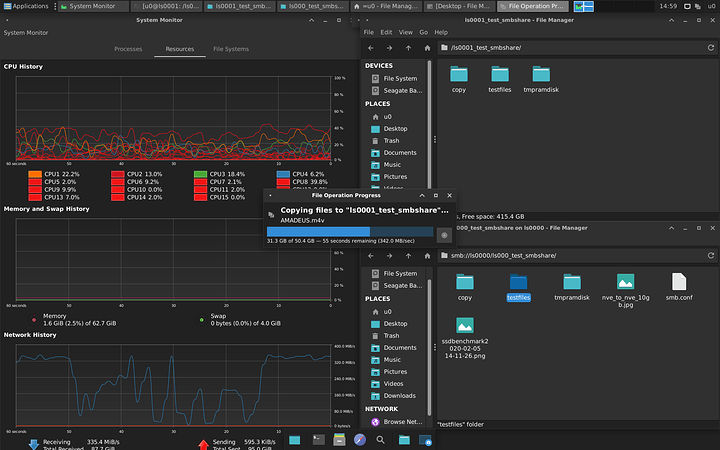

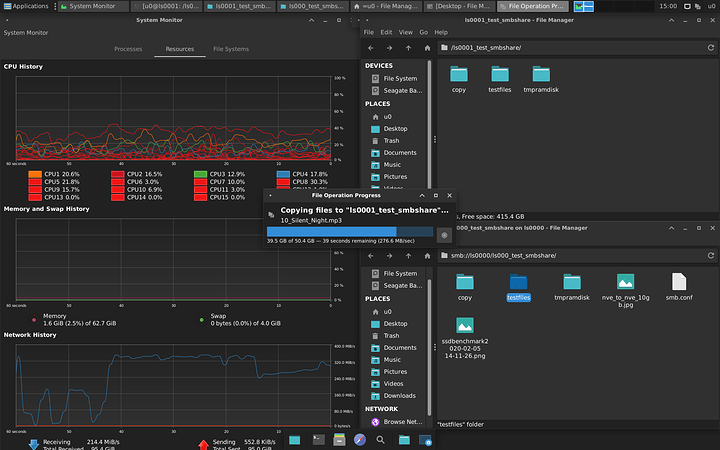

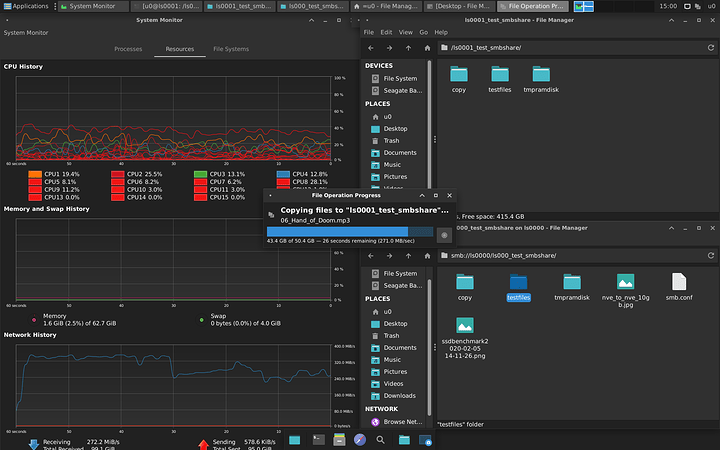

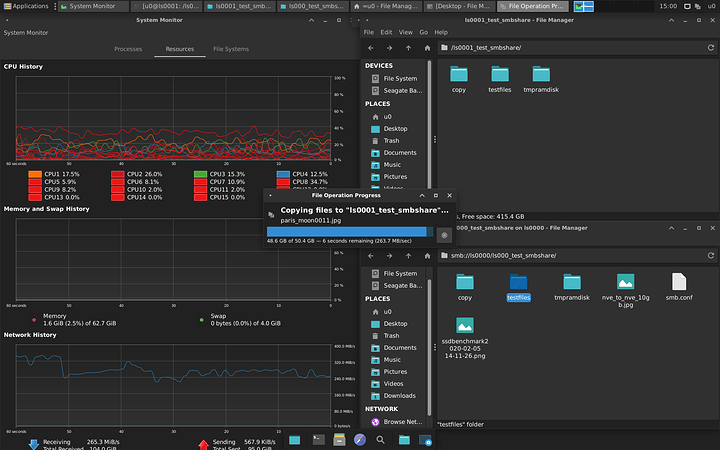

OBSERVATIONS

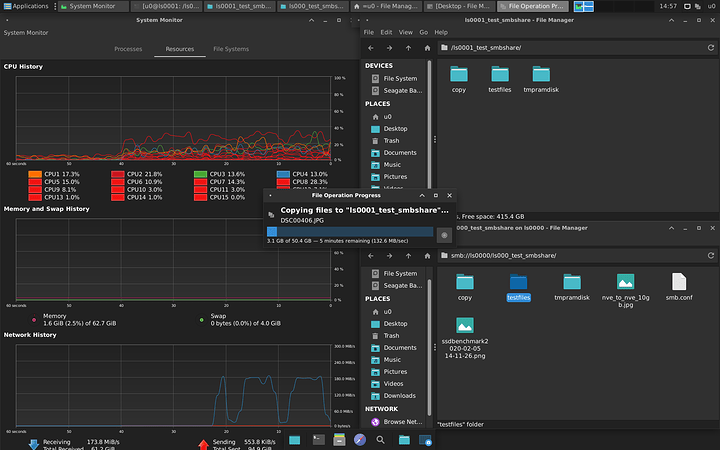

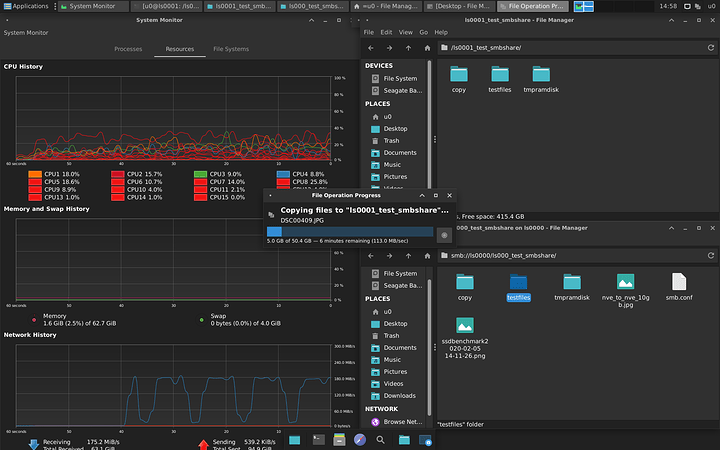

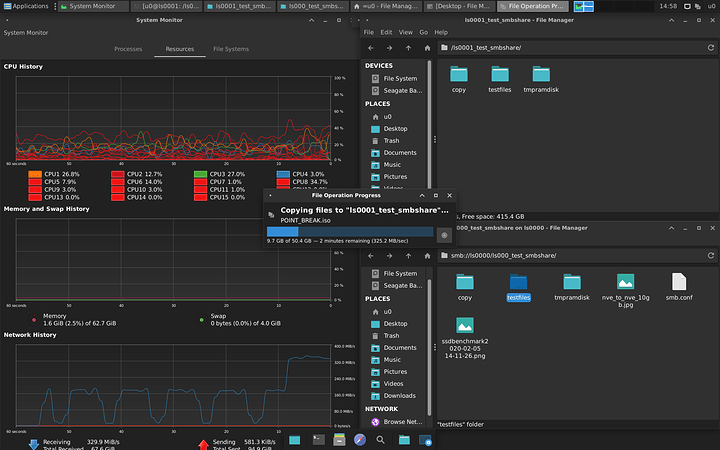

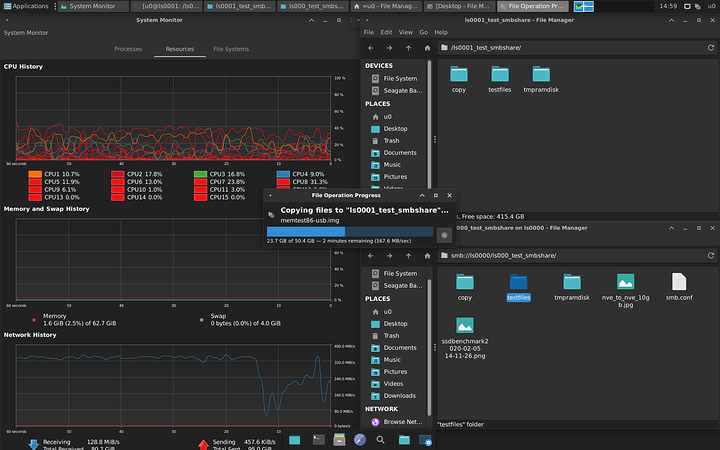

I tried copying the test files 4 times: 2 times by initiating the copy from LS0000 and 2 times by initiating the copy from LS0001. In general, it seems like everything goes faster when the copy is initiated from LS0000. Network throughput hits 320MB/s but it is not consistent, and there are dips below 80MB/s sometimes. It also seems that some files get more consistent speeds than others (e.g., larger DVD ISOs of approx. 8GB seem to be copied more consistently than small .JPG files).

When initiating the copy from LS0000 I get the following, but it is not stable and I see dips as low as 80MB/s and below. So I guess the questions are:

What is causing the dip if this is NVMe to NVMe?

Why can’t I get consistent speed?

I can try the rest of the bullet points but are we not down to the simplest thing here which should give consistent performance?

LS0000 to LS0001 gives me up to 320MB/s

LS0001 to LS0000 gives me up to 376MB/s

When initiating the copy from LS0001 I get the following, but it is not stable and I also see dips:

LS0001 to LS0000 gives me up to 240MB/s

LS0000 to LS0001 gives me up to 320MB/s

Many thanks!!!

This is normal behaviour, an overhead of the controller.

Overall I’d say your network cards seem to be working fine and any limitations are elsewhere in the system, likely the older kit.

The controller, even on NVMe, can’t cope with many small file writes without flushing the cache.

Try the RAID array; it will halve the load on the controller.
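The small-file effect can be seen locally, without the network involved. The sketch below writes the same amount of data once as a single file and once as many 4KiB files, forcing each file to disk. It is an illustration under assumptions: a per-file `fsync` exaggerates what an ordinary copy tool does, the sizes are arbitrary, and `write_files` is a made-up name.

```python
import os
import tempfile
import time


def write_files(directory: str, count: int, size: int) -> float:
    """Write `count` files of `size` bytes, fsyncing each; return MB/s."""
    data = os.urandom(size)
    start = time.perf_counter()
    for i in range(count):
        with open(os.path.join(directory, f"f{i}.bin"), "wb") as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())              # force this file out of the cache
    elapsed = time.perf_counter() - start
    return count * size / elapsed / 1e6


if __name__ == "__main__":
    total = 16 * 1024**2                      # 16 MiB per run, kept small on purpose
    # pass dir=... to TemporaryDirectory to target the drive under test
    with tempfile.TemporaryDirectory() as tmp:
        one_large = write_files(tmp, 1, total)
    with tempfile.TemporaryDirectory() as tmp:
        many_small = write_files(tmp, total // 4096, 4096)
    print(f"one large file:   {one_large:8.1f} MB/s")
    print(f"many small files: {many_small:8.1f} MB/s")
```

Note that the default temporary directory may live on a RAM-backed filesystem on some distributions, which would hide the effect entirely.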

I see. Thank you.

I think I have some options as to RAID: I can either try the LSI card or mdadm. I think I should go with mdadm as it avoids extra hardware. What do you think?

Definitely start with mdadm for testing. It’s free!