Oh wow. I haven’t used Proxmox in ages; I guess they’ve got some new features.

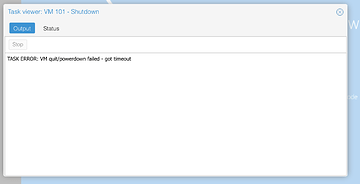

EDIT: It seems to have shut down after a few minutes of spinning, but I did have to kill it manually with `qm stop 101`.

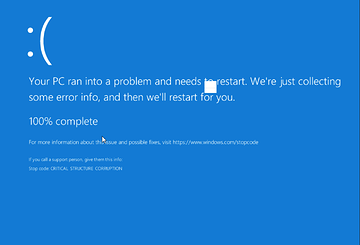

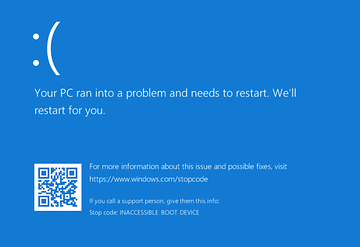

Gotta love Windows:

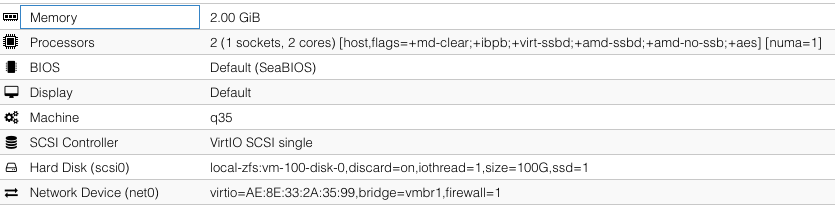

For reference, the new conf is:

```
bootdisk: ide0
cores: 12
hostpci0: 0d:00.0
hostpci1: 0d:00.1
ide0: local-lvm:vm-101-disk-0,size=48G
ide2: local:iso/Windows-10-0.iso,media=cdrom
machine: q35
memory: 65536
name: windows
net0: e1000=D6:98:2F:41:01:B3,bridge=vmbr0,firewall=1
numa: 1
ostype: win10
scsihw: virtio-scsi-pci
smbios1: uuid=ee5160e6-e116-42ae-9575-a9e25648ad91
sockets: 1
vmgenid: a46c4555-550e-422f-a831-1af24d1a93bc
```

I had to append that exact parameter to my boot args because the log files were piling up on my Proxmox server.

And my googling led me to an Arch Linux thread, which led me to a post Wendell made.

Yeah, I’m not sure what’s up here… I can look at it more later, but I’m working right now.

Thanks mate, you’re amazing! Should probably do the same

So, I’m currently back to working on the machine, and a quick Cinebench run is showing 1800, so all together not too bad for a ten-year-old CPU in a VM!

Still nothing coming out of the RX 5600 XT, though the Navi reset patch is working its magic!

Currently I’m getting through the mountain of Windows updates, moving to Windows 10 version 2004 and attempting to get drivers. Before I started doing Windows updates, I attempted to install the latest WHQL drivers, but kept getting either a Code 43 (device not functioning) or a Code 31 (driver not correctly installed).

Once the updates complete, I’ll use DDU, try a fresh install of the WHQL drivers, and report back.

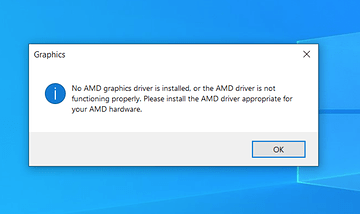

Alright, after using the factory reset option in the driver install package and completing the installation, the moment I hit Complete and launch software it returns a:

I’m wondering if it has to do with the audio and video functions being passed through individually.

Will update this post after I try it.

Tried it and saw no observable difference between the two, unfortunately.

Other more different update:

Going into Device Manager, I noticed a never-before-seen PCI device listed as “Pci bus 6, device 3”.

Is this maybe a weird device that PVE is passing through?

Other update part 2, electric boogaloo:

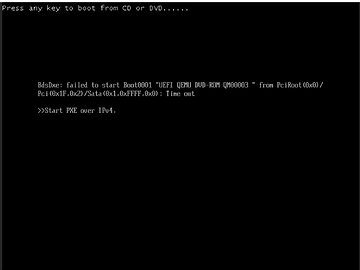

It seems that almost every guide I can find for Proxmox GPU passthrough recommends using the x-vga and pcie=1 flags in the conf file. The issue is that doing so spits out:

```
vm: -device vfio-pci,host=0000:0d:00.0,id=hostpci0.0,bus=ich9-pcie-port-1,addr=0x0.0,x-vga=on,multifunction=on: vfio 0000:0d:00.0: failed getting region info for VGA region index 8: Invalid argument
device does not support requested feature x-vga
TASK ERROR: start failed: QEMU exited with code 1
```

which seems odd to me.
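Both flags end up as options on a Proxmox `hostpciN` entry. Below is a minimal Python sketch, using a hypothetical helper named `build_hostpci`, that assembles such an entry and refuses `pcie=1` unless the machine type is q35 (the PCIe bus option needs the q35 machine model). It leaves `x-vga` off by default, since the QEMU error above reports that this card does not support the VGA region that feature requests.

```python
def build_hostpci(host: str, machine: str, pcie: bool = False, x_vga: bool = False) -> str:
    """Build the value of a Proxmox 'hostpciN:' line, e.g. '0d:00,pcie=1'.

    Hypothetical helper for illustration; it only knows the pcie and x-vga options.
    """
    # Both plain "q35" and versioned machine types like "pc-q35-5.1" count as q35.
    if pcie and "q35" not in machine:
        raise ValueError("pcie=1 requires 'machine: q35'")
    opts = [host]
    if pcie:
        opts.append("pcie=1")
    if x_vga:
        # This is the option that failed above with
        # "device does not support requested feature x-vga".
        opts.append("x-vga=1")
    return ",".join(opts)


print("hostpci0: " + build_hostpci("0d:00", machine="q35", pcie=True))
# prints: hostpci0: 0d:00,pcie=1
```

Passing just the bus and device (`0d:00`, no function number) hands over all functions of the card in one entry, which is what the later config in this thread does.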

Thinking it might be a conflict between the QEMU display and the GPU itself, I disabled the virtual display, but that didn’t seem to do anything unfortunately.

Trying just pcie=1 with no virtual display, the VM booted, but there’s still no output from the GPU.

With the virtual GPU and pcie=1 it does boot up correctly, but it doesn’t seem to behave any differently than before.

Digging through Device Manager, I noticed it seems to be running at PCIe 2.0 x4, though the slot is PCIe 2.0 x8. Not sure that this means anything one way or another.
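For a sense of scale, the sketch below works out PCIe 2.0 bandwidth: 5 GT/s per lane with 8b/10b encoding leaves 4 Gbit/s, or about 500 MB/s per lane per direction, before packet overhead. The function name is illustrative only. Going from x8 to x4 halves that ceiling, which would limit throughput but would not by itself be expected to stop the card from producing a display signal.

```python
# PCIe 2.0 signals at 5 GT/s per lane and uses 8b/10b line coding,
# so 8 of every 10 transferred bits are payload.
GEN2_GT_PER_S = 5.0
GEN2_ENCODING = 8 / 10


def pcie2_bandwidth_gbytes(lanes: int) -> float:
    """Approximate usable bandwidth per direction in GB/s, ignoring packet overhead."""
    gbit_per_lane = GEN2_GT_PER_S * GEN2_ENCODING  # 4 Gbit/s per lane
    return gbit_per_lane * lanes / 8  # bits -> bytes


for lanes in (4, 8):
    print(f"PCIe 2.0 x{lanes}: ~{pcie2_bandwidth_gbytes(lanes):.1f} GB/s per direction")
# prints:
# PCIe 2.0 x4: ~2.0 GB/s per direction
# PCIe 2.0 x8: ~4.0 GB/s per direction
```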

At a total loss for how to fix this unfortunately

Maybe @wendell or @gnif have an idea?

(sorry for the unprompted tag, gents)

As of now nothing I do in Windows seems to have had an effect, and I’m not well enough versed in VFIO to diagnose the Debian/PVE side of this.

Purely out of curiosity, I removed the GPU from the VM, booted it up, and ran DDU. I noticed that the VM booted instantly, whereas with the GPU it seemed to take upwards of a minute to get to the login screen.

Adding the GPU back in afterwards (with the video and audio functions passed separately, both with pcie=1) has made the boot process take a relatively long time (sitting on a Windows “Please Wait” screen, switching to an all-black screen over and over for around ten minutes) and use a relatively large amount of CPU per the Proxmox hardware tab (~60-90% on all 12 threads).

Also, compared to earlier, VNC performance seems to have dropped a fair bit; clicks sometimes take multiple seconds to register.

Update: it’s been sitting at 99% cpu for a few minutes now, machine seems pretty locked up

Hmmm, sounds like a ROM issue. You might need to specify the VBIOS file… (which I have no idea how to do in Proxmox)
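For what it’s worth, Proxmox’s `hostpciN` entry accepts a `romfile=` option, with the file looked up under `/usr/share/kvm/`. A minimal sketch under that assumption: the hypothetical helper `hostpci_with_romfile` checks that a dumped VBIOS is in place and starts with the `0x55 0xAA` signature that PCI option ROM images carry, then produces the entry. It is a sanity check on the file, not proof that the dump is good.

```python
from pathlib import Path

# Directory Proxmox resolves romfile= names against.
KVM_ROM_DIR = Path("/usr/share/kvm")


def looks_like_option_rom(path: Path) -> bool:
    """PCI option ROM images begin with the signature bytes 0x55 0xAA."""
    with path.open("rb") as f:
        return f.read(2) == b"\x55\xaa"


def hostpci_with_romfile(host: str, romfile: str, rom_dir: Path = KVM_ROM_DIR) -> str:
    """Return a hostpci value such as '0d:00,romfile=rx5600xt.rom' (hypothetical helper)."""
    rom = rom_dir / romfile
    if not rom.is_file():
        raise FileNotFoundError(f"copy the VBIOS dump to {rom} first")
    if not looks_like_option_rom(rom):
        raise ValueError(f"{rom} does not start with the 0x55AA option ROM signature")
    return f"{host},romfile={romfile}"
```

With a dump copied to `/usr/share/kvm/rx5600xt.rom` (a placeholder name), `hostpci_with_romfile("0d:00", "rx5600xt.rom")` would return `0d:00,romfile=rx5600xt.rom` for use as `hostpci0:`.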

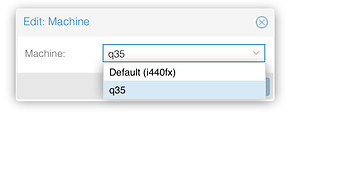

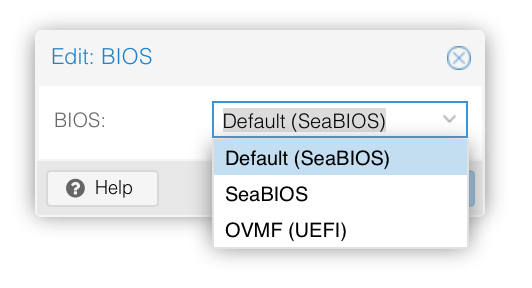

Do you mean this BIOS setting, or the VM’s virtual chipset (machine type)?

UPDATE:

Changing to the UEFI BIOS setting no longer allows me to connect via VNC, but had no effect on getting display output from the GPU.

Part 2:

With UEFI on, the machine crashes completely after around 30 seconds. Removing the card from the VM and starting in UEFI yields:

IIRC you can’t change from legacy to UEFI, so I’ll rebuild the VM in UEFI without the GPU, then add it back and update.

Well, yes. You definitely need OVMF.

But moreover, I was talking about the video card’s BIOS (yes, it has one).

You can override the BIOS by specifying a file. Let’s try switching to OVMF first though, since that’s a requirement of passthrough.
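Since OVMF is a prerequisite, it can help to check the VM config (for this VM, `/etc/pve/qemu-server/101.conf`) before adding the card back. Below is a minimal Python sketch with hypothetical helpers `parse_vm_conf` and `passthrough_problems`. It reads the top-level `key: value` lines and flags only the three settings discussed in this thread: a passthrough VM not using `bios: ovmf` (SeaBIOS is the default), OVMF without an `efidisk0`, and `pcie=1` without `machine: q35`. It is not a full validator.

```python
from __future__ import annotations


def parse_vm_conf(text: str) -> dict[str, str]:
    """Parse the top-level 'key: value' lines of a Proxmox VM config."""
    conf: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):  # '#' lines hold the VM description
            continue
        if line.startswith("["):  # snapshot/pending sections start here; stop
            break
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            conf[key.strip()] = value.strip()
    return conf


def passthrough_problems(conf: dict[str, str]) -> list[str]:
    """Flag the passthrough-related settings discussed in this thread."""
    problems = []
    hostpci = {k: v for k, v in conf.items() if k.startswith("hostpci")}
    if hostpci and conf.get("bios") != "ovmf":
        problems.append("bios is not ovmf (SeaBIOS is the default)")
    if conf.get("bios") == "ovmf" and "efidisk0" not in conf:
        problems.append("OVMF without efidisk0: EFI variables will not persist")
    if any("pcie=1" in v for v in hostpci.values()) and "q35" not in conf.get("machine", ""):
        problems.append("pcie=1 needs machine: q35")
    return problems


if __name__ == "__main__":
    # Trimmed from the first config posted in this thread.
    sample = """\
bootdisk: ide0
hostpci0: 0d:00.0
hostpci1: 0d:00.1
machine: q35
"""
    print(passthrough_problems(parse_vm_conf(sample)))
    # prints: ['bios is not ovmf (SeaBIOS is the default)']
```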

I’m going to pretend I definitely didn’t forget to change to a UEFI bios for passthrough…

(proceeds to put on dunce cap)

Changing over and applying Windows updates now; will add in the GPU once updated.

No worries. It’s something I had to learn the hard way as well

OK, newest update! Added the GPU with no extra parameters, using two separate entries (one for video, one for audio). The system boots, but then shuts down after ~30 seconds.

I get a “Guest has not initialized display (yet)” then nothing

Changing a few settings in the .conf to:

```
args: -cpu 'host,+kvm_pv_unhalt,+kvm_pv_eoi,hv_vendor_id=NV43FIX,kvm=off'
bios: ovmf
bootdisk: ide0
cores: 12
cpu: host,hidden=1,flags=+pcid
efidisk0: local-lvm:vm-101-disk-1,size=4M
hostpci0: 0d:00
ide0: local-lvm:vm-101-disk-0,size=48G
ide2: local:iso/Windows-10-0.iso,media=cdrom
machine: q35
memory: 65536
name: windows
net0: e1000=D6:98:2F:41:01:B3,bridge=vmbr0,firewall=1
numa: 1
ostype: win10
scsihw: virtio-scsi-pci
smbios1: uuid=ee5160e6-e116-42ae-9575-a9e25648ad91
sockets: 1
usb0: host=1-2
usb1: host=4-2
vga: virtio
vmgenid: a46c4555-550e-422f-a831-1af24d1a93bc
```

Right now it’s sitting on a black screen in VNC, with no signal to the physical display after 10 minutes.

From the GUI monitoring window, the VM is fluctuating between ~8-9% usage without doing much of anything.

If I remove the GPU, the VM boots up fine and a USB keyboard I passed through works fine.

Tried using scsi0 with no success; Windows wouldn’t detect it. SATA is detected and is installing now. Once that’s up and running, I’ll do updates and report back on the GPU!

That’s because scsi is not scsi.

It’s virtio. It’s the most performant storage method, short of passthrough, but it requires drivers because Microsoft is stubborn and won’t include the drivers…

https://pve.proxmox.com/wiki/Windows_VirtIO_Drivers

Direct DL: https://fedorapeople.org/groups/virt/virtio-win/direct-downloads/stable-virtio/virtio-win.iso

Whatever you do, don’t install the virtio balloon driver.

Thanks, I didn’t know that.