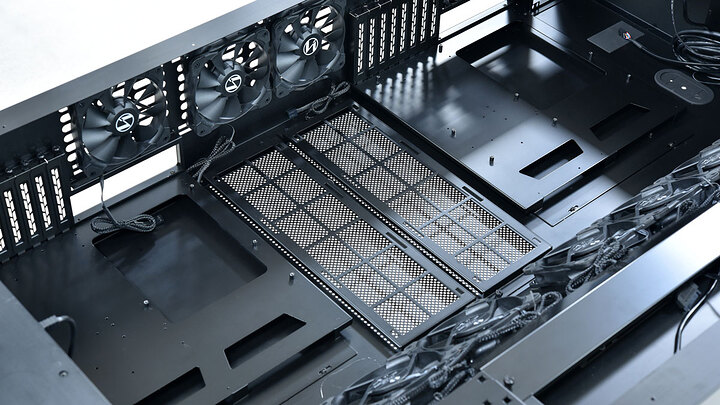

I’m trying to find a way to extend my PCIe slots across to either risers or a 4-GPU backplane. I’m building a Lian Li desk that can normally host two complete systems, and I’m using an ASUS motherboard for the 64-core Threadripper Pro. I’m a graphic artist/dev who uses 3D rendering. I have 4 Radeon Pro W5700s I want to put on the left side of the desk. I’m going to water cool them all on that side and use them as a render farm. The right side is for the motherboard, which has 7 PCIe x16 slots. I will be using a Radeon VII on that side to handle regular monitor output. I’m populating some of the PCIe slots with NVMe expansion cards and a SAS board for a massive storage setup. I have mining risers, but I’m not sure x1 is enough for render farm cards. Is there an easy way to expand one x16 slot to 4 GPUs over about 2 feet of distance without losing GPU acceleration performance? I know I could put another system on the other side and slave it to the sworkstation, but honestly this beast doesn’t need it. I just need ideas or parts that might help in using all these PCIe lanes!
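On the “is x1 enough” question, the raw link numbers are easy to work out. A minimal Python sketch, assuming nominal per-lane transfer rates and counting only encoding overhead: a Gen3 x1 mining riser gives roughly 1 GB/s, one sixteenth of a Gen3 x16 slot. How much that actually slows a render depends on how much data the renderer moves over the bus, which this does not model.

```python
# Approximate usable PCIe bandwidth per direction. Only line-encoding
# overhead is counted (8b/10b for Gen1/2, 128b/130b for Gen3/4); packet and
# protocol overhead make real-world numbers somewhat lower.

GT_PER_LANE = {1: 2.5, 2: 5.0, 3: 8.0, 4: 16.0}  # GT/s per lane
ENCODING_EFFICIENCY = {1: 8 / 10, 2: 8 / 10, 3: 128 / 130, 4: 128 / 130}


def pcie_gb_per_s(gen: int, lanes: int) -> float:
    """Approximate GB/s in one direction for a PCIe link of `lanes` width."""
    usable_gbit = GT_PER_LANE[gen] * ENCODING_EFFICIENCY[gen] * lanes
    return usable_gbit / 8  # 8 bits per byte


if __name__ == "__main__":
    for gen in (2, 3, 4):
        for lanes in (1, 4, 16):
            print(f"Gen{gen} x{lanes:<2} ~ {pcie_gb_per_s(gen, lanes):5.2f} GB/s")
```

The Gen2 rows are handy when comparing Gen2 backplanes: by this estimate a Gen2 x16 link tops out around 8 GB/s, about half of Gen3 x16.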

The least-headache option may actually be to just shove the render GPUs in an actual system. Then your main system could reboot or do anything while a render’s going, no problem.

Otherwise you probably kinda need a special PCIe expander backplane, which you can get

+1 This.

Rendering as a service in your own home. Flipside, you can turn them off when you don’t need them.

… as if you ever don’t need them <3

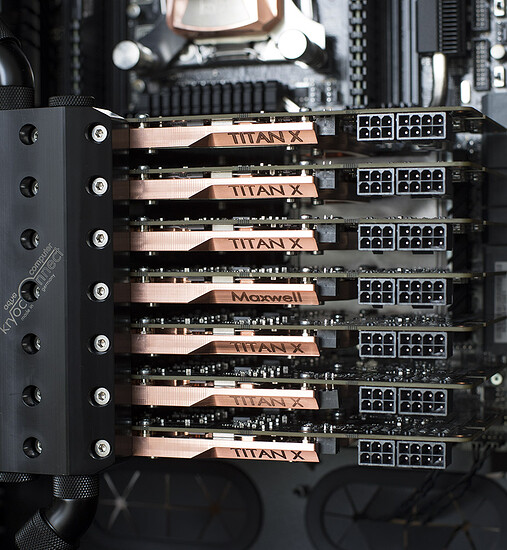

Yes, I was trying to use a backplane-type setup. I know I can chuck in a cheap motherboard to run the GPU render farm. But with so many PCIe lanes on the Threadripper, and the fact that some of the rendering can also be done on the CPU, it would allow for maximum use of the Threadripper build. Why waste x16 slots by covering them with GPUs? Even water cooled, they don’t leave much room between cards to use those slots. I’m trying to find a way to use all those PCIe lanes. Otherwise I could just use one of my mining boards as the render farm host. I’m already having to modify the GPU water blocks to fit the Pro cards; it’s minor plastic work.

I would love to have a dual EPYC render farm and the Threadripper Pro as a workstation… but the Threadripper broke the budget. I got it, but there’s not enough left to consider clustering a dual EPYC on the left side.

I’d love one EPYC or TR for home, but I can’t justify the cost!

It was one or the other for me… Wendell’s review of the ASUS board made up my mind… It truly is a sworkstation. But trying to find ways to utilize all that horsepower has been a challenge… Currently it is in a temporary enclosure plotting HDD space for crypto mining… I’m running about a petabyte of storage for mining, and a little of it goes towards 3D assets and development files. Passive income.

I hope it pays off for you, sir!

I’m trying to find a backplane that is Gen3; Magma makes some that are Gen2… Do you know of any Gen3 backplanes with 4-7 slots at single-slot spacing?

You’re looking at a specialty industrial supplier, unfortunately. Check AliExpress and see what’s there.

These mining guys have gotten fancy

Way too much money for what it is though

Yeah, I’m a mining guy too. I have the bifurcation hardware and risers, but the x4 connection does reduce performance some. I think I’m going to go with Wendell’s advice and get another system to run my graphics cards. I’ve got 7 Radeon Pro W5700s; I’m going to water cool them all and run them on an ASUS X299 motherboard. X299 is my old CPU platform… so why not use it? I’d like to slave that system to the Threadripper Pro, kind of like a cluster.
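To put the x4 bifurcation hit in perspective, here is a rough Python sketch of how long it takes just to push a scene across the link. The 8 GB scene size is a hypothetical placeholder, Gen3 is assumed for the risers, and only encoding overhead is counted, so real times will be somewhat longer. Once a scene is resident in VRAM, a render that mostly stays on the card may be less sensitive to link width than these numbers suggest, but that depends on the renderer.

```python
# Rough time to copy a scene into GPU memory over links of different widths.
# Assumes PCIe Gen3 (8 GT/s per lane, 128b/130b encoding); protocol overhead
# is ignored, so these are best-case figures.

GEN3_GB_PER_LANE = 8.0 * (128 / 130) / 8  # ~0.985 GB/s per lane


def upload_seconds(scene_gb: float, lanes: int) -> float:
    """Best-case seconds to move `scene_gb` gigabytes over a Gen3 xN link."""
    return scene_gb / (GEN3_GB_PER_LANE * lanes)


SCENE_GB = 8.0  # hypothetical scene size, not a measured value

if __name__ == "__main__":
    for label, lanes in (("full x16 slot", 16),
                         ("x16 bifurcated to x4", 4),
                         ("x1 mining riser", 1)):
        print(f"{label:>22}: {upload_seconds(SCENE_GB, lanes):5.2f} s")
```

Each x4 link after bifurcation is dedicated to one card, so the cost shows up as a fixed 4x longer transfer rather than contention between GPUs.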

I went with the Radeon Pro W5700 cards because Igor’s Lab created a new BIOS for them that boosts performance and efficiency. I also found the cards at MSRP, so I couldn’t pass that up. Finding water blocks was the challenge, but apparently the 5700 XT board is laid out almost the same, except for the ports. So modifying existing 5700 XT water blocks is doable; it’s mostly some plastic trimming on parts not connected to the actual water block.

I’m very interested in getting a “seamless” VM-container-style OS on this system. I like the direction Qubes is going. I use Windows mostly for play testing developed 3D assets and games. I love Linux and hate that I can’t do all my work in Linux. I started on Ubuntu years ago, then switched to Arch. I loved Arch for its approach of installing only what I specifically want on my system. Is it better to use Arch or switch to Qubes to get the VM stuff working most easily?

Also following your 20 million I/O work closely. I mine Chia on about a petabyte of storage. It takes a lot of time to plot, and plotting is notorious for burning through NAND flash. I’m currently running 3 ASUS RAID cards with 4 NVMe drives (Sabrent Gen4) in each. This allows me to plot 60 plots in parallel, for 6 TB of plots per cycle. This is highly I/O dependent. I’ve spread the 3 RAID cards evenly to try to balance the load on the die. My bottleneck at present is the HDD write speed on the final destination. I am about to use a SAS controller to add thirty-two 18 TB IronWolf drives. I need a fan to cool that board.
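The “6 TB per cycle” figure lines up with standard k32 plots (about 101.4 GiB each), which is an assumption since the post doesn’t state the k-size. A quick Python sketch of the arithmetic, which also shows why the final HDD write is the bottleneck: writing a whole cycle to one destination drive takes hours, and writing to several drives in parallel divides that time. The 200 MB/s per-drive rate is a hypothetical placeholder, not an IronWolf spec.

```python
# Back-of-the-envelope Chia numbers. Assumes standard k32 plots
# (~101.4 GiB each); drive speeds used below are hypothetical placeholders.

GIB = 1024 ** 3  # bytes in a GiB
TB = 1000 ** 4   # bytes in a decimal TB, as drives are marketed
K32_PLOT_BYTES = 101.4 * GIB


def cycle_output_tb(parallel_plots: int) -> float:
    """Decimal TB produced by one cycle of `parallel_plots` k32 plots."""
    return parallel_plots * K32_PLOT_BYTES / TB


def plots_that_fit(drives: int, drive_tb: float) -> int:
    """Whole k32 plots that fit on raw capacity (filesystem overhead ignored)."""
    return int(drives * drive_tb * TB // K32_PLOT_BYTES)


def copy_hours(total_tb: float, drives: int, mb_per_s_per_drive: float) -> float:
    """Hours to write `total_tb` when `drives` HDDs are written in parallel."""
    total_mb = total_tb * 1_000_000
    return total_mb / (drives * mb_per_s_per_drive) / 3600


if __name__ == "__main__":
    out = cycle_output_tb(60)
    print(f"60 parallel plots ~ {out:.2f} TB per cycle")
    print(f"32 x 18 TB holds ~ {plots_that_fit(32, 18)} plots")
    for n in (1, 4, 8):  # destination drives written at once
        print(f"{n} drive(s) @ 200 MB/s: {copy_hours(out, n, 200):.1f} h")
```

By this estimate, 60 k32 plots come to about 6.5 decimal TB (roughly 5.9 TiB), and the new 576 TB of raw SAS capacity holds about 5,290 plots before filesystem overhead.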

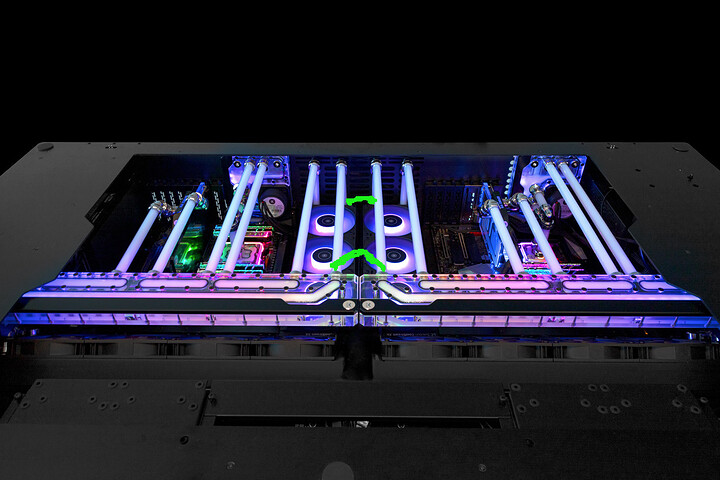

@wendell OK, getting close to running the water loops once the rest of the parts come in from EK and Watercool. I have a question about running the water loop slightly differently from the plan in the EK fit-out. I have 7 GPUs and the i9 on one side, and 1 GPU and the 64-core Threadripper Pro on the other side, with 2 quad radiators up front and 2 extra-thick triple radiators in the center between the boards. Can I crisscross the streams, so there is one loop with 2 pumps and all the radiators connected, instead of 2 separate loops? The render board (7 GPUs + i9) will be putting out a lot of heat, whereas the Threadripper and single GPU won’t need as much radiator cooling. I’m trying to balance the heat load across all 4 radiators.
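The balancing idea can be sanity-checked with rough numbers. A minimal Python sketch, assuming placeholder wattages (not measured or spec values) and treating each 120 mm fan position as equal cooling capacity, which ignores the extra thickness of the center triples. In one combined loop every radiator shares the total heat, while two separate loops leave the render side carrying most of it. The way radiators are split between the two loops here is a hypothetical example, not the EK plan.

```python
# Compare radiator load for one combined loop vs two separate loops.
# All wattages are hypothetical placeholders, not measured or spec values.
# Radiator capacity is approximated by 120 mm fan positions; thickness and
# fan speed are ignored, so the extra-thick triples are undercounted.

from dataclasses import dataclass


@dataclass(frozen=True)
class Radiator:
    name: str
    fan_slots: int  # number of 120 mm fan positions


def watts_per_slot(heat_w: float, radiators: list[Radiator]) -> float:
    """Average heat each fan position must shed for a given loop."""
    return heat_w / sum(r.fan_slots for r in radiators)


RENDER_SIDE_W = 7 * 200 + 165  # 7 GPUs + i9 (placeholder numbers)
WORK_SIDE_W = 300 + 280        # 1 GPU + Threadripper Pro (placeholders)

quad_a, quad_b = Radiator("front quad A", 4), Radiator("front quad B", 4)
tri_a, tri_b = Radiator("center triple A", 3), Radiator("center triple B", 3)

# One loop: all four radiators share the total heat.
combined = watts_per_slot(RENDER_SIDE_W + WORK_SIDE_W, [quad_a, quad_b, tri_a, tri_b])

# Two loops, hypothetically split as one quad + one triple each.
render_loop = watts_per_slot(RENDER_SIDE_W, [quad_a, tri_a])
work_loop = watts_per_slot(WORK_SIDE_W, [quad_b, tri_b])

if __name__ == "__main__":
    print(f"combined loop: {combined:6.1f} W per fan slot")
    print(f"render loop:   {render_loop:6.1f} W per fan slot")
    print(f"work loop:     {work_loop:6.1f} W per fan slot")
```

With these placeholders the combined loop sits between the two split loops, which is the balancing effect described above; the absolute numbers mean little until real power draws are plugged in.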

@wendell Never mind, the GPU water blocks are 1 mm too thick, so I will have to spread them out over both motherboards: 4 GPUs on each. Since the heat will be spread equally now, I can stick to the EK loop plan. I’m looking at clustering the 2 motherboards together, 1 master and 1 slave. I want to run either Linux or Qubes OS. Is it possible to cluster them? I’m willing to deep dive into the technical aspects. I just installed 6 HDD cages under the desk to expand to 750 TB. This rig will be a computer science home lab and 3D CAD-CAM workstation for machine learning and AI-assisted design, with the ability to mine on CPU, GPU, and HDD on the side.
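On the clustering question: for a render farm, the “master” mostly needs to hand out work. A minimal Python sketch of one simple scheme, assuming frame-based rendering and splitting a frame range in proportion to each node’s GPU count (with 4 GPUs per board, that works out to an even split). The node names are hypothetical, and this is not tied to any particular OS, clustering tool, or render manager.

```python
# Split a frame range across cluster nodes in proportion to GPU count,
# the way a master node might hand out work to itself and a worker.
# Node names used below are hypothetical.


def split_frames(first: int, last: int, gpus_per_node: dict[str, int]) -> dict[str, range]:
    """Assign contiguous frame ranges (inclusive first..last) to each node."""
    total_frames = last - first + 1
    total_gpus = sum(gpus_per_node.values())
    nodes = list(gpus_per_node.items())
    assignment: dict[str, range] = {}
    start = first
    for i, (node, gpus) in enumerate(nodes):
        if i == len(nodes) - 1:
            count = first + total_frames - start  # last node takes the remainder
        else:
            count = total_frames * gpus // total_gpus
        assignment[node] = range(start, start + count)
        start += count
    return assignment


if __name__ == "__main__":
    jobs = split_frames(1, 240, {"threadripper-master": 4, "x299-worker": 4})
    for node, frames in jobs.items():
        print(f"{node}: frames {frames.start}-{frames.stop - 1}")
```

Giving the remainder to the last node keeps every frame assigned exactly once even when the frame count doesn’t divide evenly.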

Yeah, but people pay that much for enterprise cables, so it probably won’t go down.
