Y'know, for someone who's been messing around with computers for nearly 20 years, hanging out on this forum makes me realize I know next to NOTHING compared to you people. Half the stuff you say, I have to sit and think about before I understand it.

I barely understand networking, and I'm a complete and utter noob when it comes to Linux, which has been my daily driver OS for some time now. I haven't figured out a good backup strategy for it yet, because most of the systems in my house are still predominantly Windows-based. But here goes.

My backup solution is probably all wrong, and my server is probably set up all wrong too.

My server is an older AMD Athlon II dual-core PC with 8GB of RAM that I built years ago. It runs Windows Server 2012 R2 and "takes care" of Active Directory on my network, because I need security features that limit my kids to certain websites and keep them out of the folders on my NAS that aren't for kids. It also lets me limit what they can change on their computers and what they can and cannot download. They're still only 8 and 9, so I'm sheltering them as much as I can from the horrors the Internet and the world can hold until they're ready.

Physically, that server has a 5-bay HDD enclosure from StarDock installed, which holds most of my HDDs. Two more HDDs sit in the regular drive bays in the case.

Software-wise, the server also runs a VM of Windows Server 2012 R2 Essentials. This is where I store all of the documents from around the house and all of our pictures, which get copied over from the various machines. It also holds the backups of my PC (when I'm booted into Windows), my wife's Win10 laptop, my older son's Windows PC, and our living room HTPC. (Setting up the HTPC was a bitch, so I want a bare-metal restore available for that bitch if I ever need it.)

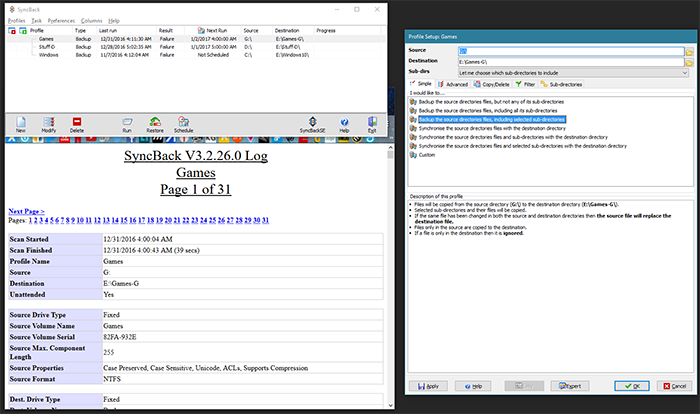

The VM also stores all of my movies. Some of them are older movies that are no longer available on DVD and can't be replaced, so I have a program on the VM that duplicates them onto a separate external hard drive whenever I add a new file, and that can copy the duplicates back if I ever have to replace a drive. I can't remember its name right now; it's been years since I've had to mess with it. It just works.

The VM server is also part of the same server pool as the host OS.

The VM is stored on its own separate hard drive, and the storage drives are simply passed through to the VM via VirtualBox. The same goes for the drive that holds the VM's OS.

I also use my Essentials server as my cloud storage server, because it can set up its own website for remote access to files, and even to computers on the network, as long as they're Windows-based.

I hope I made this understandable for everyone. If you have questions or advice, please do tell.