@gnif, in case you guys need some help, please let me know. I'm not a specialist on this topic, but I have some spare time right now.

Something I noticed in @gnif's config:

Enabling L3 cache emulation gives a rather noticeable performance improvement.

As QEMU `-cpu` properties: `l3-cache=on,host-cache-info=off`

Or in the libvirt XML:

```xml
<cpu>
  ...
  <cache level='3' mode='emulate'/>
  ...
</cpu>
```

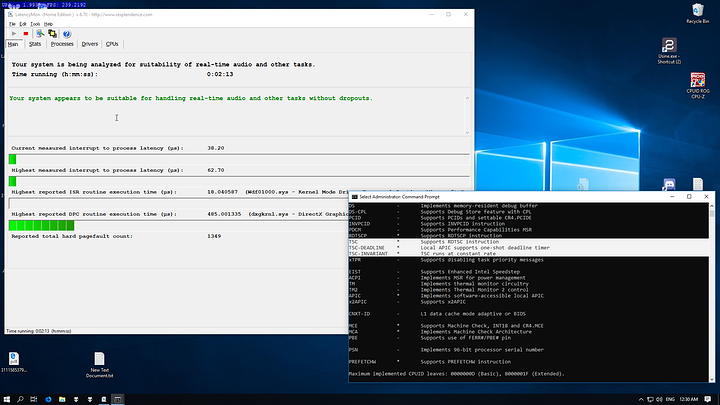

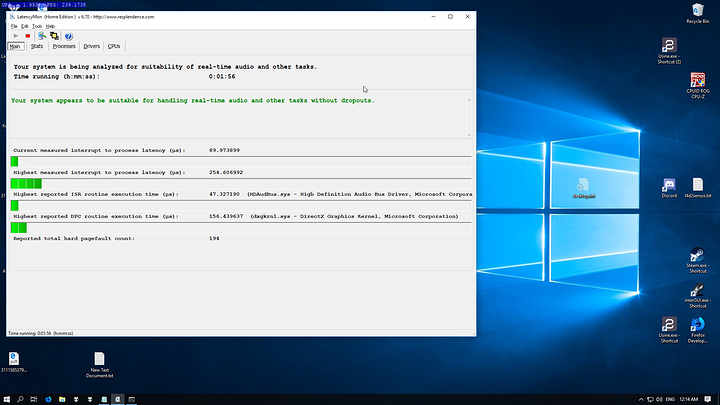

There are also some other settings that have greatly reduced the latency values I see in LatencyMon:

```xml
<hyperv>
  ...
  <vpindex state='on'/>
  <synic state='on'/>
  <stimer state='on'/>
  <reset state='on'/>
  <vendor_id state='on' value='KVM Hv'/>
  <frequencies state='on'/> <!-- This one only works without the ignore_msrs=1 kernel param -->
  ...
</hyperv>
```

What they do is documented here:

https://libvirt.org/formatdomain.html#elementsFeatures

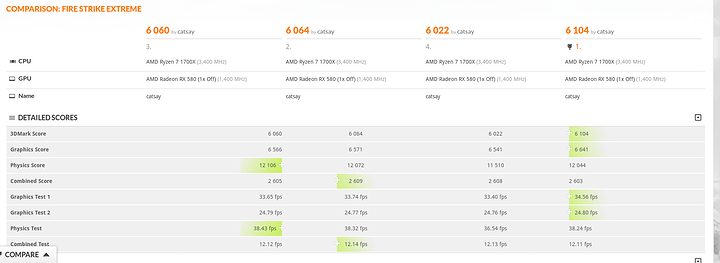

I've done some testing as well:

https://www.3dmark.com/compare/fs/16633964/fs/16633164/fs/16633028/fs/16619222

Test 1 (6104)

The guest reports the PCIe link speed as 8x@8GT/s (3.0), with no CPU optimizations yet. I have noticed that when I start my VM automatically early at boot, the link speed gets set to 8x@8GT/s (3.0) in the guest, possibly because the link hasn't been downscaled to 2.5GT/s yet.

I will need to test this with some setpci tricks to see what happens if I boot the VM with the PCIe link speed forced to 8GT/s on the host.

I suspect that once I test with the setpci link scaling workaround, the 100-point graphics score difference will be quite noticeable.
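For reference, here is a minimal sketch of what such a setpci tweak could look like, assuming the register layout from the PCI Express Base Specification: within the PCI Express capability (which `setpci` addresses as `CAP_EXP`), Link Control 2 at offset 0x30 holds Target Link Speed in bits 3:0 (1 = 2.5GT/s, 2 = 5GT/s, 3 = 8GT/s), and Link Control at offset 0x10 has Retrain Link at bit 5. On a downstream port such as a root port, Target Link Speed caps the speed the link trains to. The script only prints the commands; the helper names and the `00:03.1` root port address are hypothetical, so check your own topology with `lspci -t` and `man setpci` before running anything as root.

```python
# Sketch only: builds setpci command strings, it does not run them.
# Register layout assumed from the PCIe Base Specification; offsets are
# relative to the PCI Express capability, which setpci calls CAP_EXP.
LNKCTL_OFFSET = 0x10       # Link Control register
LNKCTL2_OFFSET = 0x30      # Link Control 2 register
RETRAIN_LINK = 1 << 5      # Link Control bit 5: Retrain Link
TARGET_SPEED_MASK = 0xF    # Link Control 2 bits 3:0: Target Link Speed

# Target Link Speed encodings for the rates discussed in this thread.
SPEED_CODES = {"2.5GT/s": 1, "5GT/s": 2, "8GT/s": 3}


def set_target_speed(lnkctl2: int, speed: str) -> int:
    """Return a Link Control 2 value with only the Target Link Speed replaced."""
    return (lnkctl2 & ~TARGET_SPEED_MASK & 0xFFFF) | SPEED_CODES[speed]


def setpci_commands(port: str, speed: str) -> list:
    """Hypothetical helper: commands to cap a port's link speed, then retrain.

    setpci's value:mask syntax writes only the bits set in the mask, so the
    rest of each register is left untouched.
    """
    code = SPEED_CODES[speed]
    return [
        f"setpci -s {port} CAP_EXP+{LNKCTL2_OFFSET:x}.w={code:x}:{TARGET_SPEED_MASK:x}",
        f"setpci -s {port} CAP_EXP+{LNKCTL_OFFSET:x}.w={RETRAIN_LINK:x}:{RETRAIN_LINK:x}",
    ]


if __name__ == "__main__":
    # Hypothetical root port above the GPU; find yours with `lspci -t`.
    for cmd in setpci_commands("00:03.1", "8GT/s"):
        print(cmd)
```

The `value:mask` form avoids a separate read; `set_target_speed` shows the equivalent read-modify-write if you prefer to read the register first. Nothing here stops the GPU's own power management from lowering the link again afterwards.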

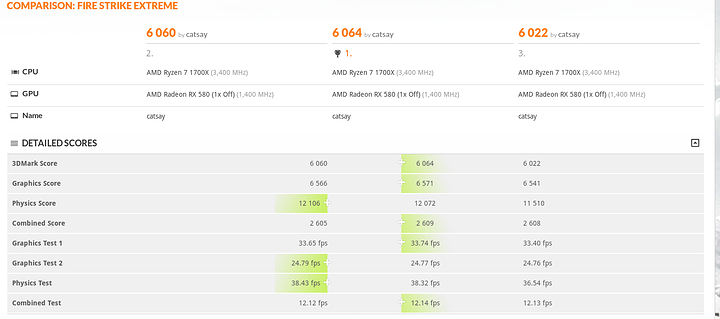

Test 2 & Test 3 (6064 & 6060)

With the L3 cache and Hyper-V adjustments

Test 4 (6022)

Without the L3 cache and Hyper-V adjustments

The link speed is reported as 2.5GT/s (1.1) in the guest, but link scaling takes place correctly on the host.

For the CPU-Z (1.86.0) tests, I don't have screenshots attached.

But with the L3 cache and Hyper-V tweaks, I saw the single-core score go from an inconsistent 398-410 to a consistent 420-430 on a Ryzen 7 1700X at 3.8GHz.

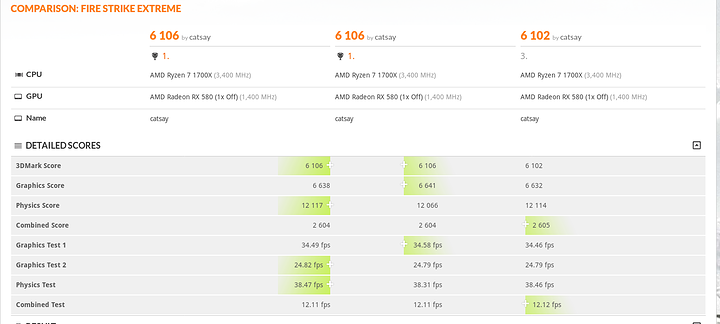

Suspicions confirmed: when the guest is aware that the link is running at 8x@8GT/s (3.0), the graphics score is consistently higher.

All three tests report higher GPU performance when the guest is aware that the link is running at the 3.0 spec's 8GT/s.

GPU in Guest @ 3.0

Of note: in this configuration, the link speed in the guest is permanently reported as 3.0 (stuck), while the host does the scaling anyway.

https://www.3dmark.com/compare/fs/16634804/fs/16634741/fs/16634681

GPU in Guest @ 1.1

https://www.3dmark.com/compare/fs/16633964/fs/16633164/fs/16633028

This all translates to a consistent 100 points more on the graphics score. In 3DMark this amounts to only about 1-2 FPS, but I have noticed that in Assassin's Creed Odyssey it can actually lead to much more consistent frame times, and sometimes 4-7 FPS more, if the guest is aware of the correct link speed.

The gain is probably larger on other GPU hardware.

EDIT: better phrasing.

For me it works too, with both a Windows and a Linux (Ubuntu) VM.

I remember having some problems at the start, though; I'll have to take another look.

It took me a while to see this thread. In the Looking Glass support thread I mentioned the problem I had, which is exactly this.

I was not using i440fx because I dual-boot my VM if/when I need SLI. I try to keep it on Q35, as its hardware is closer to the real machine and easier to emulate. This also had an impact in Looking Glass: it used too much GPU because of the wrong PCIe speed.

What I find interesting is that it only affects the GPU. When booting, all the other devices are always at the correct speed; only the GPU always drops to a lower link speed when the machine starts.

Really glad you looked into it and found the issue; you've got a sharp eye for troubleshooting.

Edit: Actually, after reading a little more, I was doing exactly what you've now started doing: plugging the card into the proper place. That's when I had the issue. For me it only works when I plug it into the root bus.

Thanks for all that testing. 3DMark is expected to show only a marginal increase, as you noted, because the bus speed mainly affects texture upload performance. Since 3DMark and many titles upload their textures during a loading screen, that upload isn't included in the benchmark, and so any differences are marginal.

However, games that continually stream textures into the graphics card during play, instead of using loading screens, will see great benefits in smoothness, as you noted. I would expect titles like Tomb Raider, Fallout, GTA and ArmA3 to benefit the most from this.

It should also help with loading times in general, and with the performance of low-memory cards that share some system RAM for texture storage.
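To put rough numbers on this, theoretical per-direction link bandwidth is the transfer rate times the lane count times the line-code efficiency. A minimal sketch, assuming 8b/10b encoding at 2.5 and 5GT/s and 128b/130b at 8GT/s (per the PCIe 1.x/2.x and 3.0 specs) and ignoring packet overhead, so real-world throughput will be lower:

```python
# Line-code efficiency per signalling rate in GT/s: 8b/10b for Gen1/Gen2,
# 128b/130b for Gen3. Packet (TLP/DLLP) overhead is ignored.
ENCODING = {2.5: 8 / 10, 5.0: 8 / 10, 8.0: 128 / 130}


def link_bandwidth_gbytes(rate_gts: float, lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe link in GB/s."""
    bits_per_second = rate_gts * 1e9 * lanes * ENCODING[rate_gts]
    return bits_per_second / 8 / 1e9


if __name__ == "__main__":
    for rate in (2.5, 8.0):
        print(f"x8 @ {rate}GT/s: {link_bandwidth_gbytes(rate, 8):.2f} GB/s")
```

For an x8 link this gives 2.00 GB/s at 2.5GT/s versus about 7.88 GB/s at 8GT/s, close to a 4x difference in how fast textures can be pushed to the card.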

Which QEMU version is suitable for testing the Nasty patch?

I am working with git master.

Thanks. I had to add `void pcie_negotiate_link(PCIDevice *dev);` to `pcie.h`.

The Nasty patch is working; the CUDA bandwidth test shows no change.

Has anyone tried CPU (die) pinning for a VM? The relevant AMD patch was merged upstream in August (QEMU 3.0.0; `-machine` must be `pc-q35-3.0`).

https://bugs.debian.org/cgi-bin/bugreport.cgi?bug=897054

https://www.reddit.com/r/VFIO/comments/9iaj7x/smt_not_supported_by_qemu_on_amd_ryzen_cpu/

Yes. 2700X and R9 390 here, on Debian with a custom RT kernel, as you need one to enable `nohz_full` and `rcu_nocbs`.

The vCPUs are pinned to the isolated cores with QEMU 3.0, along with `isolcpus`, `nohz_full` and `rcu_nocbs`.

Just make sure you've got `<feature policy='require' name='topoext'/>` in your libvirt config and you should be set.

A few things to note from my testing, which is why I made my account; I've been following this thread and feel I should point these out:

Turning the hypervisor CPUID bit off (`-hypervisor`) results in higher DPC latency. Based on the LatencyMon GUI, I'm seeing an average of 87-130 µs versus 20-30 µs for process interrupts.

Having `<timer name='hypervclock' present='yes'/>` with the hypervisor bit on (the guest being aware it's in a hypervisor) determines whether I get 100 fps or 125 fps in Cinebench (a poor benchmark, I know). All CPU benchmarks (CPU-Z, Cinebench) show an uplift in performance. Watching the PCIe link on the host with `lspci -s 0b:00.0 -nvv | grep LnkS` shows the graphics link scaling with load: it's 2.5GT/s while idle and scales up to 8GT/s while playing. The guest GPU is also connected to a root port, as discussed above. GPU-Z also shows the correct configuration of "PCIe x16 3.0 @ x8 3.0".
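If you'd rather log the host-side link state from a script than eyeball `lspci`, a minimal sketch that extracts speed and width from the `LnkSta:` line is below. It assumes lspci's usual `LnkSta: Speed 8GT/s, Width x8` wording (newer pciutils versions append notes such as `(downgraded)`, which the regex tolerates), and lspci usually needs root to show these capability registers. The function names are illustrative only.

```python
import re
import subprocess

# Signalling rate -> PCIe generation label, as used in this thread.
GENERATION = {"2.5": "1.1", "5": "2.0", "8": "3.0"}

# Matches "LnkSta:" only (not "LnkSta2:" or "LnkCap:").
LNKSTA_RE = re.compile(r"LnkSta:\s*Speed\s+([\d.]+)GT/s.*?Width\s+x(\d+)")


def parse_lnksta(lspci_output: str):
    """Return (speed in GT/s as text, lane count, generation) or None."""
    match = LNKSTA_RE.search(lspci_output)
    if match is None:
        return None
    speed, width = match.group(1), int(match.group(2))
    return speed, width, GENERATION.get(speed, "unknown")


def read_link_status(address: str):
    """Run lspci for one device, e.g. '0b:00.0', and parse its link status."""
    out = subprocess.run(["lspci", "-s", address, "-nvv"],
                         capture_output=True, text=True, check=True).stdout
    return parse_lnksta(out)


if __name__ == "__main__":
    sample = "LnkSta:\tSpeed 2.5GT/s (downgraded), Width x8 (downgraded)"
    print(parse_lnksta(sample))  # ('2.5', 8, '1.1')
```

Calling `read_link_status("0b:00.0")` in a loop while a game runs would show the idle/load scaling described above.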

My understanding is that specifying `<timer name='tsc' present='yes' mode='native'/>` and `<feature policy='require' name='invtsc'/>` causes that timer to be used only when the hypervisor bit is off.

Yet the TSC feature shows up in coreinfo.exe in both my hypervisor-off and hypervisor-on configs. Screenshots to follow.

With hypervisor on and Hyper-V timer on

Hypervisor off with `-hypervisor,migratable=no,+invtsc`

Configs: Hypervisor on


```xml
<domain type='kvm'>
  <name>win10-clone</name>
  <uuid>54a91832-1fae-4ae0-b14f-e10b169479d7</uuid>
  <memory unit='KiB'>10000000</memory>
  <currentMemory unit='KiB'>10000000</currentMemory>
  <memoryBacking>
    <hugepages/>
  </memoryBacking>
  <vcpu placement='static'>12</vcpu>
  <iothreads>2</iothreads>
  <cputune>
    <vcpupin vcpu='0' cpuset='8'/>
    <vcpupin vcpu='1' cpuset='9'/>
    <vcpupin vcpu='2' cpuset='10'/>
    <vcpupin vcpu='3' cpuset='11'/>
    <vcpupin vcpu='4' cpuset='12'/>
    <vcpupin vcpu='5' cpuset='13'/>
    <vcpupin vcpu='6' cpuset='14'/>
    <vcpupin vcpu='7' cpuset='15'/>
    <vcpupin vcpu='8' cpuset='4'/>
    <vcpupin vcpu='9' cpuset='5'/>
    <vcpupin vcpu='10' cpuset='6'/>
    <vcpupin vcpu='11' cpuset='7'/>
    <emulatorpin cpuset='0-3'/>
    <iothreadpin iothread='1' cpuset='0-1'/>
    <iothreadpin iothread='2' cpuset='2-3'/>
    <vcpusched vcpus='0' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='1' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='2' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='3' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='4' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='5' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='6' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='7' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='8' scheduler='fifo' priority='1'/>
  </cputune>
  <os>
    <type arch='x86_64' machine='pc-q35-3.0'>hvm</type>
    <loader readonly='yes' type='pflash'>/usr/share/OVMF/OVMF_CODE.fd</loader>
    <nvram>/var/lib/libvirt/qemu/nvram/win10-clone_VARS.fd</nvram>
    <bootmenu enable='yes'/>
  </os>
  <features>
    <acpi/>
    <apic/>
    <hyperv>
      <relaxed state='on'/>
      <vapic state='on'/>
      <spinlocks state='on' retries='8191'/>
      <vpindex state='on'/>
      <synic state='on'/>
      <stimer state='on'/>
      <reset state='on'/>
      <vendor_id state='off'/>
      <frequencies state='on'/>
    </hyperv>
    <kvm>
      <hidden state='off'/>
    </kvm>
    <vmport state='off'/>
  </features>
  <cpu mode='host-passthrough' check='full'>
    <topology sockets='1' cores='6' threads='2'/>
    <cache mode='passthrough'/>
    <feature policy='require' name='invtsc'/>
    <feature policy='require' name='svm'/>
    <feature policy='require' name='hypervisor'/>
    <feature policy='require' name='apic'/>
    <feature policy='require' name='topoext'/>
  </cpu>
  <clock offset='localtime'>
    <timer name='rtc' present='no' tickpolicy='catchup'/>
    <timer name='pit' present='no' tickpolicy='delay'/>
    <timer name='hpet' present='no'/>
    <timer name='kvmclock' present='no'/>
    <timer name='hypervclock' present='yes'/>
    <timer name='tsc' present='yes' mode='native'/>
  </clock>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>destroy</on_crash>
  <pm>
    <suspend-to-mem enabled='no'/>
    <suspend-to-disk enabled='no'/>
  </pm>
  <devices>
    <emulator>/usr/bin/kvm</emulator>
    <disk type='block' device='disk'>
      <driver name='qemu' type='raw' cache='none' io='native'/>
      <source dev='/dev/sda2'/>
      <target dev='vda' bus='scsi'/>
      <boot order='2'/>
      <address type='drive' controller='1' bus='0' target='0' unit='0'/>
    </disk>
    <disk type='block' device='disk'>
      <driver name='qemu' type='raw' cache='none' io='native'/>
      <source dev='/dev/sdb'/>
      <target dev='vdb' bus='scsi'/>
      <boot order='1'/>
      <address type='drive' controller='0' bus='0' target='0' unit='0'/>
    </disk>
    <controller type='pci' index='0' model='pcie-root'/>
    <controller type='pci' index='1' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='1' port='0x10'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0' multifunction='on'/>
    </controller>
    <controller type='pci' index='2' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='2' port='0xb'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x3'/>
    </controller>
    <controller type='pci' index='3' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='3' port='0x11'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x1'/>
    </controller>
    <controller type='pci' index='4' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='4' port='0x12'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x2'/>
    </controller>
    <controller type='pci' index='5' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='5' port='0x13'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x3'/>
    </controller>
    <controller type='pci' index='6' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='6' port='0x14'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x4'/>
    </controller>
    <controller type='pci' index='7' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='7' port='0x15'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x5'/>
    </controller>
    <controller type='pci' index='8' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='8' port='0x16'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x6' multifunction='on'/>
    </controller>
    <controller type='pci' index='9' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='9' port='0x17'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x7'/>
    </controller>
    <controller type='pci' index='10' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='10' port='0x8'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x0' multifunction='on'/>
    </controller>
    <controller type='pci' index='11' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='11' port='0x9'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>
    </controller>
    <controller type='pci' index='12' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='12' port='0xa'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x2'/>
    </controller>
    <controller type='pci' index='13' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='13' port='0xc'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x4'/>
    </controller>
    <controller type='pci' index='14' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='14' port='0xd'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x5'/>
    </controller>
    <controller type='scsi' index='0' model='virtio-scsi'>
      <driver queues='4' iothread='1'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x09' function='0x0'/>
    </controller>
    <controller type='scsi' index='1' model='virtio-scsi'>
      <driver queues='4' iothread='2'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x0a' function='0x0'/>
    </controller>
    <controller type='sata' index='0'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x1f' function='0x2'/>
    </controller>
    <controller type='usb' index='0' model='nec-xhci'>
      <address type='pci' domain='0x0000' bus='0x02' slot='0x00' function='0x0'/>
    </controller>
    <interface type='network'>
      <mac address='52:54:00:e7:8b:ca'/>
      <source network='default'/>
      <model type='virtio'/>
      <address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x0'/>
    </interface>
    <input type='mouse' bus='ps2'/>
    <input type='keyboard' bus='ps2'/>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <source>
        <address domain='0x0000' bus='0x0b' slot='0x00' function='0x0'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x08' slot='0x00' function='0x0' multifunction='on'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <source>
        <address domain='0x0000' bus='0x0b' slot='0x00' function='0x1'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x08' slot='0x00' function='0x1'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <source>
        <address domain='0x0000' bus='0x0c' slot='0x00' function='0x3'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x0b' slot='0x00' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <source>
        <address domain='0x0000' bus='0x0d' slot='0x00' function='0x3'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x05' slot='0x00' function='0x0'/>
    </hostdev>
    <memballoon model='none'/>
    <shmem name='looking-glass'>
      <model type='ivshmem-plain'/>
      <size unit='M'>32</size>
      <address type='pci' domain='0x0000' bus='0x0e' slot='0x00' function='0x0'/>
    </shmem>
  </devices>
</domain>
```

Config: Hypervisor off


```xml
<domain type='kvm' xmlns:qemu='http://libvirt.org/schemas/domain/qemu/1.0'>
  <name>win10-clone</name>
  <uuid>54a91832-1fae-4ae0-b14f-e10b169479d7</uuid>
  <memory unit='KiB'>10000000</memory>
  <currentMemory unit='KiB'>10000000</currentMemory>
  <memoryBacking>
    <hugepages/>
  </memoryBacking>
  <vcpu placement='static'>12</vcpu>
  <iothreads>2</iothreads>
  <cputune>
    <vcpupin vcpu='0' cpuset='8'/>
    <vcpupin vcpu='1' cpuset='9'/>
    <vcpupin vcpu='2' cpuset='10'/>
    <vcpupin vcpu='3' cpuset='11'/>
    <vcpupin vcpu='4' cpuset='12'/>
    <vcpupin vcpu='5' cpuset='13'/>
    <vcpupin vcpu='6' cpuset='14'/>
    <vcpupin vcpu='7' cpuset='15'/>
    <vcpupin vcpu='8' cpuset='4'/>
    <vcpupin vcpu='9' cpuset='5'/>
    <vcpupin vcpu='10' cpuset='6'/>
    <vcpupin vcpu='11' cpuset='7'/>
    <emulatorpin cpuset='0-3'/>
    <iothreadpin iothread='1' cpuset='0-1'/>
    <iothreadpin iothread='2' cpuset='2-3'/>
    <vcpusched vcpus='0' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='1' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='2' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='3' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='4' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='5' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='6' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='7' scheduler='fifo' priority='1'/>
    <vcpusched vcpus='8' scheduler='fifo' priority='1'/>
  </cputune>
  <os>
    <type arch='x86_64' machine='pc-q35-3.0'>hvm</type>
    <loader readonly='yes' type='pflash'>/usr/share/OVMF/OVMF_CODE.fd</loader>
    <nvram>/var/lib/libvirt/qemu/nvram/win10-clone_VARS.fd</nvram>
    <bootmenu enable='yes'/>
  </os>
  <features>
    <acpi/>
    <apic/>
    <hyperv>
      <relaxed state='on'/>
      <vapic state='on'/>
      <spinlocks state='on' retries='8191'/>
      <vpindex state='on'/>
      <synic state='on'/>
      <stimer state='on'/>
      <reset state='on'/>
      <vendor_id state='off'/>
      <frequencies state='on'/>
    </hyperv>
    <kvm>
      <hidden state='off'/>
    </kvm>
    <vmport state='off'/>
  </features>
  <cpu mode='host-passthrough' check='full'>
    <topology sockets='1' cores='6' threads='2'/>
    <cache mode='passthrough'/>
    <feature policy='require' name='invtsc'/>
    <feature policy='require' name='svm'/>
    <feature policy='disable' name='hypervisor'/>
    <feature policy='require' name='apic'/>
    <feature policy='require' name='topoext'/>
  </cpu>
  <clock offset='localtime'>
    <timer name='rtc' present='no' tickpolicy='catchup'/>
    <timer name='pit' present='no' tickpolicy='delay'/>
    <timer name='hpet' present='no'/>
    <timer name='kvmclock' present='no'/>
    <timer name='hypervclock' present='no'/>
    <timer name='tsc' present='yes' mode='native'/>
  </clock>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>destroy</on_crash>
  <pm>
    <suspend-to-mem enabled='no'/>
    <suspend-to-disk enabled='no'/>
  </pm>
  <devices>
    <emulator>/usr/bin/kvm</emulator>
    <disk type='block' device='disk'>
      <driver name='qemu' type='raw' cache='none' io='native'/>
      <source dev='/dev/sda2'/>
      <target dev='vda' bus='scsi'/>
      <boot order='2'/>
      <address type='drive' controller='1' bus='0' target='0' unit='0'/>
    </disk>
    <disk type='block' device='disk'>
      <driver name='qemu' type='raw' cache='none' io='native'/>
      <source dev='/dev/sdb'/>
      <target dev='vdb' bus='scsi'/>
      <boot order='1'/>
      <address type='drive' controller='0' bus='0' target='0' unit='0'/>
    </disk>
    <controller type='pci' index='0' model='pcie-root'/>
    <controller type='pci' index='1' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='1' port='0x10'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0' multifunction='on'/>
    </controller>
    <controller type='pci' index='2' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='2' port='0xb'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x3'/>
    </controller>
    <controller type='pci' index='3' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='3' port='0x11'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x1'/>
    </controller>
    <controller type='pci' index='4' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='4' port='0x12'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x2'/>
    </controller>
    <controller type='pci' index='5' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='5' port='0x13'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x3'/>
    </controller>
    <controller type='pci' index='6' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='6' port='0x14'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x4'/>
    </controller>
    <controller type='pci' index='7' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='7' port='0x15'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x5'/>
    </controller>
    <controller type='pci' index='8' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='8' port='0x16'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x6' multifunction='on'/>
    </controller>
    <controller type='pci' index='9' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='9' port='0x17'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x7'/>
    </controller>
    <controller type='pci' index='10' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='10' port='0x8'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x0' multifunction='on'/>
    </controller>
    <controller type='pci' index='11' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='11' port='0x9'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>
    </controller>
    <controller type='pci' index='12' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='12' port='0xa'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x2'/>
    </controller>
    <controller type='pci' index='13' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='13' port='0xc'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x4'/>
    </controller>
    <controller type='pci' index='14' model='pcie-root-port'>
      <model name='pcie-root-port'/>
      <target chassis='14' port='0xd'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x5'/>
    </controller>
    <controller type='scsi' index='0' model='virtio-scsi'>
      <driver queues='4' iothread='1'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x09' function='0x0'/>
    </controller>
    <controller type='scsi' index='1' model='virtio-scsi'>
      <driver queues='4' iothread='2'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x0a' function='0x0'/>
    </controller>
    <controller type='sata' index='0'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x1f' function='0x2'/>
    </controller>
    <controller type='usb' index='0' model='nec-xhci'>
      <address type='pci' domain='0x0000' bus='0x02' slot='0x00' function='0x0'/>
    </controller>
    <interface type='network'>
      <mac address='52:54:00:e7:8b:ca'/>
      <source network='default'/>
      <model type='virtio'/>
      <address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x0'/>
    </interface>
    <input type='mouse' bus='ps2'/>
    <input type='keyboard' bus='ps2'/>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <source>
        <address domain='0x0000' bus='0x0b' slot='0x00' function='0x0'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x08' slot='0x00' function='0x0' multifunction='on'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <source>
        <address domain='0x0000' bus='0x0b' slot='0x00' function='0x1'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x08' slot='0x00' function='0x1'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <source>
        <address domain='0x0000' bus='0x0c' slot='0x00' function='0x3'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x0b' slot='0x00' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <source>
        <address domain='0x0000' bus='0x0d' slot='0x00' function='0x3'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x05' slot='0x00' function='0x0'/>
    </hostdev>
    <memballoon model='none'/>
    <shmem name='looking-glass'>
      <model type='ivshmem-plain'/>
      <size unit='M'>32</size>
      <address type='pci' domain='0x0000' bus='0x0e' slot='0x00' function='0x0'/>
    </shmem>
  </devices>
  <qemu:commandline>
    <qemu:arg value='-cpu'/>
    <qemu:arg value='host,-hypervisor,migratable=no,+invtsc'/>
  </qemu:commandline>
</domain>
```

tl;dr

Does Windows actually run better when it's aware it's in a hypervisor, or is the Hyper-V timer making benchmarks appear better because it's an inaccurate timer? For me, I gain an awful lot of fps, close to bare metal, and I 'feel' that it's better, but that's subjective. Both configs have `+invtsc` specified, and it shows up in coreinfo.exe. I'd like to hear from other AMD users on this, as Nvidia seems to require the hypervisor bit to be off for its graphics cards to work.

I'm on Nvidia, so I can't speak for everything in your configs that I hadn't been using. I did notice a slight performance improvement from adding things I saw in your configs, but I have to ask: why are you only using vcpusched on 9 of your 12 passed-through cores?

I have to know: how in the world are you using this feature, given the current need for `ignore_msrs=1`?

When I tested it on every pinned core, it resulted in massive, very noticeable stutters. When I passed through only one CCX (4c/8t), it was fine.

I figured out that, for whatever reason, you're fine applying it to the first set of CCX cores that the VM sees, but the second set doesn't play nicely with the fifo scheduler. It results in slightly better CPU-Z and Cinebench scores.

I was testing the first core of the second CCX to see if you can get away with just that one, but forgot to take it out of the configs. I would recommend keeping it off for the second set if you have a Ryzen.

I have some options for you to try, then. I have applied vcpusched to all my cores with no problems. I also pass through 6 cores of my 2700X, but I keep the first core of each CCX on the host. I also do isolation; I don't know if you're doing that, but I've heard that fifo can have issues if the host does anything on those cores while they are under load. Another interesting difference, which I plan on testing more thoroughly tonight: on a whim and a rumor, I've recently been using 2 3 2 (sockets, cores, threads) for my topology instead of 1 6 2, the idea being that this hints the CCX layout to the VM so that it can work with it more appropriately. I don't know if it actually helps much, but I haven't run into any stability issues.

You only need this flag with the latest versions of Windows; Microsoft changed something that causes this problem.

Has anyone done a more in-depth performance test of 2MB hugepages compared to 1GB for general gaming use?

Personally, I've just switched to 2MB instead of 1GB, with no noticeable performance degradation.

Using 1GB pages, I was only able to allocate 12GB out of my 16GB, as my system has a few reserved memory regions.

By using 2MB pages, I can allocate 15GB out of my 16GB, giving VFIO VMs more usable memory.

My quick test with Heaven Benchmark showed a 2% drop in FPS going from 1GB to 2MB pages. But my theory is that, overall, I benefit more from having 15GB of RAM in my VM compared to 12GB.
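Part of the trade-off is granularity: guest RAM has to be backed by a whole number of pages, so larger pages round up more. A minimal sketch, assuming libvirt's `<memory unit='KiB'>` value as input and the standard x86-64 hugepage sizes of 2 MiB and 1 GiB; it does not model the host's reserved regions mentioned above, and the helper names are illustrative:

```python
# Standard x86-64 hugepage sizes, expressed in KiB like libvirt's <memory>.
PAGE_SIZES_KIB = {"2M": 2 * 1024, "1G": 1024 * 1024}


def hugepages_needed(memory_kib: int, page: str) -> int:
    """Whole pages required to back memory_kib of guest RAM (rounded up)."""
    size = PAGE_SIZES_KIB[page]
    return (memory_kib + size - 1) // size


def reserved_kib(memory_kib: int, page: str) -> int:
    """Memory actually reserved once rounded up to whole pages."""
    return hugepages_needed(memory_kib, page) * PAGE_SIZES_KIB[page]


if __name__ == "__main__":
    guest = 10_000_000  # KiB, as in the libvirt configs in this thread
    for page in PAGE_SIZES_KIB:
        pages = hugepages_needed(guest, page)
        waste = reserved_kib(guest, page) - guest
        print(f"{page}: {pages} pages, {waste} KiB lost to rounding")
```

For the 10000000 KiB guests in the configs earlier in this thread, this works out to 4883 2 MiB pages (384 KiB of rounding) versus 10 1 GiB pages (485760 KiB, about 474 MiB, of rounding). With the default 2 MiB page size, the count is what would go into the `vm.nr_hugepages` sysctl.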

I tried your configuration, with the first core of each CCX left to Linux and the rest given to the VM with `fifo` specified. I also changed the boot parameters to isolate the cores:

`isolcpus=2-7,10-15 nohz_full=2-7,10-15 rcu_nocbs=2-7,10-15`
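Keeping the three lists identical by hand is error-prone. A small sketch that compresses a CPU set into the range syntax these parameters accept, assuming the layout from this post (16 host CPUs, with 0, 1, 8 and 9 left to the host); the helper names are made up for illustration:

```python
def to_cpu_ranges(cpus) -> str:
    """Compress CPU numbers into kernel cpu-list syntax, e.g. '2-7,10-15'."""
    ordered = sorted(set(cpus))
    if not ordered:
        return ""
    parts = []
    start = prev = ordered[0]
    for cpu in ordered[1:]:
        if cpu == prev + 1:  # still inside a contiguous run
            prev = cpu
            continue
        parts.append(f"{start}-{prev}" if start != prev else str(start))
        start = prev = cpu
    parts.append(f"{start}-{prev}" if start != prev else str(start))
    return ",".join(parts)


def isolation_cmdline(guest_cpus) -> str:
    """Build matching isolcpus/nohz_full/rcu_nocbs kernel parameters."""
    cpu_list = to_cpu_ranges(guest_cpus)
    return " ".join(f"{p}={cpu_list}" for p in ("isolcpus", "nohz_full", "rcu_nocbs"))


if __name__ == "__main__":
    host_only = {0, 1, 8, 9}  # CPUs kept for the host in this example
    guest = [c for c in range(16) if c not in host_only]
    print(isolation_cmdline(guest))
```

Running it prints the same parameter line as above, so changing `host_only` is enough to regenerate all three lists consistently.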

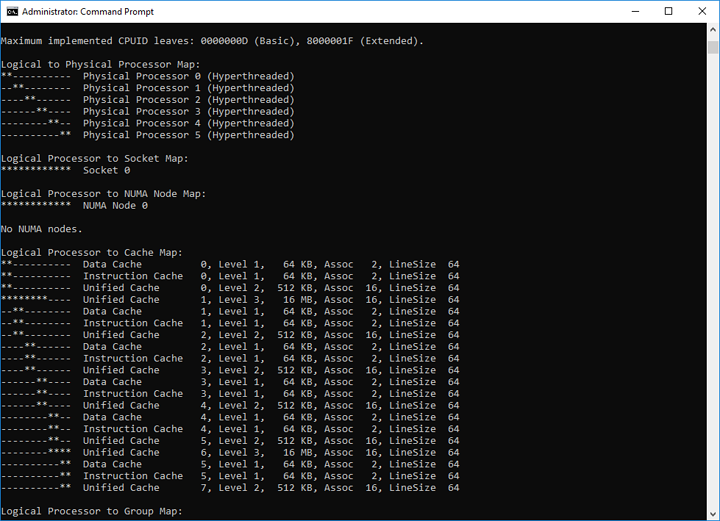

No changes. Stutter still happens under load. This configuration also causes problems with L3 cache as Windows doesn’t adjust what it sees. Screenshot to follow.

Windows hasn’t adjust which cores has a shared L3 cache, only that x amount of cores share the same. This means you’re forced to use the 2nd CCX as your first set of cores, with others to follow as you can’t isolate the core0 in linux. I’ve tried to correct the wrongly reported L2 and L3 cache, but this is a bug. If you don’t have feature policy='require' name='topoext'in your libvirt config, then Ryzen correctly reports the right amounts of L2/L3 cache.

Unfortunately you do want this as it enables SMT. What’s important is that Windows knows which core is paired with who and what shares what for optimal performance. Specifying topoext also seems to make topology changes irrelevant as they took no effect within the VM.

Something to note, vcpusched can safely be used on all cores if you aren’t using hyperthreading within the VM. There’s no stutters and things work great. It was a configuration I used until I learned how to compile qemu3.0 for SMT support. These stutter problems with fifo has arisen since I enabled SMT.

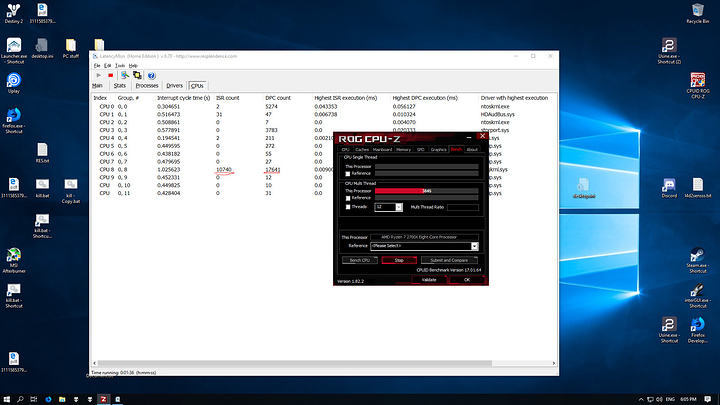

I also tested few other things and managed to pin down the source of the stutters. It was core 8. The first core that Windows sees in the ‘2nd’ CCX that shares the ‘2nd’ set of L3 cache. Windows seems to have pinned the driver for my mouse to that core, and having two sets of ‘fifo’ to the same physical core 'causes the stutters while under load. Screenshot to follow

ISR and DPC count would only rise if I moved my mouse. Otherwise the counts would remain the same. Removing vcpusched fifo on the hyperthreaded core has fixed the issue. This does beg the question whether these stutters will happen on the other cores under high load, but Windows seems to place most of the interrupts on core0 and the others on the first core of the 2nd L3 cache. Everything seems to run great so I’ll leave it at that for now.
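Since the fix came down to which vCPUs get `fifo`, generating `<cputune>` instead of editing it by hand makes such experiments easier to repeat. A minimal sketch using Python's standard `xml.etree.ElementTree`: the pinning map reproduces the configs above (vCPUs 0-7 on host CPUs 8-15, vCPUs 8-11 on host CPUs 4-7), `fifo_vcpus=range(8)` follows the earlier suggestion to keep fifo off the second set, and emulator/iothread pinning is omitted. `build_cputune` is a hypothetical helper, not part of libvirt.

```python
import xml.etree.ElementTree as ET


def build_cputune(pinning: dict, fifo_vcpus, priority: int = 1) -> ET.Element:
    """Build a libvirt <cputune> element with vcpupin and vcpusched entries."""
    cputune = ET.Element("cputune")
    for vcpu, host_cpu in sorted(pinning.items()):
        ET.SubElement(cputune, "vcpupin", vcpu=str(vcpu), cpuset=str(host_cpu))
    # Only the listed vCPUs get the realtime fifo scheduler.
    for vcpu in sorted(fifo_vcpus):
        ET.SubElement(cputune, "vcpusched", vcpus=str(vcpu),
                      scheduler="fifo", priority=str(priority))
    return cputune


if __name__ == "__main__":
    # Pinning from the configs in this thread: vCPU 0-7 -> 8-15, 8-11 -> 4-7.
    pinning = {v: 8 + v for v in range(8)}
    pinning.update({8 + i: 4 + i for i in range(4)})
    tune = build_cputune(pinning, fifo_vcpus=range(8))
    ET.indent(tune)  # pretty-printing, Python 3.9+
    print(ET.tostring(tune, encoding="unicode"))
```

The output uses double quotes rather than the single quotes libvirt emits, which libvirt accepts either way.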

This also means that, for other people's configurations (and I only speak for Ryzen), you have to make sure Windows has correctly identified the first core of the second L3 cache. While testing urmamasllama's suggested config, LatencyMon reported that the majority of interrupts were still split between core 0 and core 8. So do make sure, or performance seems to suffer. I also tested a 4c/8t configuration just to be sure, and sure enough, Windows just dumped it all on core 0.

Overall, I'm satisfied with the results: the process interrupt time has dropped to 9 µs at best, with an average low of 13 µs under no load. Under the heaviest load it usually moves between 13 µs and 32 µs, so I can't complain.

`<feature policy='require' name='topoext'/>` simply checks that the feature is supported by the CPU or can be emulated. As it is supported by all Zen CPUs, it is passed through with host-passthrough.
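A quick way to confirm the host CPU advertises the feature before requiring it is to look for `topoext` in the `flags` line of `/proc/cpuinfo`. A minimal sketch, assuming a Linux host and the usual `flags : ...` format; it only reports what the host CPU supports, not what libvirt ends up exposing to the guest:

```python
import os


def cpu_flags(cpuinfo_text: str) -> set:
    """Return the flags listed on the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


if __name__ == "__main__":
    if os.path.exists("/proc/cpuinfo"):  # Linux only
        with open("/proc/cpuinfo") as f:
            flags = cpu_flags(f.read())
        for name in ("svm", "topoext"):
            print(name, "present" if name in flags else "missing")
```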

Anyway, the problem with L3 and Zen is that there is no way to set CCX sizes in QEMU, or at least I don't know of one.

Also, for Zen, Windows expects at least 4 core IDs to share an L3. You can have more, but not fewer; if you set fewer, Windows will ignore it and use 4 anyway. Real hardware actually skips core IDs for a CCX that has fewer than 4 cores in it. QEMU does not.
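To check where that boundary falls for a given `<topology>`, the mapping can be replicated. A minimal sketch, assuming QEMU's default x86 ordering (threads vary fastest, then cores, then sockets) and, per the post above, that the guest groups every 4 core IDs under one L3. With `sockets='1' cores='6' threads='2'` it puts the start of the second L3 group at vCPU 8, which matches the stutter core found earlier; the names are illustrative only.

```python
from typing import NamedTuple


class VcpuTopo(NamedTuple):
    socket: int
    core: int
    thread: int
    l3_group: int


def vcpu_topology(index: int, cores: int, threads: int,
                  cores_per_l3: int = 4) -> VcpuTopo:
    """Map a vCPU index to socket/core/thread, with threads varying fastest."""
    thread = index % threads
    core = (index // threads) % cores
    socket = index // (threads * cores)
    # Assumption: every `cores_per_l3` consecutive core IDs share one L3.
    return VcpuTopo(socket, core, thread, core // cores_per_l3)


def first_vcpu_per_l3(vcpus: int, cores: int, threads: int,
                      cores_per_l3: int = 4) -> list:
    """Lowest vCPU index in each (socket, L3 group) under the assumptions above."""
    firsts = {}
    for i in range(vcpus):
        t = vcpu_topology(i, cores, threads, cores_per_l3)
        firsts.setdefault((t.socket, t.l3_group), i)
    return sorted(firsts.values())


if __name__ == "__main__":
    print(first_vcpu_per_l3(12, cores=6, threads=2))  # [0, 8]
```

Under the same assumptions, the 2 3 2 topology mentioned earlier would put the second group at vCPU 6, though whether Windows honours that with topoext enabled is exactly what the reports above question.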

As for the latency and the timing source, it's hard to say whether the latency is actually better or the timer in use simply distorts the measurement.

Hey, do you mind sharing how to force the 8x link speed for the GPU on the host? I would like to test it myself with a 1070 Ti.