Hi All,

Last month I spent considerable time poring over the amdgpu sources in an effort to make it possible to reset the VEGA10 series of cards. While doing this I noticed that the amdgpu driver checks whether the GPU is connected via PCIe Gen3 and, if so, programs some registers in the GPU. This got me wondering whether the NVidia driver does the same. Since Windows doesn't see the passthrough card as anything other than standard PCI, not even PCIe, is it suffering for it?

After a few days of hacking on QEMU and learning more about how PCIe works, I have found both why Windows sees the device as a legacy PCI device and how to fix it.

Firstly, i440fx doesn't support PCIe at all. The card is presented to the guest as a PCI device because there is no other option. This is kinda obvious, but if you plan to try this out, be aware that you will need to switch to the q35 platform.

Secondly, q35 does support PCIe, but most if not all of us are simply connecting the device directly to the root bus. When this is done, QEMU changes the emulated PCI configuration space so that the device reports its type as a Root Complex Integrated Endpoint. In the physical world this means the device would be physically integrated into the PCIe controller (the root complex), not sitting on a PCIe link. Because of this it is invalid to provide any link speed configuration, and as such QEMU omits it.
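For anyone who wants to check what type a device is reporting, here is a rough sketch of how the type field can be decoded. It assumes you already have the 16-bit PCI Express Capabilities register value for the device; bits 7:4 of that register hold the Device/Port Type. The function name `pcie_port_type` is made up for this example, and the register values used in the usage note are illustrative, not readings from my card.

```shell
#!/usr/bin/env bash
# Hypothetical helper: decode the Device/Port Type field (bits 7:4) of the
# 16-bit PCI Express Capabilities register.
# On a Linux guest with pciutils the register could probably be read with
# something like `setpci -s <bdf> CAP_EXP+2.w` (assumption, untested here);
# setpci prints bare hex, so prefix the value with 0x before passing it in.
pcie_port_type() {
  local reg=$(( $1 ))                 # accepts hex (0x0092) or decimal
  local kind=$(( (reg >> 4) & 0xF ))  # isolate bits 7:4
  case "$kind" in
    0)  echo "PCI Express Endpoint" ;;
    1)  echo "Legacy PCI Express Endpoint" ;;
    4)  echo "Root Port" ;;
    5)  echo "Switch Upstream Port" ;;
    6)  echo "Switch Downstream Port" ;;
    7)  echo "PCI Express to PCI/PCI-X Bridge" ;;
    8)  echo "PCI/PCI-X to PCI Express Bridge" ;;
    9)  echo "Root Complex Integrated Endpoint" ;;
    10) echo "Root Complex Event Collector" ;;
    *)  echo "Reserved ($kind)" ;;
  esac
}
```

With the illustrative value `0x0092`, `pcie_port_type 0x0092` prints `Root Complex Integrated Endpoint`, while `0x0002` decodes to `PCI Express Endpoint`, which is what you would hope to see for a GPU behind a root port.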

The fix is simple: add a PCI Express root port device to the configuration and plug the video card into it instead of the root bus. Here is how I accomplished this.

-device ioh3420,id=root_port1,chassis=0,slot=0,bus=pcie.0 \
-device vfio-pci,host=$NVIDIA.0,id=hostdev1,bus=root_port1,addr=0x00,multifunction=on,romfile=/opt/VM/Windows/1080Ti.rom \
-device vfio-pci,host=$NVIDIA.1,id=hostdev2,bus=root_port1,addr=0x00.1 \
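If you script your VM launch, the same three arguments can be generated from the host PCI address. This is only a sketch: the function name `gpu_root_port_args` and its parameters are invented for this example, and it hard-codes the same ids, chassis and slot values used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the three -device arguments that put a GPU and
# its audio function behind an ioh3420 root port, one argument per line.
#   $1  host PCI address without the function number, e.g. 01:00
#   $2  optional path to a VBIOS ROM file
gpu_root_port_args() {
  local host="$1"
  local romfile="${2:-}"
  local port="root_port1"
  local vga="-device vfio-pci,host=${host}.0,id=hostdev1,bus=${port},addr=0x00,multifunction=on"
  if [ -n "$romfile" ]; then
    vga="${vga},romfile=${romfile}"   # only add romfile= when a path was given
  fi
  printf '%s\n' \
    "-device ioh3420,id=${port},chassis=0,slot=0,bus=pcie.0" \
    "$vga" \
    "-device vfio-pci,host=${host}.1,id=hostdev2,bus=${port},addr=0x00.1"
}
```

Something like `gpu_root_port_args "$NVIDIA" /opt/VM/Windows/1080Ti.rom` should print the same lines as my configuration, minus the trailing backslashes.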

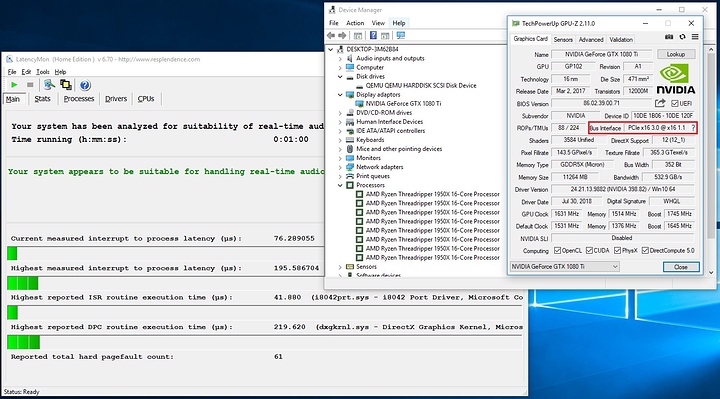

The difference this made is enormous, and I am now getting bare-metal performance out of my GPU in Windows. GPU-Z now reports that the GPU is on a PCIe link, as does the NVidia system information.

I have also noticed that LatencyMon reports much lower latency on average than before. Where I was previously seeing up to 2000us, I now never see above 200us.