So I had a thought. From what I've read, both Windows and Linux are limited to 8 GPUs per system. And IOMMU passthrough works by handing a whole group of devices to a virtual machine.

What if you combined the two to increase the maximum number of GPUs on a single system (i.e. motherboard, CPU, and RAM)?

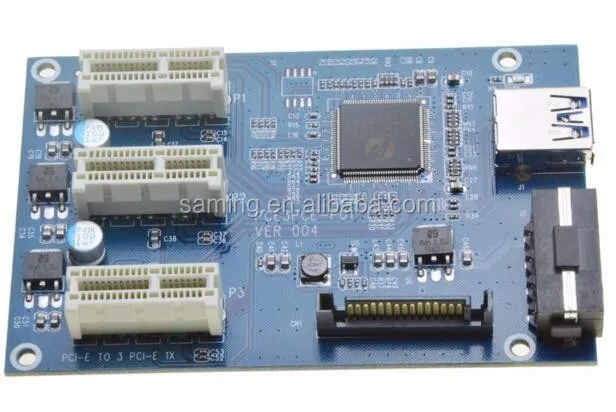

There are PCI-e splitters that essentially turn one slot into three. IOMMU grouping, when it works correctly, usually splits devices into groups based on the PCI-e slot on the motherboard.
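To check how the devices actually get grouped on a given board, something like the following could list the groups on the Linux host. This is only a sketch: it assumes the kernel exposes groups under `/sys/kernel/iommu_groups` (which needs the IOMMU enabled in firmware and on the kernel command line), and `iommu_groups` is just a name I made up for the helper.

```python
from pathlib import Path


def iommu_groups(root="/sys/kernel/iommu_groups"):
    """Map each IOMMU group number to the PCI addresses it contains."""
    groups = {}
    base = Path(root)
    if not base.is_dir():
        # IOMMU disabled, or not a Linux host: nothing to report.
        return groups
    for group_dir in base.iterdir():
        devices_dir = group_dir / "devices"
        if group_dir.name.isdigit() and devices_dir.is_dir():
            # Each entry under devices/ is named after a PCI address.
            groups[int(group_dir.name)] = sorted(p.name for p in devices_dir.iterdir())
    return groups


if __name__ == "__main__":
    for number, devices in sorted(iommu_groups().items()):
        print(f"group {number}: {', '.join(devices)}")
```

If all three GPUs behind one splitter land in the same group, they would have to go to the same VM together, which is fine for this plan.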

So if I had a motherboard with three PCI-e slots, I could attach splitters to each and have up to 9 GPUs on a single board like that, but then the OS limitation comes in.

Scaling up to a board with more PCI-e slots, you could then have more GPUs as well. So, what if instead of just running them all on one OS, you passed them through to a VM to be handled there?

I figure if I had a motherboard with 8 PCI-e slots and 32 PCI-e lanes in total (from the CPU alone, or CPU plus chipset), you could theoretically run up to 24 GPUs on a single board: 8 x 3 = 24 GPUs, and assuming each slot uses at most 4 lanes, that's 8 x 4 = 32 lanes.

Then just pass through 2 of the motherboard's PCI-e slots to each VM (6 GPUs with the splitters), and you could have four VMs, each with 6 GPUs.
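The arithmetic so far, written out as a quick sanity check. The function and parameter names are made up for illustration; the defaults are the numbers from this post (8 slots, 3-way splitters, 4 lanes per slot, 2 slots per VM).

```python
def plan(slots=8, gpus_per_splitter=3, lanes_per_slot=4, slots_per_vm=2):
    """Work out GPU, lane, and VM counts for a splitter-based build."""
    return {
        "total_gpus": slots * gpus_per_splitter,        # one splitter per slot
        "lanes_needed": slots * lanes_per_slot,         # lanes are counted per slot
        "vms": slots // slots_per_vm,                   # whole VMs only
        "gpus_per_vm": slots_per_vm * gpus_per_splitter,
    }


if __name__ == "__main__":
    print(plan())         # the 8-slot board
    print(plan(slots=3))  # the 3-slot board mentioned earlier
```

With the defaults this gives 24 GPUs, 32 lanes, and four VMs with 6 GPUs each, so every VM stays under the 8-GPU limit.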

Driver instability with mixed GPUs (not AMD vs Nvidia, but different AMD generations) is one annoyance with mining. Configuring the Linux host to monitor the mining on each VM and automatically restart the VM when an issue occurs seems like it'd save a lot of time. Plus, snapshots for when updates break things.
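A rough idea of what the host-side watchdog might look like. Lots of assumptions here: that the VMs are libvirt domains controlled with `virsh`, that each miner reports in somehow so the host knows when it was last heard from (the `last_report` dict is hypothetical), and that a VM silent for 5 minutes counts as broken. The VM names are placeholders.

```python
import subprocess


def stale_vms(last_report, now, timeout_s=300):
    """Return names of VMs whose miner has not reported within timeout_s."""
    return sorted(name for name, seen in last_report.items() if now - seen > timeout_s)


def restart_vm(name, run=subprocess.run):
    """Hard-stop and boot a libvirt domain (assumes virsh is installed)."""
    # 'virsh destroy' is a forced power-off; it can fail if the VM is already off.
    run(["virsh", "destroy", name], check=False)
    run(["virsh", "start", name], check=True)


if __name__ == "__main__":
    # Hypothetical timestamps (seconds) of the last report from each VM.
    last_report = {"miner1": 0, "miner2": 900}
    for vm in stale_vms(last_report, now=1000):
        print(f"would restart {vm}")
```

The host would run the check on a timer and call `restart_vm` for each stale name. How the miners actually report in is left open.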

Overall, if this could work, that'd be very interesting. Reasons I think it might not work:

- Linux still has to be able to pass the GPU device to the VM, but if it can't see more than 8 GPUs, how would the other 16 be handled?

- RAM constraints (though I figured at 32GB of RAM, with 5 OSes (the Linux host plus four Windows VMs), you could give each Windows VM 6GB and the Linux host 8GB or so).
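On the RAM point, a quick check of the budget using the numbers above (the function name is just for illustration):

```python
def ram_left_gb(total_gb=32, host_gb=8, vms=4, per_vm_gb=6):
    """RAM left over after the host and every VM get their share."""
    return total_gb - host_gb - vms * per_vm_gb


if __name__ == "__main__":
    print(ram_left_gb())  # 32 - 8 - 4*6
```

With these numbers the split uses exactly 32GB, so there's no headroom left over.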