I previously asked how to use my various types of SSDs with ZFS/Proxmox in this thread: New Proxmox Install with Filesystem Set to ZFS RAID 1 on 2xNVME PCIe SSDs: Optimization Questions.

Thanks for all the help over there. I’m now at the point of actually setting things up, and I’m having trouble figuring out which ashift values to use. I know that ashift is chosen when the pool (strictly, each vdev) is created and can’t be changed afterwards, so getting it wrong is permanent for those VDEVs. I also know that some (all?) SSDs will lie about their sector size for legacy OS compatibility.

I’ve got 3 types of SSDs in play, and they’re all giving me different output formats in smartctl, with different reported sector sizes, so I would appreciate a sanity check and some advice on what to do. I don’t want to have to redo the install because I got this wrong.

Question: What sector size should I set for each of the following drives inside Proxmox (ZFS settings for pool)? Each pool is made of one type of drive. smartctl output follows.

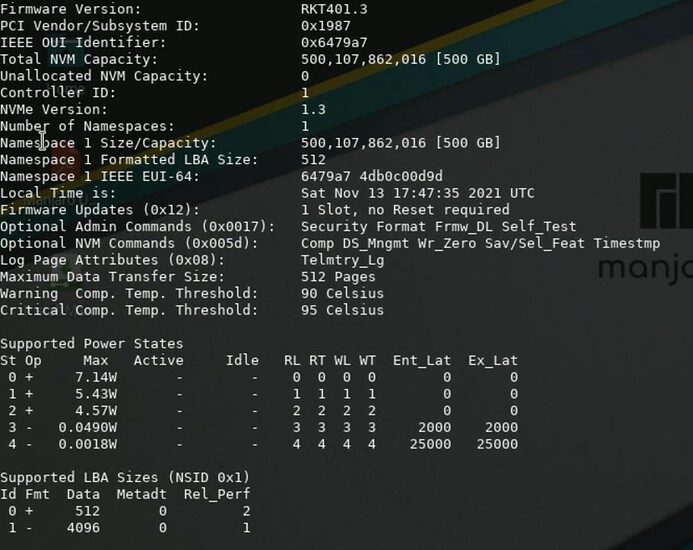

Drive Type 1: Sabrent Rocket 4.0 M.2 PCIe 4.0 NVMe:

So, it looks like these use a 512-byte LBA size by default, but can also support a 4096-byte LBA size?

So if I set ashift=12 (block size: 4096 bytes), Proxmox should format the drives that way, and all should be well?

Or do I need to do something else?
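For reference, my understanding is that ashift is the base-2 logarithm of the sector size ZFS assumes, which is where the 9 and 12 come from. A minimal Python sketch of that arithmetic; the function names are my own and not part of any ZFS or Proxmox tooling:

```python
def ashift_to_bytes(ashift: int) -> int:
    """Sector size in bytes implied by an ashift value (2 ** ashift)."""
    return 1 << ashift


def bytes_to_ashift(sector_bytes: int) -> int:
    """ashift for a power-of-two sector size; raises on anything else."""
    if sector_bytes <= 0 or sector_bytes & (sector_bytes - 1):
        raise ValueError(f"sector size must be a power of two, got {sector_bytes}")
    return sector_bytes.bit_length() - 1


if __name__ == "__main__":
    # The two sector sizes that show up in my smartctl output.
    for size in (512, 4096):
        print(f"{size} bytes -> ashift={bytes_to_ashift(size)}")
```

This only converts between the two notations. It says nothing about which value is actually right for a given drive.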

Drive Type 2: Lexar LS100 500 GB 2.5" SSD

The only reported value here is “logical/physical” at 512 bytes. So, I’m guessing I should set ashift to 9 (512 bytes)?

Or should I use 4096 again even though nothing is reporting that?

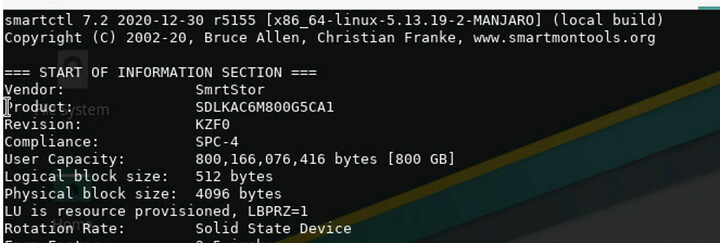

Drive Type 3: Sandisk SDLKAC6M800 G5CA1

Logical is 512.

Physical is 4096.

Frustration is up to 11.

Since physical is 4096, I’m thinking there’s no risk in using 4096 for ashift?
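My reasoning, as a rough Python sketch: the smallest block ZFS writes is 2^ashift bytes, so I'm checking whether that block covers a whole number of the physical sectors each drive reports. The helper names are hypothetical, the sector sizes are just the values smartctl reported for my drives (which may not reflect what the flash does internally), and I'm assuming the partitions themselves start on an aligned boundary.

```python
def min_block_bytes(ashift: int) -> int:
    """Smallest block ZFS will write for a given ashift (2 ** ashift bytes)."""
    return 1 << ashift


def covers_whole_sectors(ashift: int, physical_sector_bytes: int) -> bool:
    """True if the smallest ZFS block spans a whole number of physical sectors."""
    return min_block_bytes(ashift) % physical_sector_bytes == 0


# Physical sector sizes as reported by smartctl for my SATA/SAS drives.
REPORTED_PHYSICAL = {
    "Lexar LS100": 512,
    "Sandisk SDLKAC6M800": 4096,
}

if __name__ == "__main__":
    for drive, physical in REPORTED_PHYSICAL.items():
        for ashift in (9, 12):
            ok = covers_whole_sectors(ashift, physical)
            print(f"{drive}: ashift={ashift} covers whole {physical}-byte sectors: {ok}")
```

By this check, ashift=12 covers whole sectors on both drives, while ashift=9 would write blocks smaller than the 4096-byte sector the Sandisk reports.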
