Hi everyone! First post on this forum, which seems to be where I’m most likely to find the help I need… I hope I posted in the right category…

So here goes…

I run very large CFD (computational fluid dynamics) calculations on a daily basis. A single calculation sometimes takes several weeks, and rarely less than a day. As the first step of an upgrade from my previous Dell dual Xeon 6248R machine, I got my hands on a pair of Epyc 7773X CPUs. Yay!

I built my server (running Debian 10) with the following parts:

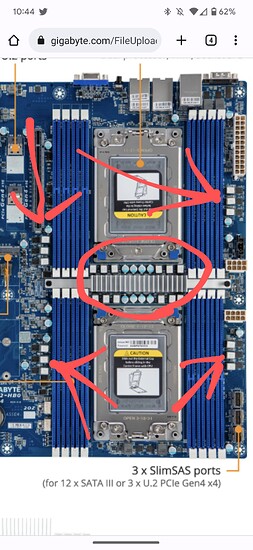

Motherboard: Gigabyte MZ72-HB0 (after trying a Supermicro H12DSi-NT6 that absolutely couldn’t handle the VRM dissipation)

CPU coolers: 2x Noctua NH-U14S TR4-SP3 with additional 150mm fans

RAM: 16x Samsung 32GB DDR4-3200 ECC (total 512GB)

System SSD: 2x Samsung 980 PRO 500GB M.2 in RAID 1 on a Startech PCIe 4.0 x8 → dual M.2 adapter

Calculation SSD: Samsung PM1735 3.2TB PCIe 4.0

Storage HDD: 2x WD Ultrastar HC520 12TB in RAID 1

Power supply: Corsair AX1600i

Case: Fractal Define large tower with as many Noctua fans as I could install!

The machine works. And it’s fast.

For those of you familiar with this kind of application: yes, depending on the size of the problem I’m trying to solve, it is sometimes faster to run the case on 64 cores instead of 128, because memory bandwidth becomes the limit. But large problems are faster on 128 cores.
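A back-of-the-envelope sketch of that bandwidth argument. It assumes theoretical peak figures only (8 DDR4 channels per socket, 8 bytes per transfer, one DDR4-3200 DIMM per channel); nothing here is measured on this machine, and real sustained bandwidth will be lower. The function and constant names are just for illustration.

```python
# Theoretical peak memory bandwidth per core, for illustration only.
SOCKETS = 2
CHANNELS_PER_SOCKET = 8      # assumption: 8 DDR4 channels per SP3 socket
MEGA_TRANSFERS_PER_S = 3200  # DDR4-3200
BYTES_PER_TRANSFER = 8       # 64-bit data bus per channel


def peak_bandwidth_gbs(sockets, channels, mts, bytes_per_transfer):
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes)."""
    return sockets * channels * mts * bytes_per_transfer / 1000


def bandwidth_per_core_gbs(total_gbs, cores):
    """Even share of the total bandwidth for each active core."""
    return total_gbs / cores


total = peak_bandwidth_gbs(
    SOCKETS, CHANNELS_PER_SOCKET, MEGA_TRANSFERS_PER_S, BYTES_PER_TRANSFER
)
print(f"total peak: {total:.1f} GB/s")
for cores in (64, 128):
    share = bandwidth_per_core_gbs(total, cores)
    print(f"{cores:3d} cores: {share:.1f} GB/s per core")
```

Going from 64 to 128 active cores halves the bandwidth available to each core, which is why a bandwidth-bound case can stop scaling, or even slow down, on the full core count.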

The problem I have is that this server isn’t completely stable.

It will run for days and then crash. When it does, I believe it’s a hardware crash. No trace of anything in the OS logs or the IPMI logs.

These days, it crashes basically every night (not at a given hour). Of course, I lose part of my calculation every time!

It has a tendency to crash when under stress (and heat). There are occasional “CPU throttling” messages in the IPMI, although the CPU cooling is very efficient, and the temp sensors never rise above 75%. The VRM temp is often around 97°C when under full load (alarm temp is 115°C according to Gigabyte).
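Since the crashes leave nothing in the logs, one possible way to capture the temperatures right before a crash is a small logger that forces each sample to disk. The following is a minimal sketch, assuming the Linux hwmon sysfs layout (`/sys/class/hwmon/hwmon*/temp*_input`, values in millidegrees Celsius). Which sensors appear there depends on the loaded kernel drivers, and the VRM reading shown by the IPMI may not be among them. The function names and the log file name are hypothetical.

```python
import glob
import os
import time


def read_temps(root="/sys/class/hwmon"):
    """Return {sensor_path: degrees_C} for every temp*_input file under root."""
    temps = {}
    for path in sorted(glob.glob(os.path.join(root, "hwmon*", "temp*_input"))):
        try:
            with open(path) as f:
                # sysfs reports temperatures in millidegrees Celsius
                temps[path] = int(f.read().strip()) / 1000.0
        except (OSError, ValueError):
            continue  # sensor unreadable at this moment; skip it
    return temps


def append_sample(log_path, temps):
    """Append one timestamped CSV line per sensor and force it to disk."""
    stamp = time.strftime("%Y-%m-%d %H:%M:%S")
    with open(log_path, "a") as log:
        for path, deg in temps.items():
            log.write(f"{stamp},{path},{deg:.1f}\n")
        log.flush()
        os.fsync(log.fileno())  # so the last sample survives a hard reset


def log_loop(log_path, interval_s=5, root="/sys/class/hwmon"):
    """Sample forever; stop with Ctrl-C."""
    while True:
        append_sample(log_path, read_temps(root))
        time.sleep(interval_s)
```

Calling `log_loop("sensor_trace.csv")` in a separate terminal would then leave the last readings on disk after a reboot. The `fsync` on every sample costs a little I/O, but without it the final lines could still be sitting in the page cache when the machine goes down.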

But it will also sometimes reboot three times in an hour with no load at all.

I haven’t figured out what’s causing this, and more importantly, how to solve it.

I have moved the PCIe cards (system and calculation SSDs) up to slots 5 and 6 to shorten the electrical links. I still suspect that the Startech M.2 adapter isn’t as “professional” as the rest of the components, but the motherboard only has one M.2 slot…

If I don’t manage to solve this, my next move will be to install the machine in a Fractal Torrent or a 4-5U rack server case with Arctic Freezer 4U SP3 coolers (thanks, Level1Techs YT!), but that’s an expensive experiment if heat isn’t the main factor causing the issue…

Any ideas or help would be greatly appreciated!

Have a great day,

David

PS: Pics in the thread below (post 20)