What are we doing?

ZFS – once you know, it’s hard to go back.

ZFS has a fair bit of overhead, and I suspect there are changes coming down the pike to better support performance of NVMe devices, but ZFS is unmatched when it comes to engineering and stability.

For me, it is worth the performance tradeoff to have the redundancy and reliability.

In this writeup, I’m going to share some performance numbers and tuning. (Note: ZFS vets, share your tips and tricks too, please!) The “ingredients” for this writeup are aimed at someone with a fairly high-end workstation:

- Intel Xeon or AMD Threadripper, or equivalent platform (lots of PCIe lanes)

- Multiple devices for Speed and Resiliency

- 4x NVMe (I’m using Kioxia 1tb M.2 NVMe drives and they are nice)

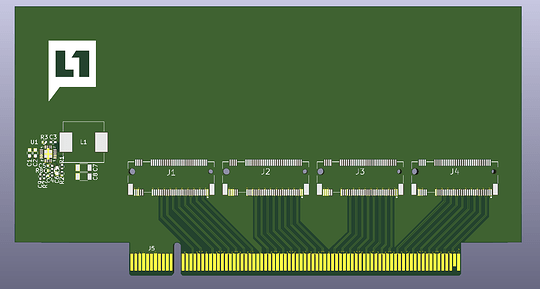

- Gigabyte CMT4034 compact 4x NVMe to PCIe x16 adapter.

For Intel Z490/Z390/Z370 users

I don't recommend bothering with anything except perhaps mirroring of two NVMe devices. Generally, these motherboards are troublesome because they have multiple NVMe slots wired through the DMI interface, which itself is limited to roughly 4 GB/s (DMI 3.0 is about as wide as a single PCIe 3.0 x4 link, and every chipset-attached drive shares it). For 4 NVMe drives, it just doesn't make sense. It is also troublesome, in my experience, to try to get the 16 lanes from the CPU split up as x8 to your GPU and then 2x x4 to two NVMe, because most motherboards don't support this well.

Note for X470 and X570 users

It is possible to run NVMe as follows for an optimal setup: 1x NVMe through the CPU NVMe lanes, 1x NVMe through the chipset, and 2x NVMe through an add-in card that splits the second x8 slot into x4, x4. This often requires setting the slot to “x4x4” in the BIOS, so you must be sure you get a board that supports that.

As such I recommend going for a mirrored configuration only on AMD X470/X570 using just two NVMe and not 4.

For Intel X299 Users

Intel offers VROC under some somewhat screwy conditions on their X299 motherboards. I have several “VROC Keys” that unlock different types of VROC. The idea is that the CPU would handle some of the raid functions more transparently. This is actually a legit concern – Windows really struggles with bootable software raid based arrays and this is an attempt at handling NVMe redundancy kinda-sorta in hardware. Unfortunately the marketing team seems to have gimped what the engineers tried to give us, rendering it nearly unusable in this context and scenario. I’ve never been able to get it to work, on any operating system, to my liking. For ZFS specifically, it is always best to have ZFS deal directly with the underlying block storage devices so VROC is not applicable here for that reason alone.

Where are the bodies buried with ZFS?

I love ZFS, but the ZFS design predates the idea that storage arrays are approaching main memory bandwidth limits. Track this thread on GitHub:

Eventually we’ll get “real” Direct I/O support for ZFS pools. For this 4-up NVMe setup, it’s not that bad. It’s also possible to use a striped mirror (two mirrored vdevs), but then only half the raw capacity is usable. That’s quite a fast setup, though.
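For reference, a striped mirror is just two `mirror` groups listed in one `zpool create`. Here is a minimal sketch, assuming a pool called `tank` and four placeholder device paths (the `nvme-A` through `nvme-D` names are made up for illustration, not my actual devices). It only prints the command, because `zpool create` is destructive:

```shell
# Build (but do not run) a zpool create command for a striped mirror.
# Pool name and device paths are placeholders; substitute your own.
striped_mirror_cmd() {
    pool=$1
    shift
    # Devices are consumed two at a time, so an even count is required.
    if [ $# -lt 2 ] || [ $(( $# % 2 )) -ne 0 ]; then
        echo "need an even number of devices (at least 2)" >&2
        return 1
    fi
    cmd="zpool create -o ashift=12 $pool"
    while [ $# -gt 0 ]; do
        cmd="$cmd mirror $1 $2"   # each pair becomes one mirrored vdev
        shift 2
    done
    echo "$cmd"
}

striped_mirror_cmd tank \
    /dev/disk/by-id/nvme-A /dev/disk/by-id/nvme-B \
    /dev/disk/by-id/nvme-C /dev/disk/by-id/nvme-D
```

Review the printed command before pasting it into a root shell. ZFS stripes across the two mirror vdevs automatically.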

This is pretty much the only downside of ZFS. I am aware of BTRFS and I use it. It can be fine, too, as an alternative to ZFS, depending on your use case. My preference, for technical reasons, is still ZFS.

What are we working with?

Here we are – for the guide I experimented with Kioxia BGA4 NVMe as well as more standard 80mm m.2 from Kioxia – all 1tb in size as that’s the sweet spot for capacity/performance/endurance right now, imho.

I’m using the Asus ROG Zenith II Extreme Alpha with Threadripper. In this case, Threadripper 3990X. This motherboard has a total of 5 NVMe slots – two on a dimm and 3 on the motherboard itself. Many Threadripper motherboards also have add-in cards to support a large number of m.2 devices.

For this guide, I will use the Gigabyte CMT4034 carrier, which is a half-height, half-length PCIe card. This card is designed for use in servers and places where there is high airflow. If you use it, be sure it has appropriate airflow. It is unnecessary with the ROG motherboard, and with many other Threadripper motherboards. It also wastes a PCIe slot I could otherwise use for another add-in card (vs using onboard M.2).

Alternatives to m.2 cards

There are M.2 carrier cards on the market now that offer a PLX bridge, including a HighPoint device which also offers RAID functions. With this, it would be possible to use an x8 slot, for example, to support 4x x4 NVMe devices at full bandwidth (until you hit the upstream limit of the x8 PLX bridge).

This would be awesome if you could get a PCIe 4.0 PLX bridge because x8 PCIe 4.0 = PCIe 3.0 x16 worth of bandwidth, and that’s plenty of bandwidth for storage. Currently, I know of no such beast on the horizon.
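The lane math is easy to check. This is a rough sketch that only accounts for the per-lane transfer rate (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) and 128b/130b encoding; real-world throughput is lower once protocol overhead is included. The function name is my own:

```shell
# Approximate raw PCIe link bandwidth in GB/s.
# usage: pcie_gbytes_per_sec <gen: 3|4> <lanes>
pcie_gbytes_per_sec() {
    awk -v gen="$1" -v lanes="$2" 'BEGIN {
        if (gen == 3)      gt = 8     # GT/s per lane, PCIe 3.0
        else if (gen == 4) gt = 16    # GT/s per lane, PCIe 4.0
        else               exit 1     # other generations not handled here
        # 128b/130b encoding, then bits to bytes
        printf "%.2f\n", lanes * gt * 128 / 130 / 8
    }'
}

echo "PCIe 3.0 x16:            $(pcie_gbytes_per_sec 3 16) GB/s"
echo "PCIe 4.0 x8:             $(pcie_gbytes_per_sec 4 8) GB/s"
echo "One PCIe 3.0 x4 NVMe:    $(pcie_gbytes_per_sec 3 4) GB/s"
echo "PCIe 3.0 x8 bridge link: $(pcie_gbytes_per_sec 3 8) GB/s"
```

The first two lines come out identical, which is the x8 PCIe 4.0 = x16 PCIe 3.0 point. The last two show why four x4 drives behind a PCIe 3.0 x8 bridge can oversubscribe the upstream link by about 2:1.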

Liqid offers some killer all-in-one HHHL cards that have a PLX bridge and all the performance you need. They’re tailor-made for what we’re doing here, and often use Kioxia devices for their underlying storage. That’s the “off-the-shelf” solution for what we’re doing here, if you don’t want to DIY it.

Hardware Setup

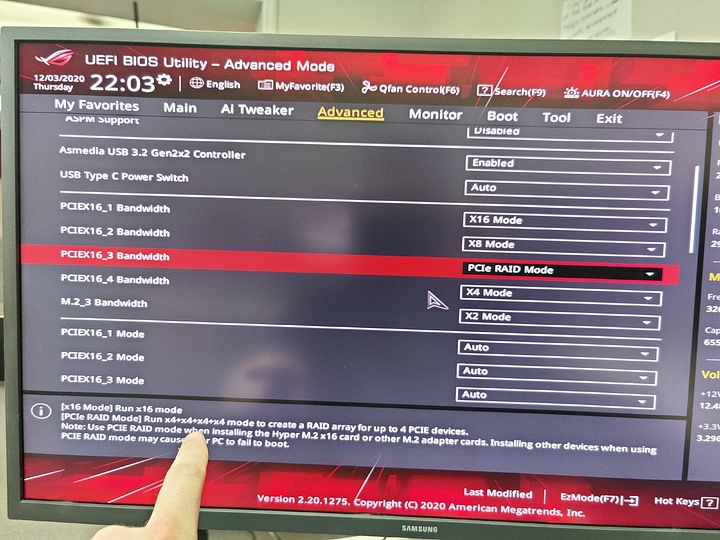

Most motherboards refer to “x4x4x4x4” mode, but Asus refers to it as PCIe RAID mode. Not to be confused with AMD’s RaidXpert.
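Once the slot is bifurcated, it is worth confirming from Linux that every drive shows up and negotiated a full x4 link. A small sketch, assuming the controllers appear under `/sys/class/nvme` and expose the standard PCI `current_link_speed` and `current_link_width` sysfs attributes (the function name is mine):

```shell
# List each NVMe controller with its negotiated PCIe link speed and width.
# The optional argument overrides the sysfs directory (handy for testing).
nvme_links() {
    root=${1:-/sys/class/nvme}
    for ctrl in "$root"/nvme*; do
        # Skip entries without PCI link attributes (or an unmatched glob).
        [ -r "$ctrl/device/current_link_width" ] || continue
        printf '%s: %s, x%s\n' "${ctrl##*/}" \
            "$(cat "$ctrl/device/current_link_speed")" \
            "$(cat "$ctrl/device/current_link_width")"
    done
}

nvme_links
```

If a drive is missing, or reports less than x4, recheck the bifurcation setting and which slot the carrier card is in.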

Ubuntu Fresh Install

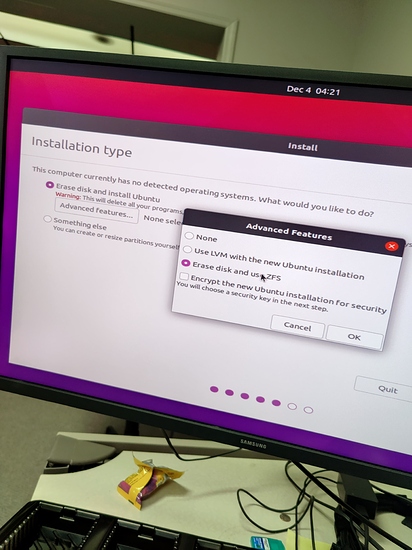

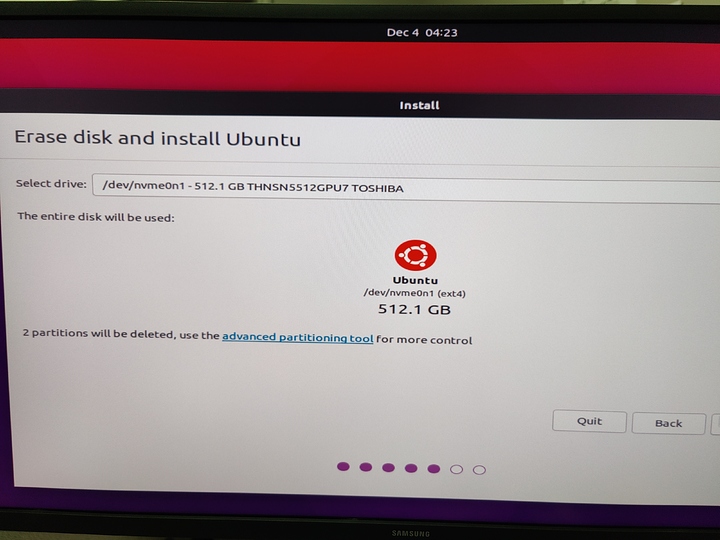

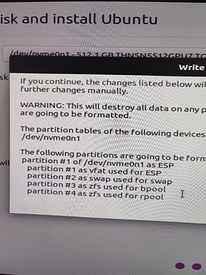

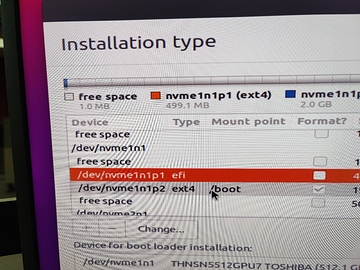

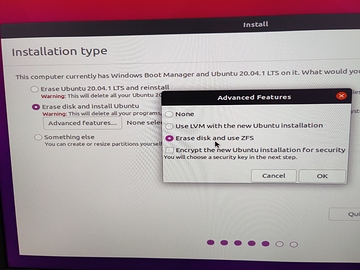

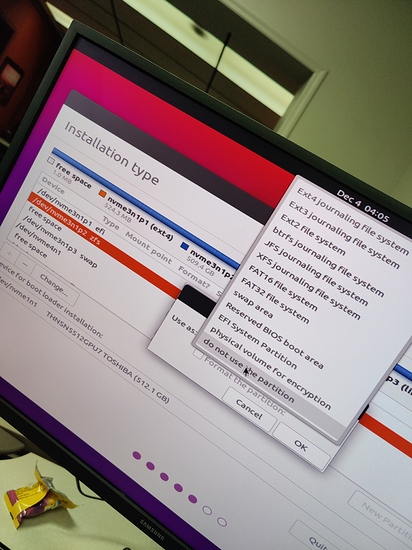

“The Ubuntu installer can do ZFS on root!” – well, not so fast. It’s really rough. I’d recommend you remove any other disks you do NOT plan to use with the Ubuntu installer. Dual booting? Pop that disk out!

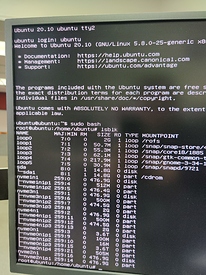

It’s possible to set up ZFS right from the Ubuntu installer, but only when you do it automatically. This creates a couple of ZFS storage pools: bpool for /boot and rpool for / (the root filesystem).

With four 1tb drives in a raidz, this will give us 3tb of usable space, and any one of our 1tb NVMe can die and our computer won’t miss a beat. Modern versions of ZFS support TRIM just fine, so the NVMe performance shouldn’t fall off as the drives are used.
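The capacity tradeoff between layouts is simple arithmetic. A rough sketch that ignores ZFS metadata and padding overhead (the layout labels are my own shorthand, not zpool keywords):

```shell
# Rough usable capacity in TB for equally sized drives.
# usage: usable_tb <layout> <drive_count> <tb_per_drive>
usable_tb() {
    case $1 in
        raidz1)       echo $(( ($2 - 1) * $3 )) ;;  # one drive's worth of parity
        mirror-pairs) echo $(( $2 / 2 * $3 )) ;;    # half the drives hold copies
        stripe)       echo $(( $2 * $3 )) ;;        # no redundancy at all
        *)            echo "unknown layout: $1" >&2; return 1 ;;
    esac
}

echo "raidz1, 4x 1tb:         $(usable_tb raidz1 4 1) TB"
echo "striped mirror, 4x 1tb: $(usable_tb mirror-pairs 4 1) TB"
```

So raidz keeps one more drive of usable space than the striped mirror, at the cost of parity calculations on every write.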

It is worth doing some experiments for your use case in terms of creating dataset(s) for your files and seeing what works well for performance for you.
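As a starting point for that kind of experiment, here is a sketch that creates two datasets with different record sizes. The dataset names (`tank/vm`, `tank/media`) and the property values are hypothetical examples, not tuned recommendations. By default the script only prints the commands; set `DRY_RUN=0` to really run them:

```shell
# Print commands by default; run them only when DRY_RUN=0.
DRY_RUN=${DRY_RUN:-1}
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Small records for VM images, large records for big sequential files.
run zfs create -o recordsize=16K -o compression=lz4 tank/vm
run zfs create -o recordsize=1M -o compression=lz4 tank/media

# Confirm what each dataset ended up with.
run zfs get recordsize,compression tank/vm tank/media
```

Properties are per dataset, so you can benchmark the same workload against each one and keep whichever behaves best.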

TODO Here’s how I setup Ubuntu

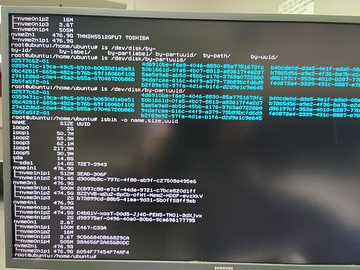

lsblk -o name,size,uuid

zpool create -o ashift=12 tank raidz /dev/disk/by-partuuid/something ...

Halfbakery

Note: In general, if you set up /home on a separate partition, it makes it easier to totally reinstall your OS or to distro-hop. The downside is guesstimating a size for / without /home. I usually go for 250-450gb for /, not including home, especially when I’ve got more than 2tb of total disk space. YMMV, however.

In Conclusion

With the relatively high density and low cost of flash, everyone can enjoy some redundancy and fault-tolerance on their workstations these days. Sure, flash is way more reliable than spinning rust, but that doesn’t mean it is bulletproof. And RAID, ZFS, snapshots, etc. are no substitute for a functioning backup system. Thanks to Kioxia for supplying some hardware for my mad science. I’m very satisfied with the performance here.

And like you said, $$$.
