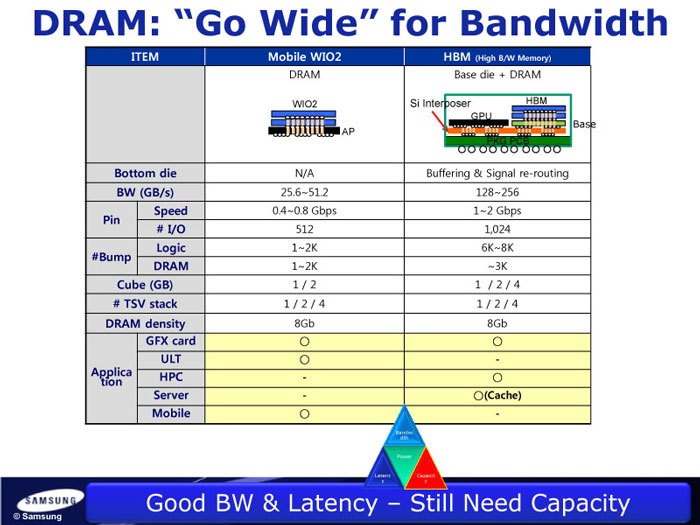

Samsung certainly seems to think so if this is to be believed:

Of course L2 and L3 caches are still quite a bit faster than HBM. Using Intel's Memory Latency Checker it shows that L1-L2 responses are in the 20-35ns range (Haswell). HBM is supposedly designed to have faster access times, but the row cycles are still 40-48ns like that of DDR3/DDR4. Again with Intel's tool you can test that DDR3 has a peak response of about 74ns (given small data sets). So perhaps HBM is in the 50-60ns range, which would certainly be better than nothing for an L4 cache.

Honestly information on HBM's actual operating parameters are still closely guarded by JEDEC, so it's all speculation until implementations are launched and we can observe what the scope of HBM's application is.