Just curious how others do this kind of thing; here is what I came up with and why.

The main part of my business is remote Linux server management and consulting services (Shameless plug: https://hostfission.com), and as such I need a way to store critically sensitive information for my clients, specifically server access details.

A company I previously worked for, which shall remain nameless, used a single SSH key and deployed its public key onto every server they needed access to. The obvious danger is that if a rogue employee takes a copy of the private key, all bets are off. This never sat well with me, so when I started working for myself I decided there needed to be a better solution.

I wrote a management system to store my clients’ details in encrypted form. The protection model is based on how LUKS works: a single master private key, which is encrypted with a user’s private key, which is in turn encrypted using a very strong password that only that user knows. This way the data in the database cannot be decrypted without breaking one of the users’ passwords, which is effectively the key to their key.
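To make the key-wrapping chain concrete, here is a minimal Python sketch of a LUKS-style hierarchy: a password-derived key unwraps a per-user key, which in turn unwraps the shared master key. It is a stdlib-only illustration, not the system described here. Several things are assumptions. The per-user key is modelled as a random 256-bit symmetric key rather than a private key. PBKDF2 stands in for whatever KDF is really used. The HMAC-SHA256 keystream with encrypt-then-MAC is a stand-in for a vetted AEAD such as AES-GCM from a proper crypto library. The names `wrap`, `unwrap`, `enroll_user` and `unlock_master` are hypothetical.

```python
import hashlib
import hmac
import secrets

NONCE_LEN = 16
TAG_LEN = 32
PBKDF2_ITERATIONS = 200_000  # assumption: tune for your hardware


def _subkeys(kek: bytes) -> tuple:
    # Derive independent encryption and MAC keys from one key-encryption key.
    return (hmac.new(kek, b"enc", hashlib.sha256).digest(),
            hmac.new(kek, b"mac", hashlib.sha256).digest())


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # HMAC-SHA256 in counter mode, used as a PRF-based stream cipher (illustration only).
    blocks = [hmac.new(key, nonce + i.to_bytes(8, "big"), hashlib.sha256).digest()
              for i in range((length + 31) // 32)]
    return b"".join(blocks)[:length]


def wrap(kek: bytes, secret: bytes) -> bytes:
    """Encrypt-then-MAC `secret` under `kek`; returns nonce || ciphertext || tag."""
    enc_key, mac_key = _subkeys(kek)
    nonce = secrets.token_bytes(NONCE_LEN)
    ct = bytes(a ^ b for a, b in zip(secret, _keystream(enc_key, nonce, len(secret))))
    tag = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    return nonce + ct + tag


def unwrap(kek: bytes, blob: bytes) -> bytes:
    """Check the tag first, then decrypt; raises ValueError on a wrong key or tampering."""
    enc_key, mac_key = _subkeys(kek)
    nonce, ct, tag = blob[:NONCE_LEN], blob[NONCE_LEN:-TAG_LEN], blob[-TAG_LEN:]
    expected = hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("wrong key or corrupted blob")
    return bytes(a ^ b for a, b in zip(ct, _keystream(enc_key, nonce, len(ct))))


def _password_key(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, PBKDF2_ITERATIONS)


def enroll_user(master_key: bytes, password: str) -> dict:
    """One 'key slot': a fresh user key wrapped by the password, and the master key wrapped by the user key."""
    salt = secrets.token_bytes(16)
    user_key = secrets.token_bytes(32)
    return {
        "salt": salt,
        "wrapped_user_key": wrap(_password_key(password, salt), user_key),
        "wrapped_master_key": wrap(user_key, master_key),
    }


def unlock_master(slot: dict, password: str) -> bytes:
    # Password -> user key -> master key; any wrong link raises ValueError.
    user_key = unwrap(_password_key(password, slot["salt"]), slot["wrapped_user_key"])
    return unwrap(user_key, slot["wrapped_master_key"])
```

Adding another user only adds another slot; the master key itself never changes, which mirrors how LUKS key slots let several passphrases unlock the same volume key.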

But I still don’t want a user to be able to access the data or the master private key directly, so once they have logged in, a broker process is forked for the duration of the session to mediate access to the encrypted data. This process runs under a special user account by means of setuid. A series of utility tools provide a means to query information from the broker.
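A minimal sketch of the broker idea, assuming a Unix-like system: the session forks a broker child that alone loads the decrypted records, and the session side can only send named queries over a `socketpair`. The one-line JSON request format, the field names and the `ALLOWED_FIELDS` policy are hypothetical. In the real system the broker also runs under a dedicated account via setuid; that needs root, so the sketch only marks the spot in a comment.

```python
import json
import os
import socket

# Hypothetical policy: fields a session tool may read. Key material is never served.
ALLOWED_FIELDS = {"hostname", "ip", "port"}


def _serve(sock: socket.socket, records: dict) -> None:
    """Broker loop: one JSON request per line until the session side disconnects."""
    # A real broker would be a setuid binary (or drop to a dedicated account here
    # while still root) so the logged-in user cannot read its memory.
    rfile, wfile = sock.makefile("r"), sock.makefile("w")
    for line in rfile:
        try:
            req = json.loads(line)
            host, field = req["host"], req["field"]
        except (ValueError, KeyError, TypeError):
            host = field = None
        ok = (isinstance(host, str) and isinstance(field, str)
              and field in ALLOWED_FIELDS and field in records.get(host, {}))
        reply = {"ok": True, "value": records[host][field]} if ok else {"ok": False, "error": "denied"}
        wfile.write(json.dumps(reply) + "\n")
        wfile.flush()


class BrokerClient:
    """Session-side handle: it can ask questions but never holds the decrypted store."""

    def __init__(self, sock: socket.socket, pid: int):
        self.pid = pid
        self._r, self._w = sock.makefile("r"), sock.makefile("w")
        sock.close()  # the file objects keep the connection open until they are closed

    def query(self, host: str, field: str) -> dict:
        self._w.write(json.dumps({"host": host, "field": field}) + "\n")
        self._w.flush()
        return json.loads(self._r.readline())

    def close(self) -> int:
        self._w.close()
        self._r.close()  # broker sees EOF and exits
        _, status = os.waitpid(self.pid, 0)
        return status


def start_broker(load_records) -> BrokerClient:
    parent_sock, child_sock = socket.socketpair()
    pid = os.fork()
    if pid == 0:
        # Child: the broker. Only this process ever calls the loader.
        parent_sock.close()
        code = 1
        try:
            _serve(child_sock, load_records())
            code = 0
        finally:
            os._exit(code)
    child_sock.close()
    return BrokerClient(parent_sock, pid)
```

The design point is that the parent process never calls `load_records`, so even a compromised session tool can only ask the questions the broker is willing to answer.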

OK, so now that there is a way to store and load data in a secure system, there is still the issue of authenticating with a remote host. Initially I was using the same method as my previous company, but I cringed every time I thought about it.

I had three goals here:

- Do not allow the admin to ever have access to the server’s private key

- Do not use the same key on more than one host

- Do not interrupt the standard workflow, keep key auth automated.

So, here is what I came up with. I wrote an SSH wrapper which performs the following:

- Forks a custom SSH agent

- Sets the environment up to use the custom agent

- Runs ssh with the appropriate arguments to connect to the correct host
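The wrapper’s three steps map onto a few lines of Python. This is a sketch under assumptions. `hf-agent` is a hypothetical name for the custom agent binary, and its `--socket`/`--host` flags are invented; it is assumed to create its listening socket once it is ready. The one real mechanism relied on is that OpenSSH’s `ssh` locates its agent through the `SSH_AUTH_SOCK` environment variable.

```python
import os
import shutil
import subprocess
import tempfile
import time

AGENT_BINARY = "hf-agent"  # hypothetical custom agent executable


def agent_env(socket_path: str, base_env=None) -> dict:
    """Copy of the environment with ssh pointed at the per-host custom agent."""
    env = dict(os.environ if base_env is None else base_env)
    env["SSH_AUTH_SOCK"] = socket_path
    env.pop("SSH_AGENT_PID", None)  # that PID belongs to the user's own agent, not ours
    return env


def ssh_argv(host: str, user: str = "root", port: int = 22) -> list:
    # -A forwards the custom agent so tools on the remote side can reach it
    # (an assumption about how the remote tools talk to the agent).
    return ["ssh", "-A", "-p", str(port), f"{user}@{host}"]


def connect(host: str, user: str = "root", port: int = 22, timeout: float = 5.0) -> int:
    workdir = tempfile.mkdtemp(prefix="hf-agent-")  # mkdtemp creates it with mode 0700
    sock_path = os.path.join(workdir, "agent.sock")
    agent = None
    try:
        # Step 1: fork the custom agent. It inherits the *original* SSH_AUTH_SOCK,
        # so it can relay sign requests for forwarded keys to the upstream agent.
        agent = subprocess.Popen([AGENT_BINARY, "--socket", sock_path, "--host", host])
        deadline = time.monotonic() + timeout
        while not os.path.exists(sock_path):
            if agent.poll() is not None or time.monotonic() > deadline:
                raise RuntimeError("custom agent failed to start")
            time.sleep(0.05)
        # Steps 2 and 3: point ssh at the custom agent and run it interactively.
        return subprocess.call(ssh_argv(host, user, port), env=agent_env(sock_path))
    finally:
        if agent is not None:
            agent.terminate()
            agent.wait()
        shutil.rmtree(workdir, ignore_errors=True)
```

Calling `connect("web01.example.com")` would return ssh’s exit status and tear the per-host agent down afterwards, so no agent outlives the session.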

The custom SSH agent obtains the public key for the server in question from the broker, plus any public keys from forwarded agents, and combines them into one list for the ssh client, which then offers them to the remote host. When the remote host accepts one and the client asks the agent to sign the challenge, the agent will do one of two things:

- If the public key is the server key, it will ask the broker to sign the challenge with the private key; or

- If the public key is a forwarded key, it will ask the forwarded agent to sign the challenge.
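The routing can be sketched against the standard SSH agent wire protocol, as documented in OpenSSH’s `PROTOCOL.agent` and the ssh-agent Internet-Draft. Every message is a 4-byte big-endian length, a type byte and a payload, and strings are length-prefixed. The sketch answers `SSH_AGENTC_REQUEST_IDENTITIES` with the merged key list and routes `SSH_AGENTC_SIGN_REQUEST` by key blob. The `broker_sign` and `forwarded_sign` callables are hypothetical stand-ins for the broker query and for relaying to the forwarded agent’s socket. Socket handling is omitted.

```python
import struct

SSH_AGENT_FAILURE = 5
SSH_AGENTC_REQUEST_IDENTITIES = 11
SSH_AGENT_IDENTITIES_ANSWER = 12
SSH_AGENTC_SIGN_REQUEST = 13
SSH_AGENT_SIGN_RESPONSE = 14


def pack_string(data: bytes) -> bytes:
    return struct.pack(">I", len(data)) + data


def read_string(buf: bytes, offset: int) -> tuple:
    """Return (string, next_offset) for a length-prefixed string at `offset`."""
    (length,) = struct.unpack_from(">I", buf, offset)
    start = offset + 4
    if start + length > len(buf):
        raise ValueError("truncated string")
    return buf[start:start + length], start + length


def frame(msg_type: int, payload: bytes = b"") -> bytes:
    body = bytes([msg_type]) + payload
    return struct.pack(">I", len(body)) + body


class RoutingAgent:
    def __init__(self, server_key: bytes, broker_sign, forwarded_keys, forwarded_sign):
        self.server_key = server_key          # public key blob supplied by the broker
        self.broker_sign = broker_sign        # (data, flags) -> signature blob
        self.forwarded_keys = forwarded_keys  # [(key_blob, comment)] from forwarded agents
        self.forwarded_sign = forwarded_sign  # (key_blob, data, flags) -> signature blob

    def handle(self, body: bytes) -> bytes:
        """Handle one message body (type byte + payload, length prefix already stripped)."""
        try:
            msg_type = body[0]
            if msg_type == SSH_AGENTC_REQUEST_IDENTITIES:
                keys = [(self.server_key, b"server key (broker)")] + list(self.forwarded_keys)
                payload = struct.pack(">I", len(keys))
                for blob, comment in keys:
                    payload += pack_string(blob) + pack_string(comment)
                return frame(SSH_AGENT_IDENTITIES_ANSWER, payload)
            if msg_type == SSH_AGENTC_SIGN_REQUEST:
                key_blob, off = read_string(body, 1)
                data, off = read_string(body, off)
                (flags,) = struct.unpack_from(">I", body, off)
                if key_blob == self.server_key:
                    sig = self.broker_sign(data, flags)  # private key stays in the broker
                elif any(key_blob == blob for blob, _ in self.forwarded_keys):
                    sig = self.forwarded_sign(key_blob, data, flags)
                else:
                    return frame(SSH_AGENT_FAILURE)
                return frame(SSH_AGENT_SIGN_RESPONSE, pack_string(sig))
        except (IndexError, ValueError, struct.error):
            pass
        return frame(SSH_AGENT_FAILURE)
```

Because the agent only ever sees signatures coming back from the broker, the admin’s session can authenticate with the server key without the private key being present on the management host’s filesystem or in the admin’s process.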

On connection, a small Python script is pushed to the remote host and used to bootstrap an environment consisting of my admin tools and scripts. Some of these scripts require access to information in the management database, so I extended the SSH agent protocol to implement a side channel for communicating with the broker back on the other end.
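One plausible way to carry such a side channel is the agent protocol’s own extension mechanism; the post does not say how it was actually done. `SSH_AGENTC_EXTENSION` (type 27) carries an extension name in `name@domain` form followed by arbitrary contents, and an agent that does not recognise the name answers `SSH_AGENT_FAILURE`. The extension name `hf-query@example.com`, the JSON payload and the `SSH_AGENT_SUCCESS`-plus-JSON reply shape are this sketch’s own choices. The client half talks to whatever socket `SSH_AUTH_SOCK` names, so over a forwarded connection it reaches the custom agent, which relays the request to the broker.

```python
import json
import os
import socket
import struct

SSH_AGENT_FAILURE = 5
SSH_AGENT_SUCCESS = 6
SSH_AGENTC_EXTENSION = 27
EXTENSION_NAME = b"hf-query@example.com"  # hypothetical extension name


def pack_string(data: bytes) -> bytes:
    return struct.pack(">I", len(data)) + data


def frame(msg_type: int, payload: bytes = b"") -> bytes:
    body = bytes([msg_type]) + payload
    return struct.pack(">I", len(body)) + body


def build_query(request: dict) -> bytes:
    """Remote-tool side: wrap a JSON request in an SSH_AGENTC_EXTENSION message."""
    return frame(SSH_AGENTC_EXTENSION, pack_string(EXTENSION_NAME) + json.dumps(request).encode())


def handle_extension(body: bytes, broker) -> bytes:
    """Custom-agent side: route our extension to the broker, refuse anything else."""
    if len(body) < 5 or body[0] != SSH_AGENTC_EXTENSION:
        return frame(SSH_AGENT_FAILURE)
    (name_len,) = struct.unpack_from(">I", body, 1)
    if body[5:5 + name_len] != EXTENSION_NAME:
        return frame(SSH_AGENT_FAILURE)
    try:
        answer = broker(json.loads(body[5 + name_len:]))
    except Exception:  # never leak broker errors to the remote side
        return frame(SSH_AGENT_FAILURE)
    return frame(SSH_AGENT_SUCCESS, json.dumps(answer).encode())


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("agent closed the connection")
        buf += chunk
    return buf


def query_broker(request: dict, sock_path=None) -> dict:
    """Send one side-channel request through the (forwarded) agent socket."""
    path = sock_path or os.environ["SSH_AUTH_SOCK"]
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(path)
        sock.sendall(build_query(request))
        (length,) = struct.unpack(">I", _recv_exact(sock, 4))
        body = _recv_exact(sock, length)
    if not body or body[0] != SSH_AGENT_SUCCESS:
        raise RuntimeError("agent refused the request")
    return json.loads(body[1:])
```

Reusing the agent socket means the side channel rides inside the existing encrypted, authenticated SSH connection, so no extra port or credential is needed on the remote host.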

One task I use this extra channel for is signing certificates used with the backup server. I deploy a script in the bootstrap environment that generates a private key and CSR, then asks the broker, via the SSH agent, to sign it. After the broker inspects the CSR and verifies that it is valid, it signs it and returns the certificate. This way, with a single command, I can generate a valid certificate over an encrypted channel without the private key ever crossing the wire.
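The broker-side check is the interesting part: it should only sign a request whose subject matches the host the session is actually connected to. Here is a sketch that assumes the OpenSSL command-line tool is available on both ends and that the expected name comes from the management database. The remote script runs `openssl req -new`, so the private key stays on the remote host and only the CSR text travels over the side channel. The file names, the one-year validity, the RSA key size and the strict "subject must be exactly `CN=<host>`" policy are hypothetical. OpenSSL’s exact output wording also varies between releases, and a real broker should also check key type, size and requested extensions.

```python
import subprocess


def remote_keygen_cmd(cn: str, key_path: str = "backup.key", csr_path: str = "backup.csr") -> list:
    # Runs on the remote host: the private key is written locally and never sent anywhere.
    return ["openssl", "req", "-new", "-newkey", "rsa:3072", "-nodes",
            "-keyout", key_path, "-out", csr_path, "-subj", f"/CN={cn}"]


def subject_allowed(openssl_stdout: str, expected_cn: str) -> bool:
    """Strict policy: the RFC 2253 subject must be exactly CN=<expected host>."""
    for line in openssl_stdout.splitlines():
        if line.startswith("subject="):
            return line[len("subject="):].strip() == f"CN={expected_cn}"
    return False


def broker_sign_csr(csr_pem: str, expected_cn: str,
                    ca_cert: str = "ca.crt", ca_key: str = "ca.key") -> str:
    """Broker side: verify the CSR's self-signature and subject, then sign it."""
    check = subprocess.run(
        ["openssl", "req", "-noout", "-verify", "-subject", "-nameopt", "RFC2253"],
        input=csr_pem, capture_output=True, text=True)
    # Older OpenSSL releases may exit 0 on a failed verify, so also look for "verify OK".
    verified = check.returncode == 0 and "verify OK" in check.stdout + check.stderr
    if not verified or not subject_allowed(check.stdout, expected_cn):
        raise PermissionError("CSR rejected")
    signed = subprocess.run(
        ["openssl", "x509", "-req", "-CA", ca_cert, "-CAkey", ca_key,
         "-CAcreateserial", "-days", "365"],
        input=csr_pem, capture_output=True, text=True, check=True)
    return signed.stdout  # PEM certificate, sent back over the side channel
```

Tying the allowed subject to the host the broker already knows the session is connected to means a compromised remote host can obtain a certificate for itself, but not for any other machine.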

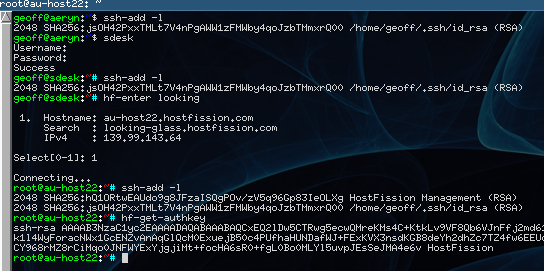

Anyway, enough talk; here it is in action.

This session shows the entire workflow.

- On my local PC I listed the keys in my local agent

- I connected to the management server; this uses my local key to authenticate and immediately tries to log in to the management software.

- Again I list the keys; you can see I forwarded the agent through to the management server.

- I then search for a server to connect to (enter) with the name `looking` as a search term. I can also specify other fields, such as hostname or IP, by using the syntax `field:search`.

- I selected the matching host to connect to it, and in the background my custom agent is spawned and configured for use with that host.

- Authentication is successful and the connection is established. This also bootstrapped the remote host with the management tools.

- I list the SSH keys again; this time you can see that the custom SSH agent has added the public key for this specific server to the list.

- I use the `hf-get-authkey` tool to ask the management server, via the SSH agent side channel, what this server’s public key should be. This is used when I am adding a new server to the database for management: after connecting via password auth, I simply run `hf-get-authkey >> /root/.ssh/authorized_keys`.
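The search syntax in the demo (a bare term matches the name, `field:search` targets another field) is easy to parse. This sketch makes several assumptions: records are plain dictionaries, matching is case-insensitive substring matching, and several terms must all match. The field names and sample hosts are invented for illustration.

```python
DEFAULT_FIELD = "name"  # assumption: bare terms search the server name


def parse_query(query: str) -> list:
    """Split 'looking hostname:web' into [('name', 'looking'), ('hostname', 'web')]."""
    terms = []
    for token in query.split():
        # Split on the first colon only, so values such as IPv6 addresses survive.
        field, sep, value = token.partition(":")
        if sep and field and value:
            terms.append((field.lower(), value.lower()))
        else:
            terms.append((DEFAULT_FIELD, token.lower()))
    return terms


def search(records: list, query: str) -> list:
    """Return the records for which every term is a substring of its field."""
    terms = parse_query(query)
    return [r for r in records
            if all(value in str(r.get(field, "")).lower() for field, value in terms)]
```

For example, `search(hosts, "ip:192.0.2.20")` would return only the host with that address, while a bare `looking` matches any server whose name contains it.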

This system has many more features I have added to it over the years, such as integration with monitoring, backup and VPN services.

So, this is how I manage my clients; I am interested to know how others do this.

Note: Many details of the security measures used here are intentionally omitted for security reasons. The demo shown here does not expose any sensitive information, but for good measure the keys used were retired before making this post.