DISCLAIMER: Feel free to ignore this post; I'm leaving it up in case it's useful rather than deleting it. I just noticed you have an M.2 SATA drive. I've never used one, so I can't comment on passing through the entire controller. I had assumed all M.2 devices worked the way mine does, but I could be wrong, and the extra controller board on the M.2 NVMe is a wildcard I wasn't expecting.

Quick dump of how I'm doing full passthrough of a dedicated NVMe drive:

Output of `lspci -nnk` for the drive:

```
3e:00.0 Non-Volatile memory controller [0108]: Samsung Electronics Co Ltd NVMe SSD Controller SM961/PM961 [144d:a804]
	Subsystem: Samsung Electronics Co Ltd NVMe SSD Controller SM961/PM961 [144d:a801]
	Kernel driver in use: nvme
```
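If you need the vendor/device pair (the `144d a804` used in the scripts) for a different drive, it can also be read from sysfs instead of copied out of `lspci`. A minimal sketch, assuming the standard `/sys/bus/pci/devices/<address>/vendor` and `device` files; `pci_id_pair` and the `SYSFS_ROOT` override (only there to make it testable) are hypothetical names:

```bash
#!/bin/bash
# Print the "vendor device" pair for a PCI address, in the same
# space-separated form the scripts write to new_id (e.g. "144d a804").
# SYSFS_ROOT is a hypothetical override for testing; it defaults to /sys.
pci_id_pair() {
    local dev_dir="${SYSFS_ROOT:-/sys}/bus/pci/devices/$1"
    local vendor device
    # Fail (no output) if the address doesn't exist
    [[ -r "$dev_dir/vendor" && -r "$dev_dir/device" ]] || return 1
    vendor=$(<"$dev_dir/vendor")
    device=$(<"$dev_dir/device")
    # sysfs stores the IDs with a "0x" prefix (e.g. "0x144d"); strip it
    printf '%s %s\n' "${vendor#0x}" "${device#0x}"
}
```

For the drive above, `DEV_NVM="$(pci_id_pair 0000:3e:00.0)"` should give `144d a804`.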

`vfio_nvme.sh`: the VFIO kernel modules are loaded, but I chose to bind the drive at runtime. You can also do it at boot time with kernel parameters in GRUB, as per the guide.

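```bash
#!/bin/bash
# Run as root. Assumes the vfio-pci module is already loaded, and that
# nothing on the host is using the drive (no mounted filesystems).

PCI_NVM="0000:3e:00.0"
DEV_NVM="144d a804"

# Bind NVMe drive to VFIO
# Let vfio-pci accept this vendor/device ID
echo "$DEV_NVM" > /sys/bus/pci/drivers/vfio-pci/new_id
# Detach the drive from its current driver (nvme)
echo "$PCI_NVM" > "/sys/bus/pci/devices/$PCI_NVM/driver/unbind"
# Attach it to vfio-pci
echo "$PCI_NVM" > /sys/bus/pci/drivers/vfio-pci/bind
# Drop the ID again so vfio-pci doesn't claim other matching devices later
echo "$DEV_NVM" > /sys/bus/pci/drivers/vfio-pci/remove_id
```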
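For the boot-time route instead of the runtime script, a sketch of the GRUB side, assuming an Intel host (`intel_iommu=on`; AMD hosts typically have the IOMMU enabled by default) and that `vfio-pci` gets loaded before `nvme` (for example from the initramfs), otherwise `nvme` may claim the drive first. Unlike the script, `vfio-pci.ids` matches by ID, so any other drive with the same `144d:a804` ID would be claimed too.

```bash
# /etc/default/grub (sketch): append these to your existing options
# rather than replacing them. intel_iommu=on assumes an Intel host.
# Note vfio-pci.ids uses vendor:device with a colon, unlike the
# space-separated "144d a804" written to new_id in the runtime script.
GRUB_CMDLINE_LINUX_DEFAULT="intel_iommu=on vfio-pci.ids=144d:a804"
```

Regenerate the GRUB config afterwards (`update-grub` or `grub-mkconfig -o /boot/grub/grub.cfg`, depending on the distro) and reboot.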

`unvfio_nvme.sh`: undo it when done. It doesn't really matter functionally in our dedicated-drive scenarios, but I'm thorough to a fault.

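```bash
#!/bin/bash
# Run as root.

PCI_NVM="0000:3e:00.0"

# Unbind NVMe drive from VFIO: remove the device from the PCI bus, then
# rescan so the kernel re-probes it and the nvme driver picks it up again
echo 1 > "/sys/bus/pci/devices/$PCI_NVM/remove"
echo 1 > /sys/bus/pci/rescan
```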
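To confirm either script did what you expect, the driver currently bound to the drive can be read from its `driver` symlink in sysfs. A minimal sketch; `pci_driver` and the `SYSFS_ROOT` test override are hypothetical names:

```bash
#!/bin/bash
# Print the name of the kernel driver bound to a PCI device, or "none".
# SYSFS_ROOT is a hypothetical override for testing; it defaults to /sys.
pci_driver() {
    local link="${SYSFS_ROOT:-/sys}/bus/pci/devices/$1/driver"
    if [[ -L "$link" ]]; then
        # "driver" is a symlink ending in .../drivers/<name>; keep <name>
        local target
        target=$(readlink "$link")
        echo "${target##*/}"
    else
        echo "none"
    fi
}
```

`pci_driver 0000:3e:00.0` should print `vfio-pci` after `vfio_nvme.sh` and `nvme` after `unvfio_nvme.sh`; `lspci -nnk -s 3e:00.0` shows the same thing on its `Kernel driver in use:` line.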

The QEMU command-line argument is simple at this point:

```
-device vfio-pci,host=3e:00.0
```
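One thing worth checking before handing `host=3e:00.0` to QEMU is the drive's IOMMU group: VFIO assigns whole groups, so if the drive shares a group with other devices, those generally need to be bound to `vfio-pci` (or left without a driver) as well. A minimal sketch that lists the group members from sysfs; `iommu_group_members` and the `SYSFS_ROOT` override are hypothetical names:

```bash
#!/bin/bash
# List every PCI device sharing an IOMMU group with the given address.
# SYSFS_ROOT is a hypothetical override for testing; it defaults to /sys.
iommu_group_members() {
    local group="${SYSFS_ROOT:-/sys}/bus/pci/devices/$1/iommu_group"
    # No iommu_group link usually means the IOMMU is off or unsupported
    [[ -d "$group/devices" ]] || return 1
    local dev
    for dev in "$group"/devices/*; do
        # Each entry is named after a member's PCI address
        echo "${dev##*/}"
    done
}
```

If `iommu_group_members 0000:3e:00.0` prints only `0000:3e:00.0`, the drive is alone in its group. A non-zero exit with no output usually means the IOMMU isn't enabled.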

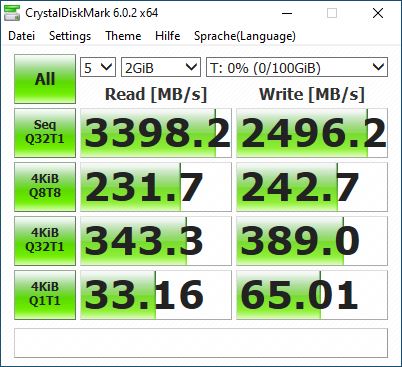

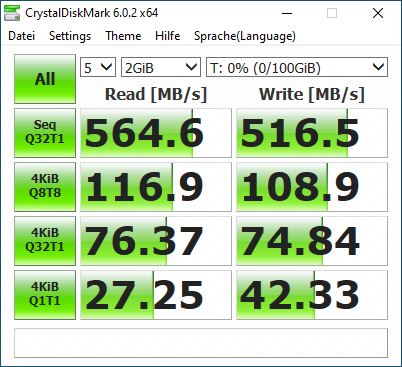

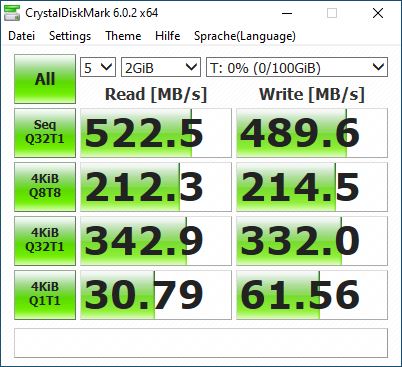

The above simplified my virtio block device handling, brought performance up to bare-metal levels, let the native Samsung utilities work on the drive, and fixed a problem where TRIM wasn't working on the virtio drive (which `rotation_rate=1` may or may not have resolved anyway), among other things.

Below is a no-guarantee dump from my archive of one of the less pretty, and maybe not the best, ways to do it. I have a dozen archive files and can't work out which generation each one is. I suspect fewer than four arguments per drive were actually needed, and that going through the virtio-scsi-pci driver wasn't necessary? Maybe I used a non-alias for the name. Posting it in case it helps as a list of useful arguments to research or as a starting point:

```
-object iothread,id=iothread0 \
-object iothread,id=iothread1 \
-object iothread,id=iothread2 \
-device virtio-scsi-pci,id=scsihw0,iothread=iothread0 \
-device virtio-scsi-pci,id=scsihw1,iothread=iothread1 \
-device virtio-scsi-pci,id=scsihw2,iothread=iothread2 \
-drive if=none,id=drive0,file=/dev/sdd3,format=raw,aio=native,discard=off,detect-zeroes=off,cache.writeback=on,cache.direct=on,cache.no-flush=off,index=0 \
-device scsi-hd,drive=drive0,bus=scsihw0.0,channel=0,scsi-id=0,lun=0,id=scsi0,bootindex=0 \
-drive if=none,id=drive1,file=/dev/disk/by-partuuid/b3414ed6-fbc0-4b49-82be-a0302c8dccd8,format=raw,aio=native,discard=on,detect-zeroes=unmap,cache.writeback=on,cache.direct=on,cache.no-flush=off,index=1 \
-device scsi-hd,drive=drive1,bus=scsihw1.0,channel=0,scsi-id=0,lun=0,id=scsi1,bootindex=1,rotation_rate=1 \
-drive if=none,id=drive2,file=/dev/mapper/lvm_raid0_hdd-winten_gamedrive,format=raw,aio=native,discard=off,detect-zeroes=off,cache.writeback=on,cache.direct=on,cache.no-flush=off,index=2 \
-device scsi-hd,drive=drive2,bus=scsihw2.0,channel=0,scsi-id=0,lun=0,id=scsi2,bootindex=2 \
```

The key points: an iothread per drive, mind your caching configuration for performance, and (I think) `detect-zeroes`, `discard`, `rotation_rate` and others affect whether TRIM is visible to the guest. This could all be out of date; I'm posting it just in case.
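The per-drive pattern in the dump (one iothread, one `virtio-scsi-pci` controller, one `-drive` and one `scsi-hd` each) can also be generated rather than written out by hand. A rough bash sketch that reuses drive1's options (the one with `discard=on`, `detect-zeroes=unmap` and `rotation_rate=1`) for every drive it adds; `add_scsi_drive` and `QEMU_ARGS` are hypothetical names, and drive0/drive2 in the dump used different discard settings, so adjust per drive:

```bash
#!/bin/bash
# Hypothetical helper: append one iothread + virtio-scsi controller +
# drive + scsi-hd set to QEMU_ARGS, using drive1's options from the dump.
QEMU_ARGS=()

add_scsi_drive() {
    local n="$1" file="$2"
    QEMU_ARGS+=(
        -object "iothread,id=iothread$n"
        -device "virtio-scsi-pci,id=scsihw$n,iothread=iothread$n"
        -drive "if=none,id=drive$n,file=$file,format=raw,aio=native,discard=on,detect-zeroes=unmap,cache.writeback=on,cache.direct=on,cache.no-flush=off,index=$n"
        -device "scsi-hd,drive=drive$n,bus=scsihw$n.0,channel=0,scsi-id=0,lun=0,id=scsi$n,bootindex=$n,rotation_rate=1"
    )
}
```

Call it once per drive, e.g. `add_scsi_drive 1 /dev/disk/by-partuuid/b3414ed6-fbc0-4b49-82be-a0302c8dccd8`, then pass `"${QEMU_ARGS[@]}"` to `qemu-system-x86_64` alongside your other options. Quoting the array expansion keeps each option string intact even if a path contains spaces.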

Let me know if I can assist. Direct passthrough is likely best for you.