See how I got you to open it by NOT having it in the Title…Just kidding.

BLUF: What's my best option for an enterprise-level (or similar) hypervisor on this machine, given its components and my goals? I'd like to keep the RAID card to see if I can integrate alerts, emails, etc. to simulate a help/service center. The machine will be a test bed for Linux and (MAYBE) Windows, a Plex server (I need this one set up ASAP, as my wife is going crazy missing her shows), Pi-hole?, pfSense?, any other projects I can find to learn from, and whatever interface OS works best in this scenario. I have tried to install Proxmox…20 different ways…yeah. Should I keep trying? It doesn’t have to be easy to use by any means, given my goal of learning. Any help appreciated.

To business! I have assembled a new machine that I hope to use as a test bed for learning Linux (some experience, a 2 out of 10) and for learning to navigate enterprise-level environments. I would also like to learn more about networking, particularly routing and security. My goal is to maybe find a job as a remote IT tech. The biggest reason for this is my disability: I am a veteran and I am disabled ('nuff said… period).

I stumbled onto your website and was impressed with the content as well as the knowledge of your community, so I joined up.

I had seen this ASRock Rack X470D4U Micro ATX AM4 socket motherboard and had to give it a shot. I am intrigued by the tech involved and the challenge. I had already managed to master the LSI 9260-8i MegaRAID, so I wanted to keep going. My previous MSI B550M Mortar did not have an ECC option. I already have cloud and redundant local storage, so ECC isn't needed, but I wanted to try it. (A lot more on that later, another post?)

Here's what I have:

cpu- Ryzen 7 2700

cooler- modified MSI Frozr L (modified with a Noctua mount; post later?)

ram- Kingston KSM26ED8/16ME Server Premier unbuffered ECC 2666

mobo- ASRock Rack X470D4U

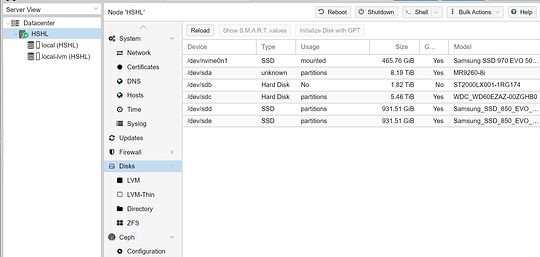

RAID- LSI 9260-8i with 4x 3TB IronWolf drives and a Samsung 883 DCT Series SSD

GPU- MSI GTX 1650 Super Gaming X (will I need code to unlock the transcode limit?)

Drives- Samsung 860 Evo 1TB M.2 SATA, the 2.5in SATA version of the same drive (also 1TB), Seagate FireCuda 2.5in 2TB SSHD, and WD Blue 6TB 3.5in.

All housed in a Fractal Node 804 Case with some Noctua Fans.

Thank you for reading all of this. I know it's a lot. Here’s a star.