It seems like most of the thread has been derailed, so I'm here to derail it further, for the sake of knowledge. I'm going on a rant, and I would like to hear opinions.

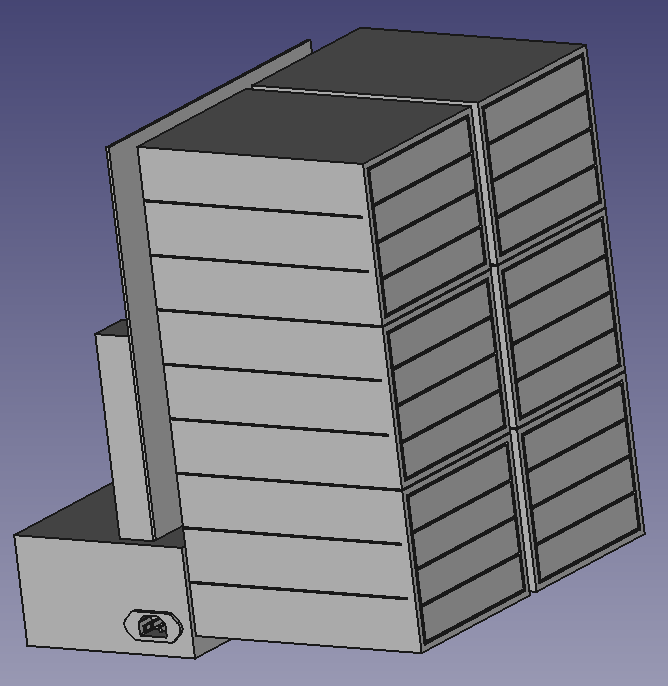

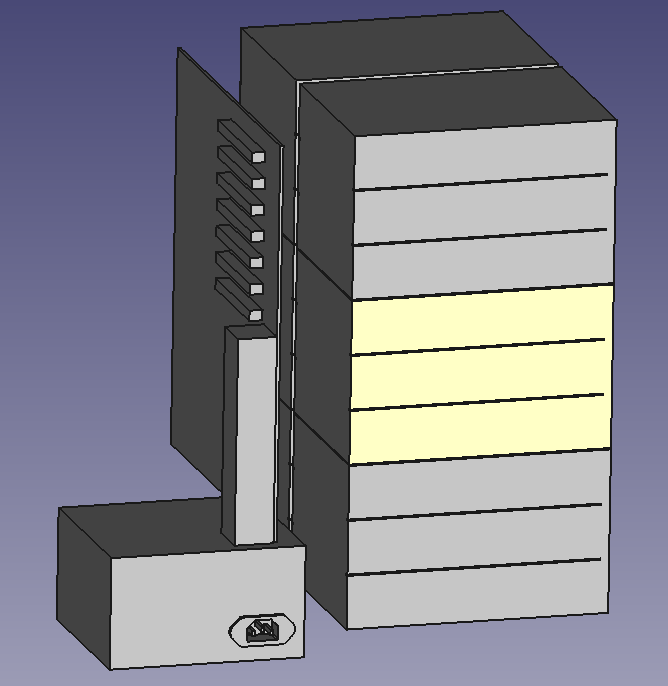

I used to have an old Antec Sonata II filled to the brim with 3.5" drives (I posted it on the forum). It had 11 HDDs and a SATA SSD, and it ran on an old power-hog Lynnfield Xeon X3450. It consumed tons of power (it actually doubled my power bill when left on 24/7). All the drives were 2TB: 10 of them formed a RAID-Z2 pool and the 11th was a hot spare. That gave me a 16TB pool, at a power consumption that was beyond ridiculous for the capacity. I can do better with a RAID-Z of 3x 10TB drives if I want to.
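
A quick back-of-the-envelope check of those capacity numbers, as a minimal sketch: the usable space of a single RAID-Z vdev is roughly (disks - parity) x disk size. The function name is just for illustration, and the formula deliberately ignores ZFS metadata, padding and TB-vs-TiB differences, so real pools come out somewhat smaller.

```python
def raidz_usable_tb(disks: int, disk_tb: float, parity: int) -> float:
    """Rough usable capacity of one RAID-Z vdev: (disks - parity) * disk size.

    Ignores ZFS metadata, padding, slop space and TB-vs-TiB differences.
    """
    if disks <= parity:
        raise ValueError("need more disks than parity devices")
    return (disks - parity) * disk_tb


# Old Sonata: 10x 2TB in RAID-Z2 (the hot spare adds no capacity).
old_pool = raidz_usable_tb(disks=10, disk_tb=2, parity=2)
# The alternative mentioned above: 3x 10TB in RAID-Z (single parity).
new_pool = raidz_usable_tb(disks=3, disk_tb=10, parity=1)
print(old_pool, new_pool)  # 16 20
```

So 11 spinning drives bought 16TB, while 3 bigger drives would give about 20TB.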

I moved from power hogs to something that can actually be called portable and that uses peanuts in comparison. I can power everything from my Bluetti EB70S (tested: the max output across its 4 AC outlets is 800W). That includes the UPS everything is plugged into. With my Threadripper off, my power draw at idle / normal operation is so low that the UPS doesn't even report it; it's ridiculous. With the TR on, it's 200W out on the Bluetti and about 188W on the UPS (which is plugged into the portable power station). I don't recall exactly what the power station reported, but I think the UPS itself drew something like 10W with the things I have on it.
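
The two readings above already give the UPS overhead (200W - 188W = 12W, in line with the ~10W I remember). A minimal sketch of that arithmetic plus a rough runtime estimate follows. The `battery_wh` value and the 85% inverter efficiency are placeholders I picked for illustration, not measured or rated figures; plug in your own power station's numbers.

```python
def ups_overhead_w(source_out_w: float, ups_out_w: float) -> float:
    """Power lost in the UPS: what the power station delivers minus what the UPS reports."""
    return source_out_w - ups_out_w


def runtime_hours(battery_wh: float, load_w: float, inverter_eff: float = 0.85) -> float:
    """Rough runtime of an AC load on a battery power station.

    inverter_eff is an ASSUMED inverter efficiency, not a measured value.
    """
    return battery_wh * inverter_eff / load_w


# Readings from the post, with the Threadripper on.
print(ups_overhead_w(200, 188))  # 12
# battery_wh=700 is a placeholder; use your power station's rated capacity.
print(round(runtime_hours(battery_wh=700, load_w=200), 2))
```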

My setup? A RockPro64 with 2x 10TB drives in a mirror and 2x 2TB MX500s, also in a mirror. I moved everything off of the NAS, but even before that, I hated the concept of the “forbidden router” (sorry Wendell). I’m not saying it doesn’t have its purpose / usage scenarios, but it’s definitely something I don’t want to have. I run my containers on an Odroid N2+, and I have an Odroid HC4 waiting to be converted into a backup box (with some rubber grommets around the HDDs once I get new ones). The NAS is only a NAS: its only duty is to serve NFS shares and iSCSI targets. A NAS shouldn’t be running VMs or containers, which is why I can get away with a meager ARM CPU for mine.

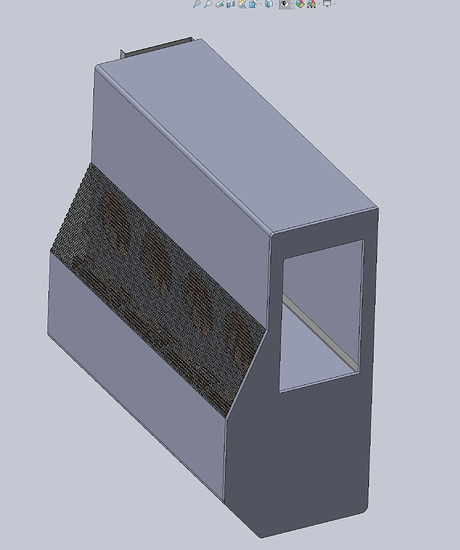

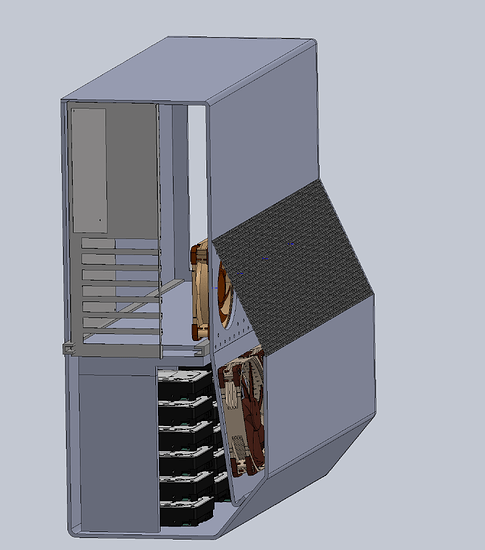

I was initially hoping to modernize my Antec Sonata II build, so I went with the TR and an Antec P101 Silent, which can hold 8 HDDs, 2 SSDs and a 5.25" drive. You can mod it to stuff in even more HDDs (at least 4 more) if you avoid long GPUs. If you give up the bottom expansion slots altogether (e.g. by using a microATX board), you can probably fit 2 to 4 more drives, for a total of 14 - 16x 3.5" drives. With an SFX PSU, you can put 2 more drives at the bottom, but with that many drives, you’d be hard pressed to find a decent SFX PSU that won’t die young (there are 800W SFX PSUs out there, but still…).
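
Part of why a big drive count is hard on a small PSU is the spin-up surge, when every drive pulls its peak current at once. A minimal sketch of that estimate is below. The per-drive currents (2A on 12V, 0.7A on 5V) and the 150W for the rest of the system are assumed round numbers for illustration only; check your drive datasheets and real system draw. Controllers that support staggered spin-up can lower this peak considerably.

```python
def spinup_peak_w(drives: int, spinup_12v_amps: float = 2.0,
                  spinup_5v_amps: float = 0.7,
                  rest_of_system_w: float = 150.0) -> float:
    """Rough worst-case draw when every 3.5" drive spins up at the same time.

    All default currents and the rest-of-system figure are ASSUMED values,
    not datasheet numbers for any specific drive or build.
    """
    per_drive_w = 12 * spinup_12v_amps + 5 * spinup_5v_amps
    return drives * per_drive_w + rest_of_system_w


# Drive counts discussed above for the modded P101.
for n in (8, 14, 16, 18):
    print(n, "drives ->", round(spinup_peak_w(n)), "W peak")
```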

But I realized I really don’t want the heat from the TR constantly in the room (the Sonata was already horrible in the summer; I had to shut it down every now and then). The build is silent, but it has no non-M.2 drives anymore (it used to have the 4 that I moved to the official RockPro64 case). But even before the heat, the idea was to save power: I didn’t want such a high power bill anymore. I built the TR with the intention of powering it down when not needed, which is what I’m doing now. And that was a really good call; it saved my behind, because every house I lived in here in the US had bad electrical installations. The worst place I had in Europe was an apartment with everything on one fuse (when the fuse box had 3 fuses), but it was still enough to power the old Sonata and 4 or 5 more computers, a fridge and a washing machine all at once, without burning the house down. Here, the fuse tripped just from my PC running for a while (OK, I’m exaggerating, it only happened once), but a room heater would always trip it within a few minutes.
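
The difference between the two places mostly comes down to how many watts a single circuit can carry. A minimal sketch of that budget, assuming the common rule of thumb of loading a breaker to at most 80% for continuous loads; the 120V/15A and 230V/16A circuits and the example loads are illustrative assumptions, not measurements from my homes.

```python
from typing import Sequence


def circuit_headroom_w(volts: float, breaker_amps: float, loads_w: Sequence[float],
                       continuous_factor: float = 0.8) -> float:
    """Watts left on one circuit after the given loads.

    continuous_factor models the ASSUMED 80% rule of thumb for continuous
    loads. A negative result means the breaker will likely trip eventually.
    """
    budget = volts * breaker_amps * continuous_factor
    return budget - sum(loads_w)


# Hypothetical loads: a 1500W room heater plus a 300W PC on the same circuit.
loads = [1500, 300]
print(circuit_headroom_w(120, 15, loads))  # a common US branch circuit
print(circuit_headroom_w(230, 16, loads))  # a common European circuit
```

With these assumed numbers, the US-style circuit comes out negative (over budget), while the European one still has well over 1kW of headroom.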

The Sonata was a NAS and hypervisor combo. Generally, if I were designing a datacenter, I would split them into dedicated NAS hardware (lower power) and a hypervisor, and in the same spirit, that’s what I did with low-powered ARM platforms. And while it wasn’t me who had the brilliant (sarcasm) idea of using HP ProLiant MicroServers as NASes for a hypervisor infrastructure at my old workplace, those low-powered Celerons with 8 to 12GB of RAM served a bunch of VMs and weren’t even sweating. That was impressive for a RAID-10 setup: with 20 to 40 VMs each, the CPUs were mostly idling and the disks were not overloaded at all.

I know not everyone can live with low-powered ARM stuff (even I got fed up with it on my desktop after 3+ years or so). But I’m here to ask questions, just to put them out in the open.

The rant above leads to the following:

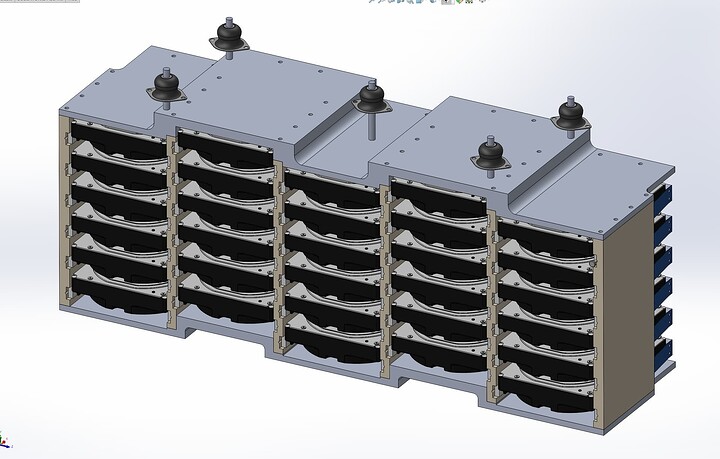

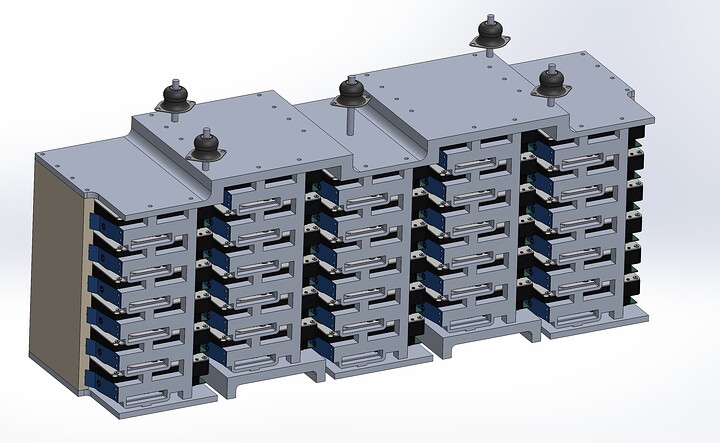

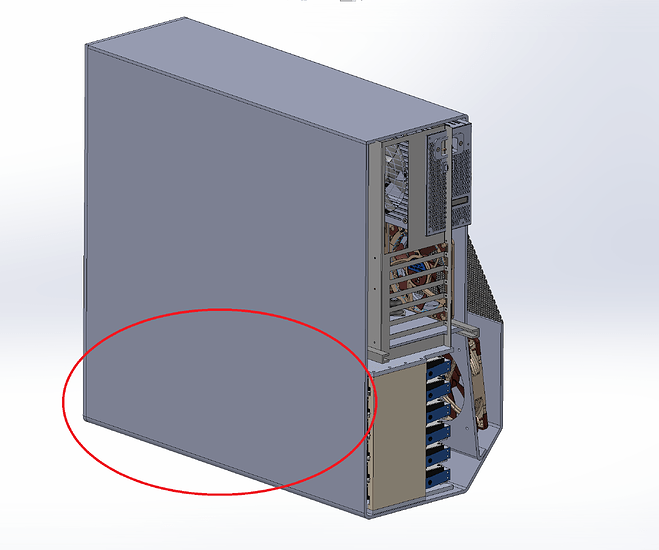

- Why do you need a beefy disk shelf?

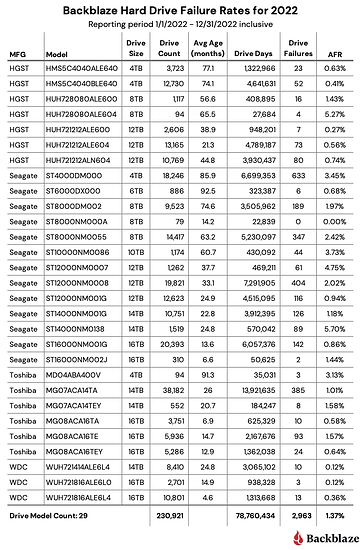

- Instead of buying a bunch of cheaper drives, why not go for fewer, more expensive, higher-capacity ones?

- Is your workload really that big? Are you really going to make use of 24 drives, especially in a homelab environment? (I assume that is the case, given the desktop form factor and the quiet-operation requirement. But the heat is still going to be there: even at 5W per drive, we are looking at 120W for the drives alone.)

- If it’s not a homelab, wouldn’t physical security and peace of mind (in terms of UPS, power generator and AC cooling) be better provided by a co-location service? A cheap 24+ drive shelf and another 2U server would fit the bill beautifully. Do you need it to be on a local network for some reason? If you don’t have a data room already built, then why not build one?

- Would archiving be a better deal instead, saving you money on drives, hardware and running costs? You may not need all your data available at all times, unless you are planning to run an on-demand service for many users (at which point you should at least be co-locating, if not building your own data room). Taking infrequently accessed data offline is a very viable strategy for saving money. Tape libraries with robotic arms can even fetch the tapes for you, with the right software, if the wait is not too much to deal with. For that matter, even cloud archiving can be worth it (just use restic to encrypt the data going to it).

- Instead of 1 beefy server running all those drives, would more lower-powered hardware and a software solution like Ceph be a better option?
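
To put numbers on the "fewer, bigger drives" and "120W for the drives alone" questions, here is a minimal sketch. It reuses the 5W-per-drive figure from above (real drives vary with model and load), treats the pool as one RAID-Z-style vdev with a given number of parity drives (a simplification), and uses a hypothetical 40TB usable target. Multiply the kWh by your own electricity rate to get a yearly cost.

```python
import math


def drives_needed(usable_tb: float, drive_tb: float, parity: int) -> int:
    """Drives for a target usable capacity in one RAID-Z-style vdev (simplified)."""
    return math.ceil(usable_tb / drive_tb) + parity


def yearly_kwh(drives: int, watts_per_drive: float = 5.0) -> float:
    """Energy used by the drives alone if they run 24/7 for a year."""
    return drives * watts_per_drive * 24 * 365 / 1000


# The 24-drive case from the question: 24 * 5W = 120W, around the clock.
print(yearly_kwh(24))  # 1051.2

# Hypothetical target: 40TB usable with double parity.
for size_tb in (2, 10):
    n = drives_needed(40, size_tb, parity=2)
    print(f"{size_tb}TB drives: {n} drives, {yearly_kwh(n):.0f} kWh/year")
```

With these assumptions, the same 40TB takes 22 small drives (about 964 kWh/year) versus 6 large ones (about 263 kWh/year), before even counting the extra heat, bays and controller ports.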

Anyone is free to comment on my setup(s), answer the questions (although they are personal questions that you should be asking yourself, I’m just pointing them out) or ask more questions.