Use at least raid6 if you need to use hardware raid.

So in summary, considering my situation…

- Ext4

- XFS


Ext4 is maybe slightly more awesome for Docker. But I've used XFS in this exact scenario and it's great.

The volume size at 36 TB is a bit large. Make sure the underlying hardware is at least RAID6 with at least one hot spare, and that it is robust and very reliable.

ZFS would work here, but the only way to do ZFS plus Docker IMHO is with an ext4 volume inside ZFS, which is kinda lulz.

Is there any particular reason not to use Docker’s ZFS driver?

I've used both side by side in prod on systems with about a hundred containers and… the Docker ZFS driver does weird, weird stuff sometimes. Stopping and starting containers gets weirdly laggy until the system is rebooted, and there are unexplained I/O delays I've run into.

Going through the pita of migrating the storage from ZFS to ext4 "inside ZFS" (an ext4 zvol) solves most of the weird, highly variable I/O behavior. If you'd never used ext4 directly on fast bare metal, you'd probably never know something wasn't exactly right. I am not 100% sure it's the native ZFS driver, but I have my suspicions.
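For anyone wondering what "ext4 inside ZFS" looks like in practice, here is a rough sketch, not a tested recipe. The pool name `tank`, the volume name `docker` and the 200G size are made-up placeholders, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: back /var/lib/docker with an ext4 filesystem on a ZFS zvol.
# POOL, VOL_NAME and VOL_SIZE are hypothetical placeholders; adjust them.
POOL="tank"
VOL_NAME="docker"
VOL_SIZE="200G"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = really run them

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

setup_docker_zvol() {
    dev="/dev/zvol/$POOL/$VOL_NAME"
    run zfs create -V "$VOL_SIZE" "$POOL/$VOL_NAME"   # fixed-size block device (zvol)
    run mkfs.ext4 "$dev"                              # plain ext4 on top of the zvol
    run mkdir -p /var/lib/docker
    run mount "$dev" /var/lib/docker                  # do this while Docker is stopped
}

setup_docker_zvol
```

Docker then sees an ordinary ext4 filesystem and can use its usual overlay2 driver, while the zvol's blocks still live in the pool and are checksummed by ZFS like any other dataset.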

Thanks a ton everyone, for sharing your experience and pointers to good documentation.

I’ve made my decision. XFS it is.

And thanks Wendell for bringing even more targeted context to the discussion.

Also, noted on the RAID6 recommendation. I think two drives were used for parity but I could be wrong.

I’ll install Dell’s PERCCLI tool to check.

Are you running a h310 or h710? The answer to this will dictate if raid 6 is even an option for you.

Also by default any Perc virtual disks will automatically run patrol reads weekly so make sure that they aren’t being run at an inconvenient time. I think the default time is 0000 local time on Saturdays.

It’s a H710 Mini and from iDRAC I only see a patrol read rate set to 30%. Not a schedule. Maybe I’m looking in the wrong place.

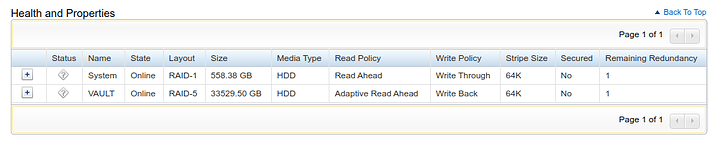

Also, I've confirmed it's RAID5, not 6.

Abandoning the perccli idea. I don’t like the sound of converting an .rpm to a .deb for a package listed as supported on Ubuntu 18.04, to run it on 22.04. That’s crazy. I’m surprised Dell hasn’t kept this tool updated or released native debs.

(I’d be more comfortable installing a .deb packaged for 18.04 on 22.04, than converting an .rpm.)

Oh good, you have the option of migrating to RAID 6 then. FWIW I'd recommend RAID 6: it allows the underlying storage system to detect and correct any kind of silent corruption (within a single disk), whereas RAID 5 mostly only detects it. Both of these cases assume a filesystem without built-in checksumming is used.

Actually now that I think about it, I’m not sure if I’d bother to have patrol reads run on SSDs, they don’t benefit from it nearly as much as hdds do from a data integrity perspective.

You probably should have consistency checks scheduled to run on the drives though; I don't think Dell has those automatically scheduled by default.

Yes, that's pretty much what happens to me. Sometimes it will eat gobs of memory too. I've found on occasion that the below works, but I hate it.

echo 3 > /proc/sys/vm/drop_caches

That's a dirty hack. In my opinion it shouldn't be needed or recommended, and even then the problem will come back. I personally use Docker and ZFS and sometimes stuff gets strange. I've not had the energy, or cared enough, to fix it. I'll just reboot the machine occasionally.

Not that I want to trade one hack for another here, and this probably belongs in another thread or the lounge rather than here. But how does one do this? Do you still get protected by ZFS when running an ext4 zvol inside the array?

XFS does not journal data, only metadata, so you'll need battery-backed cache for your hardware RAID as well as a UPS if you care even somewhat about data integrity.

ext4 will journal data (the `data=journal` mount option), but at the cost of lower performance.
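For reference, the ext4 option is spelled `data=journal` (the default mode is `data=ordered`, which journals metadata only). A minimal sketch of what the fstab entry could look like follows; the device `/dev/sdX1` and the mount point are placeholders, not anything from the OP's box.

```shell
#!/bin/sh
# Sketch: build an /etc/fstab entry that mounts ext4 with full data journaling.
# The device and mount point used below are hypothetical placeholders.
fstab_entry() {
    dev="$1"
    mnt="$2"
    # data=journal: file data is written through the journal, not just metadata.
    printf '%s %s ext4 defaults,data=journal 0 2\n' "$dev" "$mnt"
}

# Prints the line; review it before appending it to /etc/fstab by hand.
fstab_entry /dev/sdX1 /var/lib/docker
```

Since every data block gets written twice in this mode, it is worth benchmarking against the default before committing to it.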

I’ve lost data on every XFS volume on RAID I’ve had so good luck!

Did your XFS hardware RAID instances have a cache battery? I would think, perhaps naively, that as long as you had a BBU, xfs_repair could deal with the fallout of a hard shutdown (no UPS) of the system.

Have you tried using the newer versions of PERCCLI that have native .debs (≥ 7.1020.0.0, A08)? Even though they don't explicitly mention R720 compatibility, Dell is usually pretty generous with backwards compatibility; I would be surprised if they didn't work.

I believe you’d actually want to use OMSA for having perc consistency checks scheduled. The patrol reads should be able to be disabled from the web interface.
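If a newer PERCCLI does install, a few read-only queries should be enough to confirm the RAID level and see how patrol reads and consistency checks are set up. This is a sketch under assumptions: the binary name `perccli64` and controller index `/c0` may differ on your system, and the subcommands follow the usual StorCLI-style syntax, so check them against `perccli64 help` first. It only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: read-only PERC queries via PERCCLI.
# PERCCLI and CTRL are assumptions; adjust them for your install.
PERCCLI="perccli64"
CTRL="/c0"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = really run them

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

perc_report() {
    run "$PERCCLI" "$CTRL/vall" show        # virtual disks, including RAID level
    run "$PERCCLI" "$CTRL" show patrolread  # patrol read mode and schedule
    run "$PERCCLI" "$CTRL" show cc          # consistency check mode and schedule
}

perc_report
```

None of these change controller settings, so they are safe to try before deciding whether to do the scheduling through OMSA instead.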

I just use FUSE so I can write to ZFS native in my VM’s and Containers.

This is maybe something the L1 community can get their hands around, document, and provide a test suite for? I was thinking that going via FUSE also had some downsides, but at this exact moment I am struggling to recall what they were.

Wonder if it would make sense to come up with some complex-ish Docker scenarios, build a bunch of custom stuff, and see how it goes. Much of the weirdness I've noticed only seems to happen over time and… no one wants to pay me to dig into the black hole of what this weird edge case might be lol

Battery backups fail, and nobody monitors their health, especially because Dell makes it complicated and unreliable. I have seen multiple (in the tens) instances on legacy servers (Dell and HP mainly, some Oracle) of batteries failing, sometimes silently, with the battery error popping up only after the unwanted reboot, and XFS filesystems being somewhat trashed: sometimes only a couple of files, sometimes the whole /etc or /usr gone…

Go with ext4, or put in the downtime, break the raid and go with zfs… not choosing the best option for something lasting years because of one hour of downtime looks very suboptimal to me…

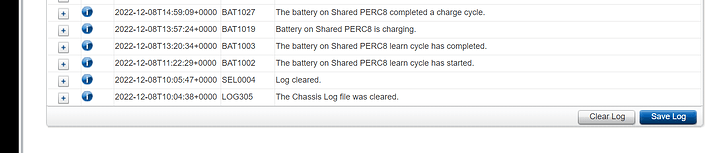

To expand on this, at least for Dell: if your RAID card was made within the last 10 years, it runs a lithium-ion battery learn cycle at every startup to test battery capacity by discharging it and measuring its response. In contrast, once the system is running normally, the battery's status is only monitored based on its voltage, so if you don't restart your server every couple of years it's likely that the management controller doesn't know the capacity of the PERC's battery accurately. Newer RAID cards have moved on from lithium-ion batteries to supercapacitors to sidestep this.

to OP: I wouldn’t be too worried about the raid card’s battery failing silently, if the management controller thinks even the cmos battery is weak it will be upset and change all the blue/green lights to amber, just like a dimm or power supply failure.

Thanks! “Suboptimal” is just the kindest euphemism. Much appreciated.

But seriously though… I hear you. Though Wendell’s account of Docker on ZFS gives me pause.

That’s good to know.