I was wondering if any of you audiophiles know what, if anything, can be done to lessen the vocals in music. Can certain EQ settings do this? Or some other settings in MusicBee? Please, do tell. I’m loving what I’m listening to, but the vocals are a typical metal case of shitting the bed.

@khaudio can you weigh in here?

It really depends on your ears and your equipment. But in general, you want the EQ curve to dip down in the midrange, roughly 1,000 to 5,000 Hz, give or take a lot, depending. You have to play with the sliders until it sounds good. It’s not like you’re going to break anything. Just save a preset before you start and as you go, so you can quickly switch between EQs for comparison.

For example:

I bought some new speakers, and an audiophile guy used a feedback loop and special measurement microphones to work out the perfect Equalizer APO profile so the speakers’ output would be flat. I didn’t like his perfect audiophile EQ, so I twiddled the sliders until it sounded right to me.
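
If you want to see what that midrange dip actually does, here’s a minimal Python sketch of a single peaking (“bell”) EQ band, using the standard RBJ Audio EQ Cookbook biquad formulas. The -4 dB / 2.5 kHz / Q 0.7 settings and the function names are hypothetical picks for illustration, not anything MusicBee or Equalizer APO exposes under those names.

```python
import cmath
import math


def peaking_eq(fs, f0, gain_db, q):
    """Biquad coefficients for one peaking EQ band (RBJ Audio EQ Cookbook form).

    A negative gain_db gives a bell-shaped cut centred on f0.
    Returns (b, a), normalised so that a[0] == 1.
    """
    a_lin = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    b = [1 + alpha * a_lin, -2 * cos_w0, 1 - alpha * a_lin]
    a = [1 + alpha / a_lin, -2 * cos_w0, 1 - alpha / a_lin]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def response_db(b, a, fs, f):
    """Magnitude response of the biquad at frequency f, in dB."""
    z = cmath.exp(-1j * 2 * math.pi * f / fs)  # z^-1 evaluated on the unit circle
    h = (b[0] + b[1] * z + b[2] * z * z) / (a[0] + a[1] * z + a[2] * z * z)
    return 20 * math.log10(abs(h))


def apply_biquad(b, a, samples):
    """Filter a list of float samples (direct form I, a[0] assumed to be 1)."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


if __name__ == "__main__":
    # Hypothetical settings: a gentle -4 dB dip centred at 2.5 kHz with a wide Q
    b, a = peaking_eq(fs=44100, f0=2500, gain_db=-4.0, q=0.7)
    for f in (100, 1000, 2500, 5000, 10000):
        print(f"{f:>6} Hz: {response_db(b, a, 44100, f):+.2f} dB")
```

At the centre frequency the cut equals the slider value exactly, and it fades back toward 0 dB away from it. That’s why a wide midrange dip takes the edge off vocals but also off everything else living in that range.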

Human vocals have lots of harmonics, and it’s really the formants (the resonances of the vocal tract that emphasize certain bands of those harmonics) that make a voice stand out, rather than any fixed frequencies. It’s not really possible to EQ out a voice with a static EQ. Doing it would require some pretty heavy processing and tedious work. I’ll attach a screen grab in a bit that illustrates this.
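
To put rough numbers on the harmonics point: a pitched sound puts energy at its fundamental and at whole-number multiples of it. This sketch assumes a hypothetical sung A3 (220 Hz) and just lists where its harmonics land; it ignores how strong each one is, which in a real voice is shaped by the formants.

```python
def harmonics(f0, ceiling_hz):
    """Harmonic series of a pitched sound: f0, 2*f0, 3*f0, ... up to ceiling_hz."""
    return [n * f0 for n in range(1, int(ceiling_hz // f0) + 1)]


if __name__ == "__main__":
    # Hypothetical sung note: A3 = 220 Hz
    h = harmonics(220, 8000)
    mid = [f for f in h if 1000 <= f <= 5000]
    print(f"{len(h)} harmonics below 8 kHz, {len(mid)} of them between 1 and 5 kHz")
    print(mid)
```

For 220 Hz that’s 36 harmonics below 8 kHz, 18 of them between 1 and 5 kHz, so even one held note is spread right across the range a midrange cut would also take out of the guitars.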

For playback systems, I typically only use EQ to correct for the room, so it’s mostly subtractive EQ with fairly narrow bands, to tame room nodes or, in a live setup with a mic, to prevent feedback.
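
For the room-correction case, the nodes being tamed are room modes. The simplest (axial) ones sit where a whole number of half-wavelengths fits between two parallel walls, f_n = n · c / (2L). This sketch assumes c ≈ 343 m/s and a hypothetical 5.0 × 4.0 × 2.5 m room; it ignores tangential and oblique modes, so treat it as a rough guide to where narrow cuts might be needed, not a substitute for measuring.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C (assumed)


def axial_modes(length_m, count=5, c=SPEED_OF_SOUND):
    """First `count` axial mode frequencies (Hz) between two parallel walls."""
    return [n * c / (2 * length_m) for n in range(1, count + 1)]


if __name__ == "__main__":
    # Hypothetical room dimensions in metres
    for name, dim in (("length", 5.0), ("width", 4.0), ("height", 2.5)):
        modes = ", ".join(f"{f:.0f}" for f in axial_modes(dim, count=4))
        print(f"{name:>6} ({dim} m): {modes} Hz")
```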

You have to be careful with additive EQ, because an untrained ear will hear more bass and louder highs and think, “awesome!”, only to later boost the mids because they’re now too low. If you flatten the curve and then turn it all up, you get the entire range back. The flat curve sounds dull in comparison only because more volume always sounds “better,” initially. That said, there’s nothing wrong with using the EQ to make it “more awesome,” but if you’re making extreme adjustments or changing it often, that’s a sign that something is amiss. I mostly give that little speech because it’s like a chef watching people put salt and ketchup on their $100 steak, ya know? I don’t like it when my content gets screwed up by some software EQ. Anyway, to each their own.
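
The “more volume sounds better” trap is easier to see in numbers: +6 dB on a slider is roughly double the amplitude in that band, so a boosted preset can win an A/B comparison just by being louder. One common habit (a rule of thumb, not something from this thread) is to pull an overall preamp/gain control down by the largest boost before comparing. The function names and the example curve below are hypothetical.

```python
def db_to_amplitude(db):
    """Convert a gain in dB to a linear amplitude ratio."""
    return 10 ** (db / 20)


def preamp_for(band_gains_db):
    """Rough preamp offset that cancels the largest boost in an EQ curve.

    Rule of thumb only: overlapping bands can sum to slightly more than
    the largest single slider, and cuts need no compensation.
    """
    peak = max(band_gains_db)
    return -peak if peak > 0 else 0.0


if __name__ == "__main__":
    curve = [6.0, 3.0, 0.0, -2.0, 0.0, 4.0]  # hypothetical "smiley" EQ, dB per band
    print(f"+6 dB is x{db_to_amplitude(6.0):.2f} amplitude")
    print(f"suggested preamp: {preamp_for(curve):+.1f} dB")
```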

Back on topic, the short answer is no.

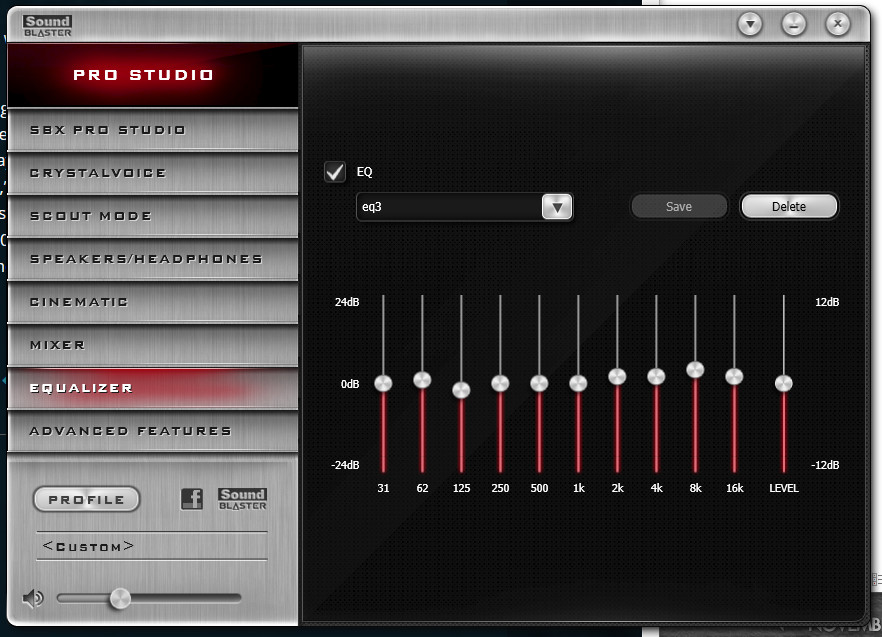

This is what my EQ looks like currently.

As you can see I haven’t moved the sliders too much.

I have a Logitech G5.1 setup with Micca MB42X as the stereo pair.

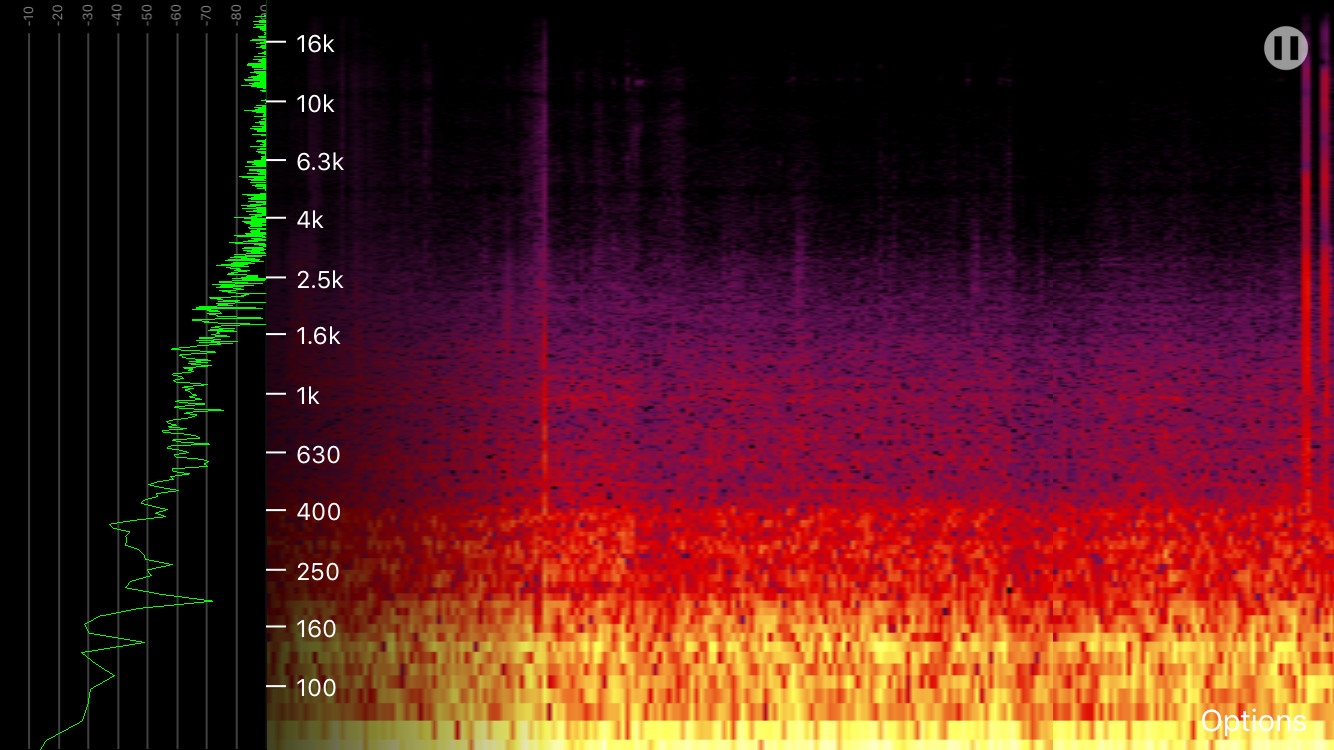

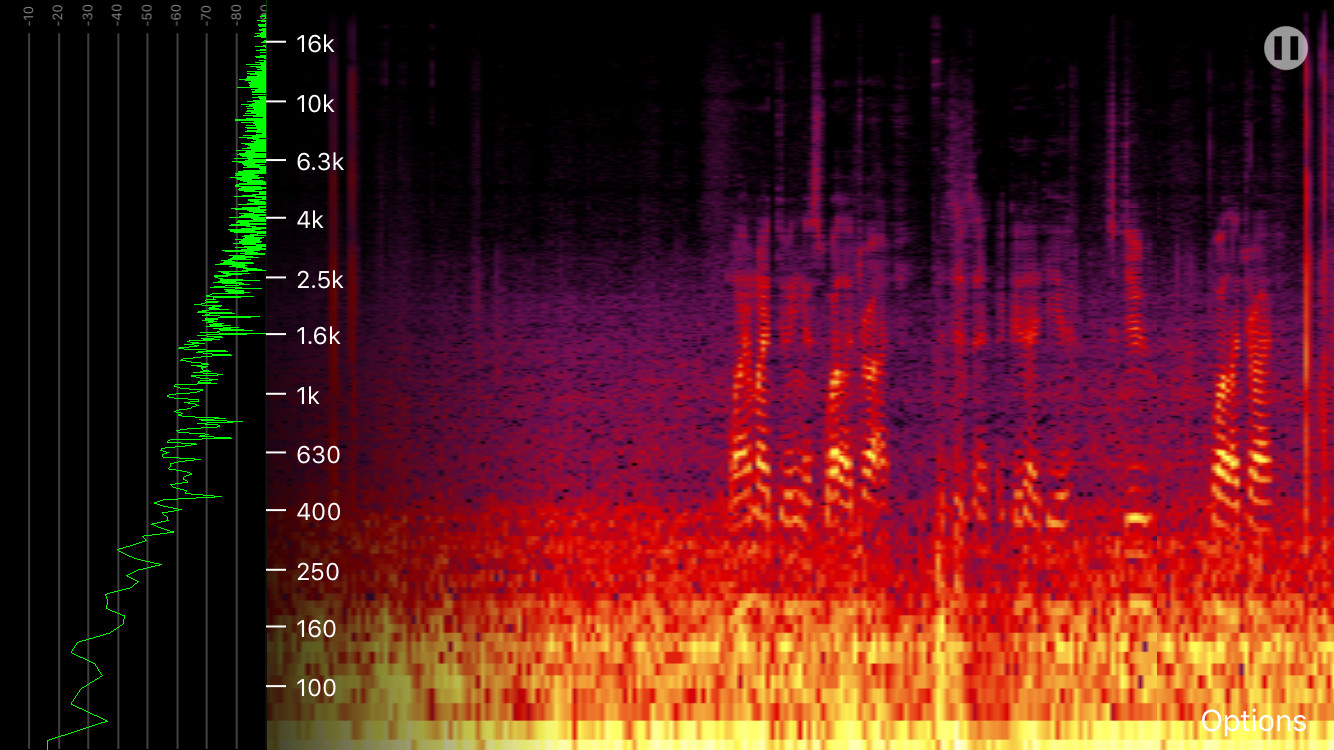

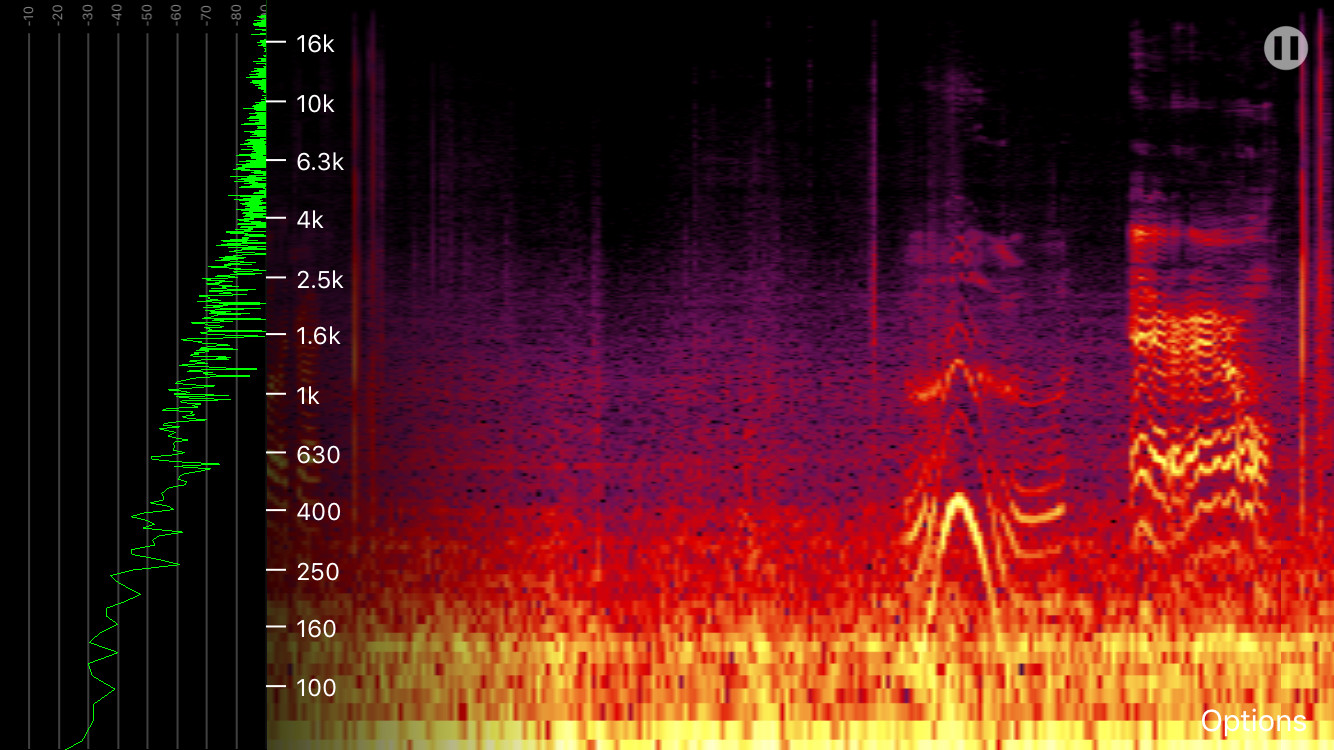

Here are the aforementioned screens, just taken in a spectrogram app with my phone’s internal mic.

Noise (driving in car):

Speech:

Singing:

Even ignoring the amplitude difference, there are far too many harmonics for EQ to notch vox out, not to mention the pitch changes.
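
And the pitch-change part: even if you could notch out every harmonic of one note, the next note moves them all. This hypothetical sketch puts a notch (±10 Hz, an arbitrary width) on every harmonic of A3 up to 5 kHz, then checks how many harmonics of B3, one whole step up, those same notches still catch. Names and numbers are illustrative assumptions only.

```python
def note_freq(midi_note):
    """Equal-tempered pitch in Hz, with A4 (MIDI note 69) = 440 Hz."""
    return 440.0 * 2 ** ((midi_note - 69) / 12)


def harmonics(f0, ceiling_hz):
    """Harmonic series f0, 2*f0, 3*f0, ... up to ceiling_hz."""
    return [n * f0 for n in range(1, int(ceiling_hz // f0) + 1)]


def notched_fraction(notch_f0, sung_f0, ceiling_hz, tolerance_hz):
    """Share of the sung note's harmonics that fall within tolerance_hz of a
    notch placed on every harmonic of notch_f0."""
    notches = harmonics(notch_f0, ceiling_hz)
    sung = harmonics(sung_f0, ceiling_hz)
    hit = sum(1 for h in sung if any(abs(h - n) <= tolerance_hz for n in notches))
    return hit / len(sung)


if __name__ == "__main__":
    a3, b3 = note_freq(57), note_freq(59)  # 220 Hz and about 246.94 Hz
    print(f"same note: {notched_fraction(a3, a3, 5000, 10):.0%} caught")
    print(f"one step up: {notched_fraction(a3, b3, 5000, 10):.0%} caught")
```

For the same note every harmonic is caught; for B3 only 2 of its 20 harmonics below 5 kHz fall inside a notch, and a real vocal line changes pitch constantly.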

That looks cool.

As an artist, I love visualizations of things that can’t be seen.

Not that I can understand what the hell it means.

Oh… well, that’s disappointing. A metal band put out a re-release of one of their old albums, and it now has insane production. The vocals are cookie-synthed and sound like fucking garbage. Yaaaay. Idk why bands make these simple fucking mistakes… Ugh… And the vocals are literally as loud as the fucking guitars.

If you’re really ridiculously stupidly dedicated to the cause, there is kind of a way.

You can flip the phase of either the L or R channel and sum them together, which will cancel out everything that’s panned dead center. Vocals are basically always right in the middle, though the side information from any stereo vocal effects will remain. The catch is… you also lose anything else that’s panned center (bass, kick drum, any center data from any other instrument), and it’ll all be in mono. So if you absolutely need a karaoke track, you can do it. Otherwise, it’ll just make everything sound a lot worse. But hey, it’s worth mentioning, since you asked…
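
Here’s roughly what that looks like as code: a minimal Python sketch using only the standard library, assuming a 16-bit PCM stereo WAV file. The function names are made up for illustration, and this isn’t how any particular player or plugin implements it; it just flips the polarity of R, sums it with L, and halves the result so it stays within the 16-bit range.

```python
import array
import sys
import wave


def cancel_center(left, right):
    """Polarity-flip R and sum with L: out = (L - R) / 2.

    Anything identical in both channels (dead centre) cancels to zero.
    The result is a single mono channel.
    """
    return [(l - r) / 2 for l, r in zip(left, right)]


def remove_center_wav(in_path, out_path):
    """Apply cancel_center to a 16-bit stereo WAV and write a 16-bit mono WAV."""
    with wave.open(in_path, "rb") as src:
        if src.getnchannels() != 2 or src.getsampwidth() != 2:
            raise ValueError("expected 16-bit stereo PCM")
        rate = src.getframerate()
        raw = src.readframes(src.getnframes())
    samples = array.array("h")
    samples.frombytes(raw)
    if sys.byteorder == "big":  # WAV sample data is little-endian
        samples.byteswap()
    # Interleaved frames: even indices are L, odd indices are R
    mono = cancel_center(samples[0::2], samples[1::2])
    out = array.array("h", (max(-32768, min(32767, round(s))) for s in mono))
    if sys.byteorder == "big":
        out.byteswap()
    with wave.open(out_path, "wb") as dst:
        dst.setnchannels(1)
        dst.setsampwidth(2)
        dst.setframerate(rate)
        dst.writeframes(out.tobytes())
```

Anything identical in both channels cancels, so the kick, bass and lead vocal all drop out together, and what’s left is the mono “side” signal: exactly the trade-off described above.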