Damn, Linux AND 4k?

Actually, dual 1080p monitors lol

Day 0.2

Phase: Planning

Holy shit, webpack

So today I hacked around a lot more with webpack and dug into the docs for about 4 hours trying to get a solid config.

I’d say it is a rousing success. Currently, I’m still setting things up though.

Be sure to check it out

Here’s what I’m talking about.

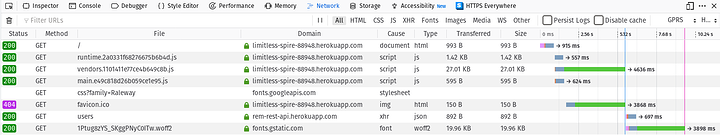

On average it takes only about 10 seconds for the page to fully render under the heaviest throttling preset, GPRS.

However, the first meaningful paint happens much sooner, at around 5 seconds. So I’m thrilled! You can see how the assets are chunked and hashed by webpack, broken into main.[hash].js, runtime.[hash].js and vendor.[hash].js.

Devops

Another thing I did was set up an unorthodox deployment of my application from GitLab to Heroku.

Here’s how I did it.

.gitlab-ci.yml

stages:
  - deploy

deploy_to_prod:
  stage: deploy
  image: ruby:latest
  script:
    - apt-get update -qy
    - apt-get install -y ruby-dev
    - gem install dpl
    - dpl --provider=heroku --app=$HEROKU_APP_NAME --api-key=$HEROKU_API_KEY
  only:
    - master
  tags:
    - ruby
  when: on_success

Where $HEROKU_APP_NAME & $HEROKU_API_KEY are deployment variables under the CI/CD tab on GitLab.

So now whenever I make a commit to the master branch it will automatically get pushed to Heroku, and Heroku will build the app there.

This can be more sophisticated, but for now, it will suffice.

Tip: be sure to get your API key from your Heroku account.

It’s such a pain in the ass to upload the build directory to GitLab, and most of the results from searches give up and use that method. With this though, it builds on Heroku, and it was much harder to find an answer for.

My gift to you, free of charge.

Live Demo

This is the demo hosted by Heroku. It is a free one, so it may take up to 30 seconds to wake up.

Preface

I thought I would take the time to show my current plan.

Currently, I have decided to divide the project into 4 distinct groups.

- Database Design

- Backend Design

- Frontend Design

- Devops

I plan to spend my allotted 1 hour each day rotating between the different groups and make every other week a sprint week. That way I will have a balance between development and refinement/testing.

Now without further ado…

Day 1

Group: Devops/Backend

Greetings!

Today I worked on figuring out how to hook up a MariaDB add-on on Heroku, and what library I want to use to connect my Node backend to my database.

It was simple enough but required me to get a bit more creative with how to process my environment variables. I am striving for homogeneous settings so I will not be in violation of the Twelve-Factor App methodology.
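As an illustration of that idea, here is a minimal sketch of reading the database settings from a single environment variable so that local and Heroku setups run the same code. The variable name DATABASE_URL and the helper name are assumptions for the example; a Heroku add-on typically exposes its own variable name.

```javascript
// Build database settings from the environment (Twelve-Factor style).
// DATABASE_URL is a hypothetical variable name used for illustration.
function dbConfigFromEnv(env = process.env) {
  const raw = env.DATABASE_URL;
  if (!raw) {
    throw new Error('DATABASE_URL is not set');
  }
  // Expected shape: mysql://user:secret@db.example.com:3306/forum
  const url = new URL(raw);
  return {
    host: url.hostname,
    port: url.port ? Number(url.port) : 3306, // fall back to the usual MySQL/MariaDB port
    user: decodeURIComponent(url.username),
    password: decodeURIComponent(url.password),
    database: url.pathname.replace(/^\//, ''), // drop the leading slash
  };
}

module.exports = { dbConfigFromEnv };
```

Locally the same variable can point at a development database, so no code path has to know which environment it is running in.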

The choice of MySQL/MariaDB libraries was a bit difficult. There are the OG mysql, mysql2, and mariadb packages. What’s the difference, you might be thinking? Glad you asked!

mysql is the OG package from the devs who ported the connector to Node. It returns query results how you’d expect, as arrays of rows.

mysql2 is mostly compatible with mysql, hands query results back as plain JavaScript objects that serialize straight to JSON (awesome), and offers a Promise API through its mysql2/promise wrapper. This is quite tempting, as the async/await API is what I plan to use in my frontend logic for all my XHR fetches.

Then there is the mariadb package. It seemed basically the same as mysql2, however, it offers a few new features. To quote the author:

While there are existing MySQL clients that work with MariaDB, (such as the mysql and mysql2 clients), the MariaDB Node.js Connector offers new functionality, like Insert Streaming and Pipelining while making no compromises on performance.

The features the author talks about are: insert streaming, pipelining, and bulk inserting.

Insert Streaming – Using a Readable stream in your application, you can stream INSERT statements to MariaDB through the Connector.

Pipelining – With Pipelining, the Connector sends commands without waiting for server results, preserving order. The Connector doesn’t wait for query results before sending the next INSERT statement. Instead, it sends queries one after the other, avoiding much of the network latency.

Bulk insert – Some use cases require a large amount of data to be inserted into a database table. By using batch processing, these queries can be sent to the database in one call, thus improving performance.

Thinking ahead, I thought these features would come in very useful since I want a high-performance backend to handle everything. A localhost’ed database would give great performance, but since I plan to host on Heroku, the database might not be hosted in an area close to the backend server, so a lot of the potential latency woes would be counteracted by the features this database connector library provides.

So, in the end, I decided to go with the mariadb package for my MariaDB database; shocking I know.

But a nice little thing I found that none of the other libraries offered was this little parameter: compress=true, which gzips the results en route from the database to the server, further improving performance.

So out of the gate I feel confident that I have made the key decisions to set my production cloud-hosted backend and database up for success.
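To make the compression and bulk insert ideas concrete, here is a small sketch. It assumes `db` is a pool or connection from the mariadb package (anything exposing its batch(sql, values) method); the helper names and the connectionLimit value are made up for the example.

```javascript
// Sketch only: `db` is any object exposing batch(sql, values), for example
// a pool created with require('mariadb').createPool(poolOptions(base)).

// Add the options discussed in this post to a base config (host, user, ...).
function poolOptions(base) {
  return {
    ...base,
    compress: true,     // gzip the traffic between the app and the database
    connectionLimit: 5, // assumed value; tune it for the hosting plan
  };
}

// Send many rows in one batch call instead of one INSERT per row.
async function insertCategories(db, categories) {
  const rows = categories.map((c) => [c.name, c.description]);
  return db.batch(
    'INSERT INTO Categories (category_name, category_description) VALUES (?, ?)',
    rows
  );
}

module.exports = { poolOptions, insertCategories };
```

Passing the database handle in as a parameter keeps the helper easy to exercise with a fake object before a real database is wired up.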

</>

Day 2

Group: Backend

I feel bad that I spent all my time trying to understand how to connect to MariaDB through its socket instead of through the network stack. I wasn’t able to get it to work with my current implementation though.

However, I did refactor my code for connecting to the database so it is much cleaner now.

</>

Day 3

Group: Database

Today I wanted to test my database and try to automate the creation of sample data. This will make automated testing easier later on too.

When I searched DDG, the first page had a thread from our very own forum, believe it or not! And it was incredibly useful!

Thanks @Levitance & @oO.o <3

So I was able to come up with this:

Sample.sql

-- Highest unsigned int value = 4294967295

DELIMITER ;;
CREATE OR REPLACE PROCEDURE sample_populate_categories()
BEGIN
    DECLARE i INT DEFAULT 1;
    WHILE i <= 1000 DO
        INSERT INTO Categories (category_name, category_description)
        VALUES ("Random String", "This is a sample string used as a placeholder for the category description.");
        SET i = i + 1;
    END WHILE;
END
;;
DELIMITER ;

DELIMITER ;;
CREATE OR REPLACE PROCEDURE sample_populate_users()
BEGIN
    DECLARE i INT DEFAULT 1;
    WHILE i <= 1000 DO
        INSERT INTO Users (user_handle, user_password)
        VALUES (CONCAT("Tester", i), "vywsHzNKZ617DoQYYtkGcF6mDI5OSTrtrrZXvm94ux20z6wQ7aGGDmnBmAvLUHCL5X6PFlWa");
        SET i = i + 1;
    END WHILE;
END
;;
DELIMITER ;

DELIMITER ;;
CREATE OR REPLACE PROCEDURE sample_populate_threads()
BEGIN
    DECLARE i INT DEFAULT 1;
    WHILE i <= 1000 DO
        INSERT INTO Threads (thread_author, thread_category, thread_title)
        VALUES (i, i, CONCAT("Test", i));
        SET i = i + 1;
    END WHILE;
END
;;
DELIMITER ;

DELIMITER ;;
CREATE OR REPLACE PROCEDURE sample_populate_replies()
BEGIN
    DECLARE i INT DEFAULT 1;
    WHILE i <= 1000 DO
        INSERT INTO Replies (reply_author, reply_thread, reply_body)
        VALUES (i, i, "JHG6z6GYEABi8epTEVqcHHVrsUDhC4RZBZYlFx8uo3sj5xMukqm6QlcPpV6wpxx6a9xT2uta1tiT6XWz86F3McST33WvfxH1GGZ3iYFaGpoaphyJjUvBhkRkygFvQu1ywLTOauDszdqHaz7JMqQSJIdTAoeEEawz6tqWBpqwtumPoBvA1Z7MkOHrYs5BXrAznbyuXxBNd3litccBpujRKR2848uvS6r6dB483PevwwszgiPUG5ht4zHMPHjw07ma2mTE1JY43lGIr0veLSoieV7vz38Bak6vFHusMPnzek8h");
        SET i = i + 1;
    END WHILE;
END
;;
DELIMITER ;

DELIMITER ;;
CREATE OR REPLACE PROCEDURE sample_populate()
BEGIN
    CALL sample_populate_categories();
    CALL sample_populate_users();
    CALL sample_populate_threads();
    CALL sample_populate_replies();
END
;;
DELIMITER ;

So now all I need to do is CALL sample_populate(); and it will generate 1000 categories, then 1000 users, then 1000 threads, then 1000 replies.

It takes about 27 seconds to run on my desktop.

So now that I know that my samples work as intended, I feel confident with my database schema.

If you peeked at the source I put up earlier you’d see this blurb, -- Highest unsigned int value = 4294967295.

Since I’m using unsigned 32-bit integers as my primary keys, each table can hold a maximum of about 4.29 billion rows, and at 4 bytes per key that is roughly 16 GiB per table for the keys alone.

So if I change all the loops to go until that value, I estimate that my database will be at least 64 GiB in size across the four tables.
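A back-of-the-envelope check of that estimate, under stated assumptions: 4 bytes per unsigned 32-bit key, only the primary key column of each of the four tables counted, and no allowance for indexes, row overhead, or the other columns.

```javascript
// Rough size of the primary keys alone if every table is filled to the limit.
const MAX_UNSIGNED_INT = 4294967295; // 2^32 - 1, the highest key value
const BYTES_PER_KEY = 4;             // one unsigned 32-bit integer
const TABLES = 4;                    // Categories, Users, Threads, Replies
const GIB = 2 ** 30;

const perTableGiB = (MAX_UNSIGNED_INT * BYTES_PER_KEY) / GIB;
const totalGiB = perTableGiB * TABLES;

console.log(perTableGiB.toFixed(2)); // roughly 16 GiB of keys per table
console.log(totalGiB.toFixed(2));    // roughly 64 GiB across the four tables
```

The real footprint would be larger, since every row also carries its other columns and the foreign keys in Threads and Replies.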

I’m going to commit my changes first and then test this theory on my server tomorrow.

Thanks for reading! Have a great night everyone!

</>

Day 4 - 7

I apologize for the lack of updates, had a bit of a crisis. Now that it is resolved I can post. Note, I was still coding each day, just not blogging.

Also, in that time I decided to completely clean the house and rearrange the office, and I had to reinstall my OS on both my desktop and my laptop: the JDK is so mangled on Ubuntu 18.10 that I had to roll back to 18.04.

So here are my updates!

I ran my stressing script on my server, but after 8 hours it still wasn’t finished lol. There wasn’t much point other than to test what would happen once it reached its maximum index size.

I worked a lot on understanding how mithril works under the hood, and feel like I have a solid grasp on how to actually build stuff now. I think I will start prototyping out different UIs.

I decided to use Pure.css since it’s great and is very minimalist. Currently, my app sits at only ~46 KB. I know I said I’d do custom, and I still am, but I don’t want to waste all my time styling buttons. Currently my custom CSS is for placing the areas of my app using CSS Grid.


I had to mess with my webpack config a lot more so I could put minified and mangled CSS into my chunks. I still don’t have route-based chunking for code splitting, but I’ll cross that bridge when I get to it.

My goal tomorrow will be to flesh out the backend some more and start testing the route endpoints.

Thanks for reading!

</>

Update coming tomorrow. Sorry guys. Been fighting a cold / stomach bug.

I’m back from the dead bitches!

Not really, I didn’t die but I sure felt like it.

tl;dr: for those of you that don’t know, I’ve been pretty sick with a nasty cold. Just got my voice back today.

Now onto the updates.

Day 8

Delved deeper into the database stuff and started creating bulk inserts of example data so that I can run tests on my queries. I shamelessly stole parts of this forum for this part.

Day 9

I started to muck around with mithril some more and this inevitably led me to my webpack configuration again. I began to feel dissatisfied with how things were turning out. It was at this moment that I realized that while I’ve been enjoying learning new things, it’s all a bit too much at one time and, most importantly, not true to my original goal of building something.

So an executive decision was made to scrap the project, and port what I could over to Vue where I feel more comfortable.

Day 10 - 12

Out due to sickness.

Day 13

Spent my time debugging why my response bodies were timing out in Postman. Goodness, VS Code’s Node debugger is a godsend.

I am using Express as my backend router, and my responses looked like this:

res.status(200).send(obj)

However, even though I am sending valid JSON anyway, Express doesn’t know this off the bat, so it has to inspect the body first, and that process is something like an extra 30 steps spread across various functions.

So I did this one small trick to speed things up.

res.status(200).json(obj)
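For context, here is a sketch of how that call might sit inside a complete Express-style route handler. The `db.query` interface, the SQL, and the handler name are assumptions for illustration; any object with a promise-returning query() would do.

```javascript
// Express-style handler sketch. `db` is injected so the handler can be
// exercised without a real database; its query() is assumed to return a Promise.
function makeListCategories(db) {
  return async function listCategories(req, res) {
    try {
      const rows = await db.query(
        'SELECT category_name, category_description FROM Categories'
      );
      // json() serializes the value and sets the JSON Content-Type header.
      res.status(200).json(rows);
    } catch (err) {
      // Keep internal error details out of the response body.
      res.status(500).json({ error: 'Could not load categories' });
    }
  };
}

module.exports = { makeListCategories };
```

It could then be mounted with something like app.get('/api/categories', makeListCategories(pool)), where the route path is hypothetical.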

</>

Day 14

I continued my efforts of setting up the project with Vue. So far so good. I was able to get all my structure set up, and the housekeeping tasks are done.

Day 15

I worked on the code and routing for viewing the available categories. This proved more challenging than I thought, because I first needed to develop the query to get the results I need, set up the backend endpoint, set a route on the client side, work out which API endpoint to hit from that route, and then, once the data comes in, handle any error and display the result correctly.

If anything at all needs adjusting in that pipeline then it involves several changes. Having a plan definitely helps for what I want to achieve.

So far I’m basing my design off of the category view on this forum.

At the moment, it will return the list of categories dynamically and each item in the list will dynamically route to a frontend endpoint which will then fetch all relevant threads of the corresponding category.
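The client-side half of that pipeline (hit the endpoint, check for an error, hand back something displayable) could be sketched like this. The '/api/categories' path and the function name are hypothetical, and the fetch function is injectable so the helper can be tried without a server.

```javascript
// Client-side sketch: fetch the category list and normalize errors.
// '/api/categories' is a hypothetical endpoint; fetchImpl defaults to the
// global fetch and can be swapped for a fake in tests.
async function loadCategories(fetchImpl = fetch) {
  try {
    const response = await fetchImpl('/api/categories');
    if (!response.ok) {
      return {
        categories: [],
        error: `Request failed with status ${response.status}`,
      };
    }
    return { categories: await response.json(), error: null };
  } catch (err) {
    // Network failure, invalid JSON, and so on.
    return { categories: [], error: err.message };
  }
}

module.exports = { loadCategories };
```

Returning the same { categories, error } shape in every case means the view only has to check one field before rendering.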

Day 16

Really needed to do some refactoring. Cleaned a bunch of functions up, and added more documentation comments.

Day 17

Today I moved my store logic into its own separate module to help further compartmentalize my app logic, which basically involved changing everything that referenced it lol. So it took some time to get it done right. But now it expands more cleanly, the layout is clearer, and it is easier to identify the structure of my codebase.
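As a rough picture of what a separate store module can look like, here is a sketch that assumes a Vuex-style store (the post does not say which store library is in use). The module name, its fields, and the `api` helper are all hypothetical.

```javascript
// store/modules/categories.js (sketch; assumes a Vuex-style module shape)
const categories = {
  namespaced: true,
  state: () => ({ items: [], error: null }),
  mutations: {
    setItems(state, items) {
      state.items = items;
      state.error = null;
    },
    setError(state, message) {
      state.items = [];
      state.error = message;
    },
  },
  actions: {
    // `api` is a hypothetical function resolving to { categories, error }.
    async load({ commit }, api) {
      const { categories: items, error } = await api();
      if (error) {
        commit('setError', error);
      } else {
        commit('setItems', items);
      }
    },
  },
};

// CommonJS export so the sketch runs in plain Node; a Vue project would
// more likely use `export default categories`.
module.exports = categories;
```

With Vuex, a module like this would be registered under `modules: { categories }` when the store is created, and components would then reference it by its namespace.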

You’ve a lot of days to get caught up on mister.