Morning all,

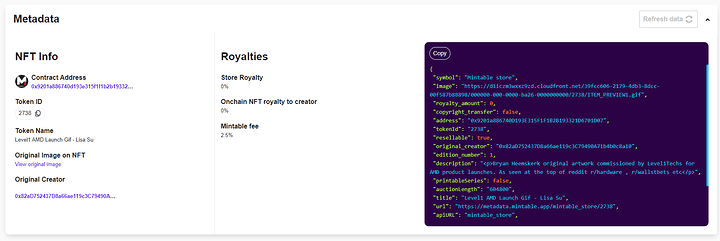

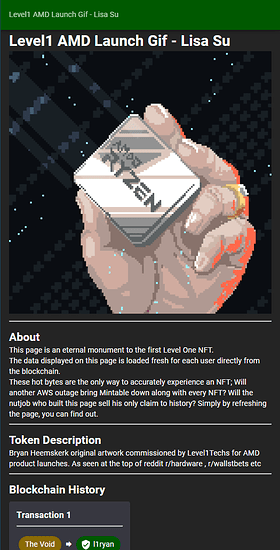

This is my first Devember and first personal coding project since I left university. I have been spurred into action by my recent purchase of the Level One NFT. I believe that for the common good there should be a showcase for the Level One NFT so people can come and admire it.

The long rambly bit about project plans

So that’s the MVP: a nice, simple site. Then I got to thinking that NFTs are too commercial; the core reason people want to spend money on them seems to be their historical significance. The stretch goals are therefore focused on creating a free kind of token, where verified individuals (identified by their OAuth2 subject claims) can mint simple tokens that are verified server-side instead of on a blockchain. Transfer of ownership can be done with single-use URLs. I will gatekeep verification personally to prevent fakes and randos from getting verified.
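A minimal sketch of how server-side minting and single-use transfer links could fit together. It assumes an in-memory store standing in for MongoDB, a hand-maintained set of verified subject claims, and a hypothetical `/claim/<code>` route; the class and method names are illustrative only. The key idea is that a transfer code is deleted the moment it is redeemed, so each URL works exactly once.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass, field


@dataclass
class Token:
    token_id: str
    owner_sub: str  # OAuth2 subject claim of the current owner
    history: list[str] = field(default_factory=list)  # every owner, oldest first


class TokenRegistry:
    """Hypothetical in-memory stand-in for the server-side token store."""

    def __init__(self, base_url: str, verified_subs: set[str]) -> None:
        self.base_url = base_url
        self.verified_subs = verified_subs  # subjects approved by hand
        self.tokens: dict[str, Token] = {}
        self.pending: dict[str, str] = {}  # transfer code -> token_id

    def mint(self, minter_sub: str) -> Token:
        if minter_sub not in self.verified_subs:
            raise PermissionError("only verified subjects can mint tokens")
        token = Token(secrets.token_hex(8), minter_sub, [minter_sub])
        self.tokens[token.token_id] = token
        return token

    def create_transfer_url(self, token_id: str, caller_sub: str) -> str:
        if self.tokens[token_id].owner_sub != caller_sub:
            raise PermissionError("only the current owner can offer a transfer")
        code = secrets.token_urlsafe(16)  # unguessable, URL-safe code
        self.pending[code] = token_id
        return f"{self.base_url}/claim/{code}"

    def claim(self, code: str, claimant_sub: str) -> Token:
        # pop() removes the code as it is redeemed, which makes the link single-use
        token_id = self.pending.pop(code, None)
        if token_id is None:
            raise KeyError("transfer link is invalid or has already been used")
        token = self.tokens[token_id]
        token.owner_sub = claimant_sub
        token.history.append(claimant_sub)
        # drop any other outstanding links for this token so stale offers cannot be redeemed
        self.pending = {c: t for c, t in self.pending.items() if t != token_id}
        return token
```

`secrets.token_urlsafe` is used because a transfer link is effectively a bearer credential and must not be guessable. In the real system the store would be persistent and the base URL would be the site's own domain.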

The next stretch goal is a Twitch integration so tokens can be awarded to chat. For such an integration I think it’d be important to have a limited-run mechanism so that, for example, 500 copies of the same image can be offered, each with a numbered watermark such as 069/500.
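A limited run boils down to a counter with a ceiling plus zero-padded labels. This is a minimal single-process sketch; the `LimitedRun` class and its method names are hypothetical.

```python
from __future__ import annotations


class LimitedRun:
    """Hypothetical allocator for a numbered limited run of one image."""

    def __init__(self, image_id: str, total: int) -> None:
        if total < 1:
            raise ValueError("a limited run needs at least one copy")
        self.image_id = image_id
        self.total = total
        self.width = len(str(total))  # zero-pad to the width of the total, e.g. 3 for 500
        self.next_number = 1
        self.claims: dict[str, str] = {}  # edition label -> recipient subject claim

    def label(self, number: int) -> str:
        return f"{number:0{self.width}d}/{self.total}"

    def claim_next(self, recipient_sub: str) -> str:
        if self.next_number > self.total:
            raise RuntimeError(f"all {self.total} copies of {self.image_id} have been claimed")
        label = self.label(self.next_number)
        self.next_number += 1
        self.claims[label] = recipient_sub
        return label
```

With several API replicas running in Kubernetes, the counter would need to live in the database and be bumped atomically (for example with MongoDB's `$inc` update operator) rather than in process memory; otherwise two replicas could hand out the same number.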

As a very distant stretch goal, I hope to add the ability to convert a token into a full-fledged NFT, where the individual only needs to pay the transaction fees imposed by the minting process. I’ll make sure I capture enough information at minting time that any token can be added to the blockchain in the future.
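One way to capture enough at mint time is to record a content hash of the image alongside who minted it and when, serialized deterministically so the same record can be reproduced later. The field names below are hypothetical, and what an on-chain mint would actually require depends on the chain and token standard eventually chosen.

```python
from __future__ import annotations

import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def mint_record(image_bytes: bytes, minter_sub: str, minted_at: datetime,
                edition: Optional[str] = None) -> dict:
    """Build a hypothetical mint-time record for possible future on-chain minting."""
    if minted_at.tzinfo is None:
        raise ValueError("minted_at must be timezone-aware")
    return {
        "content_sha256": hashlib.sha256(image_bytes).hexdigest(),  # fingerprint of the exact image bytes
        "minter_sub": minter_sub,  # OAuth2 subject claim of the minter
        "minted_at": minted_at.astimezone(timezone.utc).isoformat(),  # normalised to UTC
        "edition": edition,  # e.g. "069/500" for limited runs, None otherwise
    }


def canonical_json(record: dict) -> str:
    # sorted keys and fixed separators give the same string for the same data every time
    return json.dumps(record, sort_keys=True, separators=(",", ":"))
```

Hashing the stored bytes means any later on-chain record can point back to, and be checked against, the exact image held in the bucket.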

Architecture

I am a .NET/C# developer through and through, so the architecture will be overkill just so I can do as much as possible in C#. I’ll also do as much hosting as possible on Linode.

(The living doc, where I will put wireframes and other such visual things, is here.)

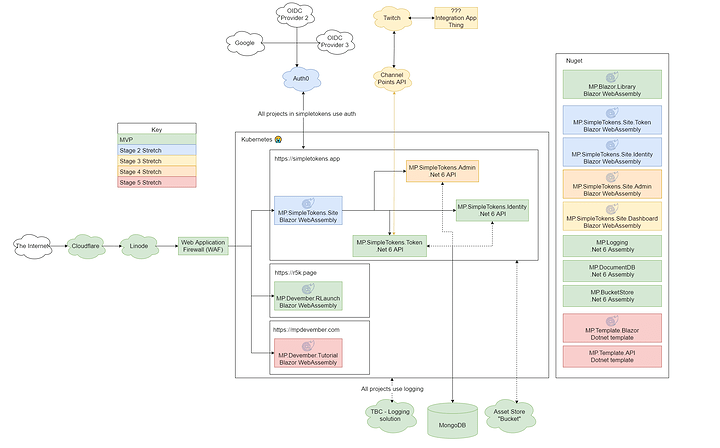

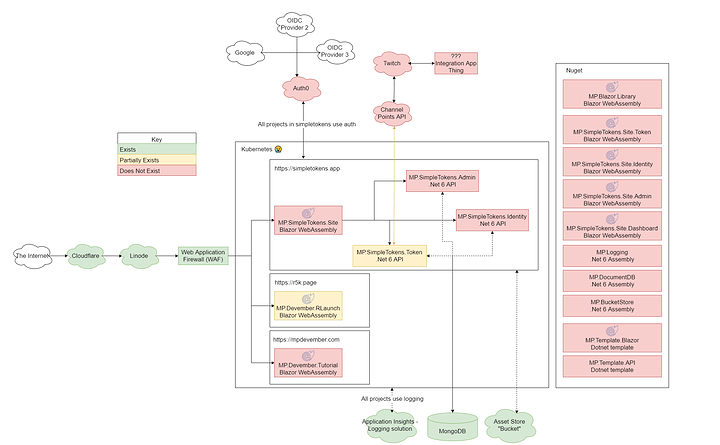

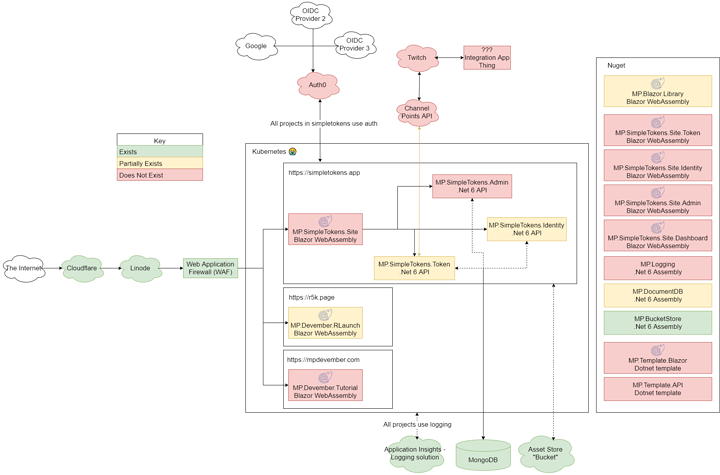

There’s a lot going on here, so I’ll only talk in broad terms to stop this turning into War and Peace.

On the left we have incoming requests. All requests go through Cloudflare for the free SSL; there are other reasons, but I’m lazy and this is the one I care about.

Next, requests hit a web application firewall (WAF) of some kind that blocks traffic coming from anywhere other than Cloudflare. I’ve never set up a WAF before, so the whole process will be a learning experience.
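Whatever WAF ends up being used, the core "Cloudflare only" rule is an allowlist check on the connecting peer's address. The sketch below uses Python's standard `ipaddress` module. The ranges are an illustrative subset only; Cloudflare publishes the authoritative, current list, which should be fetched rather than hard-coded. The check must run against the TCP peer address, not a forwarded header, because headers such as `X-Forwarded-For` can be spoofed by anyone. Behind a Kubernetes load balancer the original peer address is not always preserved by default (see the Service `externalTrafficPolicy` setting), so where this check runs matters.

```python
import ipaddress

# Illustrative subset of Cloudflare's published ranges; refresh from Cloudflare's list in practice
CLOUDFLARE_RANGES = [
    ipaddress.ip_network(cidr)
    for cidr in (
        "173.245.48.0/20",
        "103.21.244.0/22",
        "104.16.0.0/13",
        "172.64.0.0/13",
        "2400:cb00::/32",
        "2606:4700::/32",
    )
]


def is_from_cloudflare(peer_addr: str) -> bool:
    """Return True if the connecting peer address falls inside an allowed range."""
    try:
        addr = ipaddress.ip_address(peer_addr)
    except ValueError:
        return False  # not a valid IPv4/IPv6 address, so reject it
    # an IPv4 address is never "in" an IPv6 network (and vice versa), which just yields False
    return any(addr in net for net in CLOUDFLARE_RANGES)
```

Anything that fails the check would be dropped before it reaches the Blazor sites or the APIs.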

Behind that we have three domains for the various Blazor WebAssembly sites (static content) and .NET 6 APIs. These are all managed by Linode’s Kubernetes offering (God help me), using containers from Docker Hub. Docker and Kubernetes are both completely new to me; I am but a lowly scrublord developer, so this will be the biggest challenge. I still think it’s the best option because I don’t want the stress and headache of server maintenance; I’m happy to pay the premium for it to be someone else’s problem.

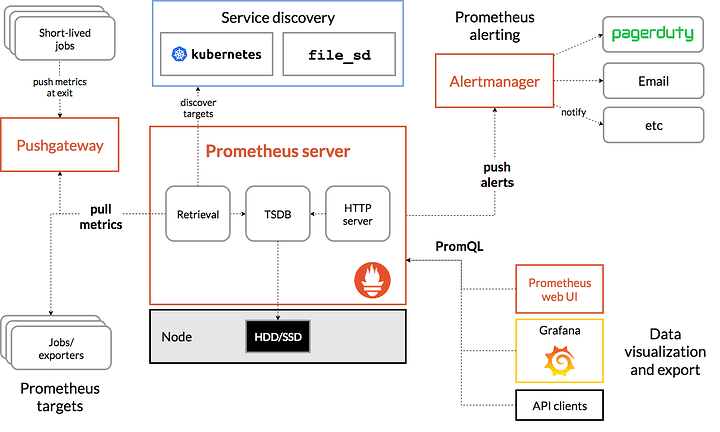

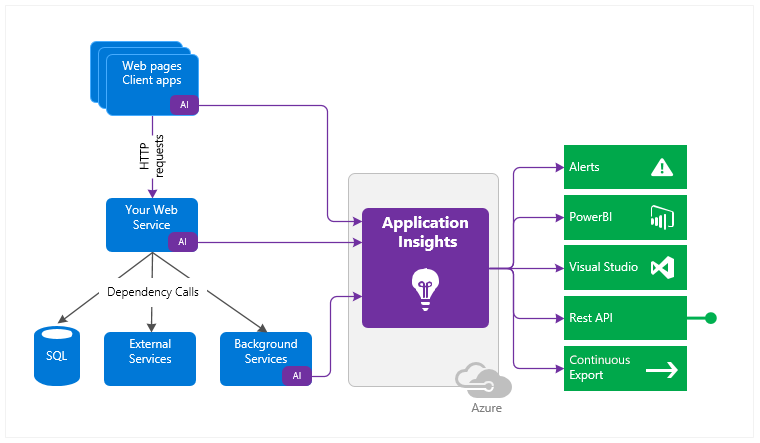

Along the bottom we have a yet-to-be-chosen logging solution. I want Application Insights, but that’s an Azure service, so I need to find an alternative. Then we have MongoDB, so I don’t have to maintain a schema, and a Linode Object Storage bucket to hold all the uploaded images.

Along the top we have Auth0 handling all the difficult federated identity stuff. I won’t have local accounts, so I never need to touch a password. I’ll just store subject claims as my user IDs and cache display names so I can show them in the token history.
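A minimal sketch of keying users by their subject claim and caching a display name for the token history. It assumes the claims arrive as a dictionary after Auth0 has already validated the login. `name` and `nickname` are standard OpenID Connect claims, but which ones are populated depends on the identity provider; the `UserCache` class and `provider_of` helper are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass
class CachedUser:
    sub: str  # OAuth2 subject claim, used directly as the user ID
    display_name: str  # cached copy, refreshed on each login
    updated_at: datetime


class UserCache:
    """Hypothetical store mapping subject claims to cached display names."""

    def __init__(self) -> None:
        self._users: dict[str, CachedUser] = {}

    def upsert_from_claims(self, claims: dict, now: datetime) -> CachedUser:
        sub = claims["sub"]
        # prefer the full name, fall back to nickname, then to the raw subject
        name = claims.get("name") or claims.get("nickname") or sub
        user = CachedUser(sub, name, now)
        self._users[sub] = user
        return user

    def display_name(self, sub: str) -> str:
        user = self._users.get(sub)
        return user.display_name if user else sub


def provider_of(sub: str) -> str:
    # Auth0 subjects usually look like "<provider>|<id>", e.g. "google-oauth2|123"
    return sub.split("|", 1)[0] if "|" in sub else "unknown"
```

Because the subject claim never changes for a given login, token history can reference it permanently; only the cached name needs refreshing when someone changes it upstream.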

The other item along the top is the Twitch integration piece. I really don’t know how this will work yet, hence all the question marks in that area.

Finally, on the right we have all the NuGet packages I’ll be making to reduce code duplication. I’ll probably also make Refit client packages, so each API documents, through its client interface, how to communicate with it.

The end bit

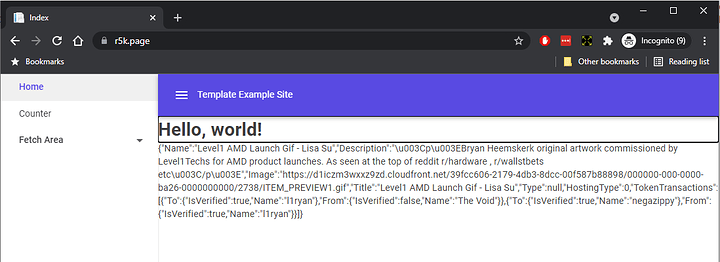

There is a lot here, but I’m confident in delivering the MVP by the end of December. I’ve already got https://r5k.page/ hooked up with the Blazor starter template hosted in Kubernetes, and I’ll aim to get the rest of the unknowns sorted by the end of the month. November should then be a straight run of things I know, which leaves December for figuring out Twitch.

Thank you for listening to my TED talk; I will now accept questions and comments.
