I appreciate the prompt feedback! I had read many of the threads already but seem to have missed some critical posts. To make sure I have a solid foundation to work with, I went ahead and put the whole M.2-to-OCuLink-to-enclosure setup together for testing. This thread about PCIe bifurcation cast a lot of doubt on whether I could ever get it to work: A Neverending Story: PCIe 3.0/4.0/5.0 Bifurcation, Adapters, Switches, HBAs, Cables, NVMe Backplanes, Risers & Extensions - The Good, the Bad & the Ugly (Level1Techs Forums).

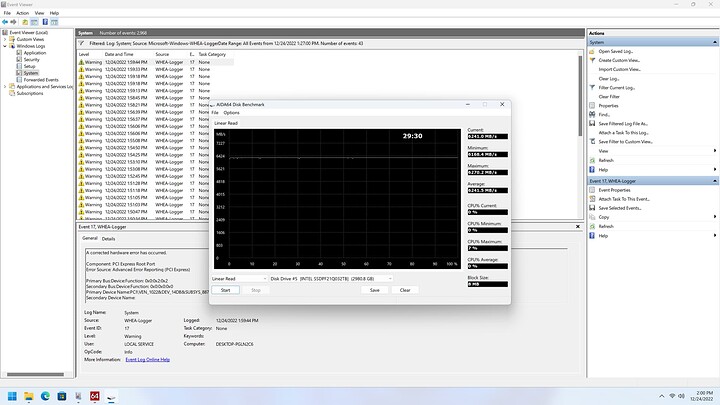

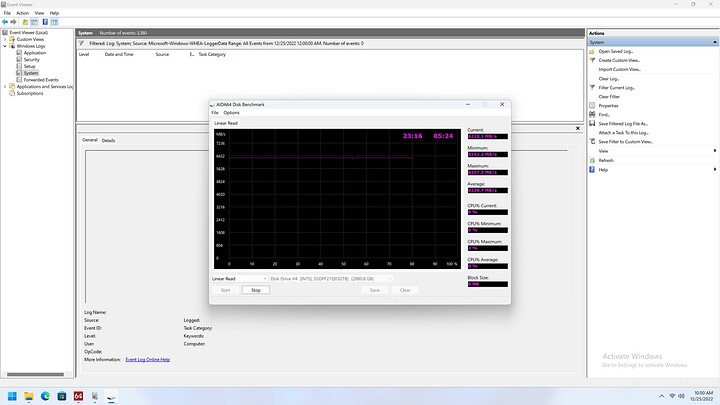

This combination finally worked, with 0 errors after putting the drive under load for 10 hours:

- Asus ROG Crosshair X670E Extreme

- Micro SATA Cables Passive PCIe Gen 4 M.2-to-OCuLink adapter on the Asus-provided Gen-Z.2 riser card (PCIe 5.0 side)

- LINKUP 25cm active OCuLink cable (OCU-8611N-025)

- ICY DOCK U.2-to-OCuLink enclosure (MB699VP-B V3)

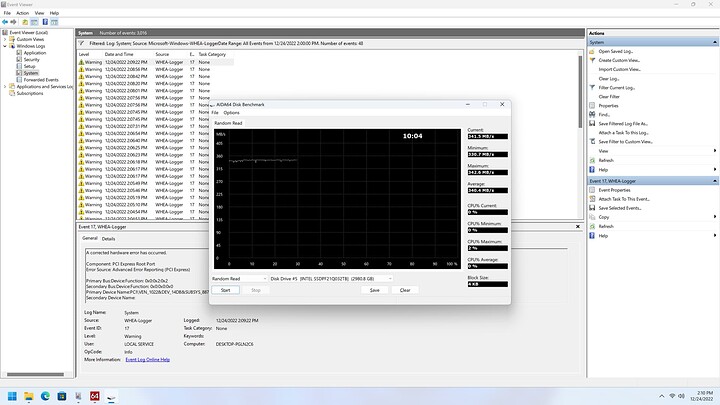

This was after some trial and error with different M.2 slots and a 50cm version of the same active OCuLink cable. The 50cm cable produced errors no matter which M.2 slot I used: 42 WHEA error events in 30 minutes was the best I could get, and the M.2 slot at the far end of the motherboard was the worst, with 27 events in 60 seconds.
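The counts above come from runs of very different lengths, so normalizing them to events per minute makes them easier to compare. A minimal Python sketch; the run labels are just my own shorthand:

```python
# Normalize WHEA error counts from runs of different lengths to events/minute.

def events_per_minute(events: int, minutes: float) -> float:
    """Average number of WHEA error events per minute over one test run."""
    if minutes <= 0:
        raise ValueError("test duration must be positive")
    return events / minutes


# (label, WHEA error events, run length in minutes), from the runs described above
runs = [
    ("50cm cable, best M.2 slot", 42, 30),
    ("far-end M.2 slot (worst case)", 27, 1),
    ("25cm cable", 0, 10 * 60),
]

for label, events, minutes in runs:
    print(f"{label}: {events_per_minute(events, minutes):.2f} events/min")
```

By that measure, the far-end slot was roughly 19× worse than the best 50cm result (27 vs. 1.4 events/min).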

This is my first time sinking so much money into experimenting with this stuff, and I was surprised how much of a difference 25cm of cable length could make.

I had been planning to test bcachefs well into my parts orders, and intended to get 2 × P5800X as a mirrored write cache and metadata device. I only started looking at ZFS a couple of weeks ago, and unfortunately some further reading made it clear that the SLOG is not the equivalent of a write cache. I had thought it would let me have the best of both worlds: high-capacity RAIDZ2 plus the speed/latency of Optane for the bursty workloads I'll be throwing at it. An actual writeback cache feature for ZFS doesn't seem to have gone anywhere. I'll still test ZFS, but cutting 96TB of storage in half with RAID1 is something I'd like to avoid if I can help it.
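For a sense of what mirroring costs versus RAIDZ2, here is a rough usable-capacity comparison in Python. The drive layout (12 × 8TB = 96TB raw) is a hypothetical example, and the math ignores ZFS metadata, slop space, and RAIDZ padding, so real usable space will be somewhat lower in both cases:

```python
# Rough usable capacity of two-way mirrors vs. a single RAIDZ2 vdev.
# ASSUMPTION: the 12 x 8 TB layout is hypothetical; ZFS metadata, slop space
# and RAIDZ padding overhead are ignored.

def mirror_usable_tb(drives: int, size_tb: float) -> float:
    """Two-way mirrors (RAID1): half of the raw capacity is usable."""
    if drives % 2:
        raise ValueError("two-way mirrors need an even number of drives")
    return drives * size_tb / 2


def raidz2_usable_tb(drives: int, size_tb: float) -> float:
    """Single RAIDZ2 vdev: two drives' worth of capacity goes to parity."""
    if drives < 3:
        raise ValueError("RAIDZ2 needs more drives than its two parity drives")
    return (drives - 2) * size_tb


drives, size_tb = 12, 8.0  # hypothetical: 12 x 8 TB = 96 TB raw
print(f"raw:    {drives * size_tb:.0f} TB")
print(f"mirror: {mirror_usable_tb(drives, size_tb):.0f} TB")
print(f"RAIDZ2: {raidz2_usable_tb(drives, size_tb):.0f} TB")
```

With that layout, mirrors give 48TB usable versus 80TB for a single 12-wide RAIDZ2 vdev.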

I know the SLOG only helps synchronous writes, and that setting sync=always forces asynchronous writes through it as well, protecting them at the cost of latency. But would a persistent L2ARC at least mask the read latency of QLC NAND over SATA for VM/dev workloads?
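One way to reason about the L2ARC question is a simple weighted-average model of read latency for reads that miss ARC (RAM). This Python sketch is a simplification that ignores queueing, ARC hits, and the RAM cost of L2ARC headers, and the two latency figures are placeholders, not measurements:

```python
# Expected latency of ARC-missing reads as a weighted average of L2ARC hits
# and pool (QLC over SATA) reads.
# ASSUMPTION: L2ARC_US and POOL_US are placeholder values, not measured data.

def effective_latency_us(hit_rate: float, l2arc_us: float, pool_us: float) -> float:
    """Average read latency given the fraction of reads served by L2ARC."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * l2arc_us + (1.0 - hit_rate) * pool_us


L2ARC_US = 10.0   # placeholder: Optane-class random read latency
POOL_US = 200.0   # placeholder: QLC SATA random read latency

for hit_rate in (0.0, 0.5, 0.9, 0.99):
    latency = effective_latency_us(hit_rate, L2ARC_US, POOL_US)
    print(f"hit rate {hit_rate:.0%}: {latency:.1f} us")
```

The misses dominate: even at a 90% hit rate, the average under these placeholders is roughly three times the L2ARC figure, so how much of the VM/dev working set fits in L2ARC matters as much as the speed of the cache device. Persistent L2ARC (OpenZFS 2.0 and later) helps mainly by keeping the cache warm across reboots.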

Yeah, they are hard to use. I've been following news on PCIe 5.0 HBAs/RAID cards, which I plan to get for hooking up the Optane SSDs. Intel is never going to make PCIe 5.0 Optane drives, so the next best thing is to aggregate a whole bunch of them behind an HBA/RAID controller to make better use of the bandwidth. In the meantime, the M.2-to-OCuLink adapters will do.
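To put rough numbers on the aggregation idea, here is a back-of-the-envelope Python sketch of raw PCIe link bandwidth. It assumes a hypothetical PCIe 5.0 x16 HBA/RAID card (no specific product) and uses only the per-lane transfer rates and 128b/130b encoding of PCIe 3.0 and later, so it overstates real-world throughput:

```python
# Approximate one-direction PCIe link bandwidth from transfer rate and the
# 128b/130b line encoding (PCIe 3.0+). Packet/protocol overhead is ignored.

GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}  # transfer rate per lane by generation


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Raw link bandwidth in GB/s for a given PCIe generation and lane count."""
    return GT_PER_S[gen] * (128 / 130) / 8 * lanes


uplink = link_gb_per_s(5, 16)  # HYPOTHETICAL: PCIe 5.0 x16 HBA/RAID card
drive = link_gb_per_s(4, 4)    # P5800X: PCIe 4.0 x4
print(f"Gen5 x16 uplink: {uplink:.1f} GB/s")
print(f"Gen4 x4 drive:   {drive:.2f} GB/s")
print(f"drives to fill the uplink: {uplink / drive:.1f}")
```

Under those assumptions, about eight PCIe 4.0 x4 drives such as the P5800X would fill a Gen5 x16 uplink; PCIe 3.0 x4 drives like the 905P would need sixteen.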

On the Asus ROG Crosshair X670E Extreme, the chipset gets 4 × PCIe 5.0 lanes' worth of bandwidth. After subtracting the bandwidth consumed by the 2 Thunderbolt ports, that leaves 2 lanes' worth shared by the 2 × PCIe 4.0 x4 expansion slots and presumably a bunch of other devices on the motherboard. I'm definitely not using those PCIe 4.0 slots, especially since, when I tested the 4K random read performance of the 905Ps on both PCIe 4.0 (chipset) and PCIe 5.0 (CPU) slots, throughput in MB/s was 7.89% lower on PCIe 4.0.
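The lane accounting above can be turned into a rough bandwidth budget for the chipset slots. This Python sketch takes the lane figures from the paragraph as given (4 × PCIe 5.0 lanes to the chipset, 2 lanes' worth used by Thunderbolt) rather than from a spec sheet, and ignores protocol overhead and the other onboard devices sharing the link:

```python
# Rough bandwidth budget for the two chipset-attached PCIe 4.0 x4 slots.
# ASSUMPTION: lane figures are taken from the post as given, not a spec sheet;
# protocol overhead and other chipset devices are ignored.

GT_PER_S = {4: 16.0, 5: 32.0}  # transfer rate per lane by generation


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Raw link bandwidth in GB/s with 128b/130b encoding."""
    return GT_PER_S[gen] * (128 / 130) / 8 * lanes


chipset_lanes, thunderbolt_lanes = 4, 2
left_for_slots = link_gb_per_s(5, chipset_lanes - thunderbolt_lanes)
slot_demand = 2 * link_gb_per_s(4, 4)  # two PCIe 4.0 x4 slots at full speed

print(f"left for the slots: {left_for_slots:.2f} GB/s")
print(f"two x4 slots want:  {slot_demand:.2f} GB/s")
print(f"oversubscription:   {slot_demand / left_for_slots:.1f}x")
```

If both x4 slots were busy at once, they would be asking for about twice what is left over, before counting anything else on the chipset.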