I know this might not be the typical question, but here goes anyway.

My current i5 is not cutting it anymore. I recently upgraded to a 1070 Ti, and the i5 is now bottlenecking it.

Now the fun part. I’m 99% sure the CPU is the limiting factor when it comes to high frame rates. Let’s take Overwatch as an example.

With every setting maxed, I get around 120 FPS (though with massive dips that make it unplayable; I haven’t figured this out yet, but I’m guessing that’s the CPU too). Turning every setting to minimum gets me 140-150 FPS. Since removing almost all of the GPU load gains so little, I’m clearly at a point where my CPU bottlenecks my GPU.
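
To put a rough number on that comparison, here is a minimal sketch. The function name `relative_fps_gain` is just something I made up for illustration, and the inputs are the approximate figures quoted above, not careful measurements.

```python
def relative_fps_gain(fps_max_settings: float, fps_min_settings: float) -> float:
    """Fractional FPS gain from dropping all settings from max to min."""
    if fps_max_settings <= 0:
        raise ValueError("fps_max_settings must be positive")
    return fps_min_settings / fps_max_settings - 1.0


# Approximate figures from this post: ~120 FPS maxed, 140-150 FPS at minimum.
for fps_min in (140, 150):
    gain = relative_fps_gain(120, fps_min)
    print(f"120 -> {fps_min} FPS: {gain:.1%} gain")
```

That works out to a gain of roughly 17-25% from going all the way from max to min settings, which is why I suspect the GPU is not what holds the frame rate back.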

When looking for a replacement, I’m not sure what to look for. Most benchmarks and tests run new AAA titles at max settings or even 4K. But this typically gets you around 60 FPS, far outside the range where I’ve seen CPU bottlenecks.

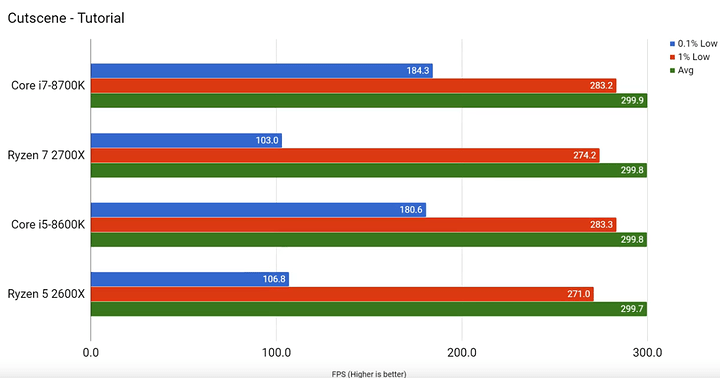

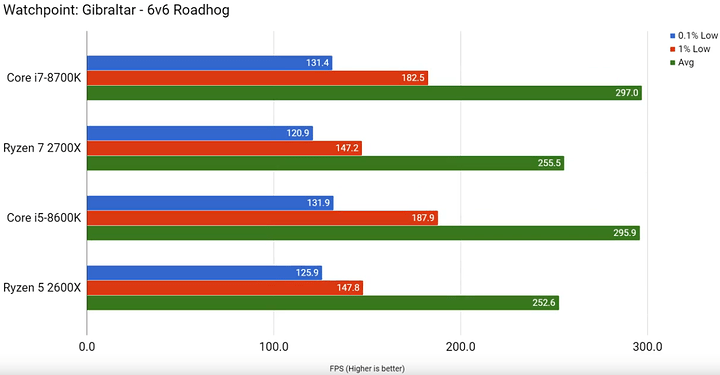

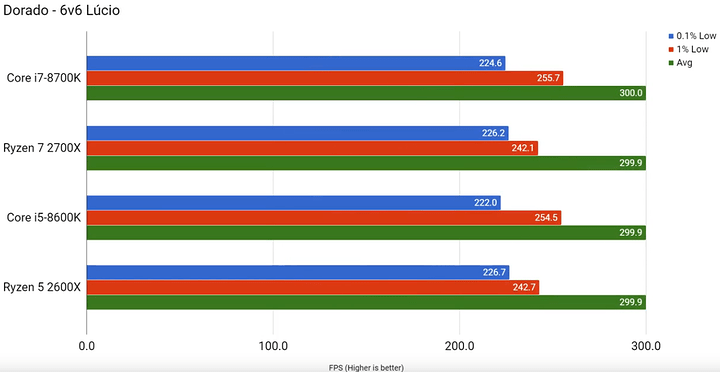

So, let’s say I want to hit a consistent 300 FPS (the max Overwatch supports): what do I look for in a CPU? I know I don’t need 300 FPS locked. I just want to figure out which metrics are important when looking for a CPU specifically for high frame rates rather than high visual fidelity at 60 FPS.
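
For context on why 300 FPS is such a different target from 60 FPS, here is a small sketch that converts a frame rate into the time available per frame. The helper name `frame_time_ms` is hypothetical; the FPS values are the ones mentioned in this post.

```python
def frame_time_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Frame rates mentioned in this post.
for target in (60, 120, 150, 300):
    print(f"{target:>3} FPS -> {frame_time_ms(target):.2f} ms per frame")
```

At 60 FPS each frame has about 16.67 ms, while at 300 FPS it has only about 3.33 ms, so whatever the CPU does per frame has to finish in a fifth of the time.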

Are higher clock speeds all that matters? Core count? Or IPC?

Since I’m looking at AMD at the moment, would a 2600X get better performance than a 2700 because of the higher boost clock? How does this change when I add a browser and, let’s say, Discord into the mix? Would an i5 9600K perform better, again because of the higher boost clock, or is the similar base clock more important?

Are there any benchmarks that explore pushing the GPU to high FPS in competitive games rather than going with max settings everywhere?