What is the best GPU I can possibly get that won't be bottlenecked by my i7-5820K overclocked to 4.4 GHz?

Also, what would be the best GPU I can run in SLI without bottlenecks on my current processor? It has 28 PCIe lanes.

I currently have 2x GTX 970s in SLI and they run well, but I want to move to 1440p and 4K gaming.

My GTX 1060 will do 4K at medium settings in GTA V, Insurgency, and older titles, so I would say a single 1070 or 1080. Get a 1080 Ti if you've got the cash, and you should be able to play all titles at 4K with ease for a while.

CPUs don't magically bottleneck GPUs out of nowhere; it depends a lot on the resolution you're playing at.

CPUs care more about raw FPS, so you'll hit the same CPU bottleneck at roughly the same frame rate regardless of resolution.
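To make the resolution point concrete, here is a minimal sketch of the usual "slowest stage wins" model. The frame rates are hypothetical numbers for illustration, not benchmarks, and the model assumes the CPU's frame cap stays roughly constant across resolutions, as described above.

```python
# Hypothetical numbers for illustration only, not measurements.
CPU_FPS_CAP = 140  # frames/s the CPU can prepare; roughly independent of resolution
GPU_FPS = {"1080p": 200, "1440p": 110, "4K": 55}  # frames/s the GPU can render


def effective_fps(cpu_fps_cap: float, gpu_fps: float) -> float:
    """The delivered frame rate is limited by whichever stage is slower."""
    return min(cpu_fps_cap, gpu_fps)


def bottleneck(cpu_fps_cap: float, gpu_fps: float) -> str:
    """Name the limiting stage; ties are counted as GPU-bound."""
    return "CPU" if cpu_fps_cap < gpu_fps else "GPU"


if __name__ == "__main__":
    for res, gpu in GPU_FPS.items():
        print(res, effective_fps(CPU_FPS_CAP, gpu), bottleneck(CPU_FPS_CAP, gpu))
```

With these made-up numbers, only 1080p ends up CPU-bound; at 1440p and 4K the GPU sets the frame rate, so a faster GPU is what helps there.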

Your 5820K still won't bottleneck two 1080 Tis in SLI if you're playing most demanding titles at 4K.

This.

Honestly, with a 5820K at 4.4 GHz you'll have no problem with any modern GPU.

Also, don't even think about multi-GPU setups.

If you need more performance just buy a 1080 Ti.

Or wait for Vega.

Yeah, probably not.

In all likelihood Vega will be slower than a 1080 Ti. You'll also be waiting a long time: first the release, then the non-reference cards, then everything sold out because of miners.

Then you'll be waiting for Volta.

Waiting in the PC hardware world is generally not a good idea. Just buy the best thing you can now.

"Bottlenecking" is a marketing play. Tech companies found that putting the marketing department on social media was more effective than spending millions on TV ads, IMHO.

"Always push the bottlenecking issue, it sells"

I performed an experiment with my wife's i5-3570K rig with a GTX 780 in FAH (Folding@home).

I turned off 3 of the 4 cores in the UEFI, leaving only one active. The GPU produced the same PPD (points per day) as it did with all cores running.

I know this is a very specific case, but it seems to take a hell of a lot to bottleneck a GPU, at least in FAH.

That's probably because folding doesn't move any textures from memory, calculate AI, or do any of the other things a game does, optimized or not. Folding is just floating-point calculations, and once they're running, the CPU is barely used until the job is done (unless you're folding on the CPU too).

The bottom line for gaming: it depends heavily on the game, but in most cases the OP's CPU is new enough that it won't matter.

That's why I stated that it was a specific use case.

But you implied it had bearing on bottlenecking a GPU.

Sorry, I'm not going to argue.

I'm not intentionally trying to "argue" with you; sorry if it feels that way. I'm trying to explain that they are completely different workloads. As with crypto mining, the folding work is (mostly) all done on the GPU, whereas in gaming the GPU waits for instructions from the CPU on a per-frame basis.

Concerned about CPU bottlenecking, but not PCIe lanes or other causes of bottlenecking? I don't get it; there is always a bottleneck of some kind. IMHO, building a balanced machine provides the best performance per dollar as well as the most user satisfaction.

Your second GPU will only get 8 PCIe lanes; that's an instant 2-3% performance hit, right there.
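A rough sketch of where the "8 lanes" figure comes from, assuming each GPU gets the widest standard link (x16, x8, x4) that still fits in the CPU's lane budget. This is a simplification: the real split is fixed by the motherboard's wiring, and `allocate_lanes` is a hypothetical helper, not any tool's API.

```python
def allocate_lanes(total_lanes: int, num_gpus: int, widths=(16, 8, 4)):
    """Greedy, simplified model: each GPU gets the widest standard link that fits.

    Real boards have fixed wiring (e.g. x16/x8), so treat this as an approximation.
    Returns (list of link widths per GPU, lanes left over).
    """
    remaining = total_lanes
    links = []
    for _ in range(num_gpus):
        # Widest width that still fits; 0 means no lanes are left for this card.
        width = next((w for w in widths if w <= remaining), 0)
        links.append(width)
        remaining -= width
    return links, remaining


print(allocate_lanes(28, 2))  # i7-5820K: 28 lanes, two cards
print(allocate_lanes(40, 2))  # a 40-lane CPU, for comparison
```

Under this model, 28 lanes give `([16, 8], 4)`: the second card runs at x8 with 4 lanes left over, while a 40-lane CPU would allow x16/x16.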

Few games even implement SLI support, so most of the time that second GPU will be doing absolutely nothing but generating heat. For those games that do support SLI, you'll get approximately a 30% FPS boost at the cost of 100% more money for the second card.
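The value argument above can be put into numbers. This is a hedged sketch that assumes the ~30% SLI scaling and 2x price quoted above, plus a hypothetical `supported_fraction` for the share of your games that use SLI at all. Results are relative to a single card (1.0).

```python
def sli_relative_value(scaling: float = 0.30,
                       price_ratio: float = 2.0,
                       supported_fraction: float = 1.0) -> float:
    """Average performance per dollar of an SLI pair relative to one card (= 1.0).

    scaling: FPS gain in games that support SLI (0.30 means +30%).
    price_ratio: cost of the pair relative to one card.
    supported_fraction: hypothetical share of your games that use SLI at all.
    """
    avg_perf = 1.0 + supported_fraction * scaling  # unsupported games get no boost
    return avg_perf / price_ratio


print(round(sli_relative_value(), 3))                        # every game scales
print(round(sli_relative_value(supported_fraction=0.5), 3))  # half your library scales
```

Even if every game scaled, the pair would deliver about 0.65 of a single card's performance per dollar, and about 0.575 if only half your games support SLI.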

Also, you're much more likely to have stuttering and other performance issues with an SLI rig.

Don't forget that your primary GPU will be starved for cool air and it will most likely run hot, which will induce premature throttling.

Even Nvidia seems to have given up on it: they no longer support triple and quad SLI, and the performance benefits of dual SLI are dubious at best. On the plus side, two GPUs look really cool if you have a windowed case, and they'll definitely keep you warm and toasty in the winter.

So, if you already have the ultimate workstation motherboard, fitted with a pair of water-cooled i7-7700K CPUs and a water-cooled GTX 1080 Ti, and you still have $1k burning a hole in your pocket, by all means install a second 1080 Ti. But for the rest of us, we'd all be much happier with the performance of a single, most powerful GPU within our budget.

Just a thought, but you might consider spending the money for that second GPU on water cooling. IMHO, it looks even more rad than a pair of GPUs. My i7 rig is whisper quiet and runs at 39-40 °C under full load. I don't have any throttling issues due to heat, and my antique GPU still produces satisfactory frame rates because it runs cool as a cucumber.

Here's an interesting thread.

Yep. Newer-generation $50 Intel dual-cores only start to bottleneck once you pair them with a $250 Nvidia GeForce GTX 1060 6GB. Certain games that perform badly with that setup, like Ashes of the Singularity, are simply CPU-bound regardless of the CPU, so the GPU you buy matters very little there.

There was a report by Ars Technica, I think about a year ago, that conclusively showed that a mid-range i5 ($200) does not bottleneck $700 GPUs.

In general, GPUs are not bottlenecked by CPUs but by their own processing capabilities. This effect is amplified in next-gen APIs (Vulkan/DX12), where the CPU matters even less.

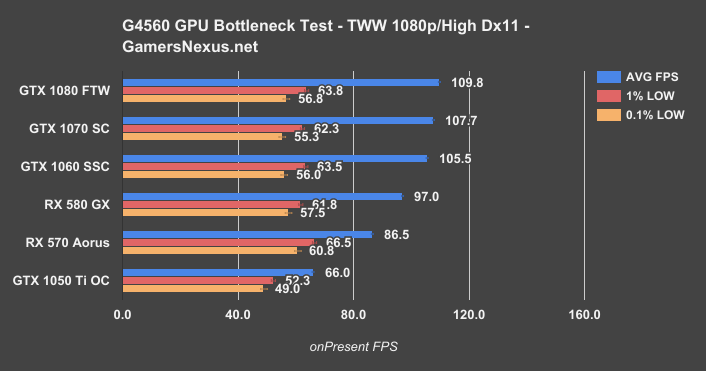

- Intel Pentium G4560 (Dual-Core Kaby Lake @ 3.5 GHz)

- 3200MHz RAM

- 850 Pro SSD

On the system above, this is what a real CPU bottleneck looks like in Total War: Warhammer, and it is fairly typical:

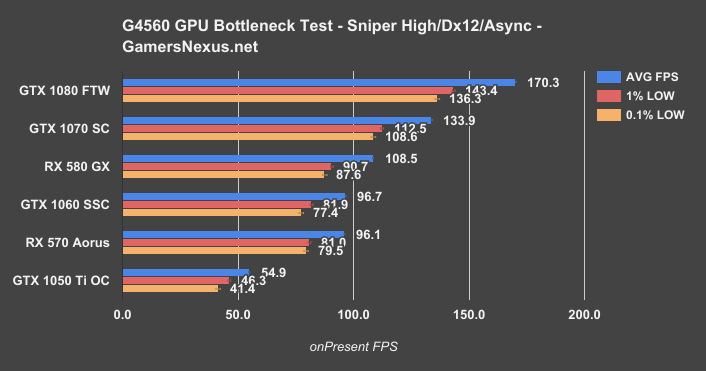

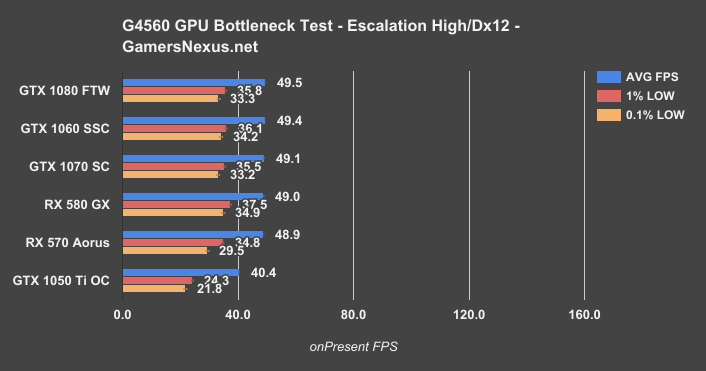

This is what next-gen APIs (Vulkan/DX12) are capable of:

This is a CPU-bound game (basically, any CPU instantly bottlenecks any GPU):

A "CPU-bound game" could also be described as a "synthetic benchmark" that does not utilize the GPU.

The conclusion is obvious: GPUs are rarely bottlenecked by CPUs. The benchmarks above are from a dual-core. Once you get into i5 territory, it is just not an issue.

Link to video: https://www.youtube.com/watch?v=DL7YcPlJ83c

And article: http://www.gamersnexus.net/guides/2913-when-does-the-intel-pentium-g4560-bottleneck-gpu

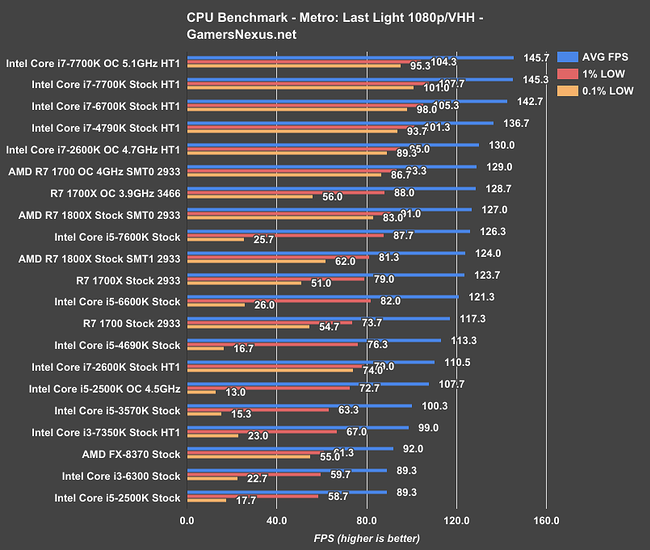

Here is a less extreme example, a 2600K (Quad-Core Sandy Bridge @ 3.4 GHz):

Note the % increase from low to high.

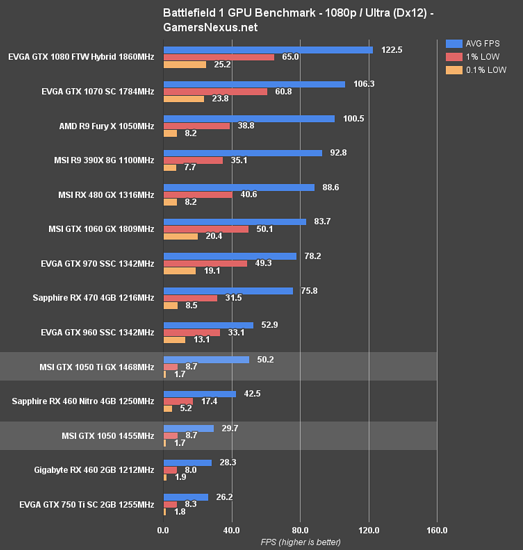

The caveat here is that older i5s do have frame-time issues. Maybe you get 30-40% more performance by investing in a better CPU, but you get 300%+ better performance by investing in a GPU for GPU-bound workloads (like games). Kind of obvious if you think about it that way. Check out the following chart comparing GPUs instead.

This is why gamers usually pair i3s and i5s with very powerful GPUs (GTX 1070+), while productivity users typically run i7s/FXs with very weak GPUs (~GTX 1050).

The performance drop from x16 to x8 PCIe lanes is <1% according to real-world testing. Negligible. That said, while performance scales linearly from single-GPU to dual-GPU for number-crunching (cryptocurrency mining), the gains are marginal for games.
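For scale: PCIe 3.0, which the 5820K and these cards use, signals at 8 GT/s per lane with 128b/130b encoding, so going from x16 to x8 halves the available bandwidth. A quick sketch of that arithmetic (per direction, ignoring packet and protocol overhead):

```python
def pcie3_bandwidth_gbps(lanes: int) -> float:
    """Approximate PCIe 3.0 bandwidth in GB/s, per direction, for a given link width."""
    transfers_per_s = 8e9             # 8 GT/s per lane
    encoding_efficiency = 128 / 130   # 128b/130b line encoding
    bytes_per_lane = transfers_per_s * encoding_efficiency / 8  # 8 bits per byte
    return lanes * bytes_per_lane / 1e9


for lanes in (16, 8):
    print(f"x{lanes}: {pcie3_bandwidth_gbps(lanes):.2f} GB/s")
```

That works out to roughly 15.75 GB/s at x16 versus 7.88 GB/s at x8. Halving the link while losing under 1% in the testing mentioned above suggests games rarely saturate even an x8 link.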

Conclusion: An i7-5820K will not bottleneck any GPU. Get any GPU you want.

A single 1080 Ti has been described as the only current GPU that can handle 4K gaming, and it will likely outperform dual 970s in games due to the diminishing returns of SLI/CrossFire. The caveat is that next-gen APIs change that for certain games like "Sniper" that were written from the ground up to take advantage of those APIs; that game shows linear performance increases under DX12 multi-GPU setups.

edit: typo, edit2: next gen apis/other stuff

I decided on a single 1080 Ti and the new water-cooling packages from EK Water Blocks. Thanks for a great reply, though.