Update in detail: what I did, in chronological order (this is not a how-to guide)

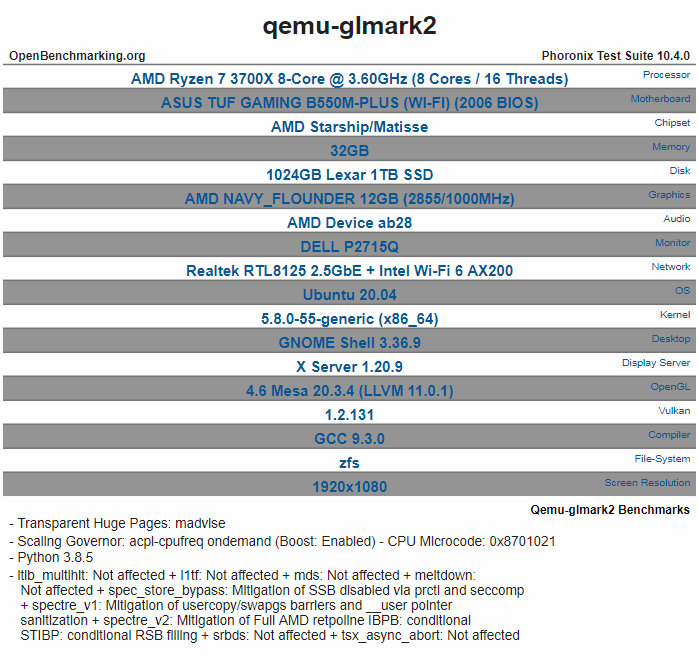

To start with, when my new prebuilt 6700 XT came in, I did a fresh Ubuntu 20 LTS install and updated it, installed the required drivers (they did not install out of the box), and ran a Phoronix benchmark to form a baseline before doing the VM-based tests. For consistency between host and VM, I am limiting the tests to 1920 x 1080 benchmarks only.

PS: if you want to try to replicate this, I would suggest using Ubuntu 18 instead, as a fair bit of backporting was needed to get some drivers to work. For the install steps, refer to the end of the post.

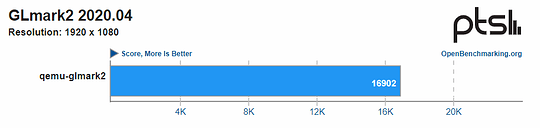

1) Host Specs Benchmark

GLmark2 score: 16,902

Openbenchmark upload: Qemu-glmark2 Benchmarks - OpenBenchmarking.org

There was also a run that managed to hit 18,206, but I was testing around and didn't save the result online.

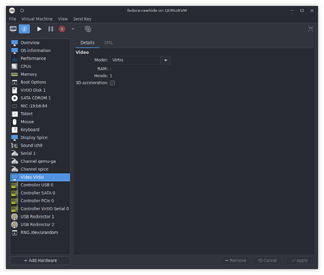

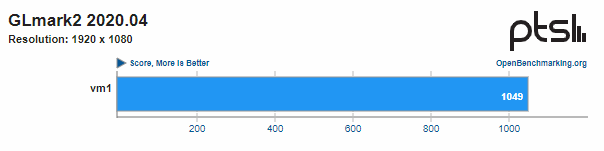

2) VM with “virtio 3D acceleration” option in Virtual Machine Manager

Now that we have the baseline, the next thing was to quickly set up a VM via Virtual Machine Manager with a raw disk, and install Ubuntu on it once again.

Under Virtual Machine Manager, enable virtio and 3D acceleration (screenshot stolen from here, as I forgot to take a screenshot for this step)

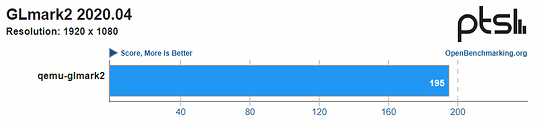

Disappointingly, the Phoronix test got us a potato score of: 195

Openbenchmark Upload: Qemu-glmark2 Benchmarks - OpenBenchmarking.org

From what I understand (I need someone with better knowledge to confirm) this seems to be using the virtio-vga driver, which provides more backwards compatibility at the cost of performance.

Because I could not get the virtio-gpu-pci option to work in the UI, this is where the elbow grease began: first on the XML (failed), then on the command line (worked).

3) Attempting to modify libvirt-server xml files

I'm just going to skip this part … as nothing worked properly. If someone knows how to configure this properly, do let me know.

4) Switching to QEMU command line

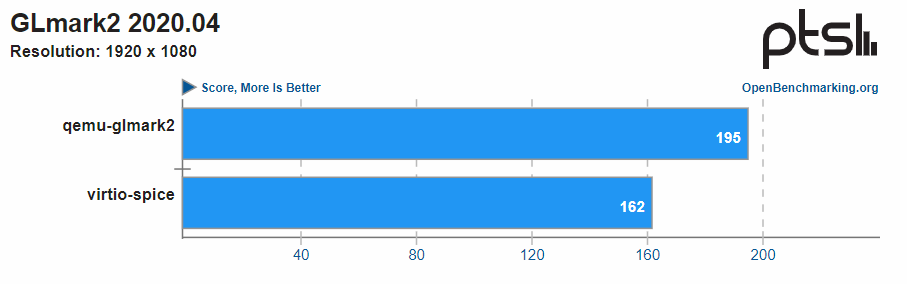

Taking the same raw image file used in step 2, I manually crafted the QEMU command line with the same settings (or as close to them as I could).

Somehow the potato score got worse in the process, even though I managed to get it to switch from the llvmpipe driver to the virgl driver.
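One way to confirm which driver the guest is actually using is to look at the `OpenGL renderer string` line that `glxinfo -B` prints inside the VM. The snippet below is only a minimal sketch of that check: the helper names and the sample strings are made up for illustration and were not captured from my VM.

```python
import re


def renderer_from_glxinfo(glxinfo_output: str) -> str:
    """Return the text after 'OpenGL renderer string:', or '' if the line is absent."""
    match = re.search(r"^OpenGL renderer string:\s*(.+)$", glxinfo_output, re.MULTILINE)
    return match.group(1).strip() if match else ""


def classify_renderer(renderer: str) -> str:
    """Roughly bucket a renderer string into software, virgl, or other."""
    name = renderer.lower()
    if "llvmpipe" in name or "softpipe" in name:
        return "software"  # CPU rendering, no GPU acceleration
    if "virgl" in name:
        return "virgl"     # guest GL calls are forwarded to the host GPU
    return "other"


# Illustrative sample output only (assumption, not taken from the real VM).
sample_software = (
    "OpenGL vendor string: Mesa\n"
    "OpenGL renderer string: llvmpipe (LLVM 12.0.0, 256 bits)\n"
)
sample_virgl = "OpenGL renderer string: virgl\n"

print(classify_renderer(renderer_from_glxinfo(sample_software)))
print(classify_renderer(renderer_from_glxinfo(sample_virgl)))
```

In a real guest, the text would come from running `glxinfo -B` (part of the mesa-utils package on Ubuntu) rather than from hard-coded samples.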

Openbenchmark Upload: Qemu-glmark2 Benchmarks - OpenBenchmarking.org

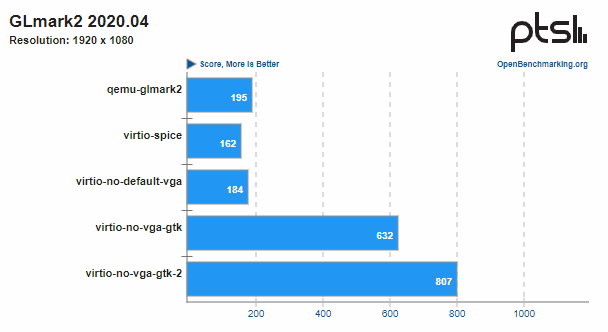

5) Tweaking around at the command line

After testing multiple times, and going back and forth through the settings inside the VM (listing PCIe devices, etc.), I realized that “by default” the VGA GPU is always added, degrading the performance accordingly. So I got that removed.

Well, VNC stopped working once it was removed (I guess it depended on the VGA?). Besides, various examples suggested using virtio-gpu-pci with gtk, so I made the switch as well to use it with spice.

And success! The score is now brought up to: 800

This is comparable to older integrated graphics, and is generally “good enough” for HD video playback and office work. The result however was disappointing from a gaming perspective.
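For anyone trying something similar, here is a rough sketch of the kind of argument list this step ends up with: the default VGA device turned off, and a virgl-enabled virtio-gpu-pci device with a GL-capable gtk display added. This is not my exact command line. The function name, disk path, memory and CPU values are placeholders, and the spice part is left out. The flags are written for the older QEMU that ships with Ubuntu 20; as far as I know, newer QEMU releases expose the GL-capable device as `virtio-gpu-gl-pci` instead.

```python
import shlex
from typing import List


def build_qemu_args(disk_image: str, memory_mib: int = 4096, cpus: int = 2) -> List[str]:
    """Build a QEMU argument list without the default VGA and with a virgl GPU.

    All default values here are placeholders, not the settings from the post.
    """
    return [
        "qemu-system-x86_64",
        "-enable-kvm",
        "-m", str(memory_mib),
        "-smp", str(cpus),
        "-drive", f"file={disk_image},format=raw,if=virtio",
        "-vga", "none",                         # drop the VGA device QEMU adds by default
        "-device", "virtio-gpu-pci,virgl=on",   # paravirtual GPU with virgl 3D
        "-display", "gtk,gl=on",                # GL-enabled local window
    ]


# Hypothetical image name; print the command instead of running it.
args = build_qemu_args("ubuntu-vm.img")
print(shlex.join(args))
```

Building the list in code rather than typing it by hand makes it easier to flip one setting at a time between benchmark runs.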

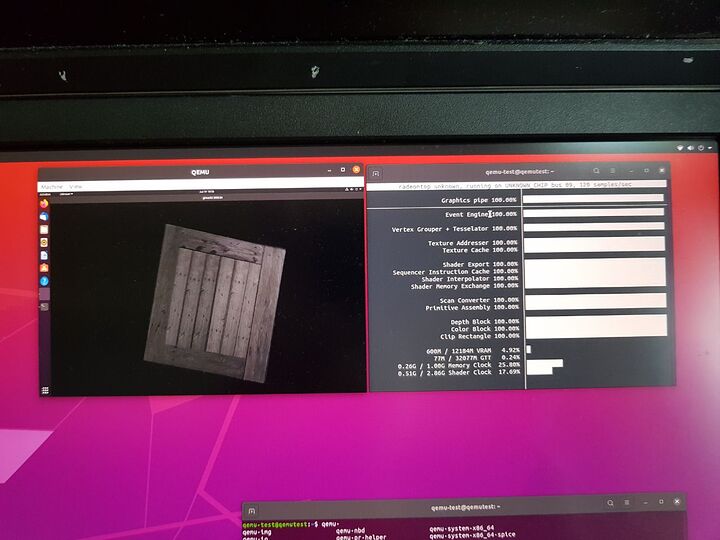

However, what I noticed was that when putting the VM through its paces over multiple test runs, at no point was the GPU ever fully loaded. So time for the next part …

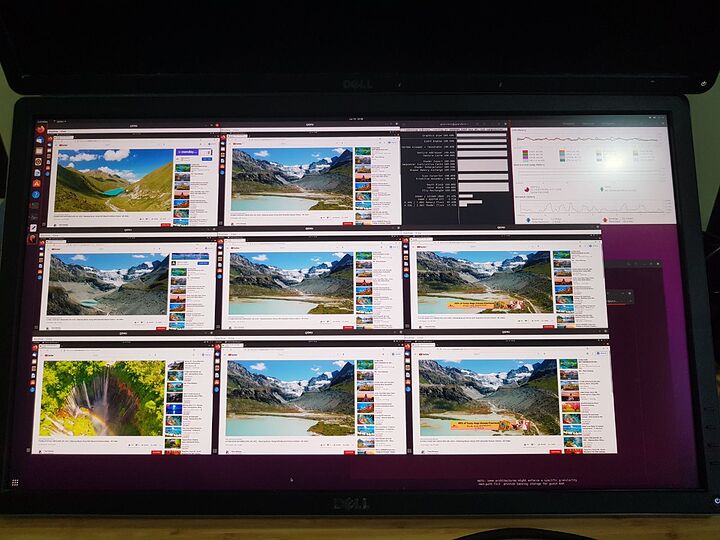

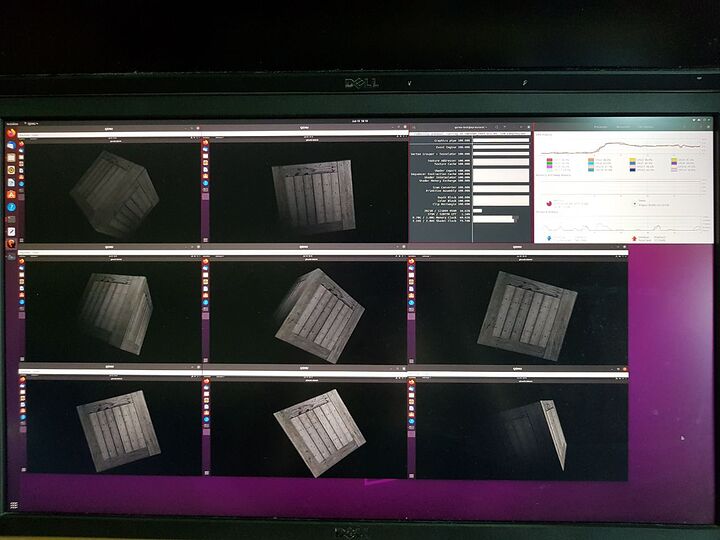

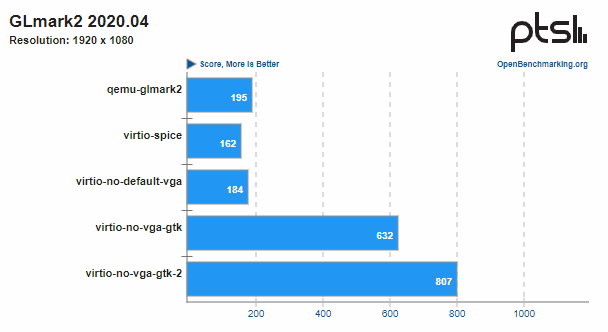

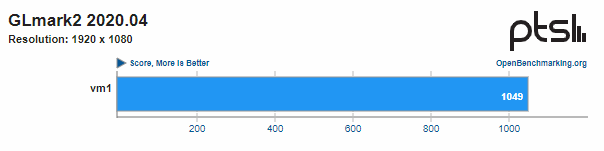

6) Run 8 VMs with glmark2 at the same time!

Note that I suspect I might be able to push it slightly higher, as the GPU clock was not saturated at 100% most of the time, but at this point I’m slowly running out of RAM.

Here is the confusing part: it actually performed better. On average, the scores for all 8 instances were between 900 and 1050.

I have validated this increase by rerunning all 8 tests twice, and the single test separately.

Openbenchmark Upload: Qemu-glmark2-1-of-8 Benchmarks - OpenBenchmarking.org

I suspect what is happening here is that by pushing the GPU closer to its limit, it exits power-saving mode, or something similar. But who knows =/

This, however, gives the VMs a total combined score of about 8k (1k * 8), which is half of the host's 16k, so about 50% efficiency (napkin math). If anything, at this point I'm potentially RAM bound rather than GPU bound in my current setup.

So not bad for a start I guess?
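The napkin math can be spelled out with the unrounded numbers from this post: the host score of 16,902, the single-VM score of 800, and the 900 to 1050 range seen across the 8 instances. This is just arithmetic on those figures, and the variable names are my own.

```python
HOST_SCORE = 16_902          # glmark2 score on the host
SINGLE_VM_SCORE = 800        # best single-VM score with virtio-gpu-pci
VM_COUNT = 8
PER_VM_LOW, PER_VM_HIGH = 900, 1050   # range seen across the 8 instances


def fraction_of_host(score: float) -> float:
    """Express a glmark2 score as a fraction of the host baseline."""
    return score / HOST_SCORE


single = fraction_of_host(SINGLE_VM_SCORE)
combined_low = fraction_of_host(VM_COUNT * PER_VM_LOW)
combined_high = fraction_of_host(VM_COUNT * PER_VM_HIGH)

print(f"single VM: {single:.1%}")                                  # single VM: 4.7%
print(f"8 VMs combined: {combined_low:.1%} to {combined_high:.1%}")  # 8 VMs combined: 42.6% to 49.7%
```

So the "about 50%" figure sits at the optimistic end of the range, with the rounded 1k * 8 case landing at roughly 47% of the host score.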

7) Run other workloads, like YouTube and stuff

One thing to note: by default, Firefox does not enable GPU acceleration on Linux installs, so go into the config and enable it. Once that was enabled, things worked smoothly.

Fun aside: I misconfigured the original RAM limits, and when opening the browsers, they happily took up large chunks of RAM and started crashing one VM after another. Meaning that starting the browser takes up WAY more RAM than running GPU benchmarks (hahaha).
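Since RAM turned out to be the limiting factor, a quick budget check before launching the VMs would probably have saved me a few crashes. The sketch below is only that kind of sanity check. The host size, reserve, and per-VM allocations are placeholder numbers chosen for illustration, not the values from my machine.

```python
def max_vm_count(host_ram_mib: int, host_reserve_mib: int, per_vm_mib: int) -> int:
    """Return how many VMs fit after keeping some RAM back for the host."""
    if per_vm_mib <= 0:
        raise ValueError("per_vm_mib must be positive")
    usable = host_ram_mib - host_reserve_mib
    return max(usable // per_vm_mib, 0)


# Placeholder figures (assumptions): a 32 GiB host with 4 GiB kept for the host itself.
benchmark_only = max_vm_count(32_768, 4_096, 2_048)   # small VMs running only glmark2
with_browser = max_vm_count(32_768, 4_096, 4_096)     # larger VMs that also run a browser

print(benchmark_only)  # 14
print(with_browser)    # 7
```

The point is simply that doubling the per-VM allocation for browser workloads halves the number of VMs that fit, which matches what I ran into.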