Not yet, what’s the grub option and I’ll give it a shot via SSH?

On the same line in the GRUB config, add your hardware IDs, so it’s something like:

GRUB_CMDLINE_LINUX=" iommu=1 amd_iommu=on rd.driver.pre=vfio-pci vfio-pci.ids=10de:17c8,10de:0fb0"

Like this?

GRUB_CMDLINE_LINUX="iommu=1 amd_iommu=on iommu=pt rd.driver.pre=vfio-pci vfio-pci.ids=1002:687F,1002:aaf8"

Oddly, my GPU is still being used as normal even after update-grub. These are 100% the right device IDs. See: PCI\VEN_1002&DEV_687F - Vega 10 XL/XT [Radeon RX Vega… | Device Hunt
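Rather than looking the IDs up online, the host can print them itself: lspci -nn ends each line with the [vendor:device] pair. A minimal sketch, assuming the card sits at slot 0b:00 as in the dmesg output in this thread; the helper name ids_from_lspci is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: read `lspci -nn` output on stdin and print the
# vendor:device pairs as one comma-separated list, in the form used by
# vfio-pci.ids=... on the kernel command line.
ids_from_lspci() {
    # The [vvvv:dddd] pair is the only bracketed token of that shape;
    # the class code ([0300]) and vendor name ([AMD/ATI]) do not match.
    grep -o '\[[0-9a-fA-F]\{4\}:[0-9a-fA-F]\{4\}\]' |
        tr -d '[]' |
        paste -s -d, -
}

# Usage on the host, covering every function of the card at 0b:00:
#   lspci -nn -s 0b:00 | ids_from_lspci
```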

That looks right, and on my system it reserves them for VFIO; lspci shows Kernel driver in use: vfio-pci.
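To see which driver actually claimed each function after boot, lspci -nnk prints a "Kernel driver in use:" line per device. A small sketch that pulls that line out for one slot (hypothetical helper name; the slot is assumed to be 0b:00.0 as in this thread). vfio-pci means the reservation worked, amdgpu means the host driver got there first:

```shell
#!/bin/bash
# Hypothetical helper: given `lspci -nnk` output on stdin, print the
# "Kernel driver in use" for one slot (e.g. 0b:00.0), or "none".
driver_in_use() {
    awk -v slot="$1" '
        $1 == slot           { inblk = 1; next }   # start of this device block
        inblk && /^[^ \t]/   { inblk = 0 }         # next device: block ended
        inblk && /Kernel driver in use:/ {
            sub(/.*Kernel driver in use:[ \t]*/, "")
            print; found = 1; exit
        }
        END { if (!found) print "none" }
    '
}

# Usage:
#   lspci -nnk -s 0b:00.0 | driver_in_use 0b:00.0
```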

Not sure what else to try.

Might it be something to do with efifb?

Is there any mention of the IDs or the PCI address in dmesg that shows what is or isn’t working at boot time?
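One way to answer that from a saved log: kernel driver messages are prefixed with the driver name and the PCI address, so listing which drivers logged against 0000:0b:00.0 shows who touched the card. A sketch; drivers_for_address is a hypothetical name, and expecting vfio-pci on a working boot versus amdgpu on a failing one is an assumption:

```shell
#!/bin/bash
# Hypothetical helper: read dmesg text on stdin and list which drivers
# printed messages tagged with a given PCI address (e.g. 0000:0b:00.0).
drivers_for_address() {
    # Device messages look like "[ 1.847293] amdgpu 0000:0b:00.0: ...";
    # lines that merely mention the address elsewhere are skipped.
    grep -F " $1: " |
        sed -n 's/^\[ *[0-9.]*\] \([A-Za-z0-9_-]*\) .*/\1/p' |
        sort -u
}

# Usage:
#   sudo dmesg | drivers_for_address 0000:0b:00.0
```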

I’m now 100% convinced my GPU is cursed.

Here’s some dmesg output, not sure if it’s what you’re looking for:

user@userpc:~$ sudo dmesg | grep 1002

[ 0.000000] Command line: BOOT_IMAGE=/vmlinuz-4.19.0-13-amd64 root=/dev/mapper/userpc-lv_root ro iommu=1 amd_iommu=on iommu=pt rd.driver.pre=vfio-pci vfio-pci.ids=1002:687F,1002:aaf8 quiet loglevel=3 fsck.mode=auto

[ 0.172355] Kernel command line: BOOT_IMAGE=/vmlinuz-4.19.0-13-amd64 root=/dev/mapper/userpc-lv_root ro iommu=1 amd_iommu=on iommu=pt rd.driver.pre=vfio-pci vfio-pci.ids=1002:687F,1002:aaf8 quiet loglevel=3 fsck.mode=auto

[ 0.503827] pci 0000:0b:00.0: [1002:687f] type 00 class 0x030000

[ 0.504008] pci 0000:0b:00.1: [1002:aaf8] type 00 class 0x040300

[ 1.847255] [drm] initializing kernel modesetting (VEGA10 0x1002:0x687F 0x1002:0x0B36 0xC3).

[ 2.433841] Topology: Add dGPU node [0x687f:0x1002]

[ 2.433903] kfd kfd: added device 1002:687f

user@userpc:~$ sudo dmesg | grep 0000:0b:00

[ 0.503827] pci 0000:0b:00.0: [1002:687f] type 00 class 0x030000

[ 0.503850] pci 0000:0b:00.0: reg 0x10: [mem 0xd0000000-0xdfffffff 64bit pref]

[ 0.503859] pci 0000:0b:00.0: reg 0x18: [mem 0xe0000000-0xe01fffff 64bit pref]

[ 0.503865] pci 0000:0b:00.0: reg 0x20: [io 0xf000-0xf0ff]

[ 0.503871] pci 0000:0b:00.0: reg 0x24: [mem 0xfcc00000-0xfcc7ffff]

[ 0.503878] pci 0000:0b:00.0: reg 0x30: [mem 0xfcc80000-0xfcc9ffff pref]

[ 0.503890] pci 0000:0b:00.0: BAR 0: assigned to efifb

[ 0.503935] pci 0000:0b:00.0: PME# supported from D1 D2 D3hot D3cold

[ 0.504008] pci 0000:0b:00.1: [1002:aaf8] type 00 class 0x040300

[ 0.504024] pci 0000:0b:00.1: reg 0x10: [mem 0xfcca0000-0xfcca3fff]

[ 0.504099] pci 0000:0b:00.1: PME# supported from D1 D2 D3hot D3cold

[ 0.508847] pci 0000:0b:00.0: vgaarb: setting as boot VGA device

[ 0.508847] pci 0000:0b:00.0: vgaarb: VGA device added: decodes=io+mem,owns=io+mem,locks=none

[ 0.508847] pci 0000:0b:00.0: vgaarb: bridge control possible

[ 0.535543] pci 0000:0b:00.0: Video device with shadowed ROM at [mem 0x000c0000-0x000dffff]

[ 0.535550] pci 0000:0b:00.1: Linked as a consumer to 0000:0b:00.0

[ 0.535551] pci 0000:0b:00.1: D0 power state depends on 0000:0b:00.0

[ 1.152550] iommu: Adding device 0000:0b:00.0 to group 24

[ 1.152610] iommu: Using direct mapping for device 0000:0b:00.0

[ 1.152695] iommu: Adding device 0000:0b:00.1 to group 25

[ 1.152712] iommu: Using direct mapping for device 0000:0b:00.1

[ 1.847293] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_gpu_info.bin

[ 1.847317] amdgpu 0000:0b:00.0: No more image in the PCI ROM

[ 1.847353] amdgpu 0000:0b:00.0: VRAM: 8176M 0x000000F400000000 - 0x000000F5FEFFFFFF (8176M used)

[ 1.847354] amdgpu 0000:0b:00.0: GART: 512M 0x000000F600000000 - 0x000000F61FFFFFFF

[ 1.847657] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_sos.bin

[ 1.847671] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_asd.bin

[ 1.847706] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_acg_smc.bin

[ 1.847726] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_pfp.bin

[ 1.847736] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_me.bin

[ 1.847746] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_ce.bin

[ 1.847755] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_rlc.bin

[ 1.847793] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_mec.bin

[ 1.847830] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_mec2.bin

[ 1.848455] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_sdma.bin

[ 1.848467] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_sdma1.bin

[ 1.848578] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_uvd.bin

[ 1.849121] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_vce.bin

[ 2.528033] amdgpu 0000:0b:00.0: fb0: amdgpudrmfb frame buffer device

[ 2.540371] amdgpu 0000:0b:00.0: ring 0(gfx) uses VM inv eng 4 on hub 0

[ 2.540372] amdgpu 0000:0b:00.0: ring 1(comp_1.0.0) uses VM inv eng 5 on hub 0

[ 2.540373] amdgpu 0000:0b:00.0: ring 2(comp_1.1.0) uses VM inv eng 6 on hub 0

[ 2.540374] amdgpu 0000:0b:00.0: ring 3(comp_1.2.0) uses VM inv eng 7 on hub 0

[ 2.540374] amdgpu 0000:0b:00.0: ring 4(comp_1.3.0) uses VM inv eng 8 on hub 0

[ 2.540375] amdgpu 0000:0b:00.0: ring 5(comp_1.0.1) uses VM inv eng 9 on hub 0

[ 2.540376] amdgpu 0000:0b:00.0: ring 6(comp_1.1.1) uses VM inv eng 10 on hub 0

[ 2.540377] amdgpu 0000:0b:00.0: ring 7(comp_1.2.1) uses VM inv eng 11 on hub 0

[ 2.540377] amdgpu 0000:0b:00.0: ring 8(comp_1.3.1) uses VM inv eng 12 on hub 0

[ 2.540378] amdgpu 0000:0b:00.0: ring 9(kiq_2.1.0) uses VM inv eng 13 on hub 0

[ 2.540379] amdgpu 0000:0b:00.0: ring 10(sdma0) uses VM inv eng 4 on hub 1

[ 2.540380] amdgpu 0000:0b:00.0: ring 11(sdma1) uses VM inv eng 5 on hub 1

[ 2.540380] amdgpu 0000:0b:00.0: ring 12(uvd<0>) uses VM inv eng 6 on hub 1

[ 2.540381] amdgpu 0000:0b:00.0: ring 13(uvd_enc0<0>) uses VM inv eng 7 on hub 1

[ 2.540382] amdgpu 0000:0b:00.0: ring 14(uvd_enc1<0>) uses VM inv eng 8 on hub 1

[ 2.540382] amdgpu 0000:0b:00.0: ring 15(vce0) uses VM inv eng 9 on hub 1

[ 2.540383] amdgpu 0000:0b:00.0: ring 16(vce1) uses VM inv eng 10 on hub 1

[ 2.540384] amdgpu 0000:0b:00.0: ring 17(vce2) uses VM inv eng 11 on hub 1

[ 2.541241] [drm] Initialized amdgpu 3.27.0 20150101 for 0000:0b:00.0 on minor 0

[ 15.243902] snd_hda_intel 0000:0b:00.1: Handle vga_switcheroo audio client

[ 15.283308] input: HD-Audio Generic HDMI/DP,pcm=3 as /devices/pci0000:00/0000:00:03.1/0000:09:00.0/0000:0a:00.0/0000:0b:00.1/sound/card0/input12

[ 15.283391] input: HD-Audio Generic HDMI/DP,pcm=7 as /devices/pci0000:00/0000:00:03.1/0000:09:00.0/0000:0a:00.0/0000:0b:00.1/sound/card0/input13

[ 15.283483] input: HD-Audio Generic HDMI/DP,pcm=8 as /devices/pci0000:00/0000:00:03.1/0000:09:00.0/0000:0a:00.0/0000:0b:00.1/sound/card0/input14

[ 15.283538] input: HD-Audio Generic HDMI/DP,pcm=9 as /devices/pci0000:00/0000:00:03.1/0000:09:00.0/0000:0a:00.0/0000:0b:00.1/sound/card0/input15

[ 15.283643] input: HD-Audio Generic HDMI/DP,pcm=10 as /devices/pci0000:00/0000:00:03.1/0000:09:00.0/0000:0a:00.0/0000:0b:00.1/sound/card0/input16

[ 15.283746] input: HD-Audio Generic HDMI/DP,pcm=11 as /devices/pci0000:00/0000:00:03.1/0000:09:00.0/0000:0a:00.0/0000:0b:00.1/sound/card0/input17

You might try turning off efifb, or whatever the option is, in the GRUB command line?

video=efifb:off

From here:

Nope. It’s still starting as normal.

Grub config:

GRUB_CMDLINE_LINUX="iommu=1 amd_iommu=on iommu=pt rd.driver.pre=vfio-pci vfio-pci.ids=1002:687F,1002:aaf8 video=efifb:off"
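When editing /etc/default/grub over SSH, it is easy to end up with a parameter twice. A minimal sketch of an idempotent edit, assuming all options live on a single GRUB_CMDLINE_LINUX="..." line as in the configs posted here; add_kernel_param is a hypothetical name, and update-grub still has to be run afterwards:

```shell
#!/bin/bash
# Hypothetical helper: append one parameter to GRUB_CMDLINE_LINUX in a
# grub defaults file, unless an identical parameter is already present.
add_kernel_param() {
    local file=$1 param=$2 current esc
    # Current value between the double quotes.
    current=$(sed -n 's/^GRUB_CMDLINE_LINUX="\(.*\)"$/\1/p' "$file")
    case " $current " in
        *" $param "*) return 0 ;;   # already there, nothing to do
    esac
    # Escape characters that are special in a sed replacement (\ & /).
    esc=$(printf '%s' "$param" | sed 's|[\\&/]|\\&|g')
    sed "s/^\(GRUB_CMDLINE_LINUX=\"[^\"]*\)\"\$/\1 $esc\"/" "$file" > "$file.tmp" &&
        mv "$file.tmp" "$file"
}

# Usage (as root), then regenerate grub.cfg:
#   add_kernel_param /etc/default/grub video=efifb:off && update-grub
```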

Here are the dmesg logs again:

user@userpc:~$ sudo dmesg | grep 1002

[sudo] password for user:

[ 0.000000] Command line: BOOT_IMAGE=/vmlinuz-4.19.0-13-amd64 root=/dev/mapper/userpc-lv_root ro iommu=1 amd_iommu=on iommu=pt rd.driver.pre=vfio-pci vfio-pci.ids=1002:687F,1002:aaf8 video=efifb:off quiet loglevel=3 fsck.mode=auto

[ 0.169958] Kernel command line: BOOT_IMAGE=/vmlinuz-4.19.0-13-amd64 root=/dev/mapper/userpc-lv_root ro iommu=1 amd_iommu=on iommu=pt rd.driver.pre=vfio-pci vfio-pci.ids=1002:687F,1002:aaf8 video=efifb:off quiet loglevel=3 fsck.mode=auto

[ 0.505647] pci 0000:0b:00.0: [1002:687f] type 00 class 0x030000

[ 0.505829] pci 0000:0b:00.1: [1002:aaf8] type 00 class 0x040300

[ 1.851449] [drm] initializing kernel modesetting (VEGA10 0x1002:0x687F 0x1002:0x0B36 0xC3).

[ 2.439143] Topology: Add dGPU node [0x687f:0x1002]

[ 2.439206] kfd kfd: added device 1002:687f

user@userpc:~$ sudo dmesg | grep 0000:0b:00

[ 0.505647] pci 0000:0b:00.0: [1002:687f] type 00 class 0x030000

[ 0.505671] pci 0000:0b:00.0: reg 0x10: [mem 0xd0000000-0xdfffffff 64bit pref]

[ 0.505680] pci 0000:0b:00.0: reg 0x18: [mem 0xe0000000-0xe01fffff 64bit pref]

[ 0.505686] pci 0000:0b:00.0: reg 0x20: [io 0xf000-0xf0ff]

[ 0.505692] pci 0000:0b:00.0: reg 0x24: [mem 0xfcc00000-0xfcc7ffff]

[ 0.505698] pci 0000:0b:00.0: reg 0x30: [mem 0xfcc80000-0xfcc9ffff pref]

[ 0.505711] pci 0000:0b:00.0: BAR 0: assigned to efifb

[ 0.505757] pci 0000:0b:00.0: PME# supported from D1 D2 D3hot D3cold

[ 0.505829] pci 0000:0b:00.1: [1002:aaf8] type 00 class 0x040300

[ 0.505845] pci 0000:0b:00.1: reg 0x10: [mem 0xfcca0000-0xfcca3fff]

[ 0.505920] pci 0000:0b:00.1: PME# supported from D1 D2 D3hot D3cold

[ 0.510211] pci 0000:0b:00.0: vgaarb: setting as boot VGA device

[ 0.510211] pci 0000:0b:00.0: vgaarb: VGA device added: decodes=io+mem,owns=io+mem,locks=none

[ 0.510211] pci 0000:0b:00.0: vgaarb: bridge control possible

[ 0.536690] pci 0000:0b:00.0: Video device with shadowed ROM at [mem 0x000c0000-0x000dffff]

[ 0.536697] pci 0000:0b:00.1: Linked as a consumer to 0000:0b:00.0

[ 0.536697] pci 0000:0b:00.1: D0 power state depends on 0000:0b:00.0

[ 1.149254] iommu: Adding device 0000:0b:00.0 to group 24

[ 1.149315] iommu: Using direct mapping for device 0000:0b:00.0

[ 1.149398] iommu: Adding device 0000:0b:00.1 to group 25

[ 1.149415] iommu: Using direct mapping for device 0000:0b:00.1

[ 1.851489] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_gpu_info.bin

[ 1.851514] amdgpu 0000:0b:00.0: No more image in the PCI ROM

[ 1.851549] amdgpu 0000:0b:00.0: VRAM: 8176M 0x000000F400000000 - 0x000000F5FEFFFFFF (8176M used)

[ 1.851550] amdgpu 0000:0b:00.0: GART: 512M 0x000000F600000000 - 0x000000F61FFFFFFF

[ 1.851838] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_sos.bin

[ 1.851852] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_asd.bin

[ 1.851888] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_acg_smc.bin

[ 1.851907] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_pfp.bin

[ 1.851918] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_me.bin

[ 1.851926] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_ce.bin

[ 1.851935] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_rlc.bin

[ 1.851971] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_mec.bin

[ 1.852006] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_mec2.bin

[ 1.852606] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_sdma.bin

[ 1.852617] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_sdma1.bin

[ 1.852724] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_uvd.bin

[ 1.853260] amdgpu 0000:0b:00.0: firmware: direct-loading firmware amdgpu/vega10_vce.bin

[ 2.533308] amdgpu 0000:0b:00.0: fb0: amdgpudrmfb frame buffer device

[ 2.545680] amdgpu 0000:0b:00.0: ring 0(gfx) uses VM inv eng 4 on hub 0

[ 2.545681] amdgpu 0000:0b:00.0: ring 1(comp_1.0.0) uses VM inv eng 5 on hub 0

[ 2.545682] amdgpu 0000:0b:00.0: ring 2(comp_1.1.0) uses VM inv eng 6 on hub 0

[ 2.545683] amdgpu 0000:0b:00.0: ring 3(comp_1.2.0) uses VM inv eng 7 on hub 0

[ 2.545683] amdgpu 0000:0b:00.0: ring 4(comp_1.3.0) uses VM inv eng 8 on hub 0

[ 2.545684] amdgpu 0000:0b:00.0: ring 5(comp_1.0.1) uses VM inv eng 9 on hub 0

[ 2.545685] amdgpu 0000:0b:00.0: ring 6(comp_1.1.1) uses VM inv eng 10 on hub 0

[ 2.545685] amdgpu 0000:0b:00.0: ring 7(comp_1.2.1) uses VM inv eng 11 on hub 0

[ 2.545686] amdgpu 0000:0b:00.0: ring 8(comp_1.3.1) uses VM inv eng 12 on hub 0

[ 2.545687] amdgpu 0000:0b:00.0: ring 9(kiq_2.1.0) uses VM inv eng 13 on hub 0

[ 2.545688] amdgpu 0000:0b:00.0: ring 10(sdma0) uses VM inv eng 4 on hub 1

[ 2.545688] amdgpu 0000:0b:00.0: ring 11(sdma1) uses VM inv eng 5 on hub 1

[ 2.545689] amdgpu 0000:0b:00.0: ring 12(uvd<0>) uses VM inv eng 6 on hub 1

[ 2.545690] amdgpu 0000:0b:00.0: ring 13(uvd_enc0<0>) uses VM inv eng 7 on hub 1

[ 2.545690] amdgpu 0000:0b:00.0: ring 14(uvd_enc1<0>) uses VM inv eng 8 on hub 1

[ 2.545691] amdgpu 0000:0b:00.0: ring 15(vce0) uses VM inv eng 9 on hub 1

[ 2.545692] amdgpu 0000:0b:00.0: ring 16(vce1) uses VM inv eng 10 on hub 1

[ 2.545693] amdgpu 0000:0b:00.0: ring 17(vce2) uses VM inv eng 11 on hub 1

[ 2.546554] [drm] Initialized amdgpu 3.27.0 20150101 for 0000:0b:00.0 on minor 0

[ 14.013683] snd_hda_intel 0000:0b:00.1: Handle vga_switcheroo audio client

[ 14.026813] input: HD-Audio Generic HDMI/DP,pcm=3 as /devices/pci0000:00/0000:00:03.1/0000:09:00.0/0000:0a:00.0/0000:0b:00.1/sound/card0/input12

[ 14.026871] input: HD-Audio Generic HDMI/DP,pcm=7 as /devices/pci0000:00/0000:00:03.1/0000:09:00.0/0000:0a:00.0/0000:0b:00.1/sound/card0/input13

[ 14.026953] input: HD-Audio Generic HDMI/DP,pcm=8 as /devices/pci0000:00/0000:00:03.1/0000:09:00.0/0000:0a:00.0/0000:0b:00.1/sound/card0/input14

[ 14.027029] input: HD-Audio Generic HDMI/DP,pcm=9 as /devices/pci0000:00/0000:00:03.1/0000:09:00.0/0000:0a:00.0/0000:0b:00.1/sound/card0/input15

[ 14.027111] input: HD-Audio Generic HDMI/DP,pcm=10 as /devices/pci0000:00/0000:00:03.1/0000:09:00.0/0000:0a:00.0/0000:0b:00.1/sound/card0/input16

[ 14.027167] input: HD-Audio Generic HDMI/DP,pcm=11 as /devices/pci0000:00/0000:00:03.1/0000:09:00.0/0000:0a:00.0/0000:0b:00.1/sound/card0/input17

I haven’t had time to read through the whole post and I have to take off soon, so hopefully this isn’t pointing you in the wrong direction, but the last “echo” command for both the VGA and audio devices is removing the device IDs from the vfio-pci driver. In that case I would expect either the normal driver (e.g. nouveau, nvidia or whatever) to be loaded afterwards, or the device to have no driver at all. I would remove the last “echo” line for both devices, the one writing to the /sys/bus/pci/drivers/vfio-pci/remove_id file, and see if that works. If it doesn’t, I’d also remove the /sys/bus/pci/drivers/vfio-pci/new_id command. I’m new to this, but from my research I think you only need to use the PCI bus address OR the device ID, not both, so:

First, unload the currently loaded driver (e.g. nvidia):

echo '0000:0b:00.0' > /sys/bus/pci/devices/0000:0b:00.0/driver/unbind

then load the vfio-pci driver:

echo '0000:0b:00.0' > /sys/bus/pci/drivers/vfio-pci/bind

This is all that I think is needed.
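As far as I know, writing an address to /sys/bus/pci/drivers/vfio-pci/bind only works if vfio-pci already matches that device (through vfio-pci.ids, new_id, or a driver_override). The driver_override route is the one that needs only the PCI address. A sketch, assuming the vfio-pci module is already loaded; bind_to_vfio is a hypothetical name, and SYSFS_ROOT exists only so the function can be tried against a scratch directory:

```shell
#!/bin/bash
# Hypothetical helper: hand one PCI function (e.g. 0000:0b:00.0) to
# vfio-pci using the kernel's driver_override mechanism.
# SYSFS_ROOT defaults to /sys; it is overridable only for testing.
bind_to_vfio() {
    local addr=$1
    local sys=${SYSFS_ROOT:-/sys}
    local dev="$sys/bus/pci/devices/$addr"

    # 1. Detach whatever driver currently owns the device (amdgpu, ...).
    if [ -e "$dev/driver" ]; then
        echo "$addr" > "$dev/driver/unbind" || return 1
    fi
    # 2. Tell the PCI core that only vfio-pci may claim this device.
    echo vfio-pci > "$dev/driver_override" || return 1
    # 3. Ask the PCI core to re-probe it, which now picks vfio-pci.
    echo "$addr" > "$sys/bus/pci/drivers_probe"
}

# Usage (as root), both functions of the card:
#   bind_to_vfio 0000:0b:00.0 && bind_to_vfio 0000:0b:00.1
```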

One other thing of note in general (i.e. it doesn’t apply to this specific case): you can also use “virsh” commands to accomplish the same thing, but I’ve been having problems where the first time I use a “virsh” command it cannot find the device and fails; for some reason, the second or third time it is able to locate the device and works. So if you use “virsh” commands in a script, check whether they succeeded and, if not, try again. My suggestion is a loop that tries up to 5 times.
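The retry idea above might look like this as a small shell function (hypothetical name retry; the attempt count and delay are arbitrary):

```shell
#!/bin/bash
# Hypothetical helper: run a command up to N times, pausing between
# attempts, and succeed as soon as one attempt succeeds.
# Usage: retry <attempts> <delay-seconds> <command> [args...]
retry() {
    local tries=$1 delay=$2 n=1
    shift 2
    until "$@"; do
        if [ "$n" -ge "$tries" ]; then
            echo "giving up after $n attempts: $*" >&2
            return 1
        fi
        n=$((n + 1))
        sleep "$delay"
    done
}

# Usage inside a hook script, 5 attempts one second apart:
#   retry 5 1 virsh nodedev-detach pci_0000_0b_00_0
```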

Sorry bud, I’m out of ideas. Maybe keep bumping the thread every now and then?

Did you ensure vfio-pci is in your initramfs?

echo "vfio-pci" >> /etc/modules

update-initramfs -k all -u

I did not, no. I have added that now to /etc/modules and updated the initramfs.

It’s still loading my GPU as normal though rather than being headless.
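One possible explanation, assuming Debian buster with initramfs-tools: /etc/modules is read by systemd once the root filesystem is mounted, so it does not put vfio-pci into the initramfs, and rd.driver.pre= is a dracut option that initramfs-tools does not act on. With initramfs-tools, modules for the initramfs go in /etc/initramfs-tools/modules, and a modprobe softdep can ask for vfio-pci to be loaded before amdgpu. The sketch below only writes those snippets to a directory you choose (write_vfio_early_config is a hypothetical name) so they can be reviewed before being copied into place:

```shell
#!/bin/bash
# Hypothetical helper: write the config snippets that (on a Debian
# initramfs-tools system) get vfio-pci loaded early and ahead of amdgpu.
# $1 = output directory, $2 = comma-separated vendor:device IDs.
write_vfio_early_config() {
    local out=$1 ids=$2
    mkdir -p "$out" || return 1

    # Destined for /etc/modprobe.d/vfio.conf
    cat > "$out/vfio.conf" <<EOF
options vfio-pci ids=$ids
softdep amdgpu pre: vfio-pci
EOF

    # Lines to append to /etc/initramfs-tools/modules
    cat > "$out/initramfs-modules" <<EOF
vfio
vfio_iommu_type1
vfio_pci
EOF
}

# Usage: generate, review, install as root, rebuild the initramfs:
#   write_vfio_early_config /tmp/vfio 1002:687f,1002:aaf8
#   cp /tmp/vfio/vfio.conf /etc/modprobe.d/
#   cat /tmp/vfio/initramfs-modules >> /etc/initramfs-tools/modules
#   update-initramfs -u -k all
```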

@Copious

I had a similar experience with my RX 580, and something tells me you have the same issue.

Please note that I haven’t read the whole thread, so if something repeats, that’s why.

First things first, in the BIOS: CSM off, SVM on, IOMMU on.

Second, install the usual packages (KVM, OVMF, etc.), and make sure you have the following entries in your GRUB boot entry:

amd_iommu=on iommu=pt

And, as always, run update-grub afterwards.
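After rebooting, it is worth confirming the IOMMU is actually on and that the GPU and its audio function are in groups of their own (the dmesg output earlier in the thread shows groups 24 and 25). A common check walks /sys/kernel/iommu_groups; a sketch, with a hypothetical SYSFS_ROOT override for testing:

```shell
#!/bin/bash
# Hypothetical helper: print "group <n>: <pci address>" for every device
# the IOMMU driver has placed in a group. Empty output usually means
# the IOMMU is not enabled.
list_iommu_groups() {
    local sys=${SYSFS_ROOT:-/sys} dev group
    for dev in "$sys"/kernel/iommu_groups/*/devices/*; do
        [ -e "$dev" ] || continue          # glob matched nothing
        group=${dev%/devices/*}            # .../iommu_groups/<n>
        group=${group##*/}                 # <n>
        echo "group $group: ${dev##*/}"
    done
}

# Usage on the host, sorted numerically by group:
#   list_iommu_groups | sort -k2,2n
```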

Now we can say that you are ready to pass through your GPU. As you probably saw already, most tutorials are for NVIDIA and there are not many for AMD; because of that, I created one myself (call this a shameless plug).

Start off by following this tutorial that was created for NVIDIA: nvidia-single-gpu-with-performance-tricks

Then, once all that is in place, use my tutorial for your AMD GPU: shameless-plug

Now, the gotchas that bit me before I solved everything were apps that were still using the GPU in one way or another, and/or modules that would not unload, like amdgpu or its sound counterpart.

I am using KDE on Debian testing.

Please let me know if you need help, as I am almost sure I can help you with this.

EDIT: This post was written from my macOS VM, which had already been shut down and started back up using @gnif’s vendor-reset module. It really does work.

I did not have CSM off. I have now switched it off in the BIOS. Otherwise all the BIOS settings are correct. Likewise all the GRUB settings are correct, you can see in my earlier posts.

I think I will switch to your script but I’m still not able to unload the amdgpu module.

This is where the problem lies. It’s annoyingly hard to kill GDM/GNOME running under Wayland. I’ve tried killall gdm-wayland-session, but it reports there’s no process with that name, even though there is one. I’m guessing I’m using killall wrong in that case, but that is the process name.
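One possible cause: the kernel stores only the first 15 characters of a process name (/proc/<pid>/comm), so gdm-wayland-session is recorded as gdm-wayland-ses. pgrep and pkill match against that truncated name by default, while -f matches the full command line instead. A sketch with hypothetical helper names:

```shell
#!/bin/bash
# Hypothetical helpers illustrating the 15-character process-name limit.

# Print the name as the kernel stores it in /proc/<pid>/comm
# (TASK_COMM_LEN is 16 bytes including the terminating NUL).
comm_name() {
    printf '%.15s\n' "$1"
}

# Signal processes by full command line instead of the truncated name.
# Careful: -f also matches any other process whose arguments contain
# the pattern, so keep the pattern specific.
kill_by_cmdline() {
    pkill -f -- "$1"
}

# Usage:
#   comm_name gdm-wayland-session        # what pgrep/pkill see by default
#   kill_by_cmdline gdm-wayland-session
```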

If I simply don’t login at the GDM screen and run systemctl stop gdm3 it does stop the display manager but I still can’t unload the amdgpu module: modprobe: FATAL: Module amdgpu is in use.

Not really sure how to identify what is holding the GPU hostage?

I had that issue before. You can see which other modules are using amdgpu with the following (always use sudo):

lsmod | grep <module name>

Also, I don’t know about Wayland, but usually you kill Xorg (or its Wayland counterpart) and the display manager.

Yeah, I checked lsmod, but none of the modules can be unloaded. It seems like I can’t unload amdgpu because of the listed modules depending on it, but I also can’t unload those modules because they are being used by amdgpu.

I’ve switched to X.Org temporarily just to match your config and to eliminate any possible Wayland weirdness.

At this point gdm-x-session has been killed with killall and the display-manager service has been stopped. There doesn’t appear to be anything graphical running.

root@userpc:/home/user# lsmod | grep amdgpu

amdgpu 3461120 1

chash 16384 1 amdgpu

gpu_sched 28672 1 amdgpu

i2c_algo_bit 16384 1 amdgpu

ttm 126976 1 amdgpu

drm_kms_helper 208896 1 amdgpu

drm 495616 5 gpu_sched,drm_kms_helper,amdgpu,ttm

mfd_core 16384 1 amdgpu
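A note on reading this output: the third column is the module's reference count and the fourth lists the modules using it. So "ttm 126976 1 amdgpu" means ttm is used by amdgpu (amdgpu depends on ttm), which is why those helpers cannot be removed while amdgpu is loaded. "amdgpu 3461120 1" with no names means its one reference is held by something that is not a module, such as an open device file or, as I understand it, the framebuffer console. A small parser as a sketch (hypothetical name lsmod_users):

```shell
#!/bin/bash
# Hypothetical helper: read `lsmod` output on stdin and report, for one
# module, its reference count and which modules (if any) are using it.
lsmod_users() {
    awk -v mod="$1" '
        $1 == mod {
            users = ($4 == "") ? "(none listed)" : $4
            print mod ": refcount " $3 ", used by " users
            found = 1
        }
        END { if (!found) print mod ": not loaded" }
    '
}

# Usage:
#   lsmod | lsmod_users amdgpu
#   lsmod | lsmod_users ttm
```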

root@userpc:/home/user# sudo modprobe -r chash

modprobe: FATAL: Module chash is in use.

root@userpc:/home/user# sudo modprobe -r gpu_sched

modprobe: FATAL: Module gpu_sched is in use.

root@userpc:/home/user# sudo modprobe -r i2c_algo_bit

modprobe: FATAL: Module i2c_algo_bit is in use.

root@userpc:/home/user# sudo modprobe -r ttm

modprobe: FATAL: Module ttm is in use.

root@userpc:/home/user# sudo modprobe -r drm_kms_helper

modprobe: FATAL: Module drm_kms_helper is in use.

root@userpc:/home/user# sudo modprobe -r drm

modprobe: FATAL: Module drm is in use.

root@userpc:/home/user# sudo modprobe -r mfd_core

modprobe: FATAL: Module mfd_core is in use.

root@userpc:/home/user# sudo modprobe -r amdgpu

modprobe: FATAL: Module amdgpu is in use.

Very weird. I just tried to run the commands in my startup script one by one. When running modprobe -r amdgpu I got a segmentation fault, but my screens did go blank.

This is unusual not just because of the segfault, but because I’ve done this before several times and it’s never worked.

Here’s the output of lsmod at this point:

user@userpc:~$ sudo lsmod | grep amdgpu

amdgpu 3461120 -1

chash 16384 1 amdgpu

gpu_sched 28672 1 amdgpu

i2c_algo_bit 16384 1 amdgpu

ttm 126976 1 amdgpu

drm_kms_helper 208896 1 amdgpu

drm 495616 5 gpu_sched,drm_kms_helper,amdgpu,ttm

mfd_core 16384 1 amdgpu

So it looks like amdgpu has unloaded (albeit with a segfault), but I still can’t unload any of the other modules.

I also cannot run sudo virsh nodedev-detach pci_0000_0b_00_0. Nothing happens; it just hangs until I press Ctrl+C. Same for pci_0000_0b_00_1.

Attempting to start up the VM with virsh start win10 doesn’t result in any disk activity, so I don’t think it’s starting. I think this is because it’s trying to run the startup script as well and is getting stuck on virsh nodedev-detach pci_0000_0b_00_0 like I was.
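When a command hangs instead of failing, a hook script can at least bound the wait, so it exits with an error rather than stalling indefinitely. A sketch using timeout from GNU coreutils (with_timeout is a hypothetical name; 30 seconds is arbitrary). Note that a process stuck inside the kernel may not react to the signal at all:

```shell
#!/bin/bash
# Hypothetical helper: run a command with an upper bound on its runtime.
# GNU `timeout` exits with status 124 if the limit is hit.
with_timeout() {
    local secs=$1 rc=0
    shift
    timeout "$secs" "$@" || rc=$?
    if [ "$rc" -eq 124 ]; then
        echo "timed out after ${secs}s: $*" >&2
    fi
    return "$rc"
}

# Usage in a hook script:
#   with_timeout 30 virsh nodedev-detach pci_0000_0b_00_0 || exit 1
```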

I’ve updated my script to match yours (mostly):

#!/bin/bash

# Helpful to read output when debugging

set -x

# Stop display manager

killall gdm-x-session

#pkill gdm-wayland-session

systemctl stop display-manager.service

pulse_pid=$(pgrep -u user pulseaudio)

pipewire_pid=$(pgrep -u user pipewire-media)

kill $pulse_pid

kill $pipewire_pid

# Unbind VTconsoles

echo 0 > /sys/class/vtconsole/vtcon0/bind

echo 0 > /sys/class/vtconsole/vtcon1/bind

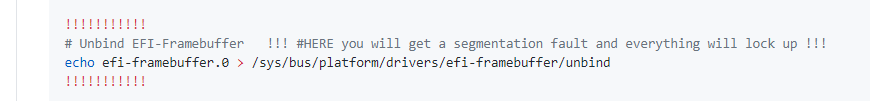

# Unbind EFI-Framebuffer

echo efi-framebuffer.0 > /sys/bus/platform/drivers/efi-framebuffer/unbind

sleep 5

# Unload AMD drivers

modprobe -r amdgpu

# Unbind the GPU from display driver

virsh nodedev-detach pci_0000_0b_00_0

virsh nodedev-detach pci_0000_0b_00_1

# Load VFIO kernel module

modprobe vfio-pci

The additional commands to kill Pulseaudio don’t seem to find anything so I think it’s already dead on my setup once the X session is killed.
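If pgrep finds nothing, kill $pulse_pid runs with no PID and only prints a usage error. A guarded variant, as a sketch (hypothetical name kill_if_running; pass the user your desktop session runs as):

```shell
#!/bin/bash
# Hypothetical helper: terminate a user's process by exact name, and
# simply report when it is not running instead of calling kill with no PID.
kill_if_running() {
    local user=$1 name=$2 pids
    pids=$(pgrep -u "$user" -x "$name" 2>/dev/null)
    if [ -z "$pids" ]; then
        echo "$name not running for $user"
        return 0
    fi
    # Word splitting is intended: pgrep prints one PID per line.
    kill $pids
}

# Usage:
#   kill_if_running user pulseaudio
```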

Man, what does it take to kill the amdgpu module and its dependants? Do I have to find its horcruxes?

Most definitely something is still running.

When I first succeeded, after segfault upon segfault, the way I did it was as follows:

Login to your user on the linux host where you want to use vfio.

Then from another device(preferably a laptop), ssh into the linux host.

Run the commands one by one and then just try to run the VM again.

Also, your script is wrong; I specifically said that

echo efi-framebuffer.0 > /sys/bus/platform/drivers/efi-framebuffer/unbind

will result in a segfault.

Use this script vfio-single-amdgpu-passthrough/start.sh at main · cosminmocan/vfio-single-amdgpu-passthrough · GitHub

Also, when modules won’t unload, it usually means that something is using them.

I can’t tell you what, but htop, run from another device via SSH, should show you the process consuming the most resources, which is probably also the one using the GPU.
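A more targeted check than sorting htop by resource use is to look for processes holding a GPU device file open. fuser -v /dev/dri/* or lsof /dev/dri/* do this if installed; the sketch below does the same by walking /proc/<pid>/fd (run it as root to see every process). gpu_users and PROC_ROOT are hypothetical names, the latter only for testing:

```shell
#!/bin/bash
# Hypothetical helper: list processes with an open handle on a DRM
# device (/dev/dri/*) or legacy framebuffer (/dev/fb*).
# Output: "<pid> <process name> <device>", one line per handle.
gpu_users() {
    local proc=${PROC_ROOT:-/proc} fd target pid
    for fd in "$proc"/[0-9]*/fd/*; do
        target=$(readlink "$fd" 2>/dev/null) || continue
        case "$target" in
            /dev/dri/*|/dev/fb*)
                pid=${fd#"$proc"/}          # strip the /proc prefix
                pid=${pid%%/*}              # keep only the PID
                echo "$pid $(cat "$proc/$pid/comm" 2>/dev/null) $target"
                ;;
        esac
    done | sort -u
}

# Usage (as root):
#   gpu_users
```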

There surely must be a better method, but I’m not aware of any.

I’ve had some spare time to dig into this again. I have switched to sddm since gdm seems to cause a lot of trouble. Unfortunately, I’m no closer to the culprit that is causing modprobe -r amdgpu to result in a segfault.

I have now changed my startup script to match @examen1996’s entirely:

#!/bin/bash

# Helpful to read output when debugging

set -x

# Load the config file with our environmental variables

source "/etc/libvirt/hooks/kvm.conf"

# Stop your display manager.

systemctl stop sddm.service

pulse_pid=$(pgrep -u user pulseaudio)

kill $pulse_pid

# Unbind VTconsoles

echo 0 > /sys/class/vtconsole/vtcon0/bind

echo 0 > /sys/class/vtconsole/vtcon1/bind

# Avoid a race condition by waiting a couple of seconds. This can be calibrated to be shorter or longer if required for your system

sleep 4

# Unload all Radeon drivers

modprobe -r amdgpu

#modprobe -r gpu_sched

#modprobe -r ttm

#modprobe -r drm_kms_helper

#modprobe -r i2c_algo_bit

#modprobe -r drm

#modprobe -r snd_hda_intel

# Unbind the GPU from display driver

virsh nodedev-detach pci_0000_0b_00_0

virsh nodedev-detach pci_0000_0b_00_1

# Load VFIO kernel module

modprobe vfio

modprobe vfio_pci

modprobe vfio_iommu_type1

I am using SSH to troubleshoot this and I am running each command one by one.

What I will note is that the two commands below have to be run from a root shell; with sudo they give a permission denied error.

echo 0 > /sys/class/vtconsole/vtcon0/bind

echo 0 > /sys/class/vtconsole/vtcon1/bind
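If that was sudo echo 0 > /sys/class/vtconsole/vtcon0/bind, the error is expected shell behaviour: the > redirection is performed by your own unprivileged shell before sudo runs, so only echo gets root. Piping into sudo tee (echo 0 | sudo tee /sys/class/vtconsole/vtcon0/bind) moves the write under sudo. A sketch of a helper doing that (hypothetical name write_value), which writes directly when the file is already writable, e.g. from a root shell:

```shell
#!/bin/bash
# Hypothetical helper: write a value to a (sysfs) file, using sudo only
# for the write itself when the current user cannot write it directly.
write_value() {
    local value=$1 path=$2
    if [ -w "$path" ]; then
        printf '%s\n' "$value" > "$path"
    else
        # tee runs as root, so the file is opened with root privileges.
        printf '%s\n' "$value" | sudo tee "$path" > /dev/null
    fi
}

# Usage from a normal user shell:
#   write_value 0 /sys/class/vtconsole/vtcon0/bind
#   write_value 0 /sys/class/vtconsole/vtcon1/bind
```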

Once these are run as root, I can then run modprobe -r amdgpu and it segfaults. If I unload amdgpu before the two commands that unbind the vtconsoles, it just says it’s in use, which makes sense.
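That ordering is consistent with the framebuffer console being what held amdgpu in use. Which vtconN is the framebuffer console can vary between machines, so instead of hard-coding vtcon0 and vtcon1, a script can check each console's name file, which for fbcon reads like "(M) frame buffer device". A sketch; unbind_fb_vtconsoles is a hypothetical name and SYSFS_ROOT is only a test hook:

```shell
#!/bin/bash
# Hypothetical helper: unbind every virtual-terminal console whose
# driver is the framebuffer console, leaving e.g. the dummy console alone.
unbind_fb_vtconsoles() {
    local sys=${SYSFS_ROOT:-/sys} vc
    for vc in "$sys"/class/vtconsole/vtcon*; do
        [ -f "$vc/name" ] || continue
        if grep -q 'frame buffer' "$vc/name"; then
            echo 0 > "$vc/bind" && echo "unbound ${vc##*/}"
        fi
    done
}

# Usage (as root), before `modprobe -r amdgpu`:
#   unbind_fb_vtconsoles
```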

There is nothing obvious (to me) in htop that seems to be using the GPU or a lot of memory (max I see processes using is ~0.2% of 16GB). Unfortunately there’s no easy way for me to provide my htop output so I’ve thrown my top output into a pastebin: https://pastebin.com/raw/dikQi4rm.

This output was taken after running the two commands that unbind the vtconsoles and before I attempt to unload the amdgpu module.

I’m starting to wonder if I might have to move from buster to bullseye in case this is some weird edge-case bug that has been patched?

Confirmed, kernel and/or firmware bug. I backported the 5.10 kernel along with firmware-amd-graphics from Bullseye to Buster. The segmentation fault is gone!

Turns out I’m not going crazy. There’s nothing running that’s still holding on to the GPU.

So the amdgpu module is unloading correctly now and I can reload it without issue. However, I’m getting no output on my screens when the VM starts. I’ll try some troubleshooting myself but I’m grateful for any suggestions that anyone might have.