What do you use the 1660 for?

Transcoding in Plex and Channels DVR. So I just checked the IPMI logs and I have no further errors since my last post. So I might just be getting the errors at boot, something I did resolved the issue, or the issue is intermittent.

Were you able to get the card successfully recognized in unRAID and with the NVIDIA driver package?

I’ve been on and off trying to get it to work with no luck. I suspect it’s some BIOS setting I keep overlooking, and whenever I get the ambition to try and fix it, it ends up driving me bonkers.

I bought five 1U4LW-X470 RPSU, AMD Ryzen 5950X, 4x KSM32ED8/32ME (128 GB ECC total), but also tried 4x MTA18ASF4G72AZ-3G2B1. Two of them were stable, three were crashing when idle: one after 10 minutes, one after 2 hours, and one after 6 hours on average. When they crashed, the fans stayed in their last state and the power LED was on, but IPMI reported the system as off. When I tried to power it on, IPMI said the power-on operation failed after three retries. To recover, I had to unplug both power cables, wait 10 seconds, and replug. After that, IPMI was able to power on the systems again. I tried a lot of things to get to the bottom of the issue. In the end, what helped was underclocking the RAM to 1866 MT/s. The default for four dual-rank modules is 2666 MT/s.

BIOS Version: L4.21

IPMI Version: 0.20.0.27

Load UEFI (BIOS) Defaults

Advanced > AMD CBS > UMC > DDR4 Common Options > DRAM Timing Configuration > Accept > Overclock: Enabled

Advanced > AMD CBS > UMC > DDR4 Common Options > DRAM Timing Configuration > Accept > Memory Clock Speed: 933 MHz (ends up being 1866 MT/s)

I also enabled ECC RAM:

Advanced > AMD CBS > UMC > DDR4 Common Options > Common RAS > ECC Configuration > DRAM ECC Enable = Enabled
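For anyone puzzled by the 933 MHz versus 1866 MT/s numbers above: DDR memory transfers data on both edges of the clock, so the transfer rate is twice the memory clock. A minimal Python sketch of that arithmetic follows. The function name is just for illustration, and the clock values other than 933 are nominal DDR4 clocks rounded the way a BIOS menu would probably list them, which is an assumption.

```python
def ddr_transfer_rate(clock_mhz: int) -> int:
    """Return the DDR transfer rate in MT/s for a memory clock given in MHz."""
    # DDR = double data rate: one transfer on each clock edge, so two per cycle.
    return clock_mhz * 2


# 933 MHz is the setting from the post; the other clocks are assumed nominal values.
for clock in (933, 1200, 1333, 1600):
    print(f"{clock} MHz -> {ddr_transfer_rate(clock)} MT/s")
```

So the 933 MHz menu entry and the 1866 MT/s figure describe the same setting.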

I verified that with prime64 torture test for 24 hours. All systems are stable now. The funny thing is two were stable at full 2666 MT/s, one was stable at 2400 MT/s; two were stable at 1866 MT/s RAM speed. I ended up setting all systems to 1866 MT/s just to be on the safe side.

I also opened a case with ASRock; if I get any more insight, I’ll let you know.

I did three more experiments. With one memory module in A1 at full memory speed (3200 MT/s), the system is stable. With two memory modules in A1 and B1 at full memory speed (3200 MT/s), the system crashes in less than 10 minutes when running the mprime torture test. With two memory modules in A1 and A2 (maximum supported at 2666 MT/s), the system is stable.

Update 2021-05-14: ASRock support sent me a new IPMI version (X470D4U_L2.32.00.ima), but it is the version for one power supply, not for two. It works anyway, but did not change anything: the system crashed in less than 5 seconds using mprime. In case you’re interested, you can send me an email: thomas at glanzmann dot de.

As somebody who has had the same issues with Zen 3 and X470, I really appreciate the thorough write-up. Please keep us updated. I reported the RAM issue a few months ago and was hoping the 4/27 BIOS update would help, but I guess not.

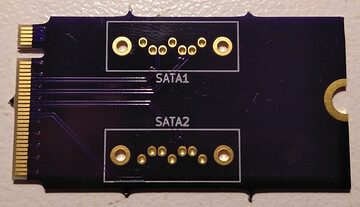

I made an adapter to use the 2 X470 chipset SATA ports that are routed to the M2_2 connector. That means 8 SATA ports on these boards with no external controllers!

The X470 chipset can have up to 8 SATA lanes in total, with 4 SATA lanes plus 2x SATA express interfaces with 2 SATA lanes each. But on X470D4U and X470D4U2-2T only 6 lanes are routed to SATA connectors. The remaining 2 lanes from one of the SATA express interfaces are routed to an M.2 connector to provide 1 lane of SATA or 2 lanes of PCI express Gen 3.

Since this is a SATA express interface under the hood, both lanes can be used as SATA, and if you insert a SATA M.2 device, that already configures the second lane in SATA mode. However, that second SATA lane isn’t part of the M.2 standard.

What my adapter does is simply route the second lane to a SATA port. The project files are available on GitHub. For the SATA headers, I soldered on some Molex 67800-8305 headers.

I originally tried this with an M.2 to SFF-8643 adapter and SFF-8643 to SATA cable bundle, but that didn’t quite work as the polarity of the SATA B pair is reversed from PCIe in the M.2 spec.

I was initially concerned about signal integrity, but it seems like it works OK from a short amount of testing. Not sure how reliable it will be long-term, however.

This could work on other boards that have the same M.2 setup too.

So what 32gb sticks of ram is everyone using?

Seen some Hynix HMA84GR7AFR4N-UH pretty cheap but dunno if it’ll work.

I’ve got G.Skill and Team Group 32 GB DIMMs … I think they are both running at 2400 MHz.

I’m using a pair of Samsung M391A4G43MB1-CTD.

They’re rated at 2666MHz but they passed multiple days of memtest86 at 3200MHz for me.

Some day I’ll upgrade to 4x32GB. Will be interesting to see if the 3200MHz speed still works with all four slots populated with dual rank memory.

I am tempted to get this MB for my home ESXi server. I skimmed through the thread and I would like to hear if the early issues were fixed with latest BIOS 3.50 and BMC 2.20. I’m mostly concerned about stability and compatibility with the HW below.

I would use it with a 2700X and 4x16GB 2600 (non ECC) from Corsair and a Seasonic Focus 850. I also have an Intel i350-T4 quad NIC. I’m considering passing through an LSI 9211 HBA and running TrueNAS as a VM. Eventually, once TrueNAS Scale matures, I plan to move to it, but that’s way down the road.

Any recommendations? Is the board stable? Are there any remaining unsolved issues?

Thanks.

I’d go with a 3700x over the 2700x if you can.

I’ve run into RAM limitations with the 2000 series Ryzens. Running 4 sticks stably might be an issue with a 2700X.

Plus the 3700X has only a 65 W TDP versus the 2700X’s 105 W.

I run the IBM M1015 Crossflashed to LSI 9211-8i IT mode under TrueNAS 12.0-U4 currently … Only issue I had was with my X470D4U possibly over volting my 3700x with PBO enabled … was getting random reboots I think triggered by Plex transcoding stuff and my temps would sky rocket into the 80’s … and hover in the 60’s seemingly when nothing was going on…

Turning off PBO, and some other stuff (I forget exactly what at the moment) …now I’m sitting in the high 20’s and might get into the 40’s on occasion … So watch temps. Maybe just a simple voltage offset would suffice too.

Thanks for the reply. I should clarify that it’s not a completely new build. I already have the 2700X and the RAM. I’m currently using them on an Asus TUF B450M-Plus Gaming board without any issues.

It’s very stable and working pretty well right now. The reason I’m considering changing boards is for the IPMI, on-board video and dual onboard Intel NIC, which will give me a bit more headroom and flexibility. But I don’t want to go down that path if this board still has all the weird issues reported at the beginning of this thread.

Hey there,

I’ve run into a strange issue with this board.

I was running it with a Ryzen 2600, 64gb of ram, a p400 for a vm and 1050ti for transcoding.

I recently upgraded to a 3900x and now my graphics cards won’t show up in unRAID when they’re both installed. I can remove either one and the other will show. I can change what slots they’re in and it makes no difference. I tested it with a spare gtx960 and still had the same issues.

Swapped it back to the 2600 and both show up fine again.

I eventually RMA’d it, thinking that it had dead PCIe lanes, but the replacement has the same issue.

Any ideas would be greatly greatly appreciated.

Thanks

Try setting it to x8x8 in the PCIe configuration.

That worked! Both GPUs showing up but now I have another issue.

When I try to start a VM with a USB controller passed through, my whole unRAID server grinds to a halt and needs a reboot. I’ve read about others having this issue, but it was apparently fixed in unRAID 6.9.2.

Any ideas?

Hi

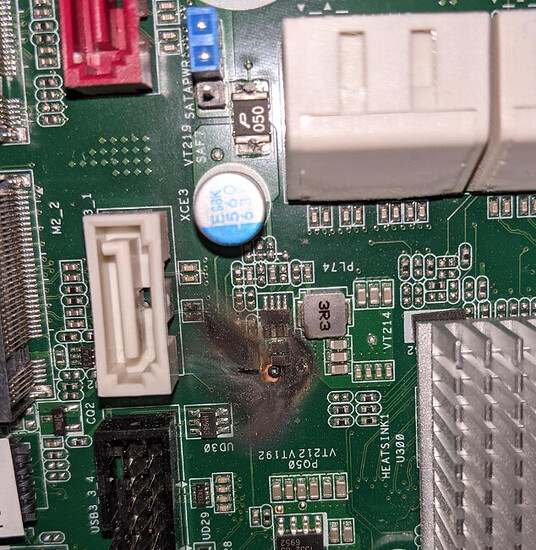

I had some kind of hardware crash tonight and I can’t get it to work again.

It shows “2.5V_PROM 0.44 V” in Sensor Monitoring, and it complains about not installed memory.

I have 2 memory sticks and have tried installing just one at a time in every slot in case one stick is failing, but no luck.

A1 B1 gives beeps and 55 on debug.

A2 B2 is silent and nothing happens.

Have tested another PSU and no change in result.

What exactly is the 2.5V_PROM?

Suggestions ??

Edit: Nevermind found the issue. Lightning strike.

I’m having the same issues with fan control… issues that have been brought into sharp focus by the current hot weather.

The board is an x470d4u and I’m running BIOS P3.30, and BMC v1.90.00.

Here’s the output of “ipmitool sensor list all | grep FAN”

root@pve01:~# ipmitool sensor list all | grep FAN

FAN1 | 1000.000 | RPM | ok | na | na | 100.000 | na | na | na

FAN2 | 0.000 | RPM | nc | na | na | 1000.000 | na | na | na

FAN3 | 1400.000 | RPM | ok | na | na | 1000.000 | na | na | na

FAN4 | 1000.000 | RPM | ok | na | na | 100.000 | na | na | na

FAN5 | 1700.000 | RPM | ok | na | na | 1000.000 | na | na | na

FAN6 | 1000.000 | RPM | ok | na | na | 100.000 | na | na | na

FAN1 is my heatsink fan. It’s the only fan that seems to change speed. It reads 600rpm or 700rpm most of the time, but on the odd occasion it goes up to 1000rpm. Note the thresholds are all “na” except the one entry of 100 for “Lower Non-Critical” (LNC for short). These values are the defaults.

FAN2 is unplugged but the monitoring software thinks it’s spinning at 0rpm, so I have a persistent warning… oddly, this behaviour persists across multiple reboots.

FANs 3 and 5 are Noctua NF-P12 Redux 1700 PWMs. You’ll notice that their LNCs are 1000, which is because I set them manually using the following command “ipmitool sensor thresh FAN3 lower 800 900 1000”, which should result in the following-

FAN3 | 1400.000 | RPM | ok | 800.000 | 900.000 | 1000.000 | na | na | na

But when I issue said command, I get the following errors-

root@pve01:~# ipmitool sensor thresh FAN5 lower 800 900 1000

Locating sensor record 'FAN5'...

Setting sensor "FAN5" Lower Non-Recoverable threshold to 800.000

Error setting threshold: Command illegal for specified sensor or record type

Setting sensor "FAN5" Lower Critical threshold to 900.000

Error setting threshold: Command illegal for specified sensor or record type

Setting sensor "FAN5" Lower Non-Critical threshold to 1000.000

The LNC is set correctly, but the Lower Non-Recoverable and Lower Critical thresholds aren’t set and remain as “na”.
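To spot which thresholds are still unset across all fans, the pipe-separated output can be parsed. Here is a rough Python sketch, assuming the ten-column layout seen in the output above (name, reading, unit, status, then the three lower and three upper thresholds). The field names and both helper functions are made up for illustration and are not part of ipmitool.

```python
# Assumed column order of one sensor line; the short names are illustrative.
FIELDS = ("name", "reading", "unit", "status",
          "lnr", "lcr", "lnc", "unc", "ucr", "unr")


def parse_sensor_line(line: str) -> dict:
    """Split one pipe-separated sensor line into a dict keyed by FIELDS."""
    parts = [part.strip() for part in line.split("|")]
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} columns, got {len(parts)}")
    row = dict(zip(FIELDS, parts))
    # Numeric columns become floats; "na" becomes None.
    for key in ("reading",) + FIELDS[4:]:
        row[key] = None if row[key] == "na" else float(row[key])
    return row


def unset_lower_thresholds(row: dict) -> list:
    """Return the lower-threshold columns that are still 'na'."""
    return [key for key in ("lnr", "lcr", "lnc") if row[key] is None]


sample = "FAN5 | 1700.000 | RPM | ok | na | na | 1000.000 | na | na | na"
row = parse_sensor_line(sample)
print(row["name"], "unset lower thresholds:", unset_lower_thresholds(row))
```

For the FAN5 line this reports the Lower Non-Recoverable and Lower Critical columns as unset, which matches what the errors suggest.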

FANs 4 and 6 are Fractal Design case fans that max out at 1000rpm. With fan control set to “Auto” in the BIOS, they spin at 1000rpm anyway but with fan control set to “auto”, the two 1700rpm Noctuas spin as low as 500rpm… way too low to keep my disks cool.

So I’ve enabled manual fan control in the BIOS and adjusted the duty cycles so that the fans ramp up to 100% at 65c (rather than 90c). But as all the fans seemingly respond only to the CPU temp, I’ve left the heatsink fan on “smart control” so that it spins according to the duty cycles I’ve set, and I’ve set the Noctuas to 80% and 90% to keep my disks cool… weirdly, if I set them both to 80%, one of them spins as low as 500rpm when connected to the FAN2 header, which prompted me to move the Noctua that was connected to FAN2 to FAN5.

I’ve also tried installing the lm-sensors package because the ASRock monitoring software doesn’t update in real time, and I’m not 100% confident that I trust the readings it’s giving me. Despite lm-sensors detecting the Nuvoton control chip, this is all I get from the “sensors” command-

root@pve01:~# sensors

k10temp-pci-00c3

Adapter: PCI adapter

Tdie: +35.1°C (high = +70.0°C)

Tctl: +45.1°C

Fan control just seems random for me. I’ve found one or two other users who are able to control their fans normally using ipmitool, and in this very thread cat_sed_linux says that their fan control looks to be working as it should.

I’m considering updating to BIOS P3.50 to see if I can control my fans but apparently fan control has been removed from the P3.50 BIOS menus (WT actual F?!).

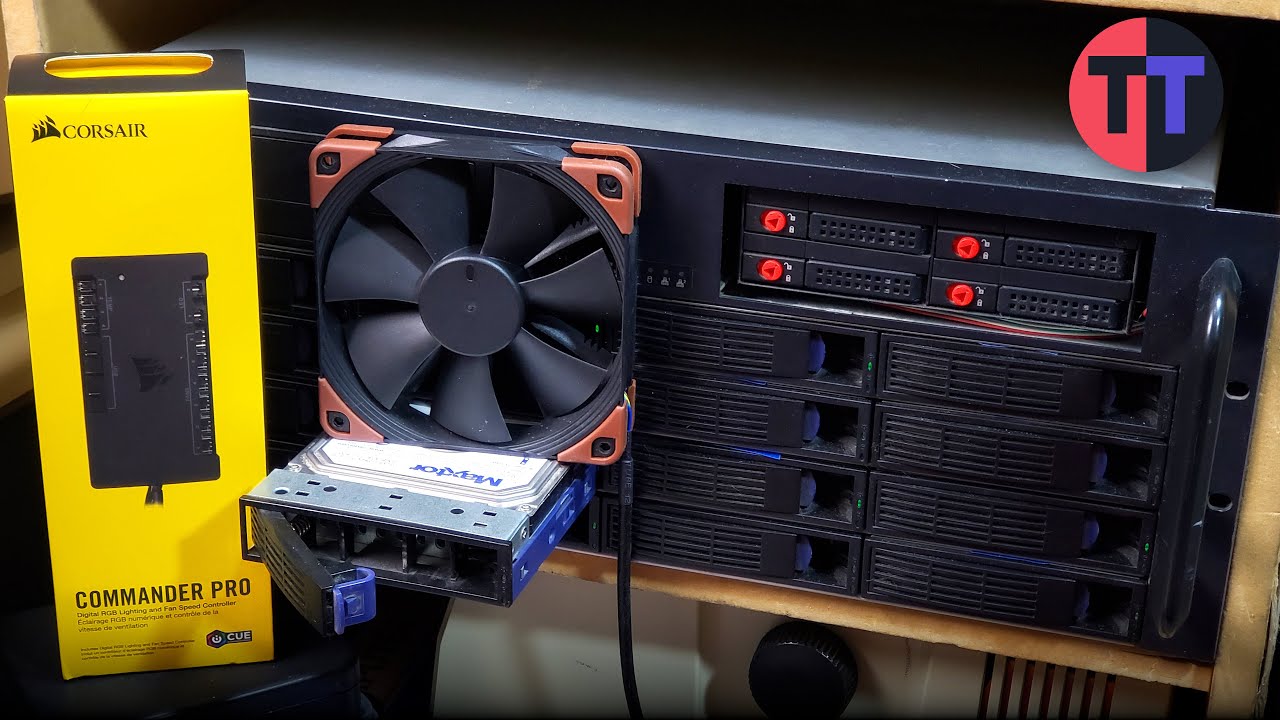

If I can’t get BIOS fan control working properly, I’m tempted to try using a Corsair Commander Pro for fan control (as seen in this video by Tech Tangents), but until I try it, I don’t know whether it or any of its requirements/dependencies might also be unable to read the x470d4u’s sensors.

Is anyone else having the same issues?

Has anyone else got lm-sensors fully working?

Has anyone else been able to use ipmitool to set all the thresholds?

[EDIT] I’ve just tried to bump the speed of the two Noctuas up a bit, to deal with the hot weather and I have more info to add…

When setting both Noctuas to level 9 (which should be just short of full rpm), the one attached to the FAN5 header would spin at its full speed of 1700rpm according to the ASRock monitoring software. But setting the Noctua attached to header FAN3 to 8 or 9 would make it spin at anywhere from 500, to 900, to 1000, to 1300rpm. Each time I’d change the setting from 8 to 9, or to auto or full on, the speed would show as one of the RPMs listed. So I tried setting all fans to full on, rebooted, and they went to roughly their max RPMs. The FAN5 Noctua is reading 1700rpm and the FAN3 one is reading 1600rpm… I guess neither the BIOS nor the monitoring software can show anything other than RPMs in multiples of 100. The difference in RPMs might just be down to manufacturing variances.

I then set just the CPU fan (FAN1) to Auto and rebooted. Everything is spinning at their max RPMs (which is better than nothing) except the CPU fan, which is spinning at 600rpm. That should be enough to keep my CPU cool thankfully.

So I’ve come to the conclusion that the fan control on this board is broken… at least on BIOS P3.30, which might explain why it appears that fan control has been removed from the latest P3.50.

I think I need to start looking into the Corsair thingy majig.

You’ll never get any output from sensors for a Nuvoton chip until you first load its driver with modprobe and also add a kernel command line option to ignore the ACPI resource conflict.

Run sudo modprobe nct6775 to load the likely chipset family driver, then edit the grub file in /etc/default to add this:

GRUB_CMDLINE_LINUX_DEFAULT="acpi_enforce_resources=lax"

and run update-grub.

After a reboot your sensors command should now pick up your motherboards fan speeds.
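One caveat when editing that line: if GRUB_CMDLINE_LINUX_DEFAULT already holds parameters (many distros ship it with something like quiet), the new option should be appended rather than replacing what is there. Below is a small Python sketch of that edit on a single line of text. The helper name is hypothetical, and it only transforms a string. It does not touch /etc/default/grub or run update-grub.

```python
def add_kernel_param(line: str, param: str) -> str:
    """Append `param` to a GRUB_CMDLINE_LINUX_DEFAULT="..." line if it is missing."""
    key, sep, value = line.partition("=")
    if not sep or key.strip() != "GRUB_CMDLINE_LINUX_DEFAULT":
        return line  # some other line: leave it untouched
    # Drop the surrounding quotes and split the existing parameters on whitespace.
    params = value.strip().strip('"').split()
    if param not in params:
        params.append(param)
    joined = " ".join(params)
    return f'{key.strip()}="{joined}"'


# Hypothetical existing line; "quiet" is an assumed pre-existing parameter.
before = 'GRUB_CMDLINE_LINUX_DEFAULT="quiet"'
print(add_kernel_param(before, "acpi_enforce_resources=lax"))
```

Running the helper a second time on its own output leaves the line unchanged, so the option is never duplicated.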

Hi,

I’ve got a peculiar problem. I’m running this mobo with an NVMe drive (Adata SX8200 Pro) in the M2_2 slot (I tried running it in M2_1 and it had the same performance, so I left it as is). Recently I got my hands on another SX8200 Pro and an AORUS Gen4 AIC Adaptor (Gigabyte GC-4XM2G4). I installed this giant adaptor in the PCIE6 slot (so it should have Gen3 x16 lanes) and everything booted up nicely. I prepared the new drive and started benchmarking. To my surprise, both drives perform almost exactly the same.

I ran fio with this config, modified to use an 8GB file instead of a 10GB one, but I get nearly the same result on both drives. Drive in the adapter (which should be much faster):

Run status group 0 (all jobs):

READ: bw=527MiB/s (553MB/s), 527MiB/s-527MiB/s (553MB/s-553MB/s), io=8192MiB (8590MB), run=15541-15541msec

Run status group 1 (all jobs):

READ: bw=252MiB/s (265MB/s), 252MiB/s-252MiB/s (265MB/s-265MB/s), io=8192MiB (8590MB), run=32458-32458msec

Run status group 2 (all jobs):

WRITE: bw=528MiB/s (554MB/s), 528MiB/s-528MiB/s (554MB/s-554MB/s), io=8192MiB (8590MB), run=15502-15502msec

Run status group 3 (all jobs):

WRITE: bw=519MiB/s (544MB/s), 519MiB/s-519MiB/s (544MB/s-544MB/s), io=8192MiB (8590MB), run=15779-15779msec

Drive directly in mobo:

Run status group 0 (all jobs):

READ: bw=527MiB/s (553MB/s), 527MiB/s-527MiB/s (553MB/s-553MB/s), io=8192MiB (8590MB), run=15541-15541msec

Run status group 1 (all jobs):

READ: bw=252MiB/s (265MB/s), 252MiB/s-252MiB/s (265MB/s-265MB/s), io=8192MiB (8590MB), run=32458-32458msec

Run status group 2 (all jobs):

WRITE: bw=528MiB/s (554MB/s), 528MiB/s-528MiB/s (554MB/s-554MB/s), io=8192MiB (8590MB), run=15502-15502msec

Run status group 3 (all jobs):

WRITE: bw=519MiB/s (544MB/s), 519MiB/s-519MiB/s (544MB/s-544MB/s), io=8192MiB (8590MB), run=15779-15779msec

Shouldn’t I be getting like 4 times faster stats for the drive in the adapter?

Both drives are encrypted with LUKS. I ran cryptsetup benchmark on my system, and the encryption I use should run at ~1755.9 MiB/s (encrypt and decrypt speeds are roughly the same).

Any idea what might be slowing me down so much?

Bios: 3.30

CPU: AMD Ryzen 5 3600X

EDIT: I ditched LUKS on the drive in the adapter, and here is the difference now:

Run status group 0 (all jobs):

READ: bw=822MiB/s (862MB/s), 822MiB/s-822MiB/s (862MB/s-862MB/s), io=8192MiB (8590MB), run=9967-9967msec

Run status group 1 (all jobs):

READ: bw=233MiB/s (244MB/s), 233MiB/s-233MiB/s (244MB/s-244MB/s), io=8192MiB (8590MB), run=35213-35213msec

Run status group 2 (all jobs):

WRITE: bw=977MiB/s (1024MB/s), 977MiB/s-977MiB/s (1024MB/s-1024MB/s), io=8192MiB (8590MB), run=8385-8385msec

Run status group 3 (all jobs):

WRITE: bw=924MiB/s (969MB/s), 924MiB/s-924MiB/s (969MB/s-969MB/s), io=8192MiB (8590MB), run=8862-8862msec

Also, a dd sequential write shows a major improvement (oflag=direct bs=1M count=10240): 1.7 GB/s vs 590 MB/s.
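As a sanity check on the fio summaries above, the reported bandwidth is just the amount of I/O divided by the run time, and the MB/s figure in parentheses is the same value in decimal megabytes. A quick Python sketch follows; the function name is illustrative only, and the inputs are the io and run values from the group 0 read lines.

```python
MIB = 1024 ** 2  # bytes per mebibyte


def fio_bandwidth(io_mib: float, run_msec: float) -> tuple:
    """Return (MiB/s, MB/s) for io_mib mebibytes moved in run_msec milliseconds."""
    mib_per_s = io_mib / (run_msec / 1000)
    mb_per_s = mib_per_s * MIB / 1_000_000
    return mib_per_s, mb_per_s


# (label, io in MiB, run time in msec) copied from the fio output in the post.
for label, io_mib, run_msec in (
    ("with LUKS, group 0 read", 8192, 15541),
    ("without LUKS, group 0 read", 8192, 9967),
):
    mib_s, mb_s = fio_bandwidth(io_mib, run_msec)
    print(f"{label}: {mib_s:.0f} MiB/s ({mb_s:.0f} MB/s)")
```

The results round to 527 MiB/s (553 MB/s) and 822 MiB/s (862 MB/s), the same figures fio printed.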

Pretty sure that one runs off the chipset, so you might run into bottlenecks down the line.

No? Just because you put it in an adapter doesn’t magically give it more lanes. The drives themselves are PCIe 3 x4 and that’s what they’re getting.

All the adapter does is give you the ability to put 4 drives into an x16 slot (because 4x4 = 16…).
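To put numbers on that: a PCIe 3.0 lane runs at 8 GT/s with 128b/130b encoding, so an x4 drive tops out a little under 4 GB/s no matter which slot it sits in. Here is a back-of-the-envelope Python sketch. It gives the theoretical link ceiling only, ignores protocol overhead, and the names are illustrative.

```python
GEN3_TRANSFERS_PER_S = 8e9   # PCIe 3.0 raw rate per lane (8 GT/s)
ENCODING = 128 / 130         # 128b/130b line encoding efficiency


def gen3_link_mb_s(lanes: int) -> float:
    """Theoretical PCIe 3.0 ceiling in MB/s for a link with `lanes` lanes."""
    bits_per_s = GEN3_TRANSFERS_PER_S * ENCODING * lanes
    return bits_per_s / 8 / 1e6


print(f"x4 drive: {gen3_link_mb_s(4):.0f} MB/s")
print(f"x16 slot: {gen3_link_mb_s(16):.0f} MB/s")
print(f"x4 drives that fit in an x16 slot: {16 // 4}")
```

The benchmark figures quoted in the post sit well below the x4 ceiling, so by this estimate the lane count is not what is holding them back.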