As noted above, the Pi is the exception, not the rule. It doesn’t claim 2 GHz and then throttle to below 1 GHz in real-world use. Allwinner and Amlogic boards are particularly bad about this, although they are not the only ones. Supposedly some of them ship something that basically lies to the OS about the clock speed, and some of the advertised benchmarks are essentially rigged. Many processors run as-is (out of the box, with no heatsink) will throttle severely under back-to-back benchmarks, and the scores drop like a stone. EDIT - An example of thermal throttling mitigation from the Armbian forums:


In the beginning I allowed 1200 MHz max cpufreq but since I neither used heatsink nor fan throttling had to jump in to prevent overheating. In this mode H3 started running cpuminer at 1200 MHz, clocked down to 1008 MHz pretty fast and from then on always switched between 624 MHz (1.1V VDD_CPU) and 1008 MHz (1.3V VDD_CPU). The possible 816 MHz (1.1V) in between were never used. Average consumption in this mode was 2550 mW and average cpuminer score 1200 khash/s.
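If you want to watch this kind of clock-down on your own board, here is a rough Python sketch. It assumes a Linux kernel that exposes the standard cpufreq sysfs files (`scaling_cur_freq` and `cpuinfo_max_freq`, both in kHz); the function names are just mine. Keep in mind it only shows what the kernel believes, so on a board that misreports its clock it will be fooled too.

```python
from pathlib import Path

# Standard Linux cpufreq sysfs directory for the first core. Other cores
# live under cpu1, cpu2, ... (assumption: the kernel exposes cpufreq here).
CPUFREQ_DIR = Path("/sys/devices/system/cpu/cpu0/cpufreq")


def read_khz(path):
    """Read one cpufreq sysfs file; the kernel reports these values in kHz."""
    return int(Path(path).read_text().strip())


def clock_report(cpufreq_dir=CPUFREQ_DIR):
    """Compare the clock the kernel reports now against the hardware maximum."""
    cpufreq_dir = Path(cpufreq_dir)
    cur_khz = read_khz(cpufreq_dir / "scaling_cur_freq")
    max_khz = read_khz(cpufreq_dir / "cpuinfo_max_freq")
    return {
        "cur_mhz": cur_khz / 1000,
        "max_mhz": max_khz / 1000,
        "fraction_of_max": cur_khz / max_khz,
    }
```

Call `clock_report()` every second or so while a benchmark runs; if `fraction_of_max` sinks and stays down, the board is throttling.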

I can’t say I know this from first-hand experience, but the first site I’ve gone to every day for years is CNX-Software. One of the regular commenters there, tkaiser, does/did work for Armbian. Along with the other Armbian people, he has gone over tons of boards and chips and filled in the blanks for hardware vendors, like Banana Pi and Orange Pi, that don’t really provide their own Linux support and rely on online communities like Armbian and Sunxi to provide functioning Linux distros.

He has brought up the speed and voltage nerfing needed to get Armbian to run stable on various boards. I guess the Armbian forums would be a better place to start if you are interested in that rabbit hole. The Raspberry Pi boards are great, but the non-profit Raspberry Pi Foundation is about learning, not about making desktop replacements complete with all of the graphics capabilities to which people have become accustomed. Even in that space they are better than most, but it isn’t their goal and they don’t manufacture their ARM SoCs.

Broadcom does. They literally hired a guy to reverse-engineer their VPU/GPU to provide some level of Linux support. Why? I dunno. It will never happen, but it would be nice if the R Pi 4 kicked them to the curb and went full open hardware, or at the very least got Broadcom to make them a fully open-source SoC. Yup, I can dream!

Another thing that tkaiser harps on a ton is the anemic microUSB connectors on boards drawing upwards of 3 amps in some cases. Some noobs buy the latest and greatest ‘R Pi killer’, use their old phone charger to try to make it run, and it shits the bed. That is generally followed by it going in the trash and them complaining about it online without knowing it is a power issue. Plus there are countless garbage microUSB cables that give similar results.

The original R Pi was not immune to this, but they have since added warnings to let you know when power is insufficient, and they have an awesome community with boatloads of resources to figure out such issues. Again, since the point is to keep the R Pi cheap and accessible, the microUSB connector is a sensible decision. Any so-called “R Pi killer” drawing more power with no such warning system is going to leave the uninitiated with a bad taste and no clue why they have problems.
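On the Pi you can also query those warnings yourself: `vcgencmd get_throttled` prints a hex bitmask such as `throttled=0x50005`. Below is a small Python sketch that decodes it. The bit meanings follow the Raspberry Pi documentation as I understand it, and the function names are my own, so treat it as an illustration rather than anything official.

```python
import subprocess

# Bit positions reported by `vcgencmd get_throttled` (per the Raspberry Pi
# documentation as I understand it). Low bits are "right now", bits 16+ are
# "has happened since boot".
THROTTLE_FLAGS = {
    0: "under-voltage detected",
    1: "ARM frequency capped",
    2: "currently throttled",
    3: "soft temperature limit active",
    16: "under-voltage has occurred",
    17: "ARM frequency capping has occurred",
    18: "throttling has occurred",
    19: "soft temperature limit has occurred",
}


def parse_throttled(output):
    """Turn a line like 'throttled=0x50005' into an integer bitmask."""
    return int(output.strip().split("=", 1)[1], 16)


def decode_throttled(mask):
    """List the human-readable flags set in the bitmask, lowest bit first."""
    return [text for bit, text in sorted(THROTTLE_FLAGS.items()) if mask & (1 << bit)]


def read_throttled():
    """Run vcgencmd on a Raspberry Pi and decode its answer (needs real hardware)."""
    result = subprocess.run(
        ["vcgencmd", "get_throttled"], capture_output=True, text=True, check=True
    )
    return decode_throttled(parse_throttled(result.stdout))
```

An empty list from `read_throttled()` means no power or thermal trouble has been flagged since boot; anything mentioning under-voltage points at the supply or the cable.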

I am super critical of ARM Linux devices because I would love to see a rising tide raise all ships. I think a company like Olimex (<-- great blog, even better people) would be an open source hardware juggernaut if they had a high-end ARM SoC that had genuinely open source graphics. Or even if Allwinner would stop violating the GPL. ARM desperately needs an AMD or Intel equivalent that can both produce reasonable graphics and share the code that makes it work with Linux, or the current state of things will remain the status quo.

To be completely fair, I genuinely don’t have any credentials to say I know a single thing about the subject, but a long book could be written just from the CNX articles and comments. That’s where I got much of the info that I base my thoughts upon. The fact that, with the absurd number of ARM devices on earth, the ONLY well-rounded Linux heavyweight is the Raspberry Pi, a non-profit with no remotely comparable competition, speaks volumes about how they were able to squeeze blood from a stone where everyone else fails (though they do have an upper hand). It’s the polar opposite of the Android scene.

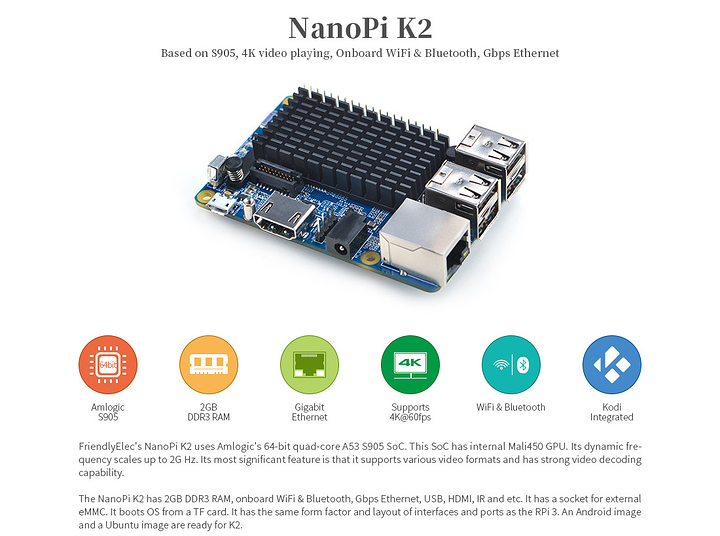

I have an Android box with the same SoC as that K2 above and it runs with no issues. And FriendlyElec (FriendlyArm) is one of the two companies I would look at first when considering a desktop replacement. A fully kitted out ARM SoC running Linux tends to cost as much as an Intel Atom, and to me it is no competition for Intel in the Linux desktop mini-PC space. I literally wake up every day hoping to have my mind changed, but that hasn’t happened yet.