If it's a PWM version, you could manage it that way.

Silly me was thinking “everything is 12V, just put in a resistor”, but yeah… the SBCs are 5V.

It is.

I ran into that issue previously, but the “throw in a resistor” theory was my initial plan.

Update: working on dynamic NFS provisioning.

Kubernetes does not have an internal provisioner for this. I don't currently have the hardware to run multiple replicas for Gluster or something similar. I might transition to that down the road, though, since it sounds like fun, tbh. For now I'm going the “ZFS array with NFS hanging off the back” route.

The problem is that with Gluster or Ceph, Kubernetes can dynamically provision PVs internally (just point it at the cluster and go), but for some reason you can't do that with NFS. I guess it's not designed to be “scalable”.

Anyways, it looks like it's as simple as deploying a teeny tiny pod that'll mkdir a directory on the NFS share whenever a PVC needs a volume.

I’ll update with my notes as soon as it’s done.

@w.meri +1 for watching this.

@Dynamic_Gravity I’m aiming to be a full blown loser, where do I sign up?

@Donk Not that much further: setting up OpenVPN and HAProxy on pfSense, Apache or Nginx on Linux, plus scripting stuff in bash. I automated our whole Samba deployment at work, so if SHTF we can recover in around 2h, and most of that time is just manually generating different passwords and sending mail. I could go further and automate everything, but that's not a likely scenario when we're monitoring the infrastructure.

@SgtAwesomesauce I've been looking at Prometheus for a while now, and I might set it up at home. At work we use both Zabbix and Centreon (we're transitioning to the latter, though I don't mind either; both do the job and both have their quirks).

I always have 12V and 19.5V chargers lying around, so powering fans from the wall was always an easy task for me.

Yeah, I'm going to be rolling out Prometheus eventually as well. I've always seen HA as a bit of a bear to deal with in it, and you REALLY need HA with Raspberry Pis, since the fucking SD cards are so failure-prone. I'll have to figure something out for that.

Extremely rough notes:

Deploying the Kubernetes NFS provisioner

Install and configure NFS on the storage server.

Don't forget to `chown nobody: $NFS_SHARE_ROOT`.

Use `exportfs -v` to double-check the running config.

Create the RBAC rules, ServiceAccount, and whatnot for the NFS provisioner.

yaml stuff

Serviceaccount.yml:

```yaml
---
kind: ServiceAccount
apiVersion: v1
metadata:
  name: nfs-client-provisioner
```

clusterrole.yml:

```yaml
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
```

clusterRoleBinding.yml:

```yaml
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
```

role.yml:

```yaml
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
```

rolebinding.yml:

```yaml
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```

Create storage class

storageclass.yml:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
# must match the PROVISIONER_NAME env var in the deployment (metalspork.xyz/nfs here)
provisioner: <yourdomain>/nfs
parameters:
  archiveOnDelete: "false"
```
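If you'd rather keep a volume's data around after its claim is deleted, one option is a second class with `archiveOnDelete: "true"`. As far as I understand the nfs-client provisioner, it then renames the volume's directory with an `archived-` prefix instead of deleting it. This is a minimal sketch that assumes the provisioner name from the deployment (`metalspork.xyz/nfs`). The class name `managed-nfs-storage-archive` is hypothetical.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  # hypothetical name; not marked as default, so claims must request it explicitly
  name: managed-nfs-storage-archive
provisioner: metalspork.xyz/nfs   # must match PROVISIONER_NAME in the deployment
parameters:
  # keep data on delete: the directory gets an "archived-" prefix instead of being removed
  archiveOnDelete: "true"
```

Leaving this class unannotated keeps `managed-nfs-storage` as the only default, so existing claims behave the same as before.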

Create deployment:

deployment.yml:

```yaml
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
spec:
  selector:
    matchLabels:
      app: nfs-client-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner-arm:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: metalspork.xyz/nfs
            - name: NFS_SERVER
              value: 192.168.98.70
            - name: NFS_PATH
              value: /media/storage/kubernetes_nfs
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.98.70
            path: /media/storage/kubernetes_nfs
```
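One way to apply everything in one go is a kustomization file placed next to the manifests. This sketch assumes all the files sit in one directory under the names used in these notes. The `kustomization.yml` file itself is a hypothetical addition and not part of the provisioner.

```yaml
# kustomization.yml (hypothetical): lists the provisioner manifests so they apply as one set
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - Serviceaccount.yml
  - clusterrole.yml
  - clusterRoleBinding.yml
  - role.yml
  - rolebinding.yml
  - storageclass.yml
  - deployment.yml
```

With that in place, running `kubectl apply -k .` from that directory applies (and re-applies) the whole set. kubectl has had built-in kustomize support since 1.14.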

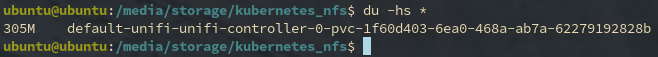

Good news; it’s working.
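A quick smoke test is a throwaway claim plus a pod that writes a file into it, similar to the test manifests shipped with the nfs-client provisioner. This sketch assumes the `managed-nfs-storage` class above exists. It also assumes every node has the NFS client utilities installed, since kubelet mounts NFS through the host. The names `test-claim` and `test-pod` and the busybox tag are hypothetical choices.

```yaml
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim            # hypothetical name
spec:
  storageClassName: managed-nfs-storage
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
---
kind: Pod
apiVersion: v1
metadata:
  name: test-pod              # hypothetical name
spec:
  restartPolicy: Never
  containers:
    - name: test-pod
      image: busybox:1.36
      # write a marker file into the provisioned volume, then exit
      command: ["/bin/sh", "-c", "touch /mnt/SUCCESS && exit 0"]
      volumeMounts:
        - name: nfs-pvc
          mountPath: /mnt
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: test-claim
```

If it works, the claim should show `Bound` in `kubectl get pvc`. A directory named along the lines of `default-test-claim-pvc-<uid>` and containing `SUCCESS` should also appear under `/media/storage/kubernetes_nfs` on the storage server.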

Here, we have a look at the cluster:

Bad news: I need to rebuild Traefik. I have a better understanding now, and I'm going to spend a bit more time reading the Traefik configs before I go and assume it's working.

k3s?

Lightweight kubernetes.

k3s is smaller than k8s

Didn’t know about that.

My Kubernetes experience is on heavy iron, and that is limited.

Kubernetes, like Linux, comes in many flavors. There's more than just k8s and k3s: there's minikube, Canonical's variant, Kubernetes in Docker (kind), and a few others.

Update: I've had a moment to continue down this path, and I've decided to go back to Docker Swarm, since Kubernetes is just too complex for my needs.

Additionally, I found that the Raspberry Pi Foundation has new firmware that supports booting from USB drives with no SD card needed, so I'm going to reformat and base everything on that tonight. I need to pop a Raspbian image onto each Pi 4 to support that, though, so it's going to be a long night of flashing.

Another update.

I've jumped ship back to Manjaro from UWUubuntu, since it's being shit and using 500MB of RAM on boot.

Much happier with 120MB on boot on Manjaro.

OMG

Really? Is this a thing?

Well, people have memed it, but there’s no official spin.

Yep, I've got three Pi 4s booted. There's not even an SD card connected for the boot config; it pulls that directly from the USB drive. xD

do you flash firmware for it?

Yep.

```
sudo rpi-eeprom-update -a
```

You might need to install `rpi-eeprom` first.

network/pxe boot when?

for sure the end game